Mats Jirstrand

MCMC-Correction of Score-Based Diffusion Models for Model Composition

Jul 26, 2023

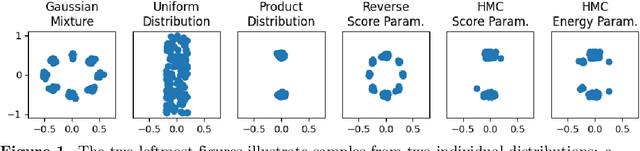

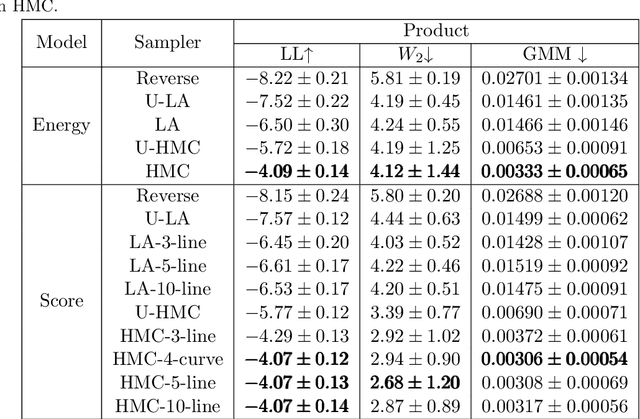

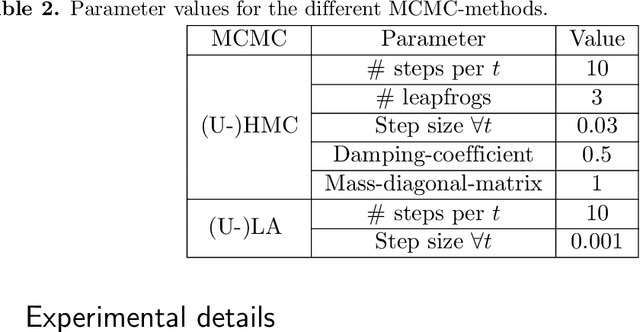

Abstract: Diffusion models can be parameterised in terms of either a score or an energy function. The energy parameterisation has better theoretical properties, mainly that it enables an extended sampling procedure with a Metropolis-Hastings correction step, based on the change in total energy in the proposed samples. However, it seems to yield slightly worse performance, and more importantly, due to the widespread popularity of score-based diffusion, there is limited availability of off-the-shelf pre-trained energy-based models. This limitation undermines the purpose of model composition, which aims to combine pre-trained models to sample from new distributions. We instead propose retaining the score parameterisation and computing the energy-based acceptance probability through line integration of the score function. This allows us to re-use existing diffusion models and still combine the reverse process with various Markov-Chain Monte Carlo (MCMC) methods. We evaluate our method on a 2D experiment and find that it achieves similar or arguably better performance than the energy parameterisation.
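The line-integration idea can be illustrated with a small sketch. It relies on the identity log p(x') - log p(x) = ∫₀¹ s(x + t(x' - x)) · (x' - x) dt, where s is the score. The straight-line path, the trapezoidal rule, the symmetric proposal, and all function names below are assumptions made for illustration; this is not the authors' implementation.

```python
import math


def log_density_difference(score, x, x_new, n_points=16):
    """Approximate log p(x_new) - log p(x) by integrating the score
    along the straight line from x to x_new (trapezoidal rule)."""
    delta = [b - a for a, b in zip(x, x_new)]
    total = 0.0
    for k in range(n_points + 1):
        t = k / n_points
        point = [a + t * d for a, d in zip(x, delta)]
        s = score(point)
        integrand = sum(si * di for si, di in zip(s, delta))
        # End points get half weight in the trapezoidal rule.
        weight = 0.5 if k in (0, n_points) else 1.0
        total += weight * integrand
    return total / n_points


def acceptance_probability(score, x, x_new, n_points=16):
    """Metropolis-Hastings acceptance probability, assuming a symmetric
    proposal so that only the density ratio matters."""
    log_ratio = log_density_difference(score, x, x_new, n_points)
    # Clamp at 0 before exponentiating to avoid overflow.
    return math.exp(min(0.0, log_ratio))


def gaussian_score(x):
    """Score of a standard Gaussian, used only as a toy stand-in for a
    trained score network."""
    return [-xi for xi in x]
```

For the toy Gaussian, the result can be checked against the closed form -(‖x'‖² - ‖x‖²)/2; with a learned score the integral is only approximate, and its accuracy depends on the number of quadrature points.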

S-RASTER: Contraction Clustering for Evolving Data Streams

Nov 21, 2019

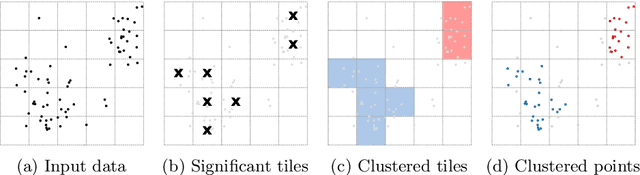

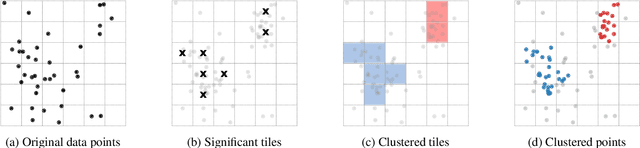

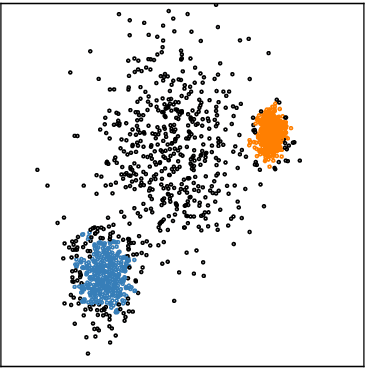

Abstract: Contraction Clustering (RASTER) is a very fast algorithm for density-based clustering, which requires only a single pass. It can process arbitrary amounts of data in linear time and in constant memory, quickly identifying approximate clusters. It also exhibits good scalability in the presence of multiple CPU cores. Yet, RASTER is limited to batch processing. In contrast, S-RASTER is an adaptation of RASTER to the stream processing paradigm that is able to identify clusters in evolving data streams. This algorithm retains the main benefits of its parent algorithm, i.e. single-pass linear time cost and constant memory requirements for each discrete time step in the sliding window. The sliding window is efficiently pruned, and clustering is still performed in linear time. Like RASTER, S-RASTER trades off an often negligible amount of precision for speed. It is therefore very well suited to real-world scenarios where clustering does not happen continually but only periodically. We describe the algorithm, including a discussion of implementation details.
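One way to picture a pruned sliding window over discrete time steps is sketched below. The class name, the per-step tile counters, the square tiles, and the pruning rule are all hypothetical choices for illustration; the abstract does not specify these details, and this is not the S-RASTER implementation.

```python
import math
from collections import Counter, deque


class SlidingTileWindow:
    """Hypothetical helper: keeps per-tile point counts for the most
    recent `window_steps` discrete time steps."""

    def __init__(self, window_steps, tile_size=1.0):
        self.window_steps = window_steps
        self.tile_size = tile_size
        self.steps = deque()     # (time_step, Counter of tiles), oldest first
        self.totals = Counter()  # tile counts summed over the window

    def add(self, time_step, points):
        """Project one batch of 2D points onto tiles, then prune."""
        counts = Counter(
            (math.floor(x / self.tile_size), math.floor(y / self.tile_size))
            for x, y in points
        )
        self.steps.append((time_step, counts))
        self.totals.update(counts)
        self._prune(time_step)

    def _prune(self, now):
        """Drop steps that have fallen out of the window."""
        while self.steps and self.steps[0][0] <= now - self.window_steps:
            _, old = self.steps.popleft()
            self.totals.subtract(old)
        self.totals = +self.totals  # discard tiles whose count reached zero

    def significant_tiles(self, min_points):
        """Tiles holding at least `min_points` points within the window."""
        return {tile for tile, n in self.totals.items() if n >= min_points}
```

Clustering would then run periodically on the significant tiles rather than on every incoming point, which matches the periodic use case the abstract describes.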

Contraction Clustering: A Very Fast Big Data Algorithm for Sequential and Parallel Density-Based Clustering in Linear Time, Constant Memory, and a Single Pass

Jul 08, 2019

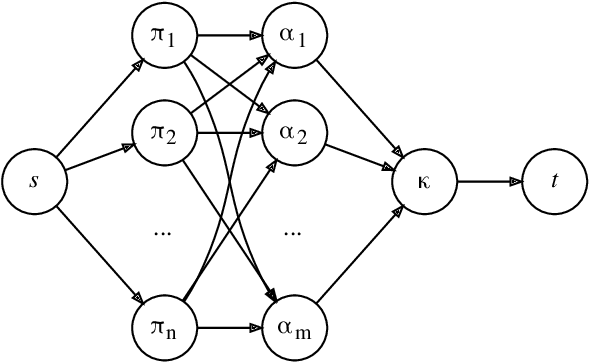

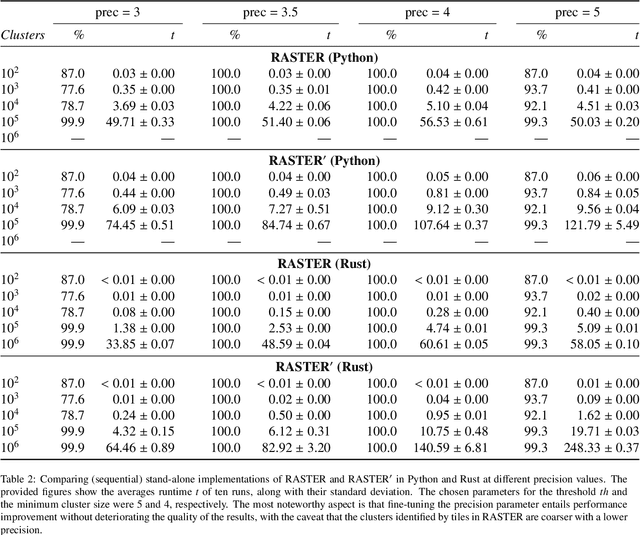

Abstract: Clustering is an essential data mining tool for analyzing and grouping similar objects. In big data applications, however, many clustering algorithms are infeasible due to their high memory requirements and/or unfavorable runtime complexity. In contrast, Contraction Clustering (RASTER) is a single-pass algorithm for identifying density-based clusters with linear time complexity. Due to its favorable runtime and the fact that its memory requirements are constant, this algorithm is highly suitable for big data applications where the amount of data to be processed is huge. It consists of two steps: (1) a contraction step which projects objects onto tiles and (2) an agglomeration step which groups tiles into clusters. This algorithm is extremely fast in both sequential and parallel execution. In single-threaded execution on a contemporary workstation, an implementation in Rust processes a batch of 500 million points with 1 million clusters in less than 50 seconds. The speedup due to parallelization is significant, amounting to a factor of around 4 on an 8-core machine.
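The two steps named in the abstract can be sketched roughly as follows. The square tiles, the `min_points` threshold for keeping a tile, and the 8-neighbourhood used for grouping are assumptions for illustration, as are the function names; this is a simplified sketch, not the authors' Rust implementation.

```python
import math
from collections import Counter


def contract(points, tile_size=1.0, min_points=2):
    """Contraction step: project 2D points onto square tiles and keep
    tiles that hold at least `min_points` points (assumed threshold)."""
    counts = Counter(
        (math.floor(x / tile_size), math.floor(y / tile_size))
        for x, y in points
    )
    return {tile for tile, n in counts.items() if n >= min_points}


def agglomerate(tiles):
    """Agglomeration step: group tiles that touch each other (including
    diagonally) into clusters."""
    remaining = set(tiles)
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster = {seed}
        frontier = [seed]
        while frontier:
            cx, cy = frontier.pop()
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbour = (cx + dx, cy + dy)
                    if neighbour in remaining:
                        remaining.remove(neighbour)
                        cluster.add(neighbour)
                        frontier.append(neighbour)
        clusters.append(cluster)
    return clusters


points = [
    (0.1, 0.2), (0.3, 0.4),                   # tile (0, 0)
    (1.1, 0.5), (1.2, 0.6),                   # tile (1, 0), adjacent to (0, 0)
    (10.0, 10.0), (10.5, 10.5), (10.2, 10.9), # tile (10, 10)
    (50.0, 50.0),                             # lone point, tile is discarded
]
clusters = agglomerate(contract(points))
```

Each point is touched once during contraction, and the agglomeration works on tiles rather than points, which is where the speed and the loss of precision both come from.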
