Mathieu Barthet

The GigaMIDI Dataset with Features for Expressive Music Performance Detection

Feb 24, 2025

Abstract:The Musical Instrument Digital Interface (MIDI), introduced in 1983, revolutionized music production by allowing computers and instruments to communicate efficiently. MIDI files encode musical instructions compactly, facilitating convenient music sharing. They benefit Music Information Retrieval (MIR), aiding in research on music understanding, computational musicology, and generative music. The GigaMIDI dataset contains over 1.4 million unique MIDI files, encompassing 1.8 billion MIDI note events and over 5.3 million MIDI tracks. GigaMIDI is currently the largest collection of symbolic music in MIDI format available for research purposes under fair dealing. Distinguishing between non-expressive and expressive MIDI tracks is challenging, as MIDI files do not inherently make this distinction. To address this issue, we introduce a set of innovative heuristics for detecting expressive music performance. These include the Distinctive Note Velocity Ratio (DNVR) heuristic, which analyzes MIDI note velocity; the Distinctive Note Onset Deviation Ratio (DNODR) heuristic, which examines deviations in note onset times; and the Note Onset Median Metric Level (NOMML) heuristic, which evaluates onset positions relative to metric levels. Our evaluation demonstrates these heuristics effectively differentiate between non-expressive and expressive MIDI tracks. Furthermore, after evaluation, we create the most substantial expressive MIDI dataset, employing our heuristic, NOMML. This curated iteration of GigaMIDI encompasses expressively-performed instrument tracks detected by NOMML, containing all General MIDI instruments, constituting 31% of the GigaMIDI dataset, totalling 1,655,649 tracks.
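As an illustration of the velocity-based heuristic named in the abstract above, here is a minimal sketch. The abstract does not give the formula, so the sketch assumes DNVR is the number of distinct note velocities in a track divided by the 127 non-zero MIDI velocity levels, expressed as a percentage. The function name and the example tracks are hypothetical.

```python
def distinctive_note_velocity_ratio(velocities, max_levels=127):
    """Percentage of the available velocity levels that a track uses.

    Assumption: DNVR = 100 * (distinct velocities) / 127. This is a
    guess at the definition, not a formula taken from the abstract.
    """
    # Keep only valid note-on velocities (1..127) and count distinct values.
    distinct = {v for v in velocities if 1 <= v <= max_levels}
    return 100.0 * len(distinct) / max_levels


# A track entered with one fixed velocity versus one with varied dynamics.
fixed_track = [64] * 10
varied_track = list(range(40, 100))

print(distinctive_note_velocity_ratio(fixed_track))
print(distinctive_note_velocity_ratio(varied_track))
```

Under this assumption, a track with a single fixed velocity scores below 1%, while a track with many different velocities scores much higher, which is the contrast the heuristic is meant to capture.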

Between the AI and Me: Analysing Listeners' Perspectives on AI- and Human-Composed Progressive Metal Music

Jul 31, 2024

Abstract:Generative AI models have recently blossomed, significantly impacting artistic and musical traditions. Research investigating how humans interact with and deem these models is therefore crucial. Through a listening and reflection study, we explore participants' perspectives on AI- vs human-generated progressive metal, in symbolic format, using rock music as a control group. AI-generated examples were produced by ProgGP, a Transformer-based model. We propose a mixed methods approach to assess the effects of generation type (human vs. AI), genre (progressive metal vs. rock), and curation process (random vs. cherry-picked). This combines quantitative feedback on genre congruence, preference, creativity, consistency, playability, humanness, and repeatability, and qualitative feedback to provide insights into listeners' experiences. A total of 32 progressive metal fans completed the study. Our findings validate the use of fine-tuning to achieve genre-specific specialization in AI music generation, as listeners could distinguish between AI-generated rock and progressive metal. Despite some AI-generated excerpts receiving similar ratings to human music, listeners exhibited a preference for human compositions. Thematic analysis identified key features for genre and AI vs. human distinctions. Finally, we consider the ethical implications of our work in promoting musical data diversity within MIR research by focusing on an under-explored genre.

MoodLoopGP: Generating Emotion-Conditioned Loop Tablature Music with Multi-Granular Features

Jan 25, 2024

Abstract:Loopable music generation systems enable diverse applications, but they often lack controllability and customization capabilities. We argue that enhancing controllability can enrich these models, with emotional expression being a crucial aspect for both creators and listeners. Hence, building upon LooperGP, a loopable tablature generation model, this paper explores endowing systems with control over conveyed emotions. To enable such conditional generation, we propose integrating musical knowledge by utilizing multi-granular semantic and musical features during model training and inference. Specifically, we incorporate song-level features (Emotion Labels, Tempo, and Mode) and bar-level features (Tonal Tension) together to guide emotional expression. Through algorithmic and human evaluations, we demonstrate the approach's effectiveness in producing music conveying two contrasting target emotions, happiness and sadness. An ablation study is also conducted to clarify the contributing factors behind our approach's results.

Real-time Percussive Technique Recognition and Embedding Learning for the Acoustic Guitar

Jul 13, 2023

Abstract:Real-time music information retrieval (RT-MIR) has much potential to augment the capabilities of traditional acoustic instruments. We develop RT-MIR techniques aimed at augmenting percussive fingerstyle, which blends acoustic guitar playing with guitar body percussion. We formulate several design objectives for RT-MIR systems for augmented instrument performance: (i) causal constraint, (ii) perceptually negligible action-to-sound latency, (iii) control intimacy support, (iv) synthesis control support. We present and evaluate real-time guitar body percussion recognition and embedding learning techniques based on convolutional neural networks (CNNs) and CNNs jointly trained with variational autoencoders (VAEs). We introduce a taxonomy of guitar body percussion based on hand part and location. We follow a cross-dataset evaluation approach by collecting three datasets labelled according to the taxonomy. The embedding quality of the models is assessed using KL-Divergence across distributions corresponding to different taxonomic classes. Results indicate that the networks are strong classifiers especially in a simplified 2-class recognition task, and the VAEs yield improved class separation compared to CNNs as evidenced by increased KL-Divergence across distributions. We argue that the VAE embedding quality could support control intimacy and rich interaction when the latent space's parameters are used to control an external synthesis engine. Further design challenges around generalisation to different datasets have been identified.
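The KL-divergence comparison mentioned in the abstract above can be illustrated with a minimal sketch. It assumes that each class's embeddings are summarised by a diagonal Gaussian (a per-dimension mean and variance), which the abstract does not state. The function name and the example values are hypothetical.

```python
import math


def kl_diag_gaussians(mu_p, var_p, mu_q, var_q):
    """KL(P || Q) for two diagonal Gaussians, summed over dimensions.

    Assumption: class distributions in the latent space are modelled as
    diagonal Gaussians; the abstract does not specify the model used.
    """
    total = 0.0
    for mp, vp, mq, vq in zip(mu_p, var_p, mu_q, var_q):
        # Closed form for one dimension of KL(N(mp, vp) || N(mq, vq)).
        total += 0.5 * (math.log(vq / vp) + (vp + (mp - mq) ** 2) / vq - 1.0)
    return total


# Two classes with equal spread: the divergence grows as their means move apart.
print(kl_diag_gaussians([0.0, 0.0], [1.0, 1.0], [1.0, 0.0], [1.0, 1.0]))
print(kl_diag_gaussians([0.0, 0.0], [1.0, 1.0], [3.0, 0.0], [1.0, 1.0]))
```

A larger divergence between the distributions of two classes indicates that the embedding separates them more clearly.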

Combining Vision and EMG-Based Hand Tracking for Extended Reality Musical Instruments

Jul 13, 2023

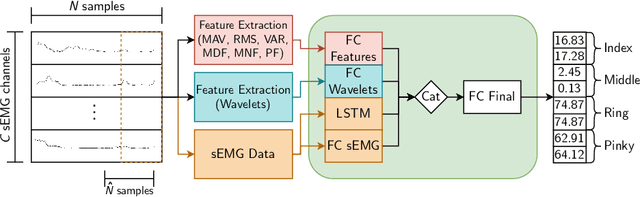

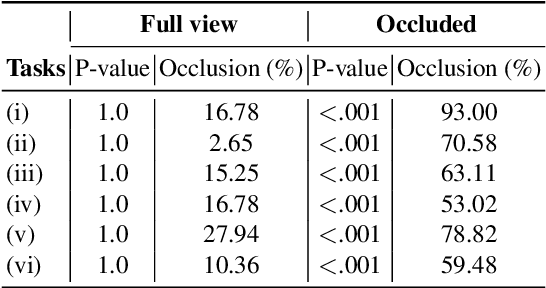

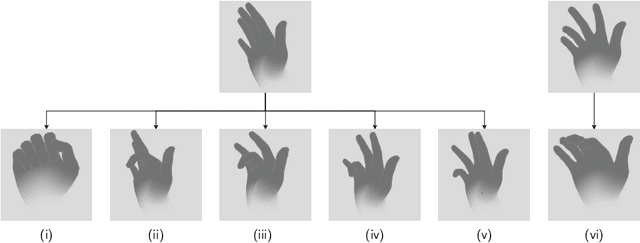

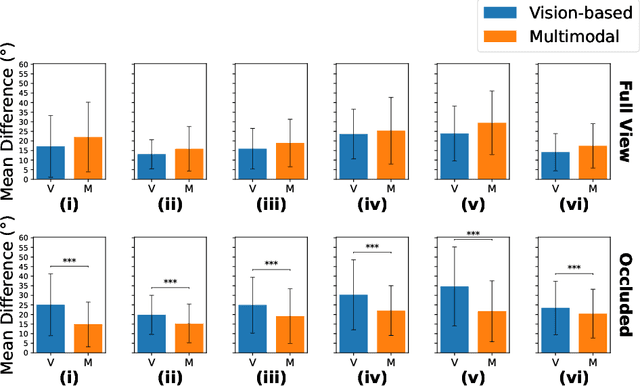

Abstract:Hand tracking is a critical component of natural user interactions in extended reality (XR) environments, including extended reality musical instruments (XRMIs). However, self-occlusion remains a significant challenge for vision-based hand tracking systems, leading to inaccurate results and degraded user experiences. In this paper, we propose a multimodal hand tracking system that combines vision-based hand tracking with surface electromyography (sEMG) data for finger joint angle estimation. We validate the effectiveness of our system through a series of hand pose tasks designed to cover a wide range of gestures, including those prone to self-occlusion. By comparing the performance of our multimodal system to a baseline vision-based tracking method, we demonstrate that our multimodal approach significantly improves tracking accuracy for several finger joints prone to self-occlusion. These findings suggest that our system has the potential to enhance XR experiences by providing more accurate and robust hand tracking, even in the presence of self-occlusion.

ShredGP: Guitarist Style-Conditioned Tablature Generation

Jul 11, 2023

Abstract:GuitarPro format tablatures are a type of digital music notation that encapsulates information about guitar playing techniques and fingerings. We introduce ShredGP, a GuitarPro tablature generative Transformer-based model conditioned to imitate the style of four distinct iconic electric guitarists. In order to assess the idiosyncrasies of each guitar player, we adopt a computational musicology methodology by analysing features computed from the tokens yielded by the DadaGP encoding scheme. Statistical analyses of the features evidence significant differences between the four guitarists. We trained two variants of the ShredGP model, one using a multi-instrument corpus, the other using solo guitar data. We present a BERT-based model for guitar player classification and use it to evaluate the generated examples. Overall, results from the classifier show that ShredGP is able to generate content congruent with the style of the targeted guitar player. Finally, we reflect on prospective applications for ShredGP for human-AI music interaction.

ProgGP: From GuitarPro Tablature Neural Generation To Progressive Metal Production

Jul 11, 2023

Abstract:Recent work in the field of symbolic music generation has shown value in using a tokenization based on the GuitarPro format, a symbolic representation supporting guitar expressive attributes, as an input and output representation. We extend this work by fine-tuning a pre-trained Transformer model on ProgGP, a custom dataset of 173 progressive metal songs, for the purposes of creating compositions from that genre through a human-AI partnership. Our model is able to generate multiple guitar, bass guitar, drums, piano and orchestral parts. We examine the validity of the generated music using a mixed methods approach by combining quantitative analyses following a computational musicology paradigm and qualitative analyses following a practice-based research paradigm. Finally, we demonstrate the value of the model by using it as a tool to create a progressive metal song, fully produced and mixed by a human metal producer based on AI-generated music.

LooperGP: A Loopable Sequence Model for Live Coding Performance using GuitarPro Tablature

Mar 03, 2023

Abstract:Despite their impressive offline results, deep learning models for symbolic music generation are not widely used in live performances due to a deficit of musically meaningful control parameters and a lack of structured musical form in their outputs. To address these issues we introduce LooperGP, a method for steering a Transformer-XL model towards generating loopable musical phrases of a specified number of bars and time signature, enabling a tool for live coding performances. We show that by training LooperGP on a dataset of 93,681 musical loops extracted from the DadaGP dataset, we are able to steer its generative output towards generating 3x as many loopable phrases as our baseline. In a subjective listening test conducted by 31 participants, LooperGP loops achieved positive median ratings in originality, musical coherence and loop smoothness, demonstrating its potential as a performance tool.

* The Version of Record of this contribution is published in Proceedings of EvoMUSART: International Conference on Computational Intelligence in Music, Sound, Art and Design (Part of EvoStar) 2023

GTR-CTRL: Instrument and Genre Conditioning for Guitar-Focused Music Generation with Transformers

Feb 10, 2023

Abstract:Recently, symbolic music generation with deep learning techniques has witnessed steady improvements. Most works on this topic focus on MIDI representations, but less attention has been paid to symbolic music generation using guitar tablatures (tabs) which can be used to encode multiple instruments. Tabs include information on expressive techniques and fingerings for fretted string instruments in addition to rhythm and pitch. In this work, we use the DadaGP dataset for guitar tab music generation, a corpus of over 26k songs in GuitarPro and token formats. We introduce methods to condition a Transformer-XL deep learning model to generate guitar tabs (GTR-CTRL) based on desired instrumentation (inst-CTRL) and genre (genre-CTRL). Special control tokens are appended at the beginning of each song in the training corpus. We assess the performance of the model with and without conditioning. We propose instrument presence metrics to assess the inst-CTRL model's response to a given instrumentation prompt. We trained a BERT model for downstream genre classification and used it to assess the results obtained with the genre-CTRL model. Statistical analyses evidence significant differences between the conditioned and unconditioned models. Overall, results indicate that the GTR-CTRL methods provide more flexibility and control for guitar-focused symbolic music generation than an unconditioned model.

* This preprint is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0). The Version of Record of this contribution is published in Proceedings of EvoMUSART: International Conference on Computational Intelligence in Music, Sound, Art and Design (Part of EvoStar) 2023
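The conditioning step described in the GTR-CTRL abstract above (placing control tokens at the start of each song's token sequence) can be sketched as follows. The token spellings (`inst:...`, `genre:...`), the function name, and the example sequence are hypothetical; the abstract does not specify the actual token vocabulary.

```python
def add_control_tokens(song_tokens, instruments=(), genre=None):
    """Return a new token list with control tokens placed before the song.

    Assumption: control tokens are plain strings; the "inst:" and "genre:"
    prefixes are invented for illustration only.
    """
    prefix = [f"inst:{name}" for name in instruments]
    if genre is not None:
        prefix.append(f"genre:{genre}")
    # The original song tokens follow the control prefix unchanged.
    return prefix + list(song_tokens)


song = ["start", "note_a", "note_b", "end"]  # placeholder song tokens
print(add_control_tokens(song, instruments=["guitar", "drums"]))
print(add_control_tokens(song, genre="metal"))
```

At generation time the same prefix would serve as the prompt, so that the model continues from the requested instrumentation or genre.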

DadaGP: A Dataset of Tokenized GuitarPro Songs for Sequence Models

Jul 30, 2021

Abstract:Originating in the Renaissance and burgeoning in the digital era, tablatures are a commonly used music notation system which provides explicit representations of instrument fingerings rather than pitches. GuitarPro has established itself as a widely used tablature format and software enabling musicians to edit and share songs for musical practice, learning, and composition. In this work, we present DadaGP, a new symbolic music dataset comprising 26,181 song scores in the GuitarPro format covering 739 musical genres, along with an accompanying tokenized format well-suited for generative sequence models such as the Transformer. The tokenized format is inspired by event-based MIDI encodings, often used in symbolic music generation models. The dataset is released with an encoder/decoder which converts GuitarPro files to tokens and back. We present results of a use case in which DadaGP is used to train a Transformer-based model to generate new songs in GuitarPro format. We discuss other relevant use cases for the dataset (guitar-bass transcription, music style transfer and artist/genre classification) as well as ethical implications. DadaGP opens up the possibility to train GuitarPro score generators, fine-tune models on custom data, create new styles of music, AI-powered songwriting apps, and human-AI improvisation.
