Mateus Maia

GP-BART: a novel Bayesian additive regression trees approach using Gaussian processes

Apr 06, 2022

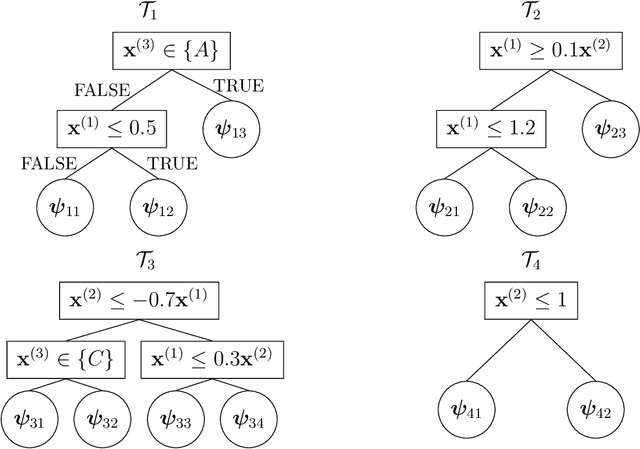

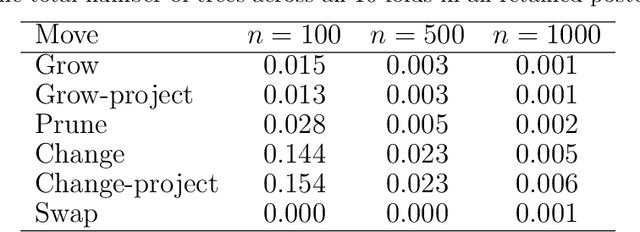

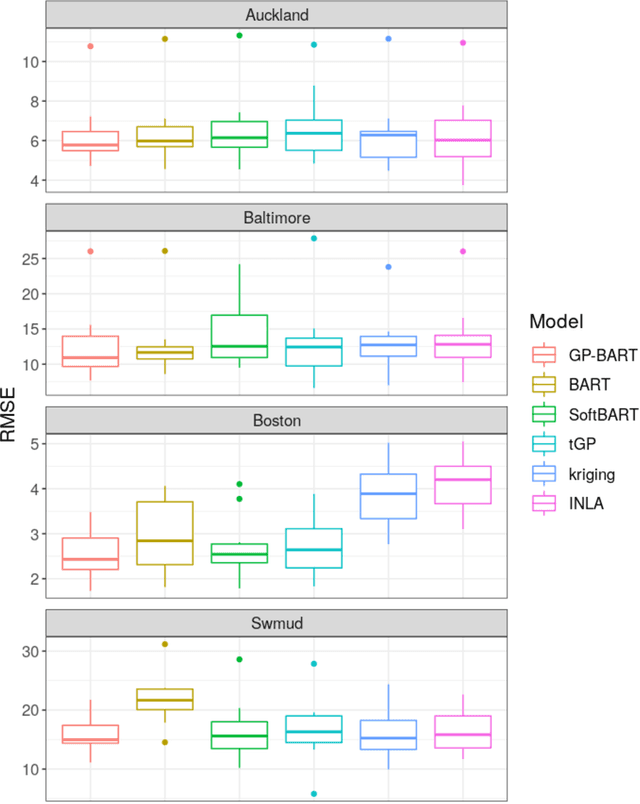

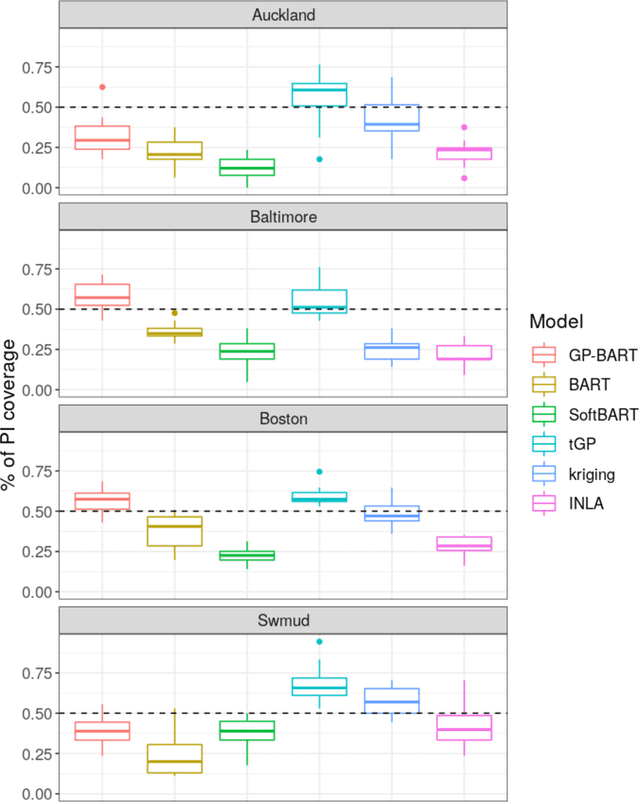

Abstract: The Bayesian additive regression trees (BART) model is an ensemble method extensively and successfully used in regression tasks due to its consistently strong predictive performance and its ability to quantify uncertainty. BART combines "weak" tree models through a set of shrinkage priors, whereby each tree explains a small portion of the variability in the data. However, the lack of smoothness and the absence of a covariance structure over the observations in standard BART can yield poor performance in cases where such assumptions would be necessary. We propose Gaussian processes Bayesian additive regression trees (GP-BART) as an extension of BART which assumes Gaussian process (GP) priors for the predictions of each terminal node among all trees. We illustrate our model on simulated and real data and compare its performance to traditional modelling approaches, outperforming them in many scenarios. An implementation of our method is available in the R package rGPBART at: https://github.com/MateusMaiaDS/gpbart

Random Machines Regression Approach: an ensemble support vector regression model with free kernel choice

Mar 27, 2020

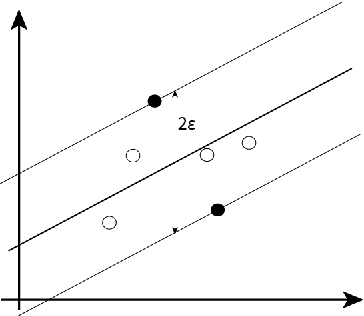

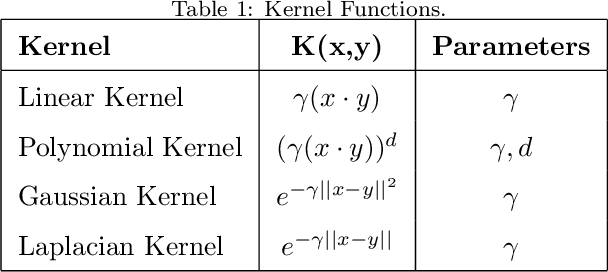

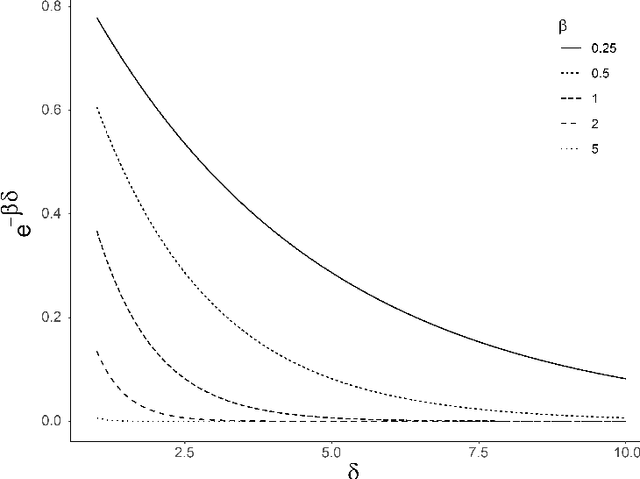

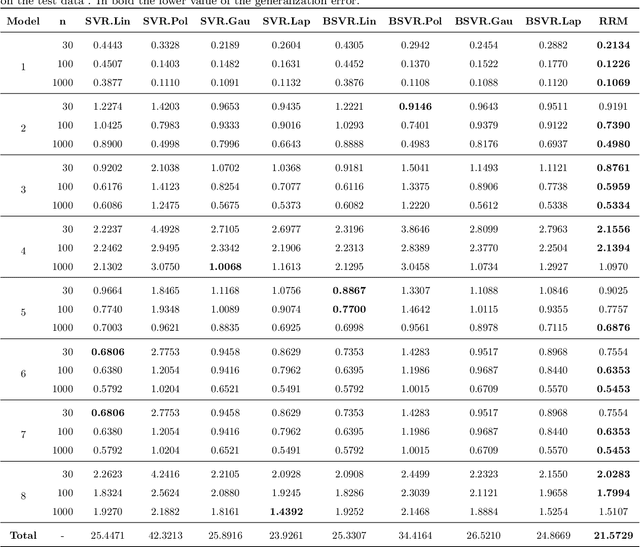

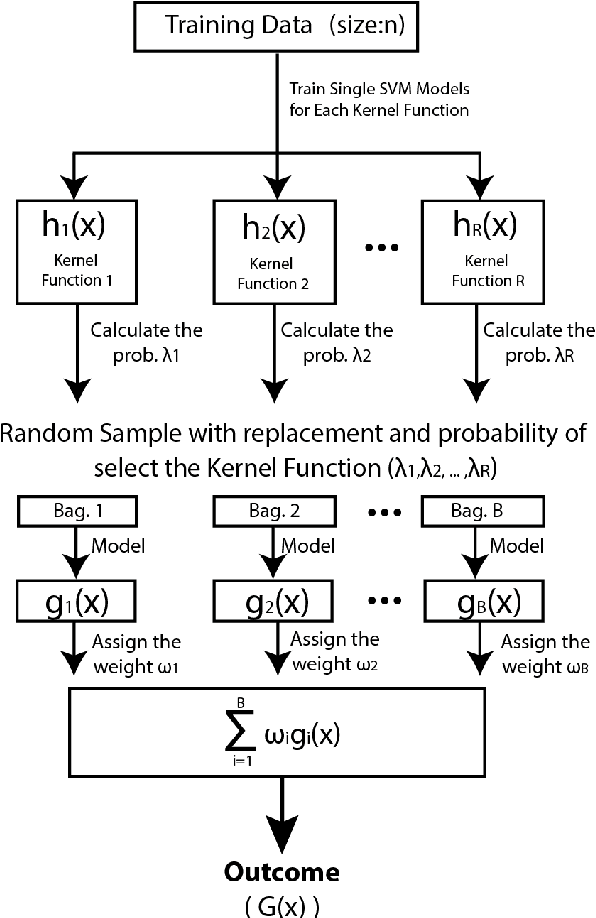

Abstract: Machine learning techniques aim to reduce the generalization error of their predictions. Ensemble methods are a good approach to reducing it, combining several models to achieve greater forecasting capacity. Random Machines have already been shown to be a strong technique, i.e., one with high predictive power, for classification tasks. In this article we propose a procedure for applying the bagged-weighted support vector model to regression problems. Simulation studies were carried out on artificial datasets and on real data benchmarks. The results show that Regression Random Machines perform well, achieving lower generalization error without the need to choose the best kernel function during the tuning process.

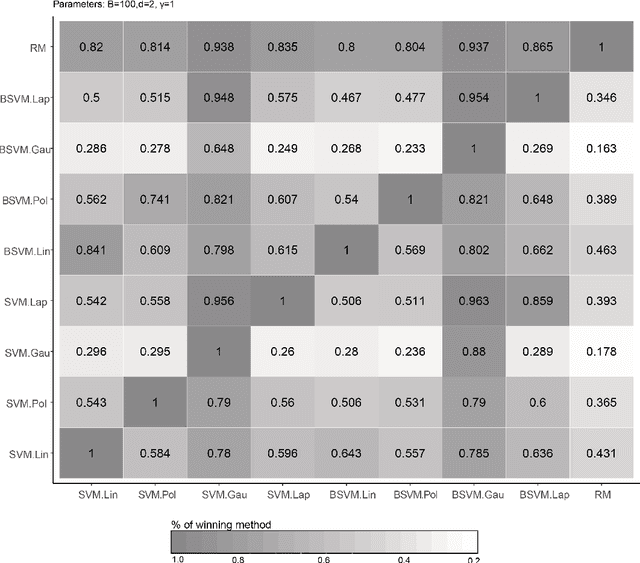

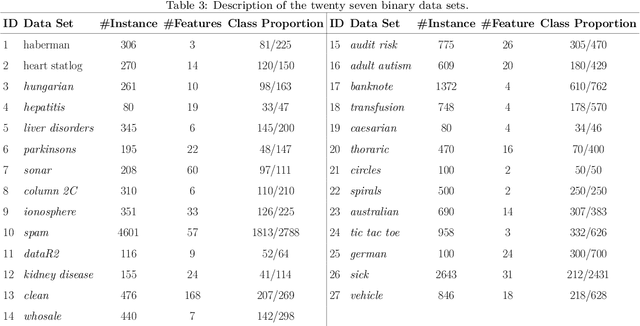

Random Machines: A bagged-weighted support vector model with free kernel choice

Nov 21, 2019

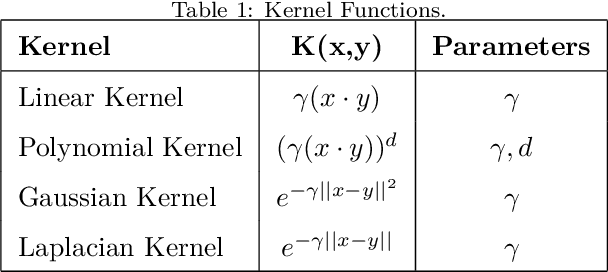

Abstract: Improving statistical learning models to solve classification and regression problems more efficiently remains a goal pursued by the scientific community. The support vector machine is one of the most successful and powerful algorithms for these tasks. However, its performance depends directly on the choice of the kernel function and its hyperparameters, and the traditional way of choosing the kernel and tuning it can be computationally expensive. In this article, we propose a novel framework for kernel function selection called Random Machines. The results show improved accuracy and reduced computational time. The study was performed on simulated data and on 27 real benchmarking datasets.
