Massimo Sartori

Neuromuscular Modeling for Locomotion with Wearable Assistive Robots -- A primer

Jul 19, 2024

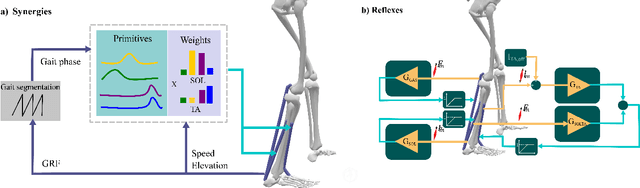

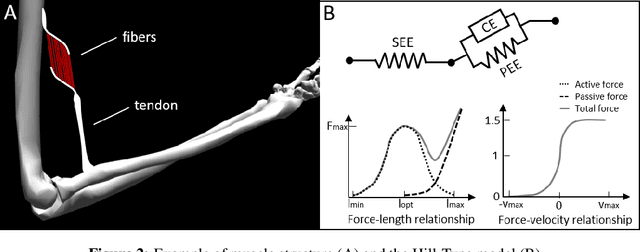

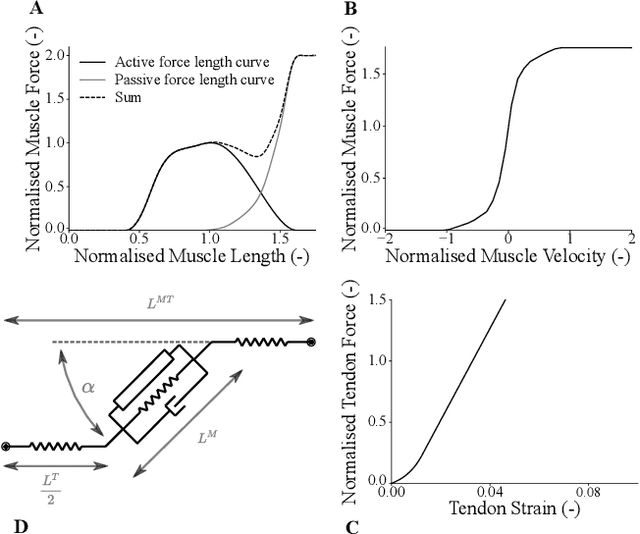

Abstract: Wearable assistive robots (WR) for the lower extremity are extensively documented in the literature. Various interfaces have been designed to control these devices during gait and balance activities. However, achieving seamless and intuitive control requires accurate modeling of the human neuromusculoskeletal (NMSK) system. Such modeling enables WR to anticipate user intentions and determine the necessary joint assistance. Although controllers that interface with the NMSK system exist, techniques that are robust and generalize across tasks remain scarce. Designing these novel controllers requires the combined expertise of neurophysiologists, who understand the physiology of movement initiation and generation, and biomechatronic engineers, who design and control devices that assist movement. This paper aims to bridge the gaps between these fields by presenting a primer on key concepts and the current state of the science in each area. We present three main sections: the neuromechanics of locomotion, neuromechanical models of movement, and existing neuromechanical controllers used in WR. Through these sections, we provide a comprehensive overview of seminal studies in the field, facilitating collaboration between neurophysiologists and biomechatronic engineers for future advances in wearable robotics for locomotion.

Adaptive Assistance with an Active and Soft Back-Support Exosuit to Unknown External Loads via Model-Based Estimates of Internal Lumbosacral Moments

Nov 03, 2023

Abstract: State-of-the-art controllers for back exoskeletons largely rely on body kinematics. This results in control strategies that cannot provide adaptive support under unknown external loads. We developed a neuromechanical model-based controller (NMBC) for a soft back exosuit, wherein assistive forces were proportional to the active component of lumbosacral joint moments, derived from real-time electromyography-driven models. The exosuit provided adaptive assistance forces with no a priori information on the external loading conditions. Across 10 participants, who stoop-lifted 5 kg and 15 kg boxes, our NMBC was compared to a non-adaptive virtual spring-based control (VSBC), in which exosuit forces were proportional to trunk inclination. Peak cable assistive forces were modulated across weight conditions for NMBC (5 kg: 2.13 N/kg; 15 kg: 2.82 N/kg) but not for VSBC (5 kg: 1.92 N/kg; 15 kg: 2.00 N/kg). The proposed NMBC strategy resulted in a larger reduction of cumulative compression forces for the 5 kg (NMBC: 18.2%; VSBC: 10.7%) and 15 kg conditions (NMBC: 21.3%; VSBC: 10.2%). Our proposed methodology may facilitate the adoption of non-hindering wearable robotics in real-life scenarios.
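The contrast between the two control laws in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the gain values, the force limit, and the names `Gains`, `nmbc_force`, and `vsbc_force` are hypothetical, and the active lumbosacral moment is assumed to arrive from an upstream EMG-driven model that is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Gains:
    """Hypothetical tuning values; none of these come from the paper."""
    nmbc_gain: float   # N of cable force per N·m of active lumbosacral moment
    vsbc_gain: float   # N of cable force per rad of trunk inclination
    max_force: float   # assumed safety limit on cable force, in N


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def nmbc_force(active_moment_nm: float, gains: Gains) -> float:
    """Cable force proportional to the active lumbosacral moment.

    The moment is assumed to come from an EMG-driven model, so it grows
    with the external load even when the posture is unchanged.
    """
    return clamp(gains.nmbc_gain * active_moment_nm, 0.0, gains.max_force)


def vsbc_force(trunk_inclination_rad: float, gains: Gains) -> float:
    """Virtual spring: cable force proportional to trunk inclination only."""
    return clamp(gains.vsbc_gain * trunk_inclination_rad, 0.0, gains.max_force)


if __name__ == "__main__":
    gains = Gains(nmbc_gain=2.0, vsbc_gain=100.0, max_force=300.0)
    # Same posture, two made-up active moments standing in for light and heavy boxes.
    for label, moment in (("light box", 50.0), ("heavy box", 100.0)):
        print(label, nmbc_force(moment, gains), vsbc_force(1.0, gains))
```

In this sketch the NMBC output changes with the estimated moment, while the VSBC output is the same for both boxes because the trunk angle is the same, which mirrors the modulation pattern the abstract reports.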

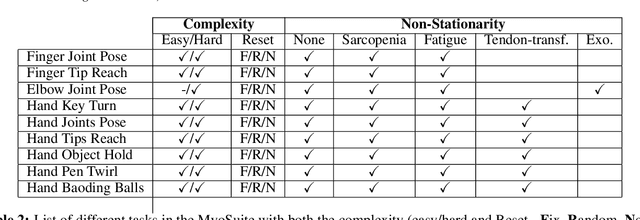

MyoSuite -- A contact-rich simulation suite for musculoskeletal motor control

May 26, 2022

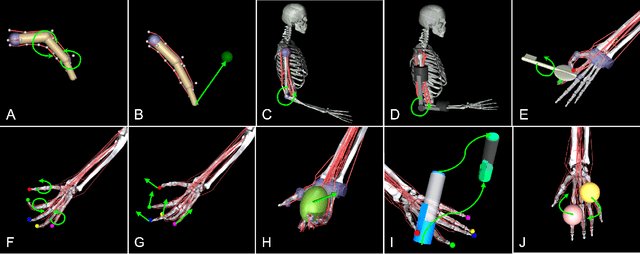

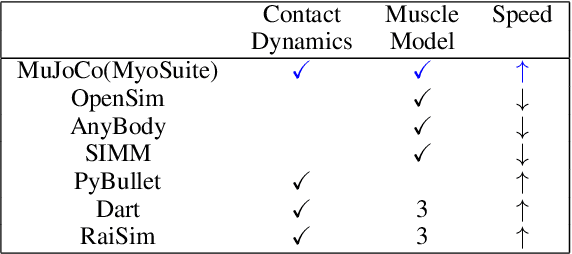

Abstract: Embodied agents in continuous control domains have had limited exposure to tasks that allow exploring the musculoskeletal properties that enable agile and nimble behaviors in biological beings. The sophistication behind neuro-musculoskeletal control can pose new challenges for the motor learning community. At the same time, agents that solve complex neural control problems can have an impact in fields such as neuro-rehabilitation and collaborative robotics. Human biomechanics underlies complex multi-joint, multi-actuator musculoskeletal systems. The sensory-motor system relies on a range of contact-rich sensory and proprioceptive inputs that define and condition the muscle actuation required to exhibit intelligent behaviors in the physical world. Current frameworks for musculoskeletal control do not support the physiological sophistication of musculoskeletal systems along with physical world interaction capabilities. In addition, they are neither embedded in complex and skillful motor tasks nor computationally effective and scalable enough to study large-scale learning paradigms. Here, we present MyoSuite -- a suite of physiologically accurate biomechanical models of the elbow, wrist, and hand, with physical contact capabilities, which allow learning of complex and skillful contact-rich real-world tasks. We provide diverse motor-control challenges: from simple postural control to skilled hand-object interactions such as turning a key, twirling a pen, rotating two balls in one hand, etc. By supporting physiological alterations in musculoskeletal geometry (tendon transfer), assistive devices (exoskeleton assistance), and muscle contraction dynamics (muscle fatigue, sarcopenia), we present real-life tasks with temporal changes, thereby exposing realistic non-stationary conditions in our tasks that most continuous control benchmarks lack.

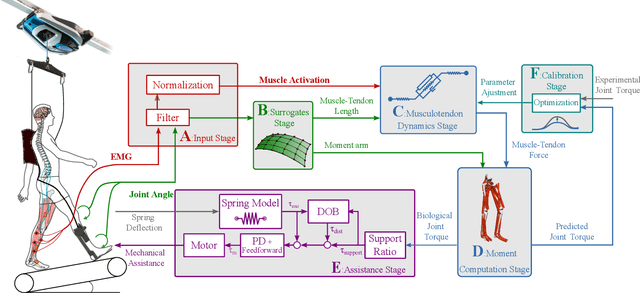

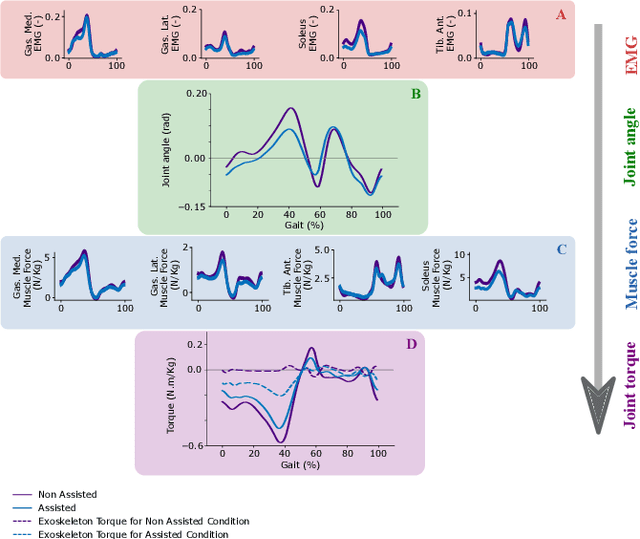

Neuromechanical model-based control of bi-lateral ankle exoskeletons: biological joint torque and electromyogram reduction across walking conditions

Aug 02, 2021

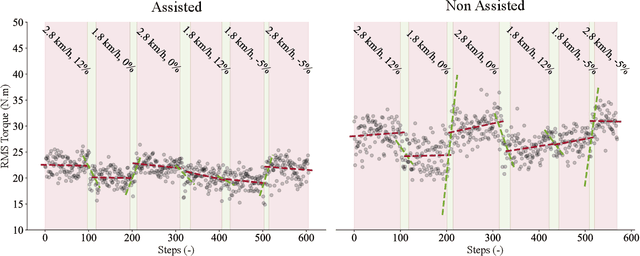

Abstract: To enable the broad adoption of wearable robotic exoskeletons in medical and industrial settings, it is crucial that they can effectively support large repertoires of movements. We propose a new human-machine interface to drive bilateral ankle exoskeletons during a range of 'unseen' walking conditions that were not used for establishing the control interface. The proposed approach uses person-specific neuromechanical models of the human body to estimate biological ankle torques in real time from electromyograms (EMGs) and joint angles. A low-level controller based on a disturbance observer translates biological torque estimates into exoskeleton commands. We call this 'neuromechanical model-based control' (NMBC). NMBC enabled five individuals to voluntarily control exoskeletons across two walking speeds performed at three ground elevations with no need for predefined torque profiles, a priori chosen neuromuscular reflex rules, or state machines, as is common in the literature. Furthermore, a single-subject case study was carried out on a dexterous moonwalk task, showing a reduction in muscular effort. NMBC reduced biological ankle torques as well as the EMGs of eight ankle muscles both within (22% for the torque; 13% for the EMG) and between walking conditions (22% for the torque; 13% for the EMG) when compared to non-assisted conditions. Torque and EMG reduction in novel walking conditions indicated that the exoskeleton operated symbiotically as an exomuscle controlled by the operator's neuromuscular system. This will open new avenues for systematic adoption of wearable robots in out-of-the-lab medical and occupational settings.
