Huawei Wang

A real-time full-chain wearable sensor-based musculoskeletal simulation: an OpenSim-ROS Integration

Jul 26, 2025

Abstract: Musculoskeletal modeling and simulations enable the accurate description and analysis of the movement of biological systems, with applications such as rehabilitation assessment and prosthesis and exoskeleton design. However, the widespread usage of these techniques is limited by costly sensors, laboratory-based setups, computationally demanding processes, and the use of diverse software tools that often lack seamless integration. In this work, we address these limitations by proposing an integrated, real-time framework for musculoskeletal modeling and simulations that leverages OpenSimRT, the Robot Operating System (ROS), and wearable sensors. As a proof-of-concept, we demonstrate that this framework describes the inverse kinematics of both the lower and upper body reasonably well using either inertial measurement units or fiducial markers. Additionally, we show that, when combined with pressure insoles, it can effectively estimate inverse dynamics of the ankle joint and muscle activations of major lower limb muscles during daily activities, including walking, squatting, sit-to-stand, and stand-to-sit. We believe this work lays the groundwork for further studies with more complex real-time and wearable sensor-based human movement analysis systems and holds potential to advance technologies in rehabilitation, robotics, and exoskeleton design.

Neuromuscular Modeling for Locomotion with Wearable Assistive Robots -- A primer

Jul 19, 2024

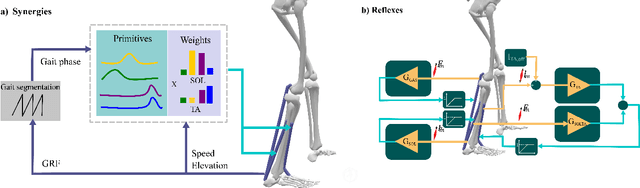

Abstract: Wearable assistive robots (WR) for the lower extremity are extensively documented in the literature. Various interfaces have been designed to control these devices during gait and balance activities. However, achieving seamless and intuitive control requires accurate modeling of the human neuromusculoskeletal (NMSK) system. Such modeling enables WR to anticipate user intentions and determine the necessary joint assistance. Despite the existence of controllers interfacing with the NMSK system, robust techniques that generalize across different tasks remain scarce. Designing these novel controllers necessitates the combined expertise of neurophysiologists, who understand the physiology of movement initiation and generation, and biomechatronic engineers, who design and control devices that assist movement. This paper aims to bridge the gaps between these fields by presenting a primer on key concepts and the current state of the science in each area. We present three main sections: the neuromechanics of locomotion, neuromechanical models of movement, and existing neuromechanical controllers used in WR. Through these sections, we provide a comprehensive overview of seminal studies in the field, facilitating collaboration between neurophysiologists and biomechatronic engineers for future advances in wearable robotics for locomotion.

MyoSuite -- A contact-rich simulation suite for musculoskeletal motor control

May 26, 2022

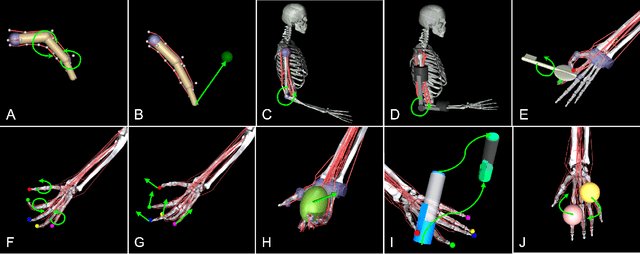

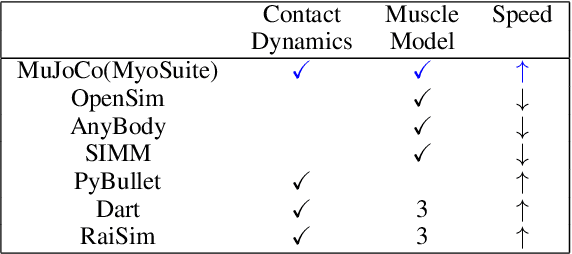

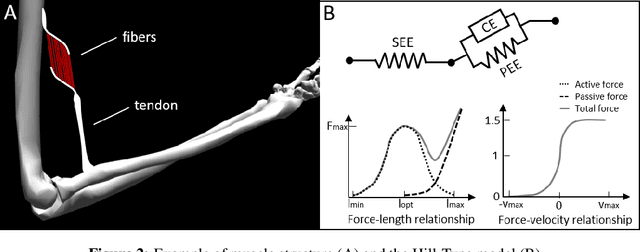

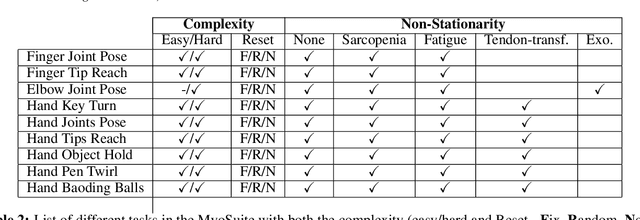

Abstract: Embodied agents in continuous control domains have had limited exposure to tasks that allow them to explore the musculoskeletal properties that enable agile and nimble behaviors in biological beings. The sophistication behind neuro-musculoskeletal control can pose new challenges for the motor learning community. At the same time, agents solving complex neural control problems could have impact in fields such as neuro-rehabilitation and collaborative robotics. Human biomechanics underlies complex multi-joint-multi-actuator musculoskeletal systems. The sensory-motor system relies on a range of contact-rich sensory and proprioceptive inputs that define and condition the muscle actuation required to exhibit intelligent behaviors in the physical world. Current frameworks for musculoskeletal control do not support the physiological sophistication of musculoskeletal systems along with physical world interaction capabilities. In addition, they are neither embedded in complex and skillful motor tasks nor computationally effective and scalable enough to study large-scale learning paradigms. Here, we present MyoSuite -- a suite of physiologically accurate biomechanical models of the elbow, wrist, and hand, with physical contact capabilities, which allow learning of complex and skillful contact-rich real-world tasks. We provide diverse motor-control challenges: from simple postural control to skilled hand-object interactions such as turning a key, twirling a pen, rotating two balls in one hand, etc. By supporting physiological alterations in musculoskeletal geometry (tendon transfer), assistive devices (exoskeleton assistance), and muscle contraction dynamics (muscle fatigue, sarcopenia), we present real-life tasks with temporal changes, thereby exposing realistic non-stationary conditions in our tasks, which most continuous control benchmarks lack.
