Marvin Manalastas

Joint Communication and Sensing for 6G -- A Cross-Layer Perspective

Feb 14, 2024

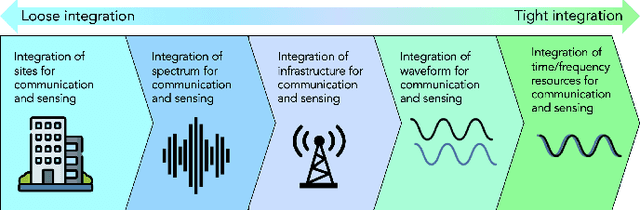

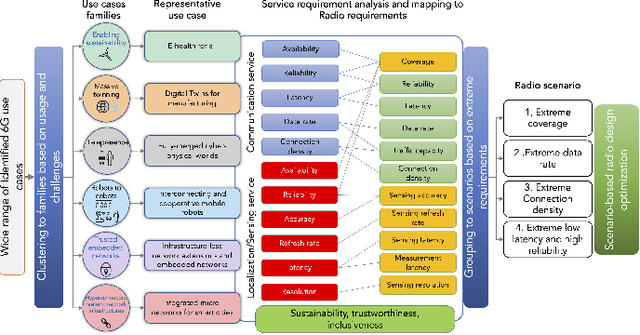

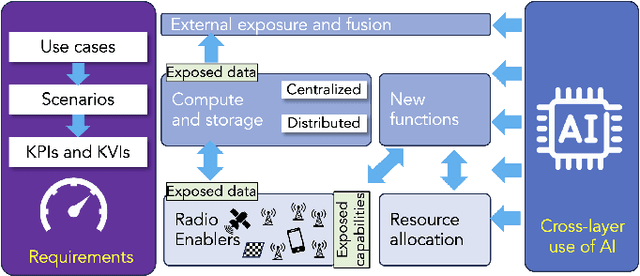

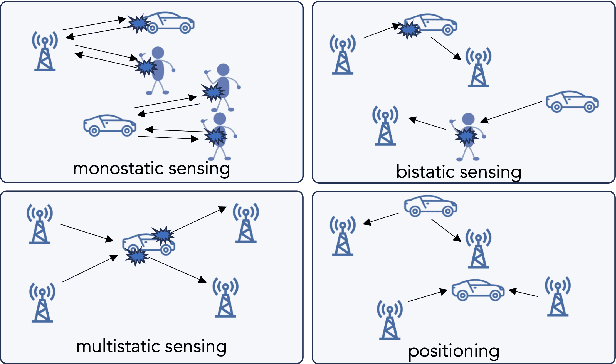

Abstract: As 6G emerges, cellular systems are envisioned to integrate sensing with communication capabilities, leading to joint communication and sensing (JCAS). This paper presents a comprehensive cross-layer overview of the Hexa-X-II project's work on JCAS, aligning 6G use cases with service requirements and pinpointing distinct scenarios that bridge communication and sensing. It examines these scenarios through the lens of the physical and networking layers, covering models, deployments, resource allocation, storage challenges, computational constraints, interfaces, and innovative functions.

An AI-Enabled Framework to Defend Ingenious MDT-based Attacks on the Emerging Zero Touch Cellular Networks

Aug 05, 2023

Abstract: Deep automation provided by self-organizing network (SON) features and their emerging variants, such as zero touch automation solutions, is a key enabler for increasingly dense wireless networks and the pervasive Internet of Things (IoT). To realize their objectives, most automation functionalities rely on Minimization of Drive Test (MDT) reports. The MDT reports are used to generate inferences about network state and performance, and thus to dynamically change network parameters accordingly. However, the collection of MDT reports from commodity user devices, particularly low-cost IoT devices, makes them a vulnerable entry point for launching an adversarial attack on emerging deeply automated wireless networks. This adds a new dimension to the security threats in IoT and cellular networks. Existing literature on IoT, SON, or zero touch automation does not address this important problem. In this paper, we investigate an impactful, first-of-its-kind adversarial attack that can be launched by exploiting malicious MDT reports from compromised user equipment (UE). We highlight the detrimental repercussions of this attack on the performance of common network automation functions. We also propose a novel Malicious MDT Reports Identification Framework (MRIF) as a countermeasure that uses machine learning to detect and eliminate the malicious MDT reports, and we verify it through a use case. The defense mechanism can thus provide resilience and robustness for zero touch automation SON engines against adversarial MDT attacks.

Machine Learning Aided Holistic Handover Optimization for Emerging Networks

Feb 06, 2022

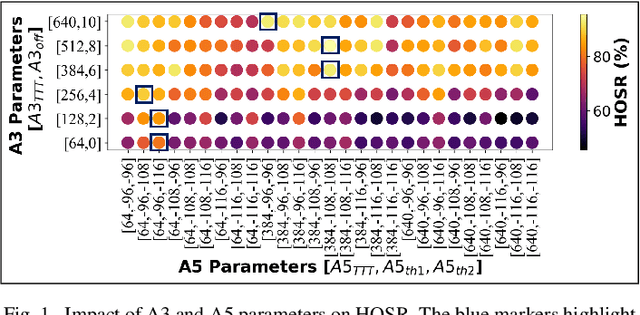

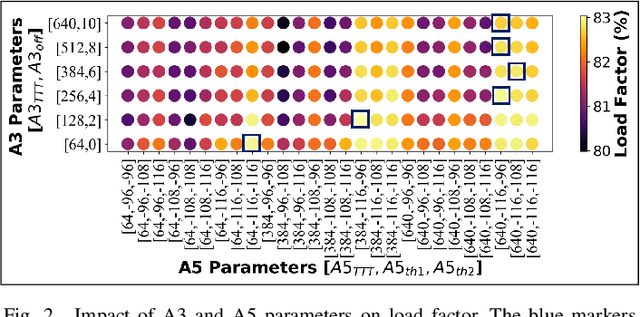

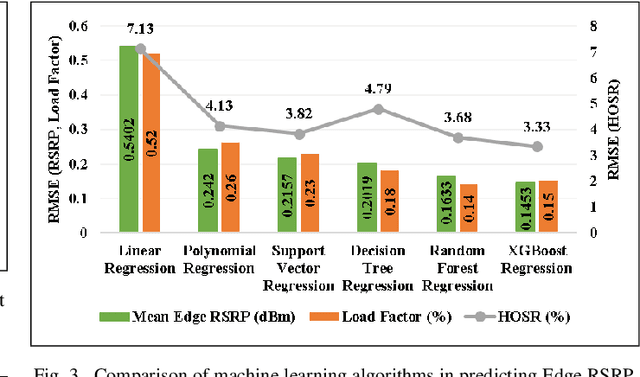

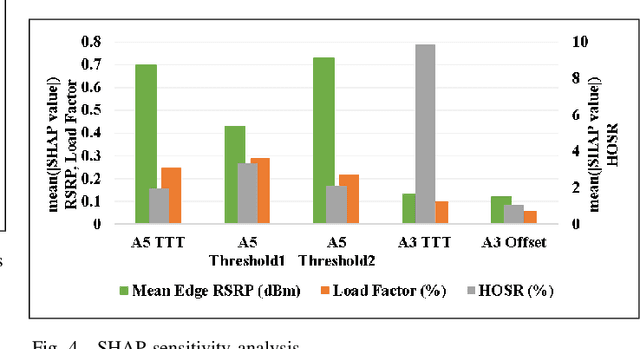

Abstract: In the wake of network densification and multi-band operation in emerging cellular networks, mobility and handover management is becoming a major bottleneck. The problem is further aggravated by the fact that holistic mobility management solutions for different types of handovers, namely inter-frequency and intra-frequency handovers, remain scarce. This paper presents a first mobility management solution that concurrently optimizes inter-frequency related A5 parameters and intra-frequency related A3 parameters. We analyze and optimize five parameters, namely A5 time to trigger (TTT), A5-threshold1, A5-threshold2, A3-TTT, and A3-offset, to jointly maximize three critical key performance indicators (KPIs): edge user reference signal received power (RSRP), handover success rate (HOSR), and load between frequency bands. In the absence of tractable analytical models due to system-level complexity, we leverage machine learning to quantify the KPIs as a function of the mobility parameters. An XGBoost-based model has the best performance for edge RSRP and HOSR, while random forest outperforms the others for load prediction. An analysis of the mobility parameters provides several insights: 1) there exists a strong coupling between A3 and A5 parameters; 2) an optimal set of parameters exists for each KPI; and 3) the optimal parameters vary for different KPIs. We also perform a SHAP-based sensitivity analysis to help resolve the parametric conflict between the KPIs. Finally, we formulate a maximization problem, show it is non-convex, and solve it using simulated annealing (SA). Results indicate that the ML-based, SA-aided solution is more than 14x faster than the brute-force approach, with a slight loss in optimality.
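The simulated-annealing step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the parameter grid, the `surrogate_score` function (a toy stand-in for the trained KPI predictors), and the cooling schedule are all assumptions chosen only to make the example self-contained.

```python
import math
import random

# Hypothetical discrete grid for the five mobility parameters.
# The value ranges are illustrative assumptions, not taken from the paper.
GRID = {
    "a5_ttt_ms": [64, 128, 256, 512],
    "a5_threshold1_dbm": [-120, -115, -110, -105],
    "a5_threshold2_dbm": [-115, -110, -105, -100],
    "a3_ttt_ms": [64, 128, 256, 512],
    "a3_offset_db": [1, 2, 3, 4, 5],
}
KEYS = list(GRID)

# Arbitrary "best" configuration used only by the toy surrogate below.
TOY_TARGET = {
    "a5_ttt_ms": 256,
    "a5_threshold1_dbm": -110,
    "a5_threshold2_dbm": -105,
    "a3_ttt_ms": 128,
    "a3_offset_db": 3,
}


def surrogate_score(cfg):
    """Toy stand-in for the ML KPI predictors (assumption).

    Returns 0 at TOY_TARGET and decreases with grid distance from it.
    """
    return -sum(
        abs(GRID[k].index(cfg[k]) - GRID[k].index(TOY_TARGET[k])) for k in KEYS
    )


def neighbor(cfg, rng):
    """Move one randomly chosen parameter one step along its grid."""
    new = dict(cfg)
    key = rng.choice(KEYS)
    idx = GRID[key].index(cfg[key]) + rng.choice([-1, 1])
    idx = max(0, min(len(GRID[key]) - 1, idx))  # clamp to the grid
    new[key] = GRID[key][idx]
    return new


def simulated_annealing(score, steps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Maximize `score` over GRID with a geometric cooling schedule."""
    rng = random.Random(seed)
    current = {k: rng.choice(GRID[k]) for k in KEYS}
    current_score = score(current)
    best, best_score = current, current_score
    for step in range(steps):
        temp = t_start * (t_end / t_start) ** (step / (steps - 1))
        cand = neighbor(current, rng)
        cand_score = score(cand)
        delta = cand_score - current_score
        # Always accept improvements; accept worse moves with
        # probability exp(delta / temp), which shrinks as temp cools.
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            current, current_score = cand, cand_score
            if current_score > best_score:
                best, best_score = current, current_score
    return best, best_score


best_cfg, best_val = simulated_annealing(surrogate_score)
```

In practice the score function would combine the predicted KPIs into a single objective; SA evaluates only a fraction of the grid, which is where a speed-up over exhaustive search would come from.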

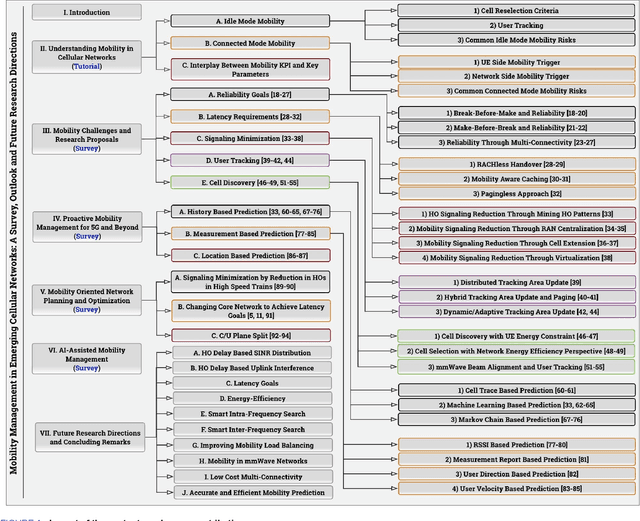

Mobility Management in Emerging Ultra-Dense Cellular Networks: A Survey, Outlook, and Future Research Directions

Sep 29, 2020

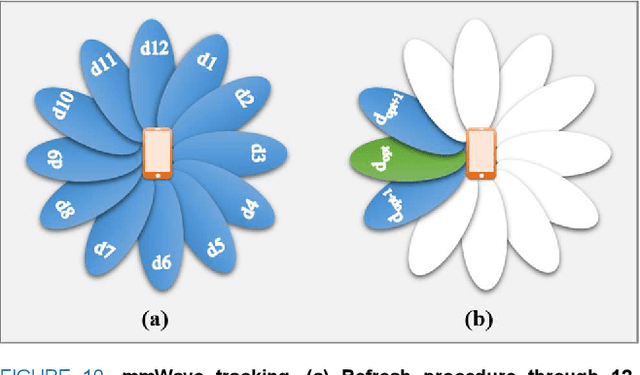

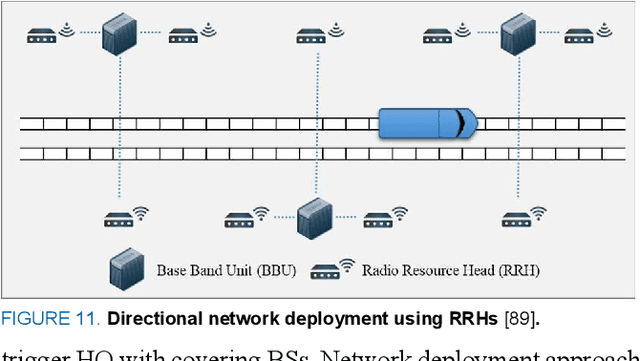

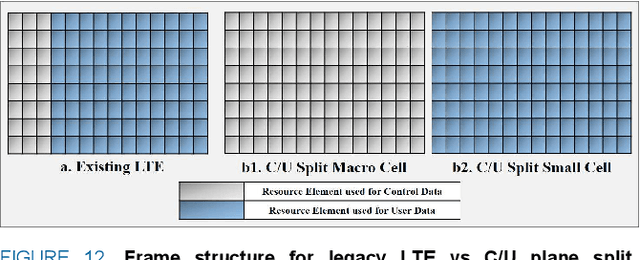

Abstract: The exponential rise in mobile traffic originating from mobile devices highlights the need to make mobility management in future networks more efficient and seamless than ever before. The ultra-dense cellular network vision, consisting of cells of varying sizes operating in conventional and mmWave bands, is being perceived as the panacea for the imminent capacity crunch. However, mobility challenges in an ultra-dense heterogeneous network with a motley of high-frequency and mmWave band cells will be unprecedented due to the plurality of handover instances and the resulting signaling overhead and data interruptions for a miscellany of devices. Similarly, issues like user tracking and cell discovery for mmWave with narrow beams need to be addressed before the ambitious gains of emerging mobile networks can be realized. Mobility challenges are further highlighted when considering the 5G deliverables of multi-Gbps wireless connectivity, <1 ms latency, and support for devices moving at a maximum speed of 500 km/h, to name a few. Despite its significance, few mobility surveys exist, with the majority focused on ad hoc networks. This paper is the first to provide a comprehensive survey on the panorama of mobility challenges in emerging ultra-dense mobile networks. We not only present a detailed tutorial on 5G mobility approaches and highlight key mobility risks of legacy networks, but also review key findings from recent studies and highlight the technical challenges and potential opportunities related to mobility from the perspective of emerging ultra-dense cellular networks.

A Machine Learning based Framework for KPI Maximization in Emerging Networks using Mobility Parameters

May 04, 2020

Abstract: Current LTE networks are faced with a plethora of Configuration and Optimization Parameters (COPs), both hard and soft, that are adjusted manually to manage the network and provide better Quality of Experience (QoE). With 5G in view, the number of these COPs is expected to reach 2000 per site, making manual tuning to find the optimal combination of these parameters an impossible feat. Alongside these thousands of COPs is the anticipated network densification in emerging networks, which exacerbates the burden on network operators in managing and optimizing the network. Hence, we propose a machine learning-based framework combined with a heuristic technique to discover the optimal combination of two pertinent COPs used in mobility, Cell Individual Offset (CIO) and Handover Margin (HOM), that maximizes a specific Key Performance Indicator (KPI), such as the mean Signal to Interference and Noise Ratio (SINR) of all connected users. The first part of the framework leverages machine learning to predict the KPI of interest for several different combinations of CIO and HOM. The resulting predictions are then fed into a Genetic Algorithm (GA), which searches for the combination of the two parameters that yields the maximum mean SINR for all users. The performance of the framework is evaluated using several machine learning techniques, with the CatBoost algorithm yielding the best prediction performance. Meanwhile, the GA is able to reveal the optimal parameter setting more efficiently, with a convergence time three orders of magnitude faster than the brute-force approach.
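The second stage of this framework, a genetic algorithm searching over CIO and HOM using a KPI predictor as the fitness function, can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: `predicted_mean_sinr` is a toy quadratic standing in for the trained predictor, and the value ranges, population size, and mutation rate are hypothetical.

```python
import random

# Hypothetical search ranges in dB (assumptions, not from the paper).
CIO_VALUES = list(range(-10, 11))
HOM_VALUES = list(range(0, 11))


def predicted_mean_sinr(cio, hom):
    """Toy stand-in for the trained KPI predictor (assumption).

    A smooth function that peaks at cio=2, hom=3 with value 10.0.
    """
    return 10.0 - 0.1 * (cio - 2) ** 2 - 0.2 * (hom - 3) ** 2


def genetic_search(fitness, pop_size=20, generations=30,
                   mutation_rate=0.2, seed=0):
    """Search (CIO, HOM) pairs that maximize `fitness`."""
    rng = random.Random(seed)
    pop = [(rng.choice(CIO_VALUES), rng.choice(HOM_VALUES))
           for _ in range(pop_size)]
    for _ in range(generations):
        # Truncation selection: keep the fitter half unchanged,
        # which also preserves the best individual found so far.
        pop.sort(key=lambda ind: fitness(*ind), reverse=True)
        parents = pop[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Crossover: CIO from one parent, HOM from the other.
            child = (a[0], b[1])
            # Mutation: occasionally resample one of the two genes.
            if rng.random() < mutation_rate:
                child = (rng.choice(CIO_VALUES), child[1])
            if rng.random() < mutation_rate:
                child = (child[0], rng.choice(HOM_VALUES))
            children.append(child)
        pop = parents + children
    return max(pop, key=lambda ind: fitness(*ind))


best_cio, best_hom = genetic_search(predicted_mean_sinr)
```

With a real predictor, each fitness call is a model inference rather than a network simulation, so the GA can evaluate many candidate settings cheaply.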
