Hasan Farooq

An AI-Enabled Framework to Defend Ingenious MDT-based Attacks on the Emerging Zero Touch Cellular Networks

Aug 05, 2023

Abstract: Deep automation provided by self-organizing network (SON) features and their emerging variants, such as zero touch automation solutions, is a key enabler for increasingly dense wireless networks and the pervasive Internet of Things (IoT). To realize their objectives, most automation functionalities rely on Minimization of Drive Test (MDT) reports, which are used to infer network state and performance and to dynamically change network parameters accordingly. However, because MDT reports are collected from commodity user devices, particularly low-cost IoT devices, they are a vulnerable entry point for launching adversarial attacks on emerging deeply automated wireless networks. This adds a new dimension to the security threats facing IoT and cellular networks, yet existing literature on IoT, SON, or zero touch automation does not address this important problem. In this paper, we investigate an impactful, first-of-its-kind adversarial attack that can be launched by exploiting malicious MDT reports from compromised user equipment (UE). We highlight the detrimental repercussions of this attack on the performance of common network automation functions. We also propose a novel Malicious MDT Reports Identification Framework (MRIF) as a countermeasure that uses Machine Learning to detect and eliminate malicious MDT reports, and we verify it through a use case. The defense mechanism can thus provide resilience and robustness for zero touch automation SON engines against adversarial MDT attacks.

Interpretable AI-based Large-scale 3D Pathloss Prediction Model for enabling Emerging Self-Driving Networks

Jan 30, 2022

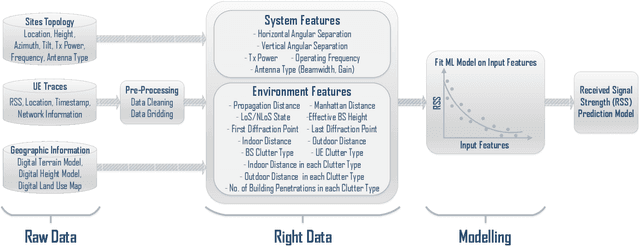

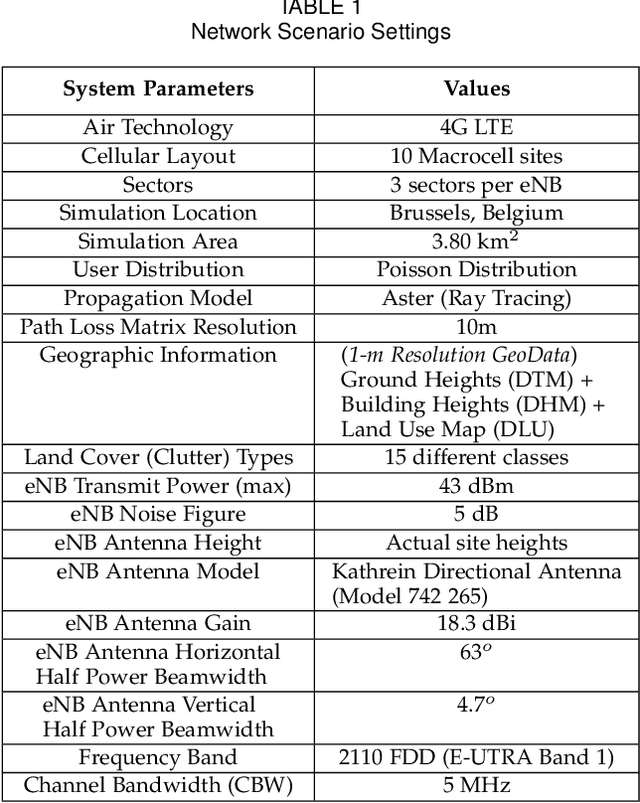

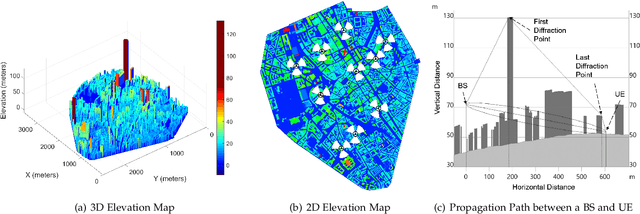

Abstract: In modern wireless communication systems, radio propagation modeling to estimate pathloss has always been a fundamental task in system design and optimization. State-of-the-art empirical propagation models are based on measurements in specific environments and are limited in their ability to capture the idiosyncrasies of various propagation environments. To cope with this problem, commercial planning tools use ray-tracing based solutions, but these tend to be extremely time-consuming and expensive. We propose a Machine Learning (ML)-based model that leverages novel key predictors for estimating pathloss. By quantitatively evaluating various ML algorithms in terms of predictive, generalization, and computational performance, we show that the Light Gradient Boosting Machine (LightGBM) algorithm overall outperforms the others, even with sparse training data, providing a 65% increase in prediction accuracy compared to empirical models and a 13x decrease in prediction time compared to ray-tracing. To address the interpretability challenge that thwarts the adoption of most ML-based models, we perform extensive secondary analysis using the SHapley Additive exPlanations (SHAP) method, yielding many practically useful insights that can be leveraged for intelligently tuning the network configuration, selectively enriching training data in real networks, and building lighter ML-based propagation models to enable low-latency use cases.

Coordinated Reinforcement Learning for Optimizing Mobile Networks

Sep 30, 2021

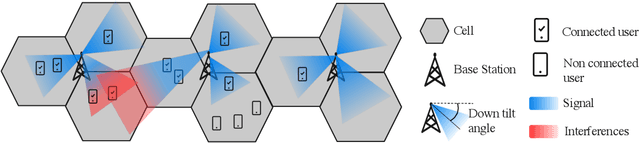

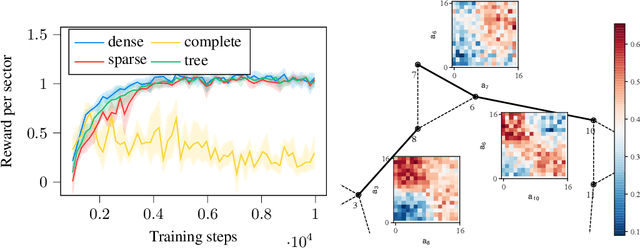

Abstract: Mobile networks are composed of many base stations, and for each of them many parameters must be optimized to provide good service. Automatically and dynamically optimizing all these entities is challenging, as they are sensitive to variations in the environment and can affect each other through interference. Reinforcement learning (RL) algorithms are good candidates for automatically learning base station configuration strategies from incoming data, but they are often hard to scale to many agents. In this work, we demonstrate how to use coordination graphs and reinforcement learning in a complex application involving hundreds of cooperating agents. We show how mobile networks can be modeled using coordination graphs and how network optimization problems can be solved efficiently using multi-agent reinforcement learning. The graph structure arises naturally from expert knowledge about the network and makes it possible to explicitly learn coordinating behaviors between the antennas through edge value functions represented by neural networks. We show empirically that coordinated reinforcement learning outperforms other methods. The use of local RL updates and parameter sharing can handle a large number of agents without sacrificing coordination, which makes the approach well suited to optimizing the ever denser networks brought by 5G and beyond.
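
To make the idea of edge value functions concrete, here is a minimal Python sketch, not the paper's method: a toy coordination graph of three hypothetical cells (A, B, C) on a line, where the global value is the sum of pairwise edge values Q_ij(a_i, a_j) and the best joint action is found by exhaustive search. The binary action set, the edge weights, and the fixed `edge_value` function are all illustrative assumptions; in the paper, the edge value functions are neural networks learned with RL.

```python
from itertools import product

# Hypothetical toy network: three cells on a line, A - B - C.
# Each cell picks a binary action: 0 = keep power, 1 = boost power.
AGENTS = ("A", "B", "C")
ACTIONS = (0, 1)

# Edges of the coordination graph with a hypothetical interference weight:
# A and B overlap heavily, B and C only slightly.
EDGES = {("A", "B"): 3.0, ("B", "C"): 0.5}


def edge_value(a_i: int, a_j: int, weight: float) -> float:
    """Hypothetical edge value Q_ij(a_i, a_j): each boost earns +1, but
    boosting both ends of an edge costs `weight` in mutual interference.
    (Stands in for a learned neural-network edge value function.)"""
    return a_i + a_j - weight * a_i * a_j


def global_value(joint: dict) -> float:
    """Global value of a joint action = sum of the edge value functions."""
    return sum(edge_value(joint[i], joint[j], w) for (i, j), w in EDGES.items())


def best_joint_action() -> tuple:
    """Exhaustive maximization over all joint actions (fine for 3 agents;
    large graphs need variable elimination or max-plus message passing)."""
    best = None
    for combo in product(ACTIONS, repeat=len(AGENTS)):
        joint = dict(zip(AGENTS, combo))
        value = global_value(joint)
        if best is None or value > best[1]:
            best = (joint, value)
    return best


if __name__ == "__main__":
    joint, value = best_joint_action()
    print(joint, value)                            # {'A': 0, 'B': 1, 'C': 1} 2.5
    print(global_value({"A": 1, "B": 1, "C": 1}))  # 0.5
```

The coordinated choice scores 2.5, whereas every cell boosting on its own scores only 0.5, which illustrates why neighboring agents benefit from coordinating. Exhaustive search grows exponentially with the number of agents, so graphs with hundreds of agents would need an elimination or message-passing scheme instead.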

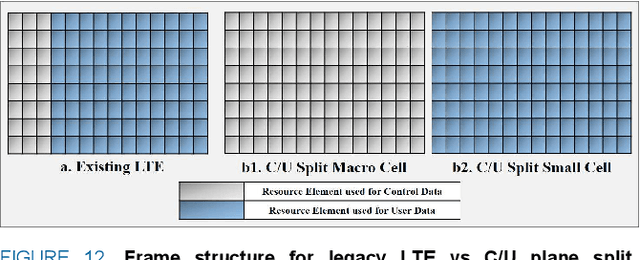

Mobility Management in Emerging Ultra-Dense Cellular Networks: A Survey, Outlook, and Future Research Directions

Sep 29, 2020

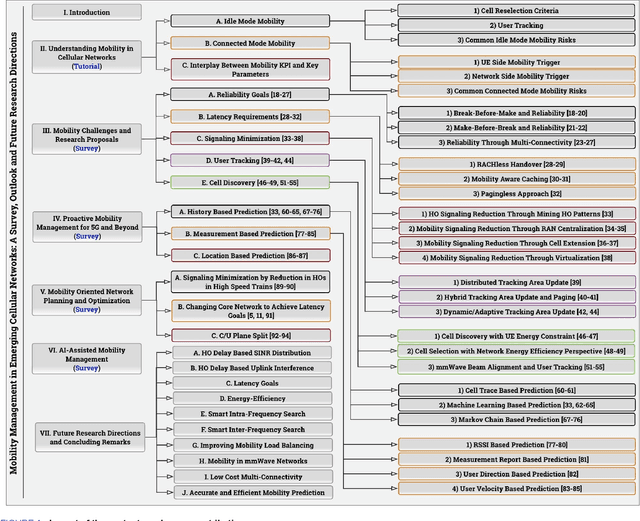

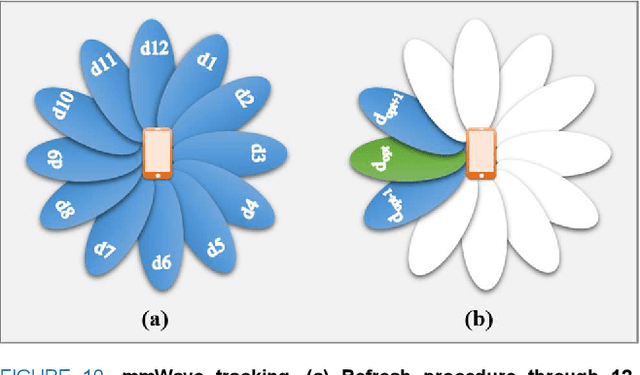

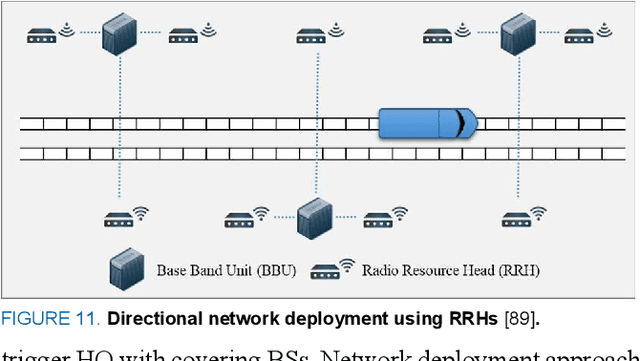

Abstract: The exponential rise in mobile traffic originating from mobile devices highlights the need for making mobility management in future networks even more efficient and seamless than ever before. The Ultra-Dense Cellular Network vision, consisting of cells of varying sizes operating in conventional and mmWave bands, is being perceived as the panacea for the imminent capacity crunch. However, mobility challenges in an ultra-dense heterogeneous network with a motley mix of high-frequency and mmWave band cells will be unprecedented, due to the plurality of handover instances and the resulting signaling overhead and data interruptions for a wide variety of devices. Similarly, issues like user tracking and cell discovery for mmWave with narrow beams need to be addressed before the ambitious gains of emerging mobile networks can be realized. Mobility challenges are further highlighted when considering 5G deliverables such as multi-Gbps wireless connectivity, <1 ms latency, and support for devices moving at a maximum speed of 500 km/h. Despite its significance, few mobility surveys exist, and most of them focus on ad hoc networks. This paper is the first to provide a comprehensive survey on the panorama of mobility challenges in emerging ultra-dense mobile networks. We not only present a detailed tutorial on 5G mobility approaches and highlight key mobility risks of legacy networks, but also review key findings from recent studies and highlight the technical challenges and potential opportunities related to mobility from the perspective of emerging ultra-dense cellular networks.

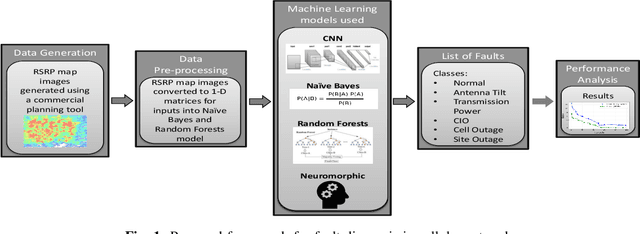

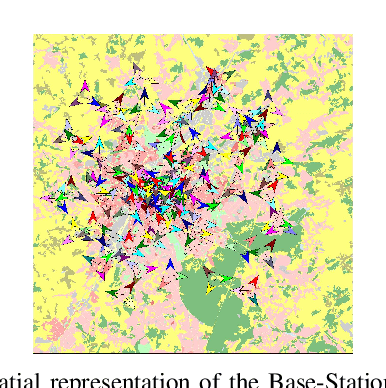

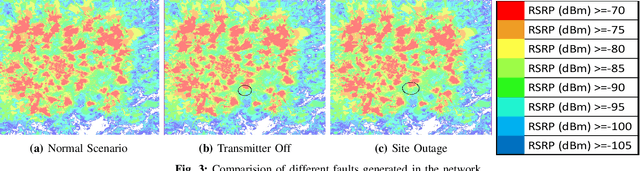

Neuromorphic AI Empowered Root Cause Analysis of Faults in Emerging Networks

May 04, 2020

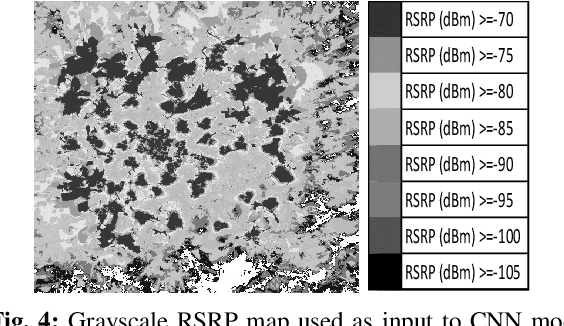

Abstract: Mobile cellular network operators spend nearly a quarter of their revenue on network maintenance and management. A significant portion of that budget is spent on resolving faults diagnosed in the system that disrupt or degrade cellular services. Historically, the operations to detect, diagnose, and resolve issues were carried out by human experts. However, with diversifying cell types, increased complexity, and growing cell density, this methodology is becoming less viable, both technically and financially. To cope with this problem, research on self-healing solutions has gained significant momentum in recent years. One of the most desirable features of the self-healing paradigm is automated fault diagnosis. While several machine learning models for fault detection and diagnosis have been proposed recently, these schemes share a common tendency to rely on human expert contribution for fault diagnosis and prediction in one way or another. In this paper, we propose an AI-based fault diagnosis solution that offers a key step towards a completely automated self-healing system that does not require human expert input. The proposed solution leverages a Random Forests classifier, a Convolutional Neural Network, and a neuromorphic deep learning model, using generated RSRP map images of the faults. We compare the performance of the proposed solution against state-of-the-art solutions in the literature, which mostly use Naive Bayes models, while considering seven different fault types. Results show that the neuromorphic computing model achieves higher classification accuracy than the other models, even with relatively small training data.

Can Machine Learning Be Used to Recognize and Diagnose Coughs?

Apr 01, 2020

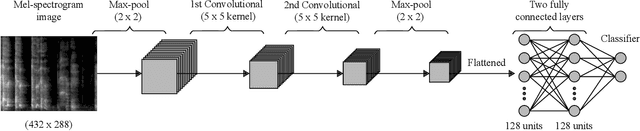

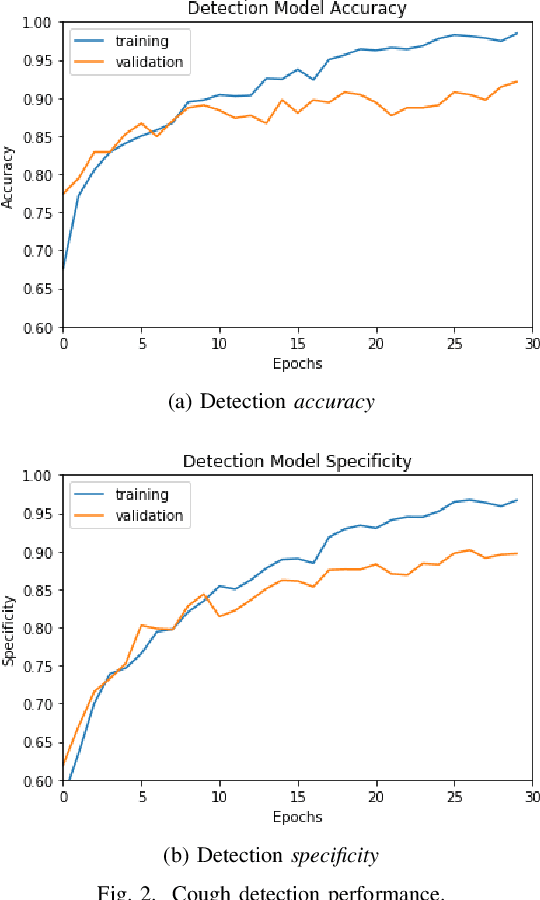

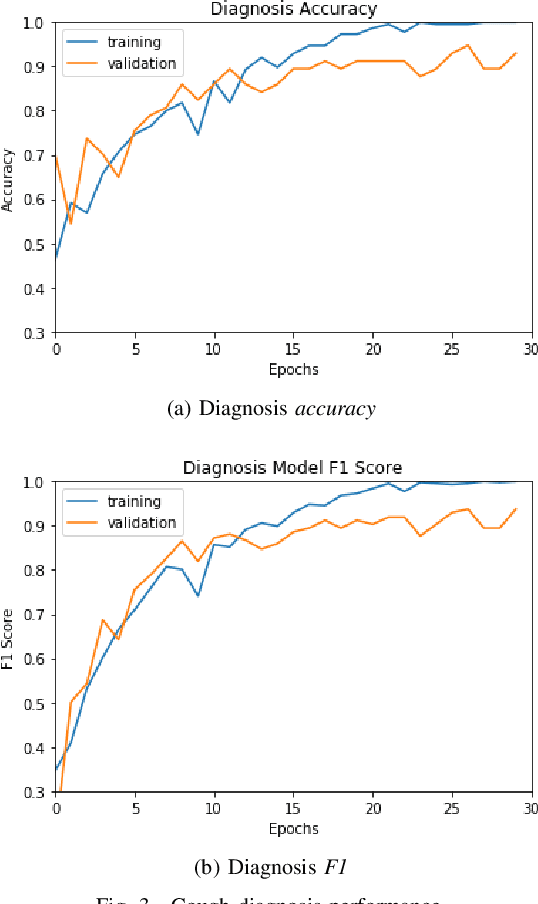

Abstract: 5G is bringing new use cases to the forefront, one of the most prominent being machine learning empowered health care. Since respiratory infections are a notable modern medical concern and coughing is a common symptom of them, a system for recognizing and diagnosing infections based on raw cough data would have a multitude of beneficial research and medical applications. In the literature, machine learning has been successfully used to detect cough events in controlled environments. In this work, we present a novel system that utilizes Convolutional Neural Networks (CNNs) to detect coughs within environmental audio and to diagnose three potential illnesses (i.e., Bronchitis, Bronchiolitis, and Pertussis) based on their unique cough audio features. Our detection model achieves an accuracy of 90.17% and a specificity of 89.73%, whereas the diagnosis model achieves an accuracy of about 94.74% and an F1 score of 93.73%. These results show that our system is able to detect and separate cough events from background noise. Moreover, our single diagnosis model is capable of distinguishing between different illnesses without the need for separate models.
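
The results above are reported as accuracy, specificity, and F1 score. As a reminder of how these metrics follow from a binary confusion matrix (cough vs. background), here is a small Python sketch. The counts are hypothetical and are not the paper's data, and the abstract does not state how the F1 score is aggregated across the three illness classes.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts for a binary cough detector (positive class = cough)."""
    tp: int  # cough segments correctly flagged as cough
    fp: int  # background noise wrongly flagged as cough
    tn: int  # background noise correctly rejected
    fn: int  # cough segments missed


def accuracy(c: Confusion) -> float:
    # Share of all segments classified correctly.
    return (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)


def specificity(c: Confusion) -> float:
    # True-negative rate: share of non-cough audio correctly rejected.
    return c.tn / (c.tn + c.fp)


def f1_score(c: Confusion) -> float:
    # Harmonic mean of precision and recall (sensitivity).
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical counts, not taken from the paper.
    c = Confusion(tp=90, fp=10, tn=80, fn=20)
    print(f"accuracy={accuracy(c):.3f}")        # accuracy=0.850
    print(f"specificity={specificity(c):.3f}")  # specificity=0.889
    print(f"f1={f1_score(c):.3f}")              # f1=0.857
```

Specificity is worth reporting alongside accuracy for a detector like this one, because it isolates how often background noise is mistaken for a cough.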

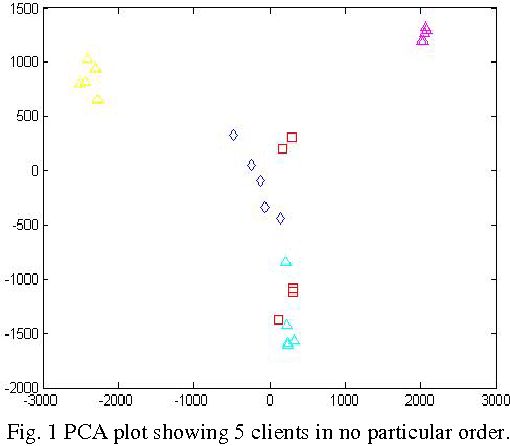

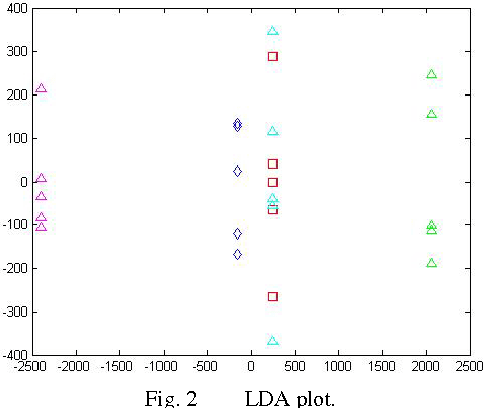

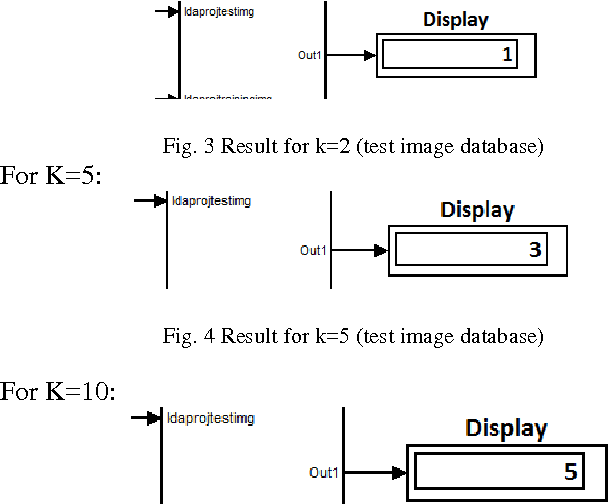

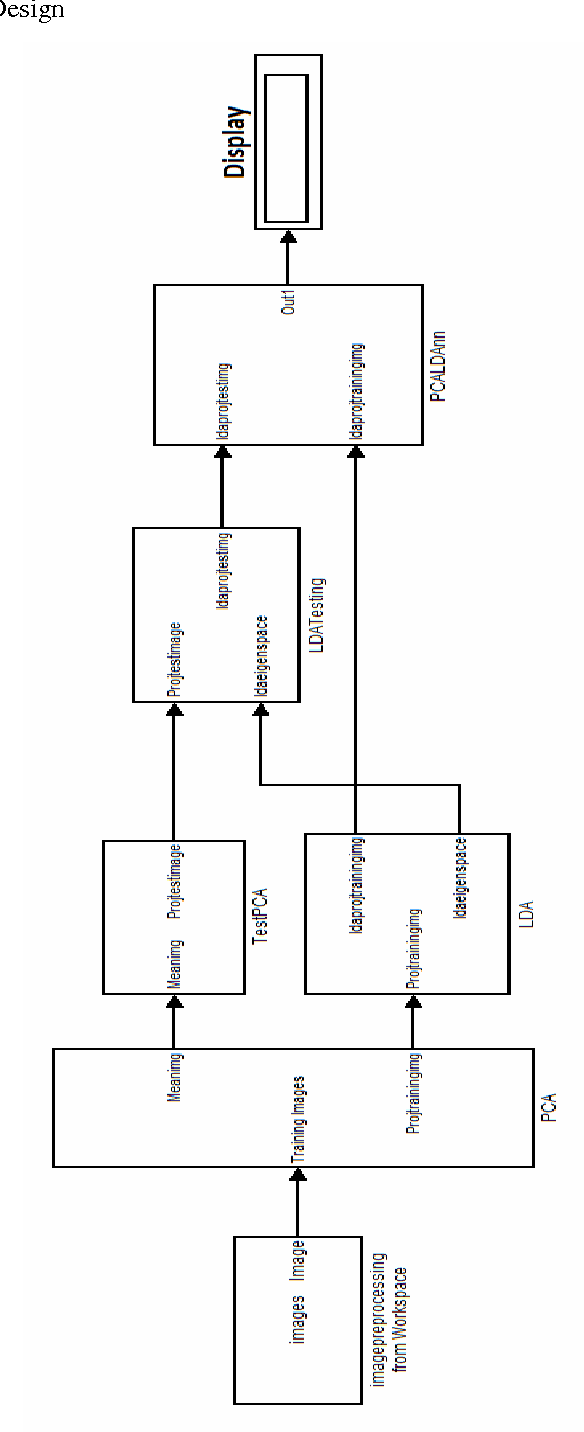

Principal Component Analysis-Linear Discriminant Analysis Feature Extractor for Pattern Recognition

Apr 05, 2012

Abstract: Robustness of embedded biometric systems is of prime importance with the emergence of fourth-generation communication devices and advancements in security systems. This paper presents the realization of such technologies, which demand reliable and error-free biometric identity verification systems. High-dimensional patterns cannot be handled directly because of the eigen-decomposition required in the high-dimensional image space and the degeneration of the scatter matrices when the sample size is small. Generalization, dimensionality reduction, and margin maximization are controlled by minimizing the weight vectors. Results show good pattern recognition by the multimodal biometric system proposed in this paper. This paper investigates a biometric identity system using Principal Component Analysis and Linear Discriminant Analysis with K-Nearest Neighbor and implements such a system in real time using SignalWAVE.
