Julien Forgeat

Coordinated Reinforcement Learning for Optimizing Mobile Networks

Sep 30, 2021

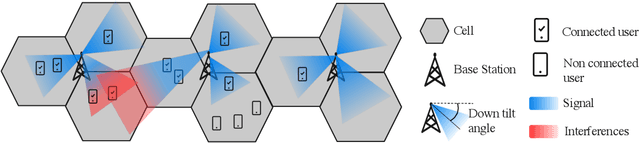

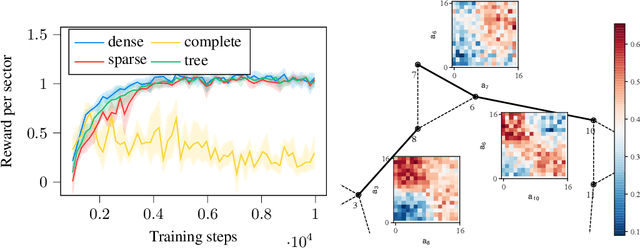

Abstract:Mobile networks are composed of many base stations and for each of them many parameters must be optimized to provide good services. Automatically and dynamically optimizing all these entities is challenging as they are sensitive to variations in the environment and can affect each other through interferences. Reinforcement learning (RL) algorithms are good candidates to automatically learn base station configuration strategies from incoming data but they are often hard to scale to many agents. In this work, we demonstrate how to use coordination graphs and reinforcement learning in a complex application involving hundreds of cooperating agents. We show how mobile networks can be modeled using coordination graphs and how network optimization problems can be solved efficiently using multi- agent reinforcement learning. The graph structure occurs naturally from expert knowledge about the network and allows to explicitly learn coordinating behaviors between the antennas through edge value functions represented by neural networks. We show empirically that coordinated reinforcement learning outperforms other methods. The use of local RL updates and parameter sharing can handle a large number of agents without sacrificing coordination which makes it well suited to optimize the ever denser networks brought by 5G and beyond.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge