Martin Uecker

Phase-Pole-Free Images and Smooth Coil Sensitivity Maps by Regularized Nonlinear Inversion

Aug 06, 2025

Abstract:Purpose: Phase singularities are a common problem in image reconstruction with auto-calibrated sensitivities due to an inherent ambiguity of the estimation problem. The purpose of this work is to develop a method for detecting and correcting phase poles in non-linear inverse (NLINV) reconstruction of MR images and coil sensitivity maps. Methods: Phase poles are detected in individual coil sensitivity maps by computing the curl in each pixel. A weighted average of the curl in each coil is computed to detect phase poles. Phase pole detection and correction is then integrated into the iteratively regularized Gauss-Newton method of the NLINV algorithm, which then avoids phase singularities in the reconstructed images. The method is evaluated for reconstruction of accelerated Cartesian MPRAGE data of the brain and interactive radial real-time MRI of the human heart. Results: Phase poles are reliably removed in NLINV reconstructions for both applications. NLINV with phase pole correction can reliably and efficiently estimate coil sensitivity profiles free from singularities even from very small ($7\times7$) auto-calibration (AC) regions. Conclusion: NLINV emerges as an efficient and reliable tool for image reconstruction and coil sensitivity estimation in challenging MRI applications.
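
The curl-based detection described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `phase_winding` and `detect_poles`, the magnitude-squared coil weighting, and the 0.5 threshold are assumptions chosen for illustration. The sketch computes the discrete curl of the wrapped phase gradient around every 2x2 pixel loop, which is zero for smooth phase and +1 or -1 around a phase pole.

```python
import numpy as np

def wrap(a):
    """Wrap phase differences into [-pi, pi)."""
    return (a + np.pi) % (2 * np.pi) - np.pi

def phase_winding(img):
    """Discrete curl of the wrapped phase gradient on each 2x2 pixel loop.

    Returns an integer array of shape (ny-1, nx-1); +1 or -1 marks a pole.
    """
    ph = np.angle(img)
    dx = wrap(np.diff(ph, axis=1))  # ph[y, x+1] - ph[y, x]
    dy = wrap(np.diff(ph, axis=0))  # ph[y+1, x] - ph[y, x]
    # Loop (y,x) -> (y,x+1) -> (y+1,x+1) -> (y+1,x) -> (y,x)
    circ = dx[:-1, :] + dy[:, 1:] - dx[1:, :] - dy[:, :-1]
    return np.rint(circ / (2 * np.pi)).astype(int)

def detect_poles(coils, thresh=0.5):
    """Weighted average of the per-coil curl (weights are an assumption).

    coils: complex array of shape (ncoils, ny, nx).
    """
    curls = np.stack([phase_winding(c) for c in coils])
    mag = np.abs(coils) ** 2
    # Weight of each loop: mean squared magnitude of its four corners.
    w = (mag[:, :-1, :-1] + mag[:, :-1, 1:]
         + mag[:, 1:, :-1] + mag[:, 1:, 1:]) / 4
    avg = (w * curls).sum(axis=0) / (w.sum(axis=0) + 1e-12)
    return np.abs(avg) > thresh
```

Averaging over coils makes the detection less sensitive to regions where a single coil has low signal and therefore unreliable phase.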

Autoregressive Image Diffusion: Generation of Image Sequence and Application in MRI

May 24, 2024

Abstract:Magnetic resonance imaging (MRI) is a widely used non-invasive imaging modality. However, a persistent challenge lies in balancing image quality with imaging speed. This trade-off is primarily constrained by k-space measurements, which traverse specific trajectories in the spatial Fourier domain (k-space). These measurements are often undersampled to shorten acquisition times, resulting in image artifacts and compromised quality. Generative models learn image distributions and can be used to reconstruct high-quality images from undersampled k-space data. In this work, we present the autoregressive image diffusion (AID) model for image sequences and use it to sample the posterior for accelerated MRI reconstruction. The algorithm incorporates both undersampled k-space and pre-existing information. Models trained on the fastMRI dataset are evaluated comprehensively. The results show that the AID model can robustly generate sequentially coherent image sequences. In 3D and dynamic MRI, the AID model can outperform the standard diffusion model and reduce hallucinations, due to the learned inter-image dependencies.

Self Supervised Learning for Improved Calibrationless Radial MRI with NLINV-Net

Feb 09, 2024

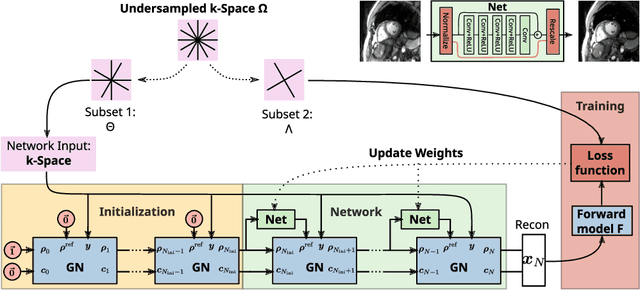

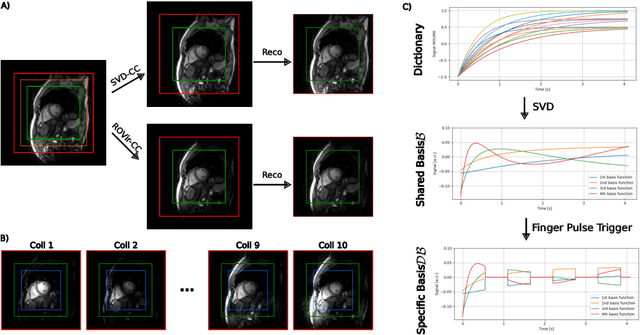

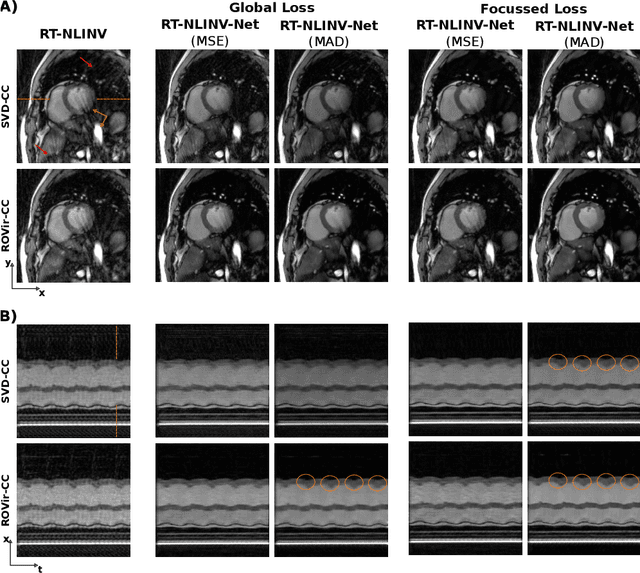

Abstract:Purpose: To develop a neural network architecture for improved calibrationless reconstruction of radial data when no ground truth is available for training. Methods: NLINV-Net is a model-based neural network architecture that directly estimates images and coil sensitivities from (radial) k-space data via non-linear inversion (NLINV). Combined with a training strategy using self-supervision via data undersampling (SSDU), it can be used for imaging problems where no ground truth reconstructions are available. We validated the method for (1) real-time cardiac imaging and (2) single-shot subspace-based quantitative T1 mapping. Furthermore, region-optimized virtual (ROVir) coils were used to suppress artifacts stemming from outside the FoV and to focus the k-space based SSDU loss on the region of interest. NLINV-Net based reconstructions were compared with conventional NLINV and PI-CS (parallel imaging + compressed sensing) reconstruction, and the effect of the region-optimized virtual coils and the type of training loss was evaluated qualitatively. Results: NLINV-Net based reconstructions contain significantly less noise than the NLINV-based counterpart. ROVir coils effectively suppress streaking artifacts which are not suppressed by the neural networks, while the ROVir-based focussed loss leads to visually sharper time series for the movement of the myocardial wall in cardiac real-time imaging. For quantitative imaging, T1 maps reconstructed using NLINV-Net show similar quality as PI-CS reconstructions, but NLINV-Net does not require slice-specific tuning of the regularization parameter. Conclusion: NLINV-Net is a versatile tool for calibrationless imaging which can be used in challenging imaging scenarios where a ground truth is not available.
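
The core idea of self-supervision via data undersampling can be sketched as follows: the acquired k-space samples are split into two disjoint sets, one fed to the network and one held out to compute the training loss. The helper `ssdu_split`, the uniform random selection, and the default `loss_fraction` are hypothetical choices for illustration, not details taken from the paper.

```python
import numpy as np

def ssdu_split(mask, loss_fraction=0.2, seed=0):
    """Split a sampling mask into disjoint input and loss masks.

    mask: boolean array marking acquired k-space samples.
    loss_fraction: share of acquired samples held out for the loss
                   (an assumed value, to be tuned in practice).
    """
    mask = np.asarray(mask, dtype=bool)
    rng = np.random.default_rng(seed)
    idx = np.flatnonzero(mask)  # flat indices of acquired samples
    n_loss = int(round(loss_fraction * idx.size))
    loss_idx = rng.choice(idx, size=n_loss, replace=False)
    loss_mask = np.zeros(mask.shape, dtype=bool)
    loss_mask.flat[loss_idx] = True
    input_mask = mask & ~loss_mask
    return input_mask, loss_mask
```

During training, the reconstruction would be computed from the samples in `input_mask` only, and the predicted k-space would be compared with the measured data on `loss_mask`.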

Assessment of Deep Learning Segmentation for Real-Time Free-Breathing Cardiac Magnetic Resonance Imaging

Dec 01, 2023

Abstract:In recent years, a variety of deep learning networks for cardiac MRI (CMR) segmentation have been developed and analyzed. However, nearly all of them are focused on cine CMR under breath-hold. In this work, the accuracy of deep learning methods is assessed for volumetric analysis (via segmentation) of the left ventricle in real-time free-breathing CMR at rest and under exercise stress. Data from healthy volunteers (n=15) for cine and real-time free-breathing CMR were analyzed retrospectively. Segmentations of a commercial software (comDL) and a freely available neural network (nnU-Net) were compared to a reference created via the manual correction of comDL segmentation. Segmentation of left ventricular endocardium (LV), left ventricular myocardium (MYO), and right ventricle (RV) is evaluated for both end-systolic and end-diastolic phases and analyzed with Dice's coefficient (DC). The volumetric analysis includes LV end-diastolic volume (EDV), LV end-systolic volume (ESV), and LV ejection fraction (EF). For cine CMR, nnU-Net and comDL achieve a DC above 0.95 for LV and 0.9 for MYO and RV. For real-time CMR, the accuracy of nnU-Net exceeds that of comDL overall. For real-time CMR at rest, nnU-Net achieves a DC of 0.94 for LV, 0.89 for MYO, and 0.90 for RV; mean absolute differences between nnU-Net and reference are 2.9 mL for EDV, 3.5 mL for ESV and 2.6% for EF. For real-time CMR under exercise stress, nnU-Net achieves a DC of 0.92 for LV, 0.85 for MYO, and 0.83 for RV; mean absolute differences between nnU-Net and reference are 11.4 mL for EDV, 2.9 mL for ESV and 3.6% for EF. Deep learning methods designed or trained for cine CMR segmentation can perform well on real-time CMR. For real-time free-breathing CMR at rest, the performance of deep learning methods is comparable to inter-observer variability in cine CMR and is usable for fully automatic segmentation.
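
For readers unfamiliar with the two metrics reported above, the following sketch shows the standard definitions of Dice's coefficient for binary masks and of the ejection fraction computed from EDV and ESV. The function names are illustrative and the example numbers are made up; they are not data from the study.

```python
import numpy as np

def dice(a, b):
    """Dice's coefficient 2|A n B| / (|A| + |B|) for two binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * (a & b).sum() / denom

def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction in percent from end-diastolic and end-systolic volume."""
    return 100.0 * (edv_ml - esv_ml) / edv_ml

# Toy example with invented values
pred = [[1, 1], [0, 0]]
ref = [[1, 0], [0, 0]]
print(dice(pred, ref))                # 2*1 / (2+1)
print(ejection_fraction(120.0, 48.0))
```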

Generative Image Priors for MRI Reconstruction Trained from Magnitude-Only Images

Aug 04, 2023

Abstract:Purpose: In this work, we present a workflow to construct generic and robust generative image priors from magnitude-only images. The priors can then be used for regularization in reconstruction to improve image quality. Methods: The workflow begins with the preparation of training datasets from magnitude-only MR images. This dataset is then augmented with phase information and used to train generative priors of complex images. Finally, trained priors are evaluated using both linear and nonlinear reconstruction for compressed sensing parallel imaging with various undersampling schemes. Results: The results of our experiments demonstrate that priors trained on complex images outperform priors trained only on magnitude images. Additionally, a prior trained on a larger dataset exhibits higher robustness. Finally, we show that the generative priors are superior to $\ell_1$-wavelet regularization for compressed sensing parallel imaging with high undersampling. Conclusion: These findings stress the importance of incorporating phase information and leveraging large datasets to raise the performance and reliability of the generative priors for MRI reconstruction. Phase augmentation makes it possible to use existing image databases for training.

Deep, Deep Learning with BART

Feb 28, 2022

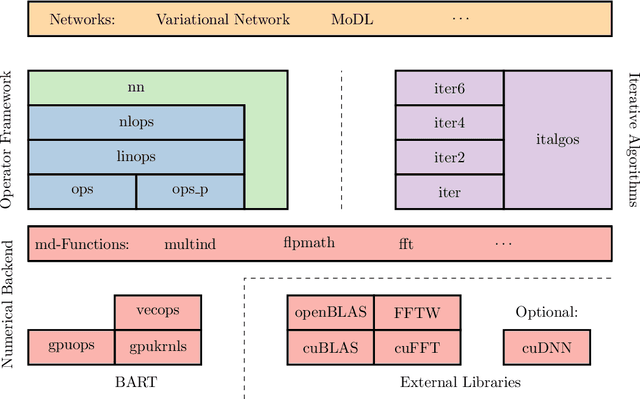

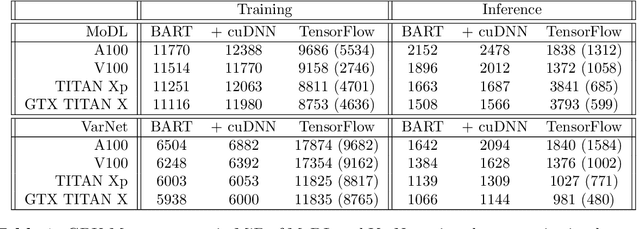

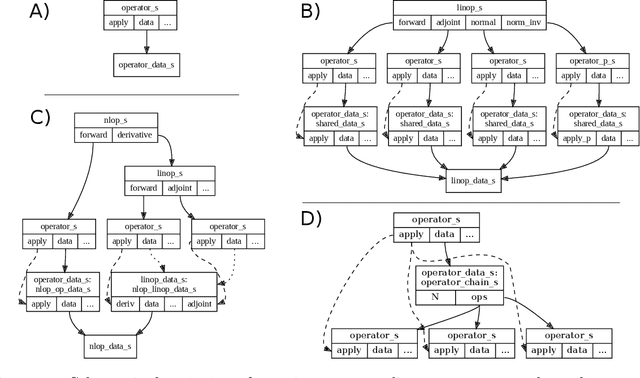

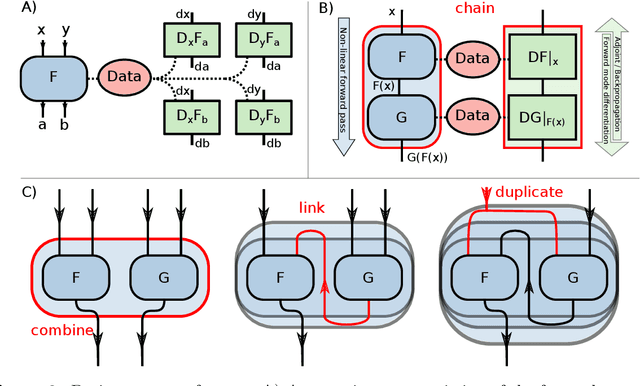

Abstract:Purpose: To develop a deep-learning-based image reconstruction framework for reproducible research in MRI. Methods: The BART toolbox offers a rich set of implementations of calibration and reconstruction algorithms for parallel imaging and compressed sensing. In this work, BART was extended by a non-linear operator framework that provides automatic differentiation to allow computation of gradients. Existing MRI-specific operators of BART, such as the non-uniform fast Fourier transform, are directly integrated into this framework and are complemented by common building blocks used in neural networks. To evaluate the use of the framework for advanced deep-learning-based reconstruction, two state-of-the-art unrolled reconstruction networks, namely the Variational Network [1] and MoDL [2], were implemented. Results: State-of-the-art deep image-reconstruction networks can be constructed and trained using BART's gradient-based optimization algorithms. The BART implementation achieves similar performance in terms of training time and reconstruction quality compared to the original implementations based on TensorFlow. Conclusion: By integrating non-linear operators and neural networks into BART, we provide a general framework for deep-learning-based reconstruction in MRI.

MRI Reconstruction via Data Driven Markov Chain with Joint Uncertainty Estimation

Feb 03, 2022

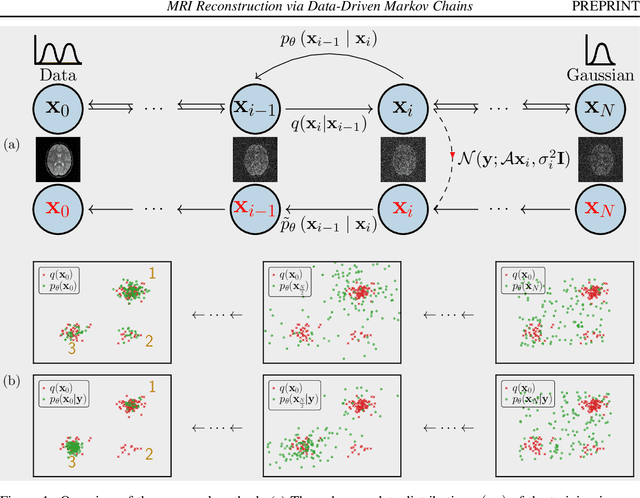

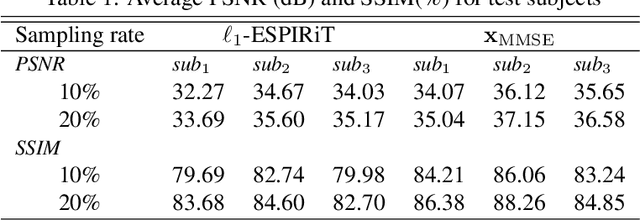

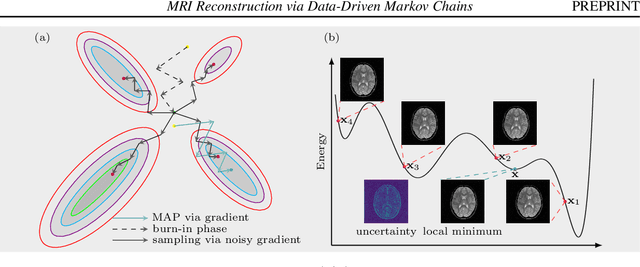

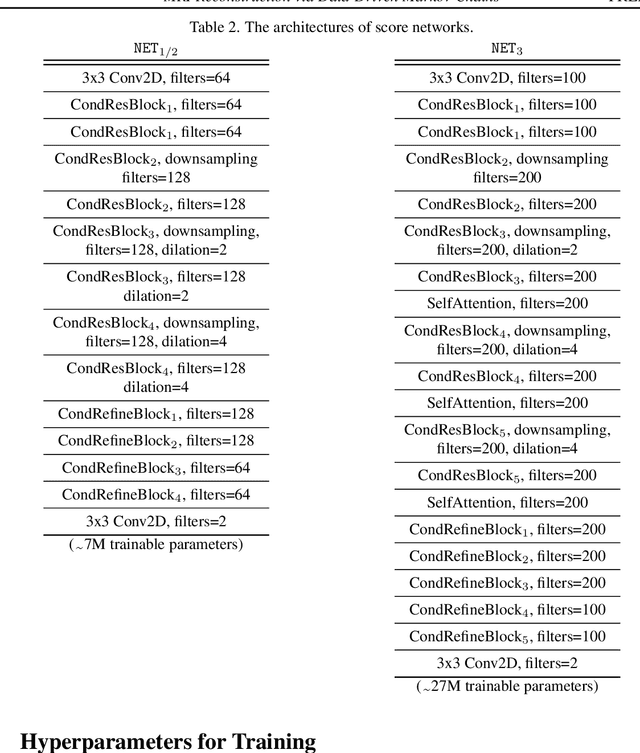

Abstract:We introduce a framework that enables efficient sampling from learned probability distributions for MRI reconstruction. Different from conventional deep learning-based MRI reconstruction techniques, samples are drawn from the posterior distribution given the measured k-space using the Markov chain Monte Carlo (MCMC) method. In addition to the maximum a posteriori (MAP) estimate for the image, which can be obtained with conventional methods, the minimum mean square error (MMSE) estimate and uncertainty maps can also be computed. The data-driven Markov chains are constructed from the generative model learned from a given image database and are independent of the forward operator that is used to model the k-space measurement. This provides flexibility because the method can be applied to k-space acquired with different sampling schemes or receive coils using the same pre-trained models. Furthermore, we use a framework based on a reverse diffusion process to be able to utilize advanced generative models. The performance of the method is evaluated on an open dataset using 10-fold accelerated acquisition.
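
Once posterior samples are available, the MMSE estimate and an uncertainty map follow from simple sample statistics. The sketch below assumes the samples have already been drawn by some sampler and are stacked along the first axis; the function name `posterior_summaries` and the use of the pixel-wise standard deviation as the uncertainty measure are illustrative assumptions, not the paper's code.

```python
import numpy as np

def posterior_summaries(samples):
    """Summarize posterior samples of an image.

    samples: array of shape (nsamples, ny, nx), real or complex.
    Returns the sample mean (an estimate of the MMSE reconstruction)
    and the pixel-wise standard deviation (a simple uncertainty map).
    """
    samples = np.asarray(samples)
    mmse = samples.mean(axis=0)
    # For complex input, np.std uses |x - mean|, so the result is real.
    uncertainty = samples.std(axis=0)
    return mmse, uncertainty
```

The sample mean approximates the posterior mean, which minimizes the expected squared error, whereas a MAP estimate corresponds to the mode of the posterior.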

Free-Breathing Water, Fat, $R_2^{\star}$ and $B_0$ Field Mapping of the Liver Using Multi-Echo Radial FLASH and Regularized Model-based Reconstruction

Jan 07, 2021

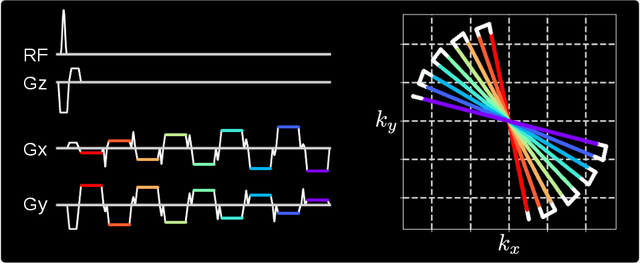

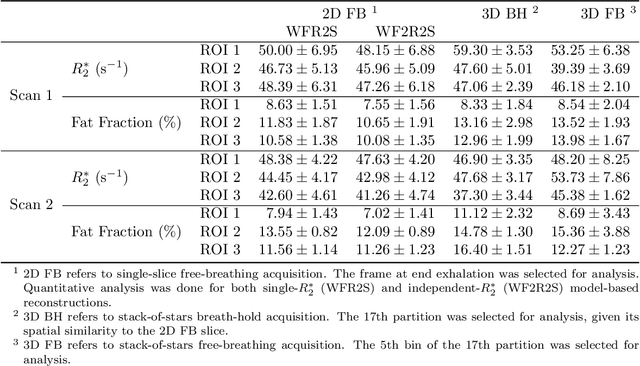

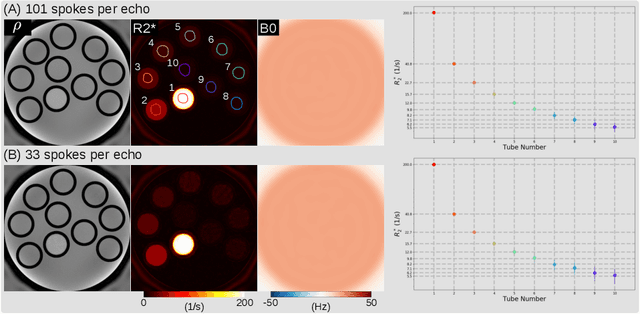

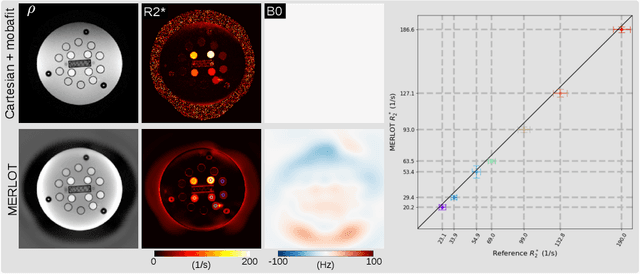

Abstract:Purpose: To achieve free-breathing quantitative fat and $R_2^{\star}$ mapping of the liver using a generalized model-based iterative reconstruction, dubbed MERLOT. Methods: For acquisition, we use a multi-echo radial FLASH sequence that acquires multiple echoes with different complementary radial spoke encodings. We investigate real-time single-slice and volumetric multi-echo radial FLASH acquisition. For the latter, the sampling scheme is extended to a volumetric stack-of-stars acquisition. Model-based reconstruction based on generalized nonlinear inversion is used to jointly estimate water, fat, $R_2^{\star}$, $B_0$ field inhomogeneity, and coil sensitivity maps from the multi-coil multi-echo radial spokes. Spatial smoothness regularization is applied to the $B_0$ field and coil sensitivity maps, whereas joint sparsity regularization is employed for the other parameter maps. The method integrates calibration-less parallel imaging and compressed sensing and was implemented in BART. For the volumetric acquisition, the respiratory motion is resolved with self-gating using SSA-FARY. The quantitative accuracy of the proposed method was validated via numerical simulation, the NIST phantom, a water/fat phantom, and in vivo liver studies. Results: For real-time acquisition, the proposed model-based reconstruction allowed acquisition of dynamic liver fat fraction and $R_2^{\star}$ maps at a temporal resolution of 0.3 s per frame. For the volumetric acquisition, whole liver coverage could be achieved in under 2 minutes using the self-gated motion-resolved reconstruction. Conclusion: The proposed multi-echo radial sampling sequence achieves fast k-space coverage and is robust to motion. The proposed model-based reconstruction yields spatially and temporally resolved liver fat fraction, $R_2^{\star}$ and $B_0$ field maps at high undersampling factors and with volume coverage.
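
To make the jointly estimated parameters concrete, the sketch below evaluates a commonly used multi-echo signal model for a single voxel, in which water and fat share one $R_2^{\star}$ decay and one $B_0$ off-resonance. The single-peak fat model, the assumed chemical shift of about -440 Hz (roughly 3 T), the sign convention, and the function names are simplifying assumptions; the abstract does not specify the fat spectrum used in the paper.

```python
import numpy as np

def multi_echo_signal(water, fat, r2s, b0_hz, te, fat_shift_hz=-440.0):
    """Voxel signal at echo times te (in seconds).

    s(TE) = (W + F * exp(i 2 pi f_F TE)) * exp(-R2* TE) * exp(i 2 pi f_B0 TE)
    fat_shift_hz is an assumed single-peak fat frequency offset.
    """
    te = np.asarray(te, dtype=float)
    fat_phase = np.exp(2j * np.pi * fat_shift_hz * te)
    decay = np.exp(-r2s * te) * np.exp(2j * np.pi * b0_hz * te)
    return (water + fat * fat_phase) * decay

def fat_fraction(water, fat):
    """Magnitude-based fat fraction |F| / (|W| + |F|)."""
    return np.abs(fat) / (np.abs(water) + np.abs(fat))
```

A model-based reconstruction of this kind fits the parameter maps so that the predicted multi-coil, multi-echo k-space matches the measured radial spokes, instead of first reconstructing one image per echo.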

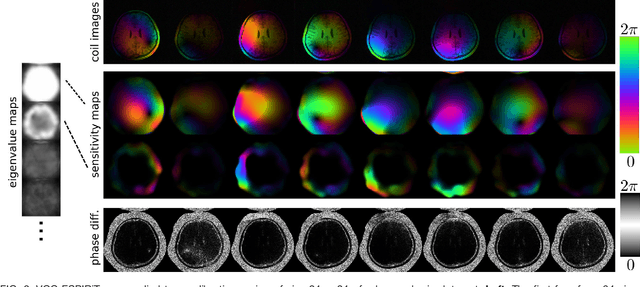

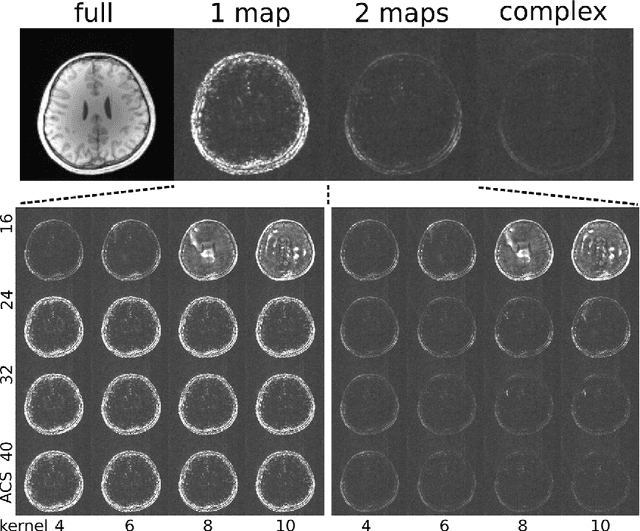

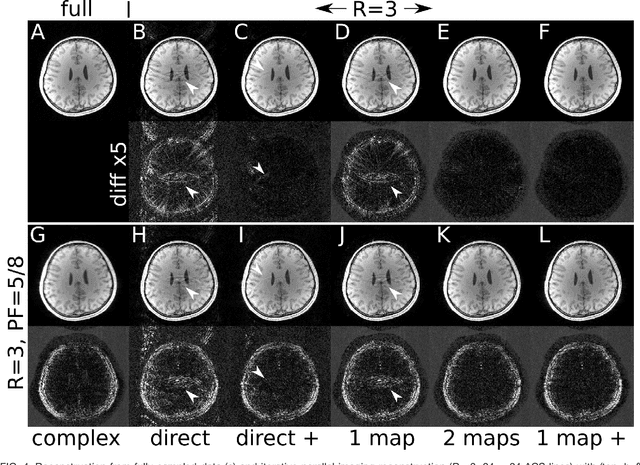

Estimating Absolute-Phase Maps Using ESPIRiT and Virtual Conjugate Coils

Jan 05, 2016

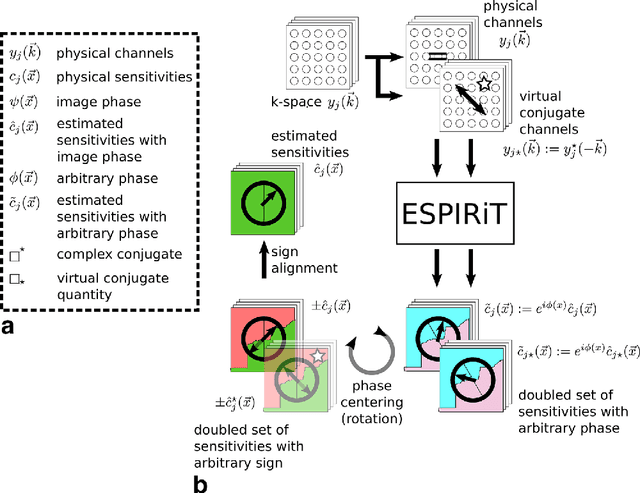

Abstract:Purpose: To develop an ESPIRiT-based method to estimate coil sensitivities with image phase as a building block for efficient and robust image reconstruction with phase constraints. Theory and Methods: ESPIRiT is a new framework for calibration of the coil sensitivities and reconstruction in parallel Magnetic Resonance Imaging (MRI). Applying ESPIRiT to a combined set of physical and virtual conjugate coils (VCC-ESPIRiT) implicitly exploits conjugate symmetry in k-space similar to VCC-GRAPPA. Based on this method, a new post-processing step is proposed for the explicit computation of coil sensitivities that include the absolute phase of the image. The accuracy of the computed maps is directly validated using a test based on projection onto fully sampled coil images and also indirectly in phase-constrained parallel-imaging reconstructions. Results: The proposed method can estimate accurate sensitivities which include low-resolution image phase. In case of high-frequency phase variations, VCC-ESPIRiT yields an additional set of maps that indicates the existence of a high-frequency phase component. Taking this additional set of maps into account can improve the robustness of phase-constrained parallel imaging. Conclusion: The extended VCC-ESPIRiT is a useful tool for phase-constrained imaging.
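
The construction of virtual conjugate coils can be sketched in a few lines: each virtual channel is the complex conjugate of a physical channel's k-space evaluated at the mirrored position, which corresponds to the complex-conjugate coil image. The helper `virtual_conjugate_coils` is a hypothetical name, and the sketch assumes k-space stored in the `np.fft` layout with the DC sample at index `[0, 0]`; it does not include the ESPIRiT calibration itself.

```python
import numpy as np

def virtual_conjugate_coils(ksp):
    """Append virtual conjugate coils to multi-coil k-space.

    ksp: complex array of shape (ncoils, ny, nx), DC sample at index [0, 0]
         (np.fft convention, an assumption of this sketch).
    Returns an array of shape (2 * ncoils, ny, nx).
    """
    axes = (-2, -1)
    # Index i -> (-i) mod N along each axis, i.e. k -> -k.
    neg_k = np.roll(np.flip(ksp, axis=axes), shift=1, axis=axes)
    return np.concatenate([ksp, np.conj(neg_k)], axis=0)

# Check on random data: virtual channels are the conjugate coil images.
rng = np.random.default_rng(0)
imgs = rng.standard_normal((2, 6, 5)) + 1j * rng.standard_normal((2, 6, 5))
ext = virtual_conjugate_coils(np.fft.fft2(imgs))
print(np.allclose(np.fft.ifft2(ext[2:]), np.conj(imgs)))
```

Because the virtual channels carry the conjugate image phase, sensitivities calibrated on the extended coil set contain information about the image phase, which is the property the abstract builds on.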

* 15 pages, 5 figures
