Martin Azkarate

Field Assessment of Force Torque Sensors for Planetary Rover Navigation

Nov 07, 2024

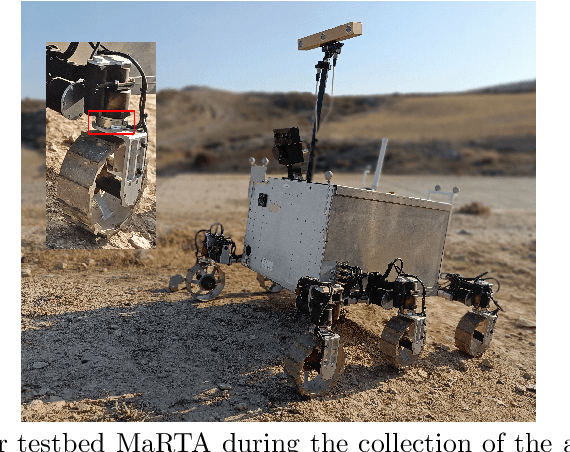

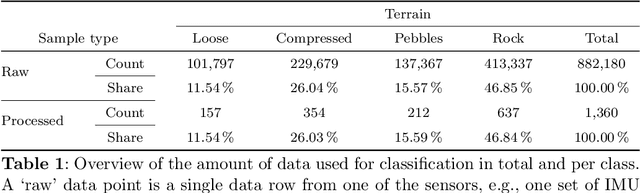

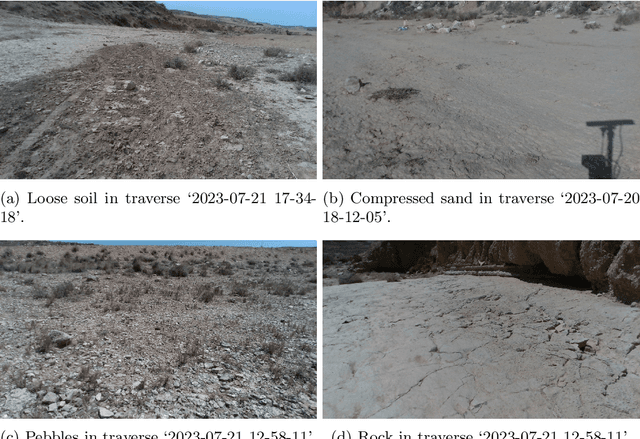

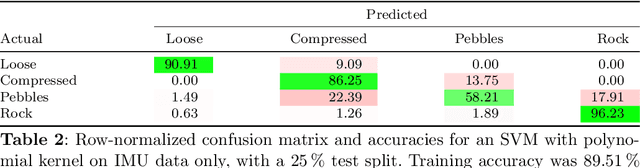

Abstract: Proprioceptive sensors on planetary rovers serve for state estimation and for understanding terrain and locomotion performance. While inertial measurement units (IMUs) are widely used for this purpose, force-torque sensors are less explored for planetary navigation despite their potential to directly measure interaction forces and provide insights into traction performance. This paper presents an evaluation of the performance and use cases of force-torque sensors based on data collected from a six-wheeled rover during tests over varying terrains, speeds, and slopes. We discuss challenges, such as sensor signal reliability and terrain response accuracy, and identify opportunities regarding the use of these sensors. The data is openly accessible and includes force-torque measurements from each of the six wheel assemblies as well as IMU data from within the rover chassis. This paper aims to inform the design of future studies and rover upgrades, particularly in sensor integration and control algorithms, to improve navigation capabilities.

Solving Occlusion in Terrain Mapping with Neural Networks

Sep 15, 2021

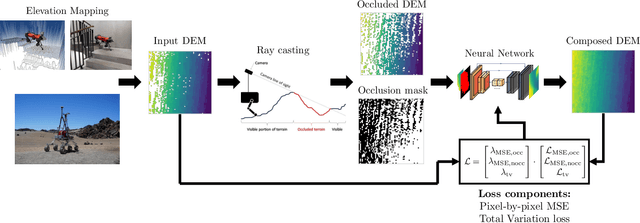

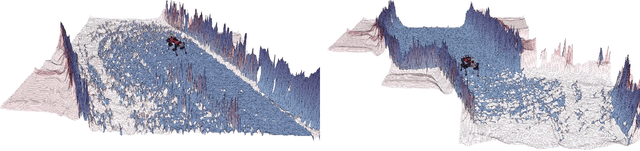

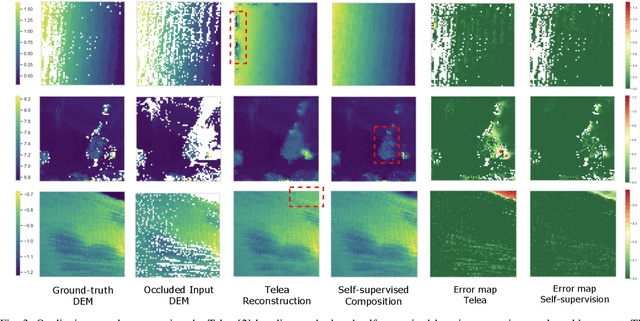

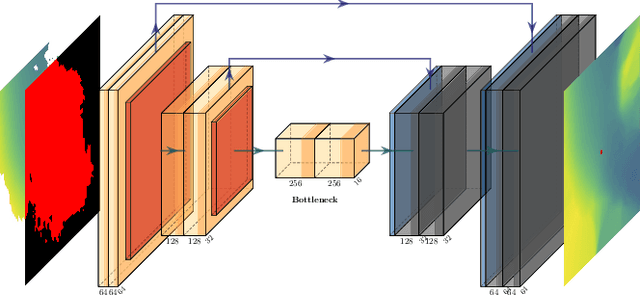

Abstract: Accurate and complete terrain maps enhance the awareness of autonomous robots and enable safe and optimal path planning. Rocks and topography often create occlusions and lead to missing elevation information in the Digital Elevation Map (DEM). Currently, autonomous mobile robots mostly rely on traditional inpainting techniques based on diffusion or patch-matching to fill in incomplete DEMs. These methods cannot leverage the high-level terrain characteristics and the geometric constraints of line of sight that humans use intuitively to predict occluded areas. We propose to use neural networks to reconstruct the occluded areas in DEMs. We introduce a self-supervised learning approach capable of training on real-world data without a need for ground-truth information. We accomplish this by using ray casting to add artificial occlusion to the incomplete elevation maps constructed on a real robot. We first evaluate a supervised learning approach on synthetic data for which we have the full ground-truth available and subsequently move to several real-world datasets. These real-world datasets were recorded during autonomous exploration of both structured and unstructured terrain with a legged robot, and additionally in a planetary scenario on Lunar analogue terrain. We report a significant improvement compared to the Telea and Navier-Stokes baseline methods both on synthetic terrain and for the real-world datasets. Our neural network is able to run in real-time on both CPU and GPU with suitable sampling rates for autonomous ground robots.
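
The self-supervised idea in this abstract (hide cells that are actually known, then ask the network to recover them) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `occlusion_mask` and `make_training_pair`, the virtual sensor placement, and the simple sampled line-of-sight test are all assumptions made for illustration, and missing cells are assumed to be stored as NaN.

```python
import numpy as np

def occlusion_mask(dem, sensor_rc, sensor_height, samples_per_cell=2):
    """Mark valid cells that are hidden from a virtual sensor by line of sight.

    dem: 2D array of elevations, NaN where no data exists.
    sensor_rc: (row, col) of the hypothetical sensor; that cell must be valid.
    sensor_height: sensor height above the terrain at sensor_rc.
    """
    r0, c0 = sensor_rc
    z0 = dem[r0, c0] + sensor_height
    occluded = np.zeros(dem.shape, dtype=bool)
    for r in range(dem.shape[0]):
        for c in range(dem.shape[1]):
            if np.isnan(dem[r, c]) or (r, c) == (r0, c0):
                continue
            # Sample points along the straight ray from the sensor to the cell.
            n = max(abs(r - r0), abs(c - c0)) * samples_per_cell
            for i in range(1, n):
                t = i / n
                rr = int(round(r0 + t * (r - r0)))
                cc = int(round(c0 + t * (c - c0)))
                if (rr, cc) in ((r, c), (r0, c0)):
                    continue
                ray_z = z0 + t * (dem[r, c] - z0)  # ray height above this sample
                if not np.isnan(dem[rr, cc]) and dem[rr, cc] > ray_z:
                    occluded[r, c] = True  # terrain blocks the ray
                    break
    return occluded

def make_training_pair(dem, sensor_rc, sensor_height):
    """Build (network input, target, loss mask) from one incomplete DEM."""
    mask = occlusion_mask(dem, sensor_rc, sensor_height)
    net_input = dem.copy()
    net_input[mask] = np.nan  # artificially remove cells that were observed
    return net_input, dem, mask
```

In this sketch the reconstruction loss would be evaluated only where `mask` is true, since those are the cells whose true elevation is known but withheld from the input. No ground-truth map beyond the robot's own incomplete DEM is needed.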

A GNC Architecture for Planetary Rovers with Autonomous Navigation Capabilities

Nov 22, 2019

Abstract: This paper proposes a Guidance, Navigation, and Control (GNC) architecture for planetary rovers targeting the conditions of upcoming Mars exploration missions such as Mars 2020 and the Sample Fetching Rover (SFR). The navigation requirements of these missions demand a control architecture featuring autonomous capabilities to achieve a fast and long traverse. The proposed solution presents a two-level architecture where the efficient navigation (low) level is always active and the full navigation (upper) level is enabled according to the difficulty of the terrain. The first level is an efficient implementation of the basic functionalities for autonomous navigation based on hazard detection, local path replanning, and trajectory control with visual odometry. The second level implements an adaptive SLAM algorithm that improves the relative localization, evaluates the traversability of the terrain ahead for more optimal path planning, and performs global (absolute) localization that corrects the pose drift during longer traverses. The architecture provides a solution for long-range, low-supervision, and fast planetary exploration. Both navigation levels have been validated in planetary analogue field test campaigns.
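
The two-level switching described in this abstract can be sketched as follows. The scalar `terrain_difficulty` score, the `full_nav_threshold` value, and the function name `active_tasks` are hypothetical; the abstract does not say how terrain difficulty is measured or how the upper level is triggered.

```python
# Functions listed in the abstract for each navigation level.
EFFICIENT_TASKS = (
    "hazard_detection",
    "local_path_replanning",
    "trajectory_control_with_visual_odometry",
)
FULL_TASKS = (
    "adaptive_slam",
    "traversability_evaluation",
    "global_localization",
)

def active_tasks(terrain_difficulty, full_nav_threshold=0.5):
    """Return the tasks to run for a given (assumed) difficulty score in [0, 1]."""
    tasks = list(EFFICIENT_TASKS)  # the low level is always active
    if terrain_difficulty >= full_nav_threshold:
        tasks.extend(FULL_TASKS)  # the upper level is enabled only on hard terrain
    return tasks
```

For example, `active_tasks(0.1)` would return only the three efficient-level tasks, while `active_tasks(0.9)` would return all six.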
