Mark Robson

RAMP: A Benchmark for Evaluating Robotic Assembly Manipulation and Planning

May 16, 2023

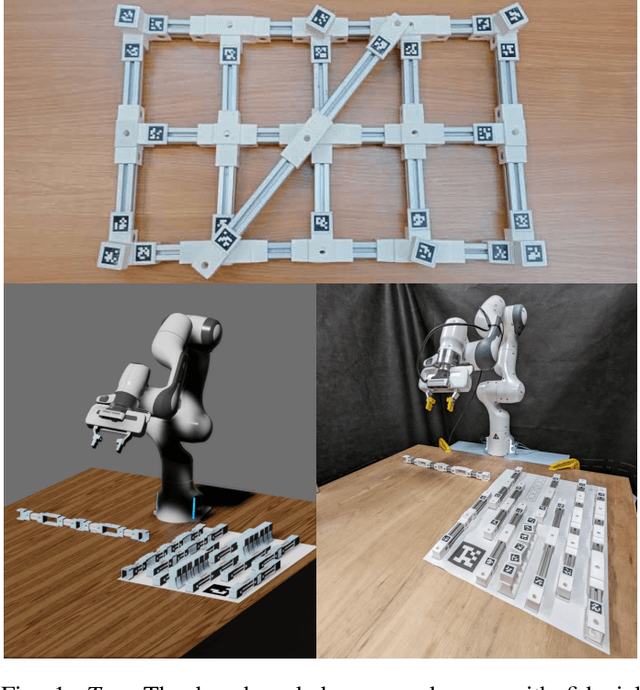

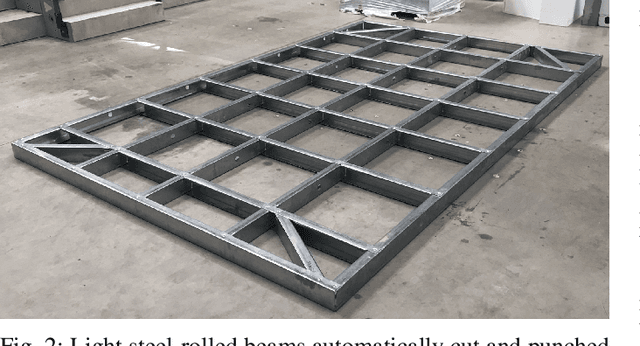

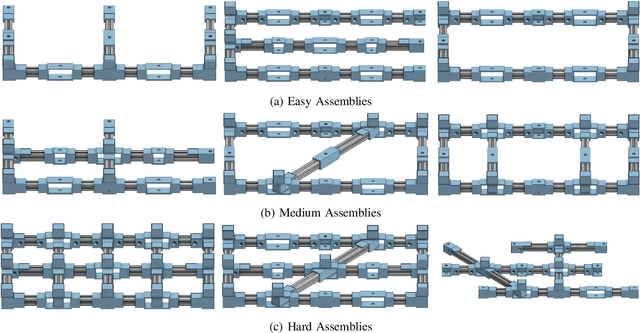

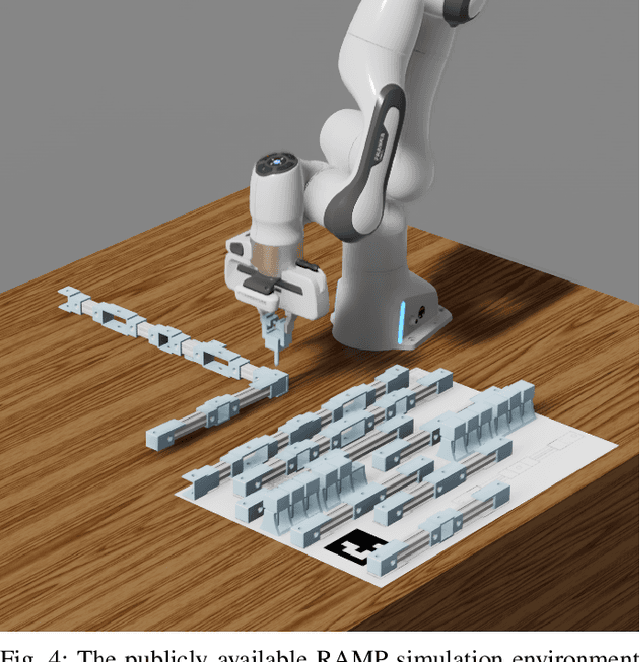

Abstract: We introduce RAMP, an open-source robotics benchmark inspired by real-world industrial assembly tasks. RAMP consists of beams that a robot must assemble into specified goal configurations using pegs as fasteners. As such, it assesses planning and execution capabilities, and poses challenges in perception, reasoning, manipulation, diagnostics, fault recovery and goal parsing. RAMP has been designed to be accessible and extensible. Parts are either 3D printed or otherwise constructed from materials that are readily obtainable. The part design and detailed instructions are publicly available. In order to broaden community engagement, RAMP incorporates fixtures such as AprilTags, which enable researchers to focus on individual sub-tasks of the assembly challenge if desired. We provide a full digital twin as well as rudimentary baselines to enable rapid progress. Our vision is for RAMP to form the substrate for a community-driven endeavour that evolves as capability matures.

Generating Task-specific Robotic Grasps

Mar 20, 2022

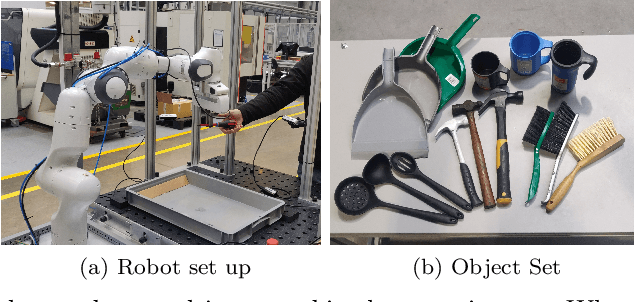

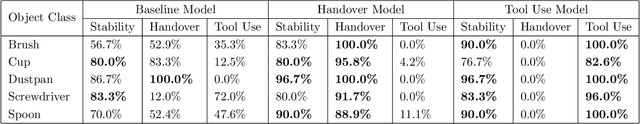

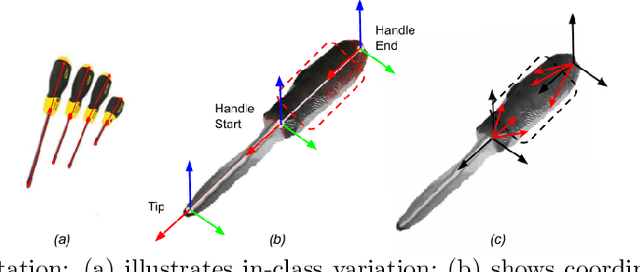

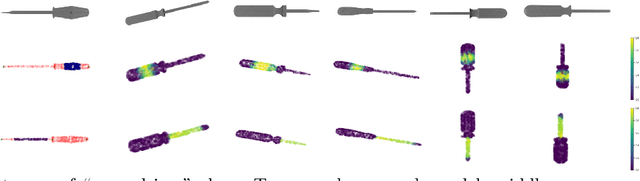

Abstract: This paper describes a method for generating robot grasps by jointly considering stability and other task- and object-specific constraints. We introduce a three-level representation that is acquired for each object class from a small number of exemplars of objects, tasks, and relevant grasps. The representation encodes task-specific knowledge for each object class as a relationship between a keypoint skeleton and suitable grasp points that is preserved despite intra-class variations in scale and orientation. The learned models are queried at run time by a simple sampling-based method to guide the generation of grasps that balance task and stability constraints. We ground and evaluate our method in the context of a Franka Emika Panda robot assisting a human in picking tabletop objects for which the robot does not have prior CAD models. Experimental results demonstrate that, in comparison with a baseline method that focuses only on stability, our method is able to provide suitable grasps for different tasks.
