Mark Nicholas Finean

Constrained Skill Discovery: Quadruped Locomotion with Unsupervised Reinforcement Learning

Oct 10, 2024

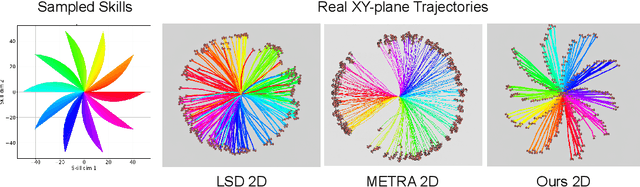

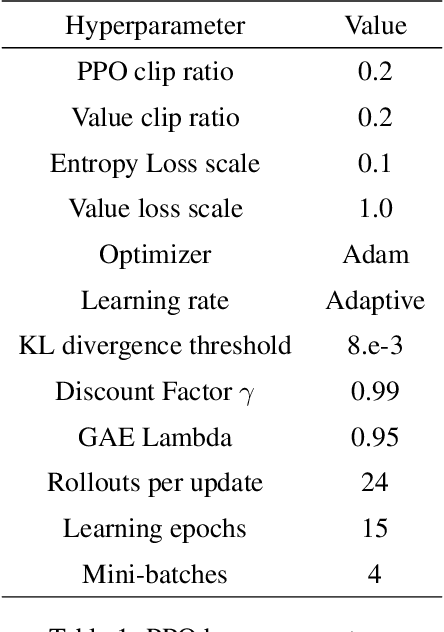

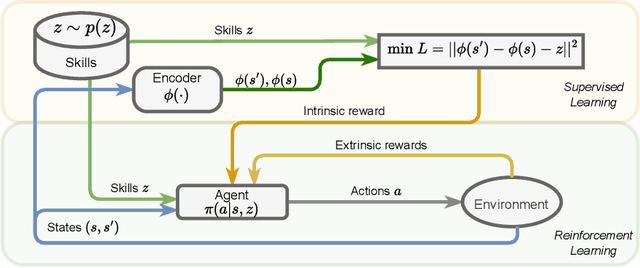

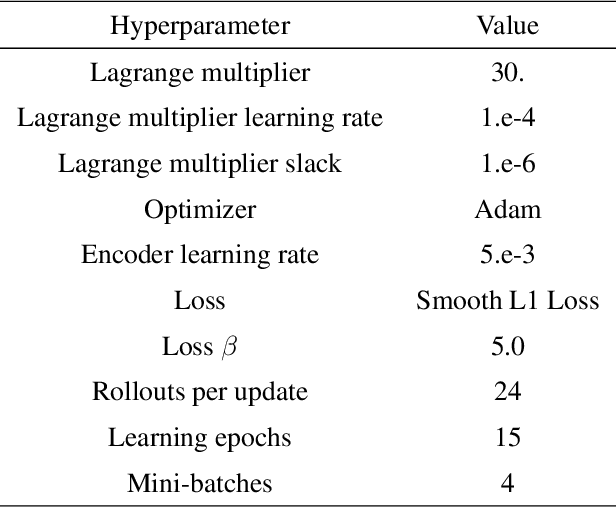

Abstract: Representation learning and unsupervised skill discovery can allow robots to acquire diverse and reusable behaviors without the need for task-specific rewards. In this work, we use unsupervised reinforcement learning to learn a latent representation by maximizing the mutual information between skills and states subject to a distance constraint. Our method improves upon prior constrained skill discovery methods by replacing the latent transition maximization with a norm-matching objective. This not only results in a much richer state space coverage compared to baseline methods, but also allows the robot to learn more stable and easily controllable locomotive behaviors. We successfully deploy the learned policy on a real ANYmal quadruped robot and demonstrate that the robot can accurately reach arbitrary points of the Cartesian state space in a zero-shot manner, using only intrinsic skill discovery and standard regularization rewards.

Motion Planning in Dynamic Environments Using Context-Aware Human Trajectory Prediction

Jan 13, 2022

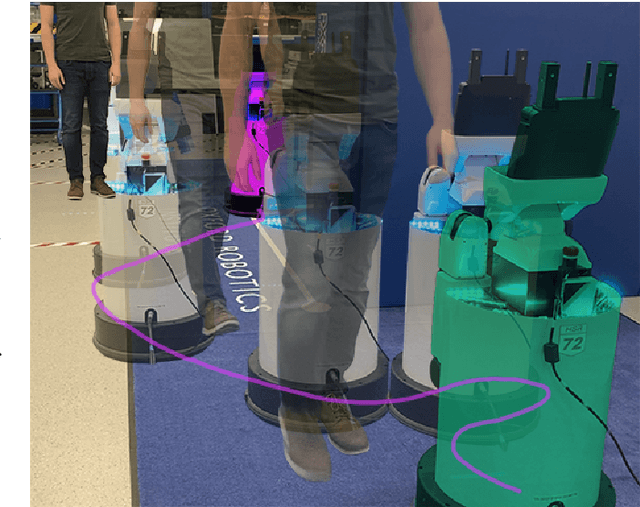

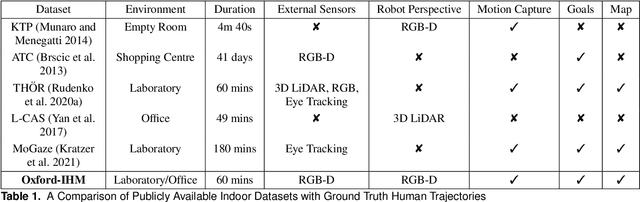

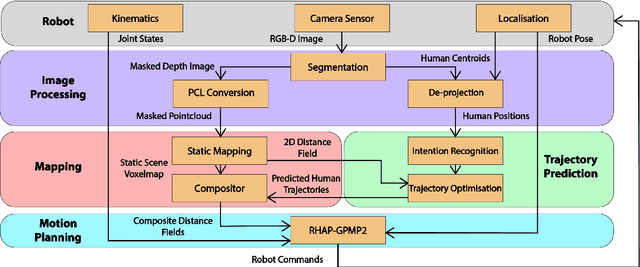

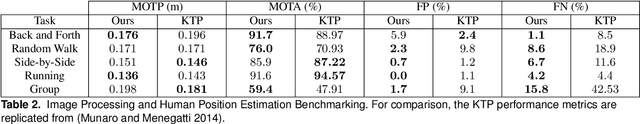

Abstract: Over the years, the separate fields of motion planning, mapping, and human trajectory prediction have advanced considerably. However, the literature is still sparse in providing practical frameworks that enable mobile manipulators to perform whole-body movements and account for the predicted motion of moving obstacles. Previous optimisation-based motion planning approaches that use distance fields have suffered from the high computational cost required to update the environment representation. We demonstrate that GPU-accelerated predicted composite distance fields significantly reduce the computation time compared to calculating distance fields from scratch. We integrate this technique with a complete motion planning and perception framework that accounts for the predicted motion of humans in dynamic environments, enabling reactive and pre-emptive motion planning that incorporates predicted motions. To achieve this, we propose and implement a novel human trajectory prediction method that combines intention recognition with trajectory optimisation-based motion planning. We validate our resultant framework on a real-world Toyota Human Support Robot (HSR) using live RGB-D sensor data from the onboard camera. In addition to providing analysis on a publicly available dataset, we release the Oxford Indoor Human Motion (Oxford-IHM) dataset and demonstrate state-of-the-art performance in human trajectory prediction. The Oxford-IHM dataset is a human trajectory prediction dataset in which people walk between regions of interest in an indoor environment. Both static and robot-mounted RGB-D cameras observe the people while tracked with a motion-capture system.

Where Should I Look? Optimised Gaze Control for Whole-Body Collision Avoidance in Dynamic Environments

Sep 10, 2021

Abstract: As robots operate in increasingly complex and dynamic environments, fast motion re-planning has become a widely explored area of research. In a real-world deployment, we often lack the ability to fully observe the environment at all times, giving rise to the challenge of determining how to best perceive the environment given a continuously updated motion plan. We provide the first investigation into a 'smart' controller for gaze control with the objective of providing effective perception of the environment for obstacle avoidance and motion planning in dynamic and unknown environments. We detail the novel problem of determining the best head camera behaviour for mobile robots when constrained by a trajectory. Furthermore, we propose a greedy optimisation-based solution that uses a combination of voxelised rewards and motion primitives. We demonstrate that our method outperforms the benchmark methods in 2D and 3D environments, both in its ability to explore the local surroundings and in its success rate at finding collision-free trajectories. Our method is shown to provide 7.4x better map exploration while consistently achieving a higher success rate for generating collision-free trajectories. We verify our findings on a physical Toyota Human Support Robot (HSR) using a GPU-accelerated perception framework.
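To make the idea of a greedy choice over motion primitives concrete, here is a minimal 2D sketch. It is an illustration under stated assumptions, not the paper's implementation: the names `visible_mask` and `greedy_gaze_step`, the wedge-shaped field of view, the reward grid, and the set of pan offsets are all hypothetical, and occlusion, the robot's trajectory, and time-varying rewards are ignored.

```python
import numpy as np

def visible_mask(cells, cam_pos, pan, fov, max_range):
    """Boolean mask of grid cells inside a wedge-shaped field of view (no occlusion)."""
    offsets = cells - cam_pos
    dist = np.linalg.norm(offsets, axis=-1)
    bearing = np.arctan2(offsets[..., 1], offsets[..., 0])
    # Wrap the angular difference into [-pi, pi] before comparing to the half-angle.
    diff = np.arctan2(np.sin(bearing - pan), np.cos(bearing - pan))
    return (dist <= max_range) & (np.abs(diff) <= fov / 2.0)

def greedy_gaze_step(rewards, cells, cam_pos, current_pan, primitives,
                     fov=np.pi / 3, max_range=5.0):
    """Pick the pan offset (hypothetical motion primitive) with the largest summed reward."""
    best_pan, best_score = current_pan, -np.inf
    for delta in primitives:
        pan = current_pan + delta
        score = rewards[visible_mask(cells, cam_pos, pan, fov, max_range)].sum()
        if score > best_score:
            best_pan, best_score = pan, score
    return best_pan, best_score

# Toy 7x7 grid centred on the camera, with one rewarding cell straight "up" (+y).
coords = np.arange(-3.0, 4.0)
cells = np.stack(np.meshgrid(coords, coords, indexing="ij"), axis=-1)
rewards = np.zeros(cells.shape[:2])
rewards[3, 5] = 1.0  # the cell at position (x=0, y=2)

best_pan, best_score = greedy_gaze_step(
    rewards, cells, cam_pos=np.array([0.0, 0.0]), current_pan=0.0,
    primitives=[-np.pi / 2, 0.0, np.pi / 2],
)
```

In this toy setup only the primitive that turns the camera towards +y sees the rewarding cell, so the greedy step selects it. A one-step greedy rule like this is cheap to evaluate at every re-planning cycle, at the cost of not reasoning about longer gaze sequences.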

Simultaneous Scene Reconstruction and Whole-Body Motion Planning for Safe Operation in Dynamic Environments

Mar 05, 2021

Abstract: Recent work has demonstrated real-time mapping and reconstruction from dense perception, while motion planning based on distance fields has been shown to achieve fast, collision-free motion synthesis with good convergence properties. However, demonstration of a fully integrated system that can safely re-plan in unknown environments, in the presence of static and dynamic obstacles, has remained an open challenge. In this work, we first study the impact that signed and unsigned distance fields have on optimisation convergence, and the resultant error cost in trajectory optimisation problems in 2D path planning, arm manipulator motion planning, and whole-body loco-manipulation planning. We further analyse the performance of three state-of-the-art approaches to generating distance fields (Voxblox, Fiesta, and GPU-Voxels) for use in real-time environment reconstruction. Finally, we use our findings to construct a practical hybrid mapping and motion planning system which uses GPU-Voxels and GPMP2 to perform receding-horizon whole-body motion planning that can smoothly avoid moving obstacles in 3D space using live sensor data. Our results are validated in simulation and on a real-world Toyota Human Support Robot (HSR).

Predicted Composite Signed-Distance Fields for Real-Time Motion Planning in Dynamic Environments

Aug 03, 2020

Abstract: We present a novel framework for motion planning in dynamic environments that accounts for the predicted trajectories of moving objects in the scene. We explore the use of composite signed-distance fields in motion planning and detail how they can be used to generate signed-distance fields (SDFs) in real-time to incorporate predicted obstacle motions. We benchmark our approach of using composite SDFs against performing exact SDF calculations on the workspace occupancy grid. Our proposed technique generates predictions substantially faster and typically exhibits an 81-97% reduction in time for subsequent predictions. We integrate our framework with GPMP2 to demonstrate a full implementation of our approach in real-time, enabling a 7-DoF Panda arm to smoothly avoid a moving robot.
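The general idea behind composing signed-distance fields can be sketched as follows. This is a minimal illustration under assumptions, not the authors' implementation: it assumes a single spherical obstacle moving at constant velocity, and the function names (`sphere_sdf`, `composite_sdf`, `predicted_composite_sdfs`) are hypothetical. It relies on the standard fact that the SDF of a union of objects is the pointwise minimum of the individual SDFs (exact outside the objects), so the static field is computed once and only the cheap obstacle term changes per predicted time step.

```python
import numpy as np

def sphere_sdf(points, centre, radius):
    """Signed distance from each point to a sphere (negative inside)."""
    return np.linalg.norm(points - centre, axis=-1) - radius

def composite_sdf(static_sdf, points, centre, radius):
    """Combine a precomputed static SDF with a moving obstacle via pointwise minimum."""
    return np.minimum(static_sdf, sphere_sdf(points, centre, radius))

def predicted_composite_sdfs(static_sdf, points, centre, velocity, radius, times):
    """One composite SDF per predicted time, assuming constant-velocity motion."""
    return [
        composite_sdf(static_sdf, points, centre + velocity * t, radius)
        for t in times
    ]

# Toy 2D workspace: a 5x5 grid with a wall along x = 0, so the static SDF equals x.
xs = np.linspace(0.0, 4.0, 5)
ys = np.linspace(0.0, 4.0, 5)
grid = np.stack(np.meshgrid(xs, ys, indexing="ij"), axis=-1)  # shape (5, 5, 2)
static_sdf = grid[..., 0].copy()

# Obstacle of radius 0.5 starting at (4, 2) and moving towards the wall at 1 unit/s.
fields = predicted_composite_sdfs(
    static_sdf, grid,
    centre=np.array([4.0, 2.0]), velocity=np.array([-1.0, 0.0]),
    radius=0.5, times=[0.0, 1.0, 2.0],
)
```

Each entry of `fields` can then be queried by a planner at the corresponding time step. Because the static field is reused, the per-prediction cost is one obstacle SDF evaluation plus a minimum, rather than a full distance transform of the occupancy grid.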
