Mark Koren

Finding Failures in High-Fidelity Simulation using Adaptive Stress Testing and the Backward Algorithm

Jul 27, 2021

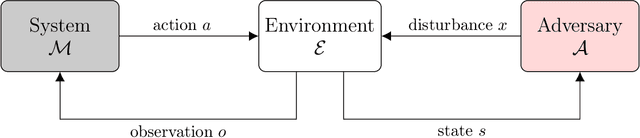

Abstract: Validating the safety of autonomous systems generally requires the use of high-fidelity simulators that adequately capture the variability of real-world scenarios. However, it is generally not feasible to exhaustively search the space of simulation scenarios for failures. Adaptive stress testing (AST) is a method that uses reinforcement learning to find the most likely failure of a system. AST with a deep reinforcement learning solver has been shown to be effective in finding failures across a range of different systems. This approach generally involves running many simulations, which can be very expensive when using a high-fidelity simulator. To improve efficiency, we present a method that first finds failures in a low-fidelity simulator. It then uses the backward algorithm, which trains a deep neural network policy using a single expert demonstration, to adapt the low-fidelity failures to high-fidelity. We have created a series of autonomous vehicle validation case studies that represent some of the ways low-fidelity and high-fidelity simulators can differ, such as time discretization. We demonstrate in a variety of case studies that this new AST approach is able to find failures with significantly fewer high-fidelity simulation steps than are needed when just running AST directly in high-fidelity. As a proof of concept, we also demonstrate AST on NVIDIA's DriveSim simulator, an industry state-of-the-art high-fidelity simulator for finding failures in autonomous vehicles.

A Survey of Algorithms for Black-Box Safety Validation

May 06, 2020

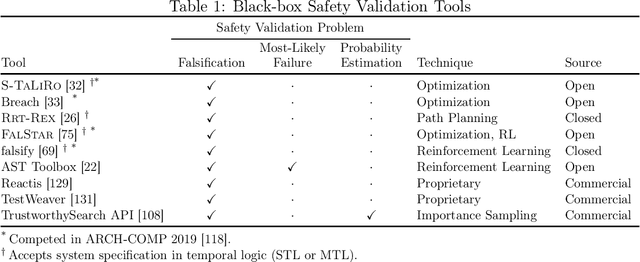

Abstract: Autonomous and semi-autonomous systems for safety-critical applications require rigorous testing before deployment. Due to the complexity of these systems, formal verification may be impossible and real-world testing may be dangerous during development. Therefore, simulation-based techniques have been developed that treat the system under test as a black box during testing. Safety validation tasks include finding disturbances to the system that cause it to fail (falsification), finding the most-likely failure, and estimating the probability that the system fails. Motivated by the prevalence of safety-critical artificial intelligence, this work provides a survey of state-of-the-art safety validation techniques with a focus on applied algorithms and their modifications for the safety validation problem. We present and discuss algorithms in the domains of optimization, path planning, reinforcement learning, and importance sampling. Problem decomposition techniques are presented to help scale algorithms to large state spaces, and a brief overview of safety-critical applications is given, including autonomous vehicles and aircraft collision avoidance systems. Finally, we present a survey of existing academic and commercially available safety validation tools.

The Adaptive Stress Testing Formulation

Apr 08, 2020

Abstract: Validation is a key challenge in the search for safe autonomy. Simulations are often either too simple to provide robust validation, or too complex to tractably compute. Therefore, approximate validation methods are needed to tractably find failures without unsafe simplifications. This paper presents the theory behind one such black-box approach: adaptive stress testing (AST). We also provide three examples of validation problems formulated to work with AST.

Adaptive Stress Testing without Domain Heuristics using Go-Explore

Apr 08, 2020

Abstract: Recently, reinforcement learning (RL) has been used as a tool for finding failures in autonomous systems. During execution, the RL agents often rely on some domain-specific heuristic reward to guide them towards finding failures, but constructing such a heuristic may be difficult or infeasible. Without a heuristic, the agent may only receive rewards at the time of failure, or even rewards that guide it away from failures. For example, some approaches give rewards for taking more-likely actions, because we want to find more-likely failures. However, the agent may then learn to only take likely actions, and may not be able to find a failure at all. Consequently, the problem becomes a hard-exploration problem, where rewards do not aid exploration. A new algorithm, Go-Explore (GE), has recently set new records on benchmarks from the hard-exploration field. We apply GE to adaptive stress testing (AST), one example of an RL-based falsification approach that provides a way to search for the most-likely failure scenario. We simulate a scenario where an autonomous vehicle drives while a pedestrian is crossing the road. We demonstrate that GE is able to find failures without domain-specific heuristics, such as the distance between the car and the pedestrian, on scenarios that other RL techniques are unable to solve. Furthermore, inspired by the robustification phase of GE, we demonstrate that the backward algorithm (BA) improves the failures found by other RL techniques.
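The reward structure this abstract describes can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the Gaussian disturbance model, and the constants `no_failure_penalty` and `distance_weight` are all assumptions chosen for illustration. Each non-terminal step is rewarded with the log-likelihood of the disturbance (so likely actions score higher), a failure ends the episode with no penalty, and an episode that ends without failure is penalized, optionally by a domain heuristic such as the remaining car-pedestrian distance.

```python
import math


def gaussian_log_prob(x, mean=0.0, std=1.0):
    """Log-density of a scalar Gaussian disturbance (assumed noise model)."""
    z = (x - mean) / std
    return -0.5 * z * z - math.log(std * math.sqrt(2.0 * math.pi))


def ast_reward(disturbance, is_terminal, is_failure, miss_distance,
               use_heuristic=True, no_failure_penalty=1000.0,
               distance_weight=10.0):
    """Hypothetical AST-style reward; all constants are illustrative.

    disturbance   -- the environment action taken at this step
    is_terminal   -- True if the episode ended at this step
    is_failure    -- True if the episode ended in a failure (e.g. collision)
    miss_distance -- car-pedestrian distance at the end of the episode
    """
    if is_terminal and is_failure:
        # Failure found: no penalty.
        return 0.0
    if is_terminal:
        # Episode ended without failure: large fixed penalty, plus the
        # optional domain heuristic that rewards getting closer.
        penalty = no_failure_penalty
        if use_heuristic:
            penalty += distance_weight * miss_distance
        return -penalty
    # Ordinary step: more-likely disturbances earn higher reward.
    return gaussian_log_prob(disturbance)
```

With `use_heuristic=False`, every non-failing episode receives the same terminal penalty, so the only learning signal before a failure is found is the per-step likelihood term. That term favors likely actions regardless of whether they lead toward a failure, which is the hard-exploration setting the abstract refers to.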

Efficient Autonomy Validation in Simulation with Adaptive Stress Testing

Jul 16, 2019

Abstract: During the development of autonomous systems such as driverless cars, it is important to characterize the scenarios that are most likely to result in failure. Adaptive Stress Testing (AST) provides a way to search for the most-likely failure scenario as a Markov decision process (MDP). Our previous work used a deep reinforcement learning (DRL) solver to identify likely failure scenarios. However, the solver's use of a feed-forward neural network with a discretized space of possible initial conditions poses two major problems. First, the system is not treated as a black box, in that it requires analyzing the internal state of the system, which leads to considerable implementation complexities. Second, in order to simulate realistic settings, a new instance of the solver needs to be run for each initial condition. Running a new solver for each initial condition not only significantly increases the computational complexity, but also disregards the underlying relationship between similar initial conditions. We provide a solution to both problems by employing a recurrent neural network that takes a set of initial conditions from a continuous space as input. This approach enables robust and efficient detection of failures because the solution generalizes across the entire space of initial conditions. By simulating an instance where an autonomous car drives while a pedestrian is crossing a road, we demonstrate the solver is now capable of finding solutions for problems that would have previously been intractable.

Adaptive Stress Testing for Autonomous Vehicles

Feb 05, 2019

Abstract: This paper presents a method for testing the decision-making systems of autonomous vehicles. Our approach involves perturbing stochastic elements in the vehicle's environment until the vehicle is involved in a collision. Instead of applying direct Monte Carlo sampling to find collision scenarios, we formulate the problem as a Markov decision process and use reinforcement learning algorithms to find the most likely failure scenarios. This paper presents Monte Carlo Tree Search (MCTS) and Deep Reinforcement Learning (DRL) solutions that can scale to large environments. We show that DRL can find more likely failure scenarios than MCTS with fewer calls to the simulator. A simulation scenario involving a vehicle approaching a crosswalk is used to validate the framework. Our proposed approach is very general and can be easily applied to other scenarios given the appropriate models of the vehicle and the environment.
