Mark D Boyer

Neural Dynamical Systems: Balancing Structure and Flexibility in Physical Prediction

Jun 23, 2020

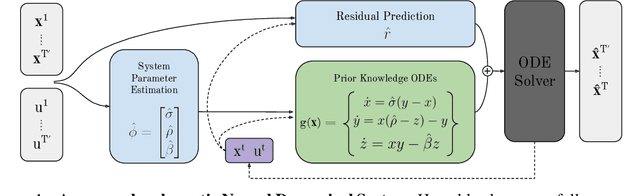

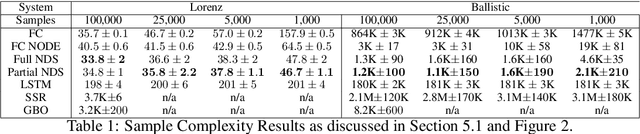

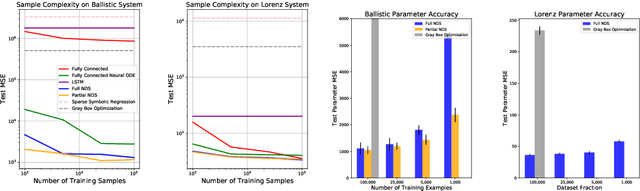

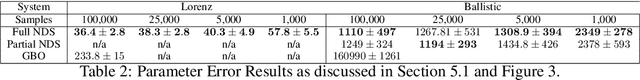

Abstract: We introduce Neural Dynamical Systems (NDS), a method of learning dynamical models in various gray-box settings that incorporates prior knowledge in the form of systems of ordinary differential equations. NDS uses neural networks to estimate free parameters of the system and to predict residual terms, then numerically integrates over time to predict future states. A key insight is that many real dynamical systems of interest are hard to model because the dynamics may vary across rollouts. We mitigate this problem by taking a trajectory of prior states as the input to NDS and training it to re-estimate system parameters using the preceding trajectory. We find that NDS learns dynamics with higher accuracy and fewer samples than a variety of deep learning methods that do not incorporate the prior knowledge and methods from the system identification literature that do. We demonstrate these advantages first on synthetic dynamical systems and then on real data captured from deuterium shots from a nuclear fusion reactor.
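The three steps named in the abstract (estimate free parameters from the preceding trajectory, add a learned residual to the known ODE, integrate forward in time) can be illustrated with a minimal sketch. This is not the authors' implementation: the decay ODE, the forward Euler integrator, the network sizes, and every function name below are assumptions chosen for illustration, and the networks are left untrained, so only the structure of the forward pass is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(n_in, n_hidden, n_out):
    """Random weights for a tiny two-layer perceptron (hypothetical sizes)."""
    return (rng.normal(0, 0.1, (n_in, n_hidden)), np.zeros(n_hidden),
            rng.normal(0, 0.1, (n_hidden, n_out)), np.zeros(n_out))

def mlp(params, x):
    """Forward pass: tanh hidden layer followed by a linear output layer."""
    w1, b1, w2, b2 = params
    return np.tanh(x @ w1 + b1) @ w2 + b2

def known_ode(x, k):
    """Assumed prior knowledge: exponential decay, dx/dt = -k * x."""
    return -k * x

def nds_rollout(history, param_net, resid_net, dt, n_steps):
    """Predict n_steps future states from a trajectory of prior states."""
    # Step 1: estimate the free parameter k from the preceding trajectory.
    # A softplus keeps the estimate positive (an assumption of this sketch).
    k = np.log1p(np.exp(mlp(param_net, history)))[0]
    x = history[-1]
    preds = []
    for _ in range(n_steps):
        # Step 2: known dynamics plus a learned residual term.
        dx = known_ode(x, k) + mlp(resid_net, np.array([x]))[0]
        # Step 3: numerical integration, here one forward Euler step.
        x = x + dt * dx
        preds.append(x)
    return k, np.array(preds)

# Synthetic history of 8 states sampled from x(t) = exp(-0.5 t) at dt = 0.1.
history = np.exp(-0.5 * 0.1 * np.arange(8))
param_net = init_mlp(8, 16, 1)
resid_net = init_mlp(1, 16, 1)
k_hat, preds = nds_rollout(history, param_net, resid_net, dt=0.1, n_steps=20)
```

In a trained version of this sketch, both networks would be fit so that the rollout matches observed future states. The abstract does not describe the training procedure, so it is omitted here.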

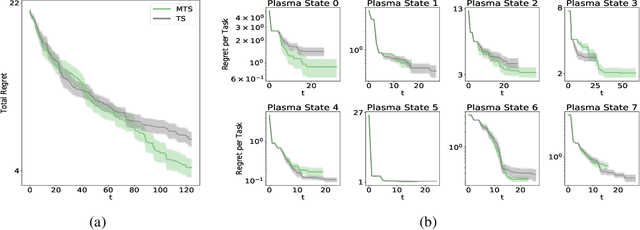

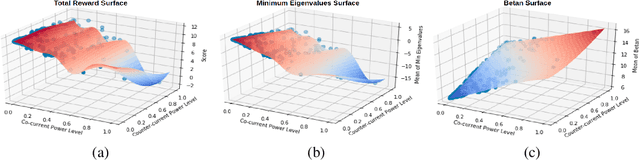

Offline Contextual Bayesian Optimization for Nuclear Fusion

Jan 06, 2020

Abstract: Nuclear fusion is regarded as the energy of the future since it presents the possibility of unlimited clean energy. One obstacle in utilizing fusion as a feasible energy source is the stability of the reaction. Ideally, one would have a controller for the reactor that takes actions in response to the current state of the plasma in order to prolong the reaction as long as possible. In this work, we take preliminary steps toward learning such a controller. Since learning on a real-world reactor is infeasible, we tackle this problem by attempting to learn optimal controls offline via a simulator, where the state of the plasma can be explicitly set. In particular, we introduce a theoretically grounded Bayesian optimization algorithm that recommends a state and action pair to evaluate at every iteration, and we show that this results in more efficient use of the simulator.
