Marian Boktor

Multi-Channel Feature Extraction for Virtual Histological Staining of Photon Absorption Remote Sensing Images

Jul 04, 2023Abstract:Accurate and fast histological staining is crucial in histopathology, impacting diagnostic precision and reliability. Traditional staining methods are time-consuming and subjective, causing delays in diagnosis. Digital pathology plays a vital role in advancing and optimizing histology processes to improve efficiency and reduce turnaround times. This study introduces a novel deep learning-based framework for virtual histological staining using photon absorption remote sensing (PARS) images. By extracting features from PARS time-resolved signals using a variant of the K-means method, valuable multi-modal information is captured. The proposed multi-channel cycleGAN (MC-GAN) model expands on the traditional cycleGAN framework, allowing the inclusion of additional features. Experimental results reveal that specific combinations of features outperform the conventional channels by improving the labeling of tissue structures prior to model training. Applied to human skin and mouse brain tissue, the results underscore the significance of choosing the optimal combination of features, as it reveals a substantial visual and quantitative concurrence between the virtually stained and the gold standard chemically stained hematoxylin and eosin (H&E) images, surpassing the performance of other feature combinations. Accurate virtual staining is valuable for reliable diagnostic information, aiding pathologists in disease classification, grading, and treatment planning. This study aims to advance label-free histological imaging and opens doors for intraoperative microscopy applications.

Virtual Histology with Photon Absorption Remote Sensing using a Cycle-Consistent Generative Adversarial Network with Weakly Registered Pairs

Jun 26, 2023

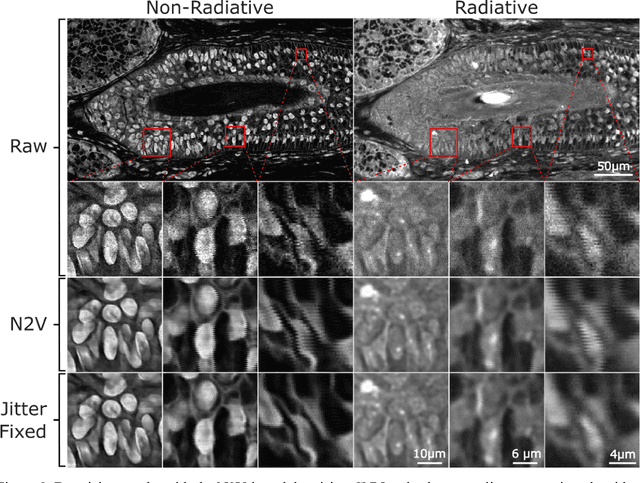

Abstract:Modern histopathology relies on the microscopic examination of thin tissue sections stained with histochemical techniques, typically using brightfield or fluorescence microscopy. However, the staining of samples can permanently alter their chemistry and structure, meaning an individual tissue section must be prepared for each desired staining contrast. This not only consumes valuable tissue samples but also introduces delays in essential diagnostic timelines. In this work, virtual histochemical staining is developed using label-free photon absorption remote sensing (PARS) microscopy. We present a method that generates virtually stained histology images that are indistinguishable from the gold standard hematoxylin and eosin (H&E) staining. First, PARS label-free ultraviolet absorption images are captured directly within unstained tissue specimens. The radiative and non-radiative absorption images are then preprocessed, and virtually stained through the presented pathway. The preprocessing pipeline features a self-supervised Noise2Void denoising convolutional neural network (CNN) as well as a novel algorithm for pixel-level mechanical scanning error correction. These developments significantly enhance the recovery of sub-micron tissue structures, such as nucleoli location and chromatin distribution. Finally, we used a cycle-consistent generative adversarial network CycleGAN architecture to virtually stain the preprocessed PARS data. Virtual staining is applied to thin unstained sections of malignant human skin and breast tissue samples. Clinically relevant details are revealed, with comparable contrast and quality to gold standard H&E-stained images. This work represents a crucial step to deploying label-free microscopy as an alternative to standard histopathology techniques.

Automated Whole Slide Imaging for Label-Free Histology using Photon Absorption Remote Sensing Microscopy

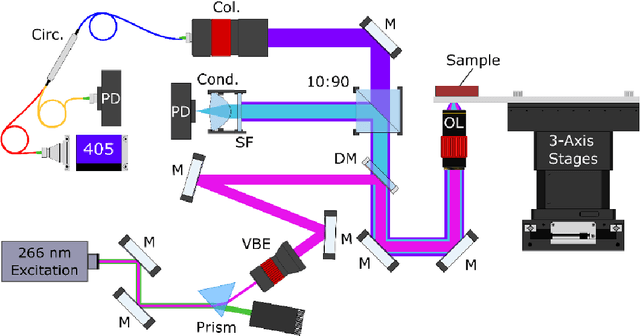

Apr 26, 2023Abstract:The field of histology relies heavily on antiquated tissue processing and staining techniques that limit the efficiency of pathologic diagnoses of cancer and other diseases. Current staining and advanced labeling methods are often destructive and mutually incompatible, requiring new tissue sections for each stain. This prolongs the diagnostic process and depletes valuable biopsy samples. In this study, we present an alternative label-free histology platform using the first transmission-mode Photon Absorption Remote Sensing microscope. Optimized for automated whole slide scanning of unstained tissue samples, the system provides slide images at magnifications up to 40x that are fully compatible with existing digital pathology tools. The scans capture high quality and high-resolution images with subcellular diagnostic detail. After imaging, samples remain suitable for histochemical, immunohistochemical, and other staining techniques. Scattering and absorption (radiative and non-radiative) contrasts are shown in whole slide images of malignant human breast and skin tissues samples. Clinically relevant features are highlighted, and close correspondence and analogous contrast is demonstrated with one-to-one gold standard H&E stained images. Our previously reported pix2pix virtual staining model is applied to an entire whole slide image, showcasing the potential of this approach in whole slide label-free H&E emulation. This work is a critical advance for integrating label-free optical methods into standard histopathology workflows, both enhancing diagnostic efficiency, and broadening the number of stains that can be applied while preserving valuable tissue samples.

Virtual Histological Staining of Label-Free Total Absorption Photoacoustic Remote Sensing (TA-PARS)

Apr 01, 2022

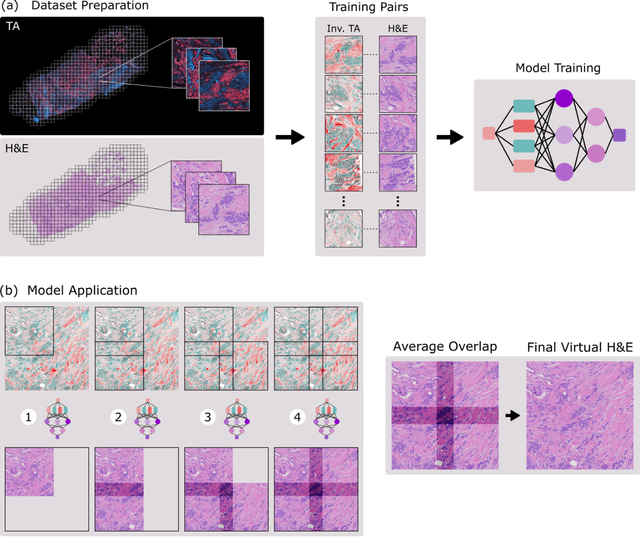

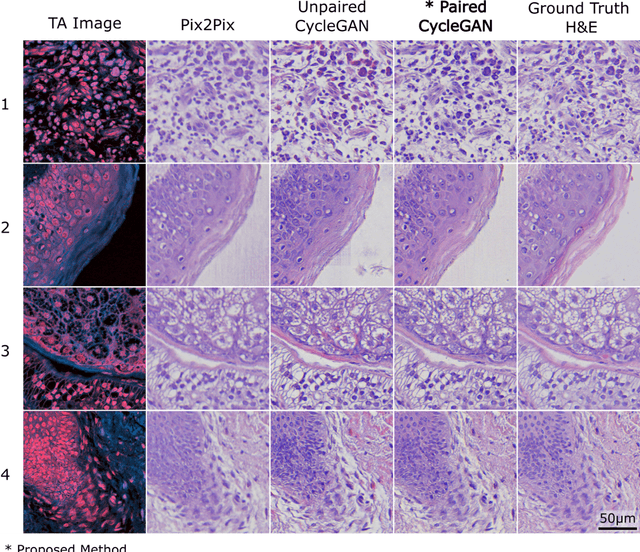

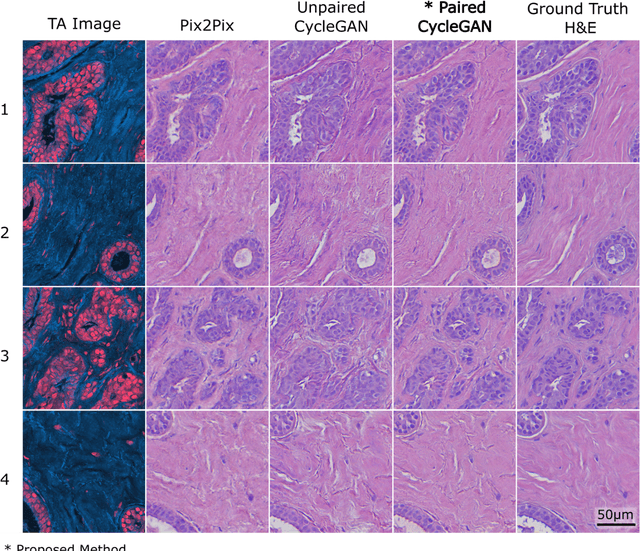

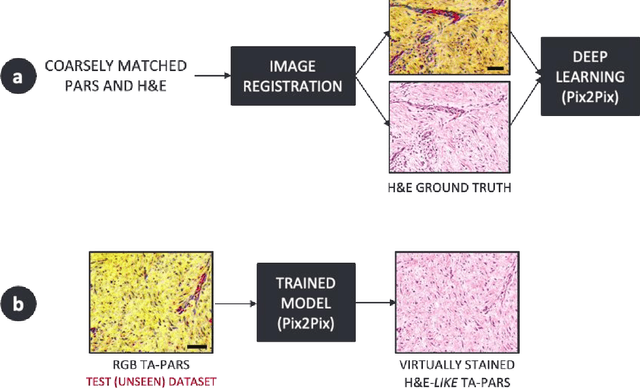

Abstract:Histopathological visualizations are a pillar of modern medicine and biological research. Surgical oncology relies exclusively on post-operative histology to determine definitive surgical success and guide adjuvant treatments. The current histology workflow is based on bright-field microscopic assessment of histochemical stained tissues and has some major limitations. For example, the preparation of stained specimens for brightfield assessment requires lengthy sample processing, delaying interventions for days or even weeks. Hence, there is a pressing need for improved histopathology methods. In this paper, we present a deep-learning-based approach for virtual label-free histochemical staining of total-absorption photoacoustic remote sensing (TA-PARS) images of unstained tissue. TA-PARS provides an array of directly measured label-free contrasts such as scattering and total absorption (radiative and non-radiative), ideal for developing H&E colorizations without the need to infer arbitrary tissue structures. We use a Pix2Pix generative adversarial network (GAN) to develop visualizations analogous to H&E staining from label-free TA-PARS images. Thin sections of human skin tissue were first virtually stained with the TA-PARS, then were chemically stained with H&E producing a one-to-one comparison between the virtual and chemical staining. The one-to-one matched virtually- and chemically- stained images exhibit high concordance validating the digital colorization of the TA-PARS images against the gold standard H&E. TA-PARS images were reviewed by four dermatologic pathologists who confirmed they are of diagnostic quality, and that resolution, contrast, and color permitted interpretation as if they were H&E. The presented approach paves the way for the development of TA-PARS slide-free histology, which promises to dramatically reduce the time from specimen resection to histological imaging.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge