Marco Rospocher

Do LLMs Dream of Ontologies?

Jan 26, 2024

Abstract:Large language models (LLMs) have recently revolutionized automated text understanding and generation. The performance of these models relies on the high number of parameters of the underlying neural architectures, which allows LLMs to memorize part of the vast quantity of data seen during the training. This paper investigates whether and to what extent general-purpose pre-trained LLMs have memorized information from known ontologies. Our results show that LLMs partially know ontologies: they can, and do indeed, memorize concepts from ontologies mentioned in the text, but the level of memorization of their concepts seems to vary proportionally to their popularity on the Web, the primary source of their training material. We additionally propose new metrics to estimate the degree of memorization of ontological information in LLMs by measuring the consistency of the output produced across different prompt repetitions, query languages, and degrees of determinism.

A formalisation of BPMN in Description Logics

Sep 22, 2021

Abstract:In this paper we present a textual description, in terms of Description Logics, of the BPMN Ontology, which provides a clear semantic formalisation of the structural components of the Business Process Modelling Notation (BPMN), based on the latest stable BPMN specifications from OMG [BPMN Version 1.1 -- January 2008]. The development of the ontology was guided by the description of the complete set of BPMN Element Attributes and Types contained in Annex B of the BPMN specifications.

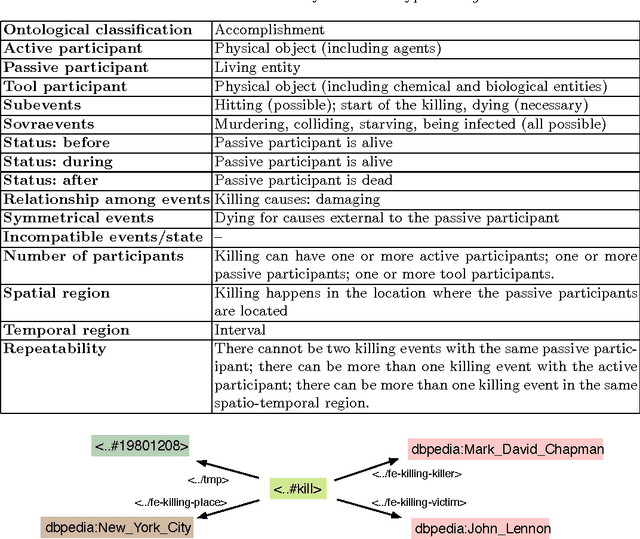

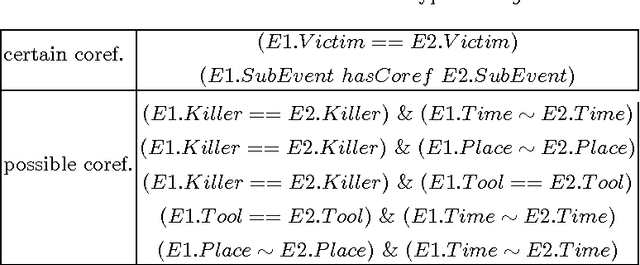

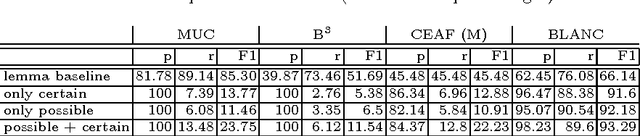

On Coreferring Text-extracted Event Descriptions with the aid of Ontological Reasoning

Dec 01, 2016

Abstract:Systems for automatic extraction of semantic information about events from large textual resources are now available: these tools are capable of generating RDF datasets about text-extracted events, and this knowledge can be used to reason over the recognized events. On the other hand, text-based tasks for event recognition, such as event coreference (i.e. recognizing whether two textual descriptions refer to the same event), do not take into account ontological information about the extracted events. In this paper, we propose a method to derive event coreference on text-extracted event data using semantic-based rule reasoning. We demonstrate our method on a limited (yet representative) set of event types: we introduce a formal analysis of their ontological properties and, on the basis of this, we define a set of coreference criteria. We then implement these criteria as RDF-based reasoning rules to be applied to text-extracted event data. We evaluate the effectiveness of our approach over a standard coreference benchmark dataset.

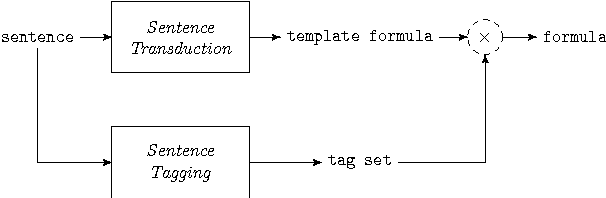

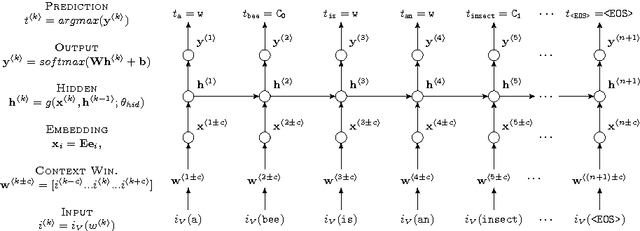

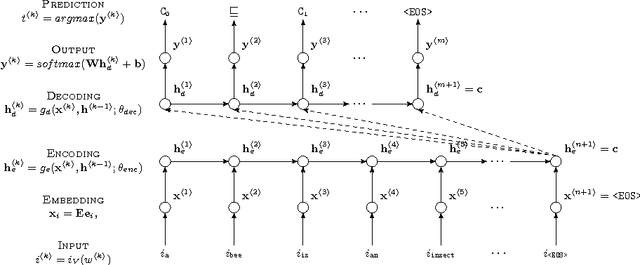

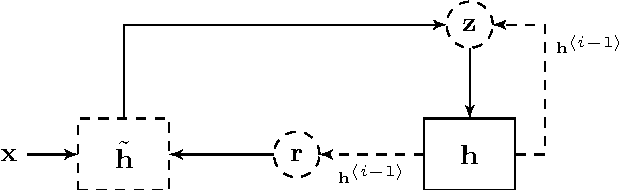

Using Recurrent Neural Network for Learning Expressive Ontologies

Jul 14, 2016

Abstract:Recently, Neural Networks have proven extremely effective in many natural language processing tasks such as sentiment analysis, question answering, and machine translation. Aiming to exploit these advantages in the Ontology Learning process, in this technical report we present a detailed description of a Recurrent Neural Network based system designed to pursue this goal.
