Marco Castellano

HW-SW Optimization of DNNs for Privacy-preserving People Counting on Low-resolution Infrared Arrays

Feb 02, 2024

Abstract:Low-resolution infrared (IR) array sensors enable people counting applications such as monitoring the occupancy of spaces and people flows while preserving privacy and minimizing energy consumption. Deep Neural Networks (DNNs) have been shown to be well-suited to process these sensor data in an accurate and efficient manner. Nevertheless, the space of DNNs' architectures is huge and its manual exploration is burdensome and often leads to sub-optimal solutions. To overcome this problem, in this work, we propose a highly automated full-stack optimization flow for DNNs that goes from neural architecture search, mixed-precision quantization, and post-processing, down to the realization of a new smart sensor prototype, including a Microcontroller with a customized instruction set. Integrating these cross-layer optimizations, we obtain a large set of Pareto-optimal solutions in the 3D-space of energy, memory, and accuracy. Deploying such solutions on our hardware platform, we improve the state-of-the-art achieving up to 4.2x model size reduction, 23.8x code size reduction, and 15.38x energy reduction at iso-accuracy.

Efficient Deep Learning Models for Privacy-preserving People Counting on Low-resolution Infrared Arrays

Apr 12, 2023Abstract:Ultra-low-resolution Infrared (IR) array sensors offer a low-cost, energy-efficient, and privacy-preserving solution for people counting, with applications such as occupancy monitoring. Previous work has shown that Deep Learning (DL) can yield superior performance on this task. However, the literature was missing an extensive comparative analysis of various efficient DL architectures for IR array-based people counting, that considers not only their accuracy, but also the cost of deploying them on memory- and energy-constrained Internet of Things (IoT) edge nodes. In this work, we address this need by comparing 6 different DL architectures on a novel dataset composed of IR images collected from a commercial 8x8 array, which we made openly available. With a wide architectural exploration of each model type, we obtain a rich set of Pareto-optimal solutions, spanning cross-validated balanced accuracy scores in the 55.70-82.70% range. When deployed on a commercial Microcontroller (MCU) by STMicroelectronics, the STM32L4A6ZG, these models occupy 0.41-9.28kB of memory, and require 1.10-7.74ms per inference, while consuming 17.18-120.43 $\mu$J of energy. Our models are significantly more accurate than a previous deterministic method (up to +39.9%), while being up to 3.53x faster and more energy efficient. Further, our models' accuracy is comparable to state-of-the-art DL solutions on similar resolution sensors, despite a much lower complexity. All our models enable continuous, real-time inference on a MCU-based IoT node, with years of autonomous operation without battery recharging.

Human Activity Recognition on Microcontrollers with Quantized and Adaptive Deep Neural Networks

Sep 02, 2022

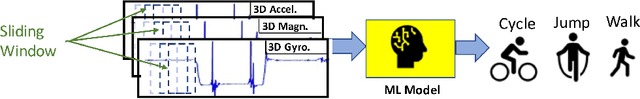

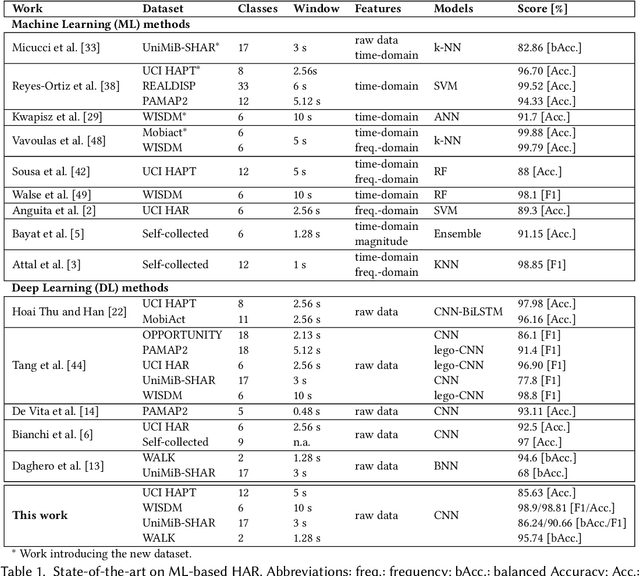

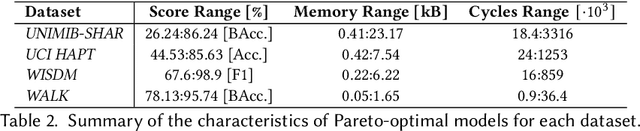

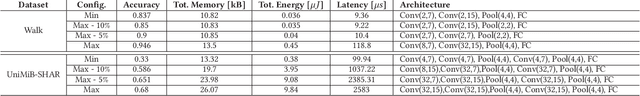

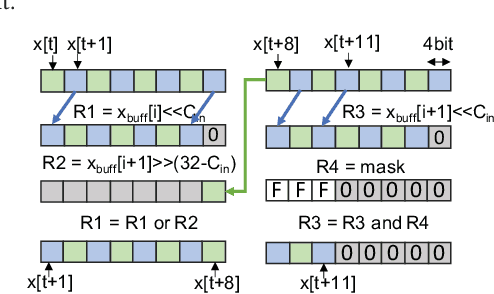

Abstract:Human Activity Recognition (HAR) based on inertial data is an increasingly diffused task on embedded devices, from smartphones to ultra low-power sensors. Due to the high computational complexity of deep learning models, most embedded HAR systems are based on simple and not-so-accurate classic machine learning algorithms. This work bridges the gap between on-device HAR and deep learning, proposing a set of efficient one-dimensional Convolutional Neural Networks (CNNs) deployable on general purpose microcontrollers (MCUs). Our CNNs are obtained combining hyper-parameters optimization with sub-byte and mixed-precision quantization, to find good trade-offs between classification results and memory occupation. Moreover, we also leverage adaptive inference as an orthogonal optimization to tune the inference complexity at runtime based on the processed input, hence producing a more flexible HAR system. With experiments on four datasets, and targeting an ultra-low-power RISC-V MCU, we show that (i) We are able to obtain a rich set of Pareto-optimal CNNs for HAR, spanning more than 1 order of magnitude in terms of memory, latency and energy consumption; (ii) Thanks to adaptive inference, we can derive >20 runtime operating modes starting from a single CNN, differing by up to 10% in classification scores and by more than 3x in inference complexity, with a limited memory overhead; (iii) on three of the four benchmarks, we outperform all previous deep learning methods, reducing the memory occupation by more than 100x. The few methods that obtain better performance (both shallow and deep) are not compatible with MCU deployment. (iv) All our CNNs are compatible with real-time on-device HAR with an inference latency <16ms. Their memory occupation varies in 0.05-23.17 kB, and their energy consumption in 0.005 and 61.59 uJ, allowing years of continuous operation on a small battery supply.

Ultra-compact Binary Neural Networks for Human Activity Recognition on RISC-V Processors

May 25, 2022

Abstract:Human Activity Recognition (HAR) is a relevant inference task in many mobile applications. State-of-the-art HAR at the edge is typically achieved with lightweight machine learning models such as decision trees and Random Forests (RFs), whereas deep learning is less common due to its high computational complexity. In this work, we propose a novel implementation of HAR based on deep neural networks, and precisely on Binary Neural Networks (BNNs), targeting low-power general purpose processors with a RISC-V instruction set. BNNs yield very small memory footprints and low inference complexity, thanks to the replacement of arithmetic operations with bit-wise ones. However, existing BNN implementations on general purpose processors impose constraints tailored to complex computer vision tasks, which result in over-parametrized models for simpler problems like HAR. Therefore, we also introduce a new BNN inference library, which targets ultra-compact models explicitly. With experiments on a single-core RISC-V processor, we show that BNNs trained on two HAR datasets obtain higher classification accuracy compared to a state-of-the-art baseline based on RFs. Furthermore, our BNN reaches the same accuracy of a RF with either less memory (up to 91%) or more energy-efficiency (up to 70%), depending on the complexity of the features extracted by the RF.

* Published in: 2021 18th ACM International Conference on Computing Frontiers (CF)

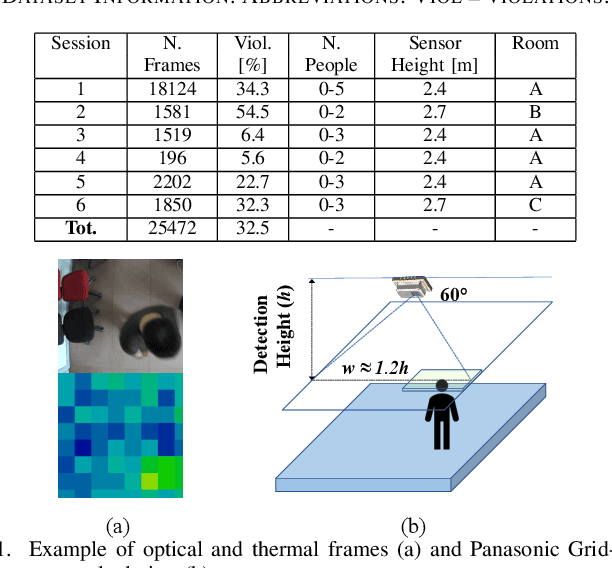

Privacy-preserving Social Distance Monitoring on Microcontrollers with Low-Resolution Infrared Sensors and CNNs

Apr 22, 2022

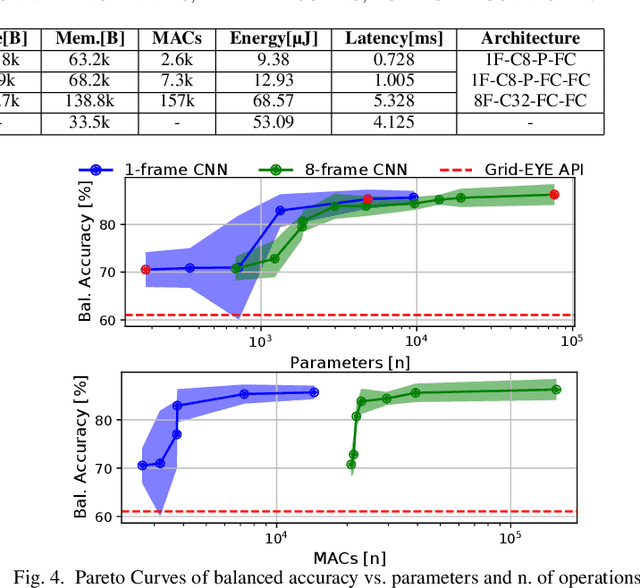

Abstract:Low-resolution infrared (IR) array sensors offer a low-cost, low-power, and privacy-preserving alternative to optical cameras and smartphones/wearables for social distance monitoring in indoor spaces, permitting the recognition of basic shapes, without revealing the personal details of individuals. In this work, we demonstrate that an accurate detection of social distance violations can be achieved processing the raw output of a 8x8 IR array sensor with a small-sized Convolutional Neural Network (CNN). Furthermore, the CNN can be executed directly on a Microcontroller (MCU)-based sensor node. With results on a newly collected open dataset, we show that our best CNN achieves 86.3% balanced accuracy, significantly outperforming the 61% achieved by a state-of-the-art deterministic algorithm. Changing the architectural parameters of the CNN, we obtain a rich Pareto set of models, spanning 70.5-86.3% accuracy and 0.18-75k parameters. Deployed on a STM32L476RG MCU, these models have a latency of 0.73-5.33ms, with an energy consumption per inference of 9.38-68.57{\mu}J.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge