Marco Avella Medina

Differentially Private Inference for Longitudinal Linear Regression

Jan 15, 2026

Abstract:Differential Privacy (DP) provides a rigorous framework for releasing statistics while protecting individual information present in a dataset. Although substantial progress has been made on differentially private linear regression, existing methods almost exclusively address the item-level DP setting, where each user contributes a single observation. Many scientific and economic applications instead involve longitudinal or panel data, in which each user contributes multiple dependent observations. In these settings, item-level DP offers inadequate protection, and user-level DP - shielding an individual's entire trajectory - is the appropriate privacy notion. We develop a comprehensive framework for estimation and inference in longitudinal linear regression under user-level DP. We propose a user-level private regression estimator based on aggregating local regressions, and we establish finite-sample guarantees and asymptotic normality under short-range dependence. For inference, we develop a privatized, bias-corrected covariance estimator that is automatically heteroskedasticity- and autocorrelation-consistent. These results provide the first unified framework for practical user-level DP estimation and inference in longitudinal linear regression under dependence, with strong theoretical guarantees and promising empirical performance.
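The idea of aggregating local regressions under user-level DP can be illustrated with a minimal sketch. This is not the estimator from the paper: the function name `private_aggregated_ols`, the norm clipping step, and the use of the classical Gaussian mechanism are all assumptions chosen for illustration. Each user's trajectory yields one least-squares fit, so replacing a whole user changes only one term of the average.

```python
import numpy as np

def private_aggregated_ols(X, y, clip_norm, epsilon, delta, rng):
    """Noisy average of clipped per-user OLS fits (illustrative sketch only).

    X has shape (n_users, T, d) and y has shape (n_users, T).
    Assumes 0 < epsilon < 1, the regime of the classical Gaussian mechanism.
    """
    n_users, _, d = X.shape
    local = np.empty((n_users, d))
    for i in range(n_users):
        # One least-squares fit per user, using only that user's trajectory.
        local[i], *_ = np.linalg.lstsq(X[i], y[i], rcond=None)

    # Clip each local estimate so its Euclidean norm is at most clip_norm.
    norms = np.linalg.norm(local, axis=1, keepdims=True)
    local *= np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))

    # Replacing one user's whole trajectory moves the mean by at most
    # 2 * clip_norm / n_users in Euclidean norm.
    sensitivity = 2.0 * clip_norm / n_users
    sigma = sensitivity * np.sqrt(2.0 * np.log(1.25 / delta)) / epsilon
    return local.mean(axis=0) + rng.normal(0.0, sigma, size=d)
```

Because the noise scale shrinks like `1 / n_users`, the privacy cost in this sketch becomes negligible relative to sampling error as the number of users grows; the choice of `clip_norm` trades clipping bias against noise.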

A theoretical framework for M-posteriors: frequentist guarantees and robustness properties

Oct 01, 2025

Abstract:We provide a theoretical framework for a wide class of generalized posteriors that can be viewed as the natural Bayesian posterior counterpart of the class of M-estimators in the frequentist world. We call the members of this class M-posteriors and show that they are asymptotically normally distributed under mild conditions on the M-estimation loss and the prior. In particular, an M-posterior contracts in probability around a normal distribution centered at an M-estimator, showing frequentist consistency and suggesting some degree of robustness depending on the reference M-estimator. We formalize the robustness properties of the M-posteriors by a new characterization of the posterior influence function and a novel definition of breakdown point adapted for posterior distributions. We illustrate the wide applicability of our theory in various popular models and illustrate their empirical relevance in some numerical examples.
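For orientation, a generalized posterior built from an M-estimation loss is commonly written in the following form. The notation ($\rho$ for the loss, $\pi$ for the prior, $\hat{\theta}_n$ for the M-estimator) is an assumption made here and is not taken from the paper.

$$
\pi_n(\theta \mid X_1, \dots, X_n) \;\propto\; \exp\!\Big(-\sum_{i=1}^{n} \rho(X_i, \theta)\Big)\, \pi(\theta),
\qquad
\hat{\theta}_n = \arg\min_{\theta} \sum_{i=1}^{n} \rho(X_i, \theta).
$$

Taking $\rho(x, \theta) = -\log p(x \mid \theta)$ recovers the usual Bayesian posterior and the maximum likelihood estimator.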

Thompson Sampling Itself is Differentially Private

Jul 20, 2024

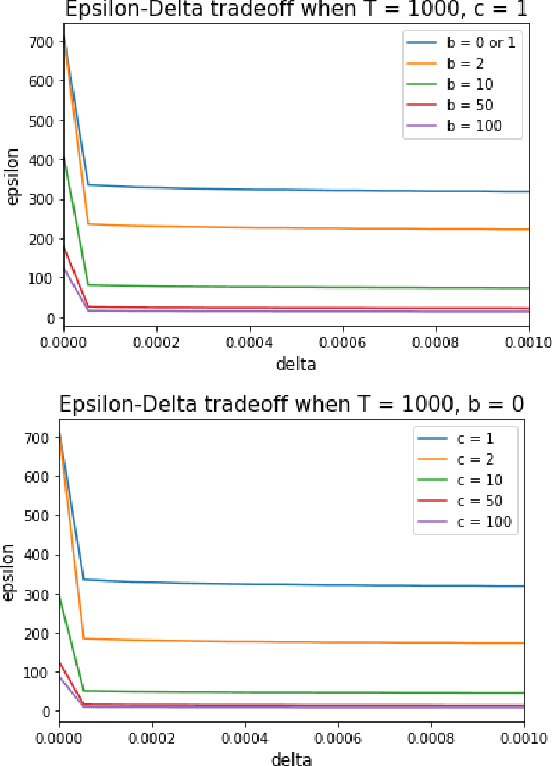

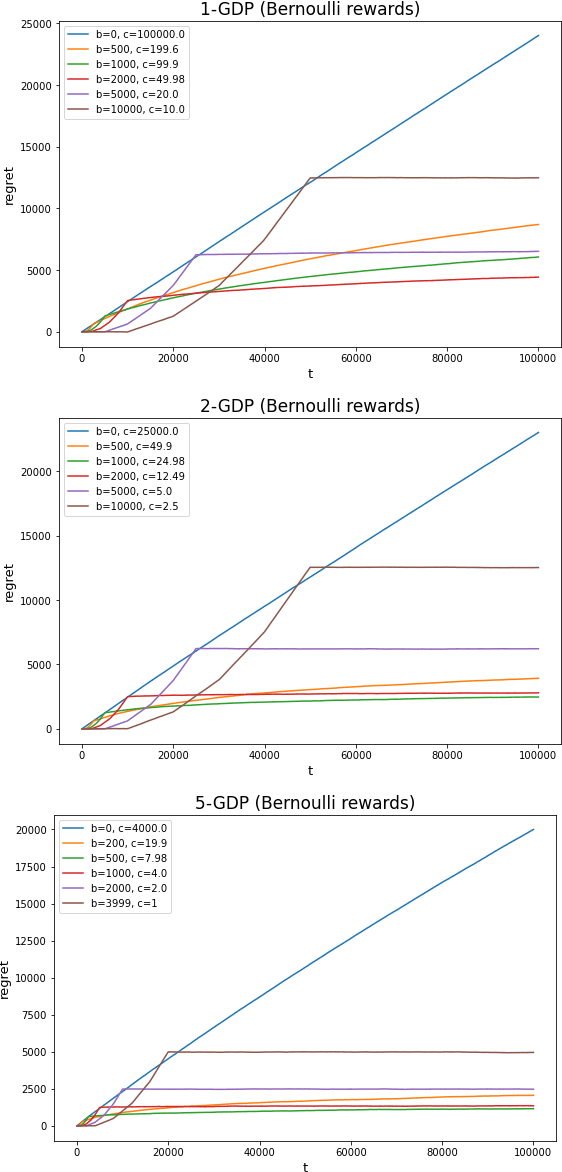

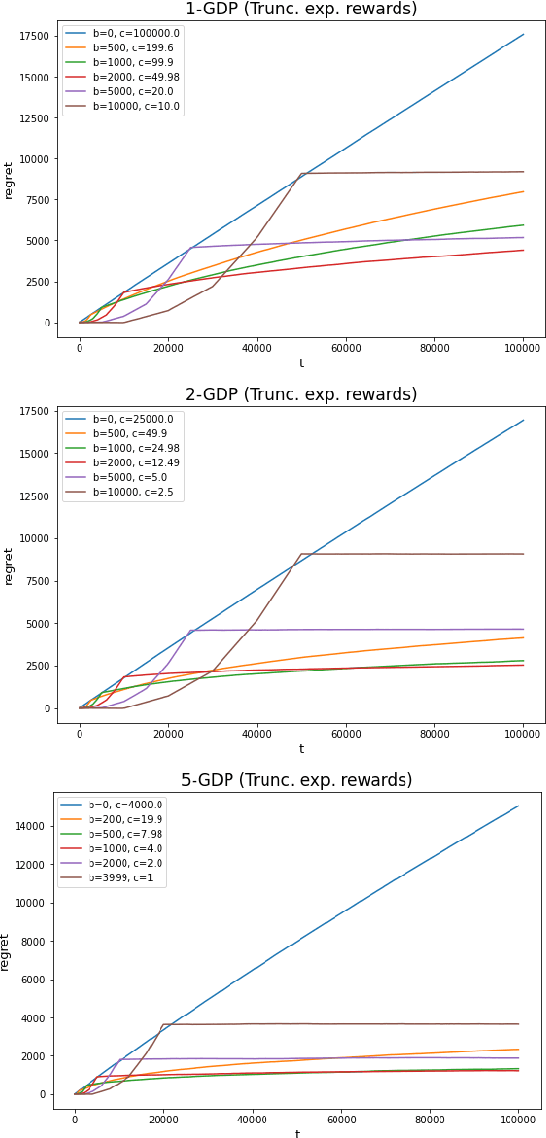

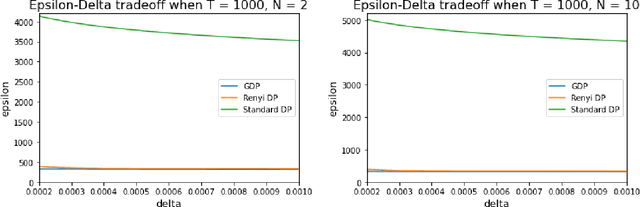

Abstract:In this work we first show that the classical Thompson sampling algorithm for multi-arm bandits is differentially private as-is, without any modification. We provide per-round privacy guarantees as a function of problem parameters and show composition over $T$ rounds; since the algorithm is unchanged, existing $O(\sqrt{NT\log N})$ regret bounds still hold and there is no loss in performance due to privacy. We then show that simple modifications, such as pre-pulling all arms a fixed number of times or increasing the sampling variance, can provide tighter privacy guarantees. We again provide privacy guarantees that now depend on the new parameters introduced in the modification, which allows the analyst to tune the privacy guarantee as desired. We also provide a novel regret analysis for this new algorithm, and show how the new parameters also impact expected regret. Finally, we empirically validate and illustrate our theoretical findings in two parameter regimes and demonstrate that tuning the new parameters substantially improves the privacy-regret tradeoff.
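The two modifications mentioned in the abstract can be sketched in a few lines. This is a hedged illustration, not the algorithm as specified in the paper: it assumes Bernoulli rewards and Gaussian posterior sampling, and the names `thompson_sampling`, `pre_pulls`, and `var_scale` are hypothetical.

```python
import numpy as np

def thompson_sampling(means, horizon, pre_pulls=1, var_scale=1.0, seed=0):
    """Gaussian Thompson sampling on Bernoulli arms (illustrative sketch).

    pre_pulls: number of initial pulls of every arm (must be >= 1).
    var_scale: multiplier on the sampling variance 1 / n_pulls.
    Assumes horizon >= len(means) * pre_pulls.
    """
    rng = np.random.default_rng(seed)
    means = np.asarray(means, dtype=float)
    n_arms = len(means)
    counts = np.zeros(n_arms)  # pulls per arm
    sums = np.zeros(n_arms)    # total reward per arm
    pulls = []

    def pull(arm):
        reward = float(rng.random() < means[arm])  # Bernoulli reward
        counts[arm] += 1
        sums[arm] += reward
        pulls.append(arm)

    # Modification 1: pull every arm a fixed number of times up front.
    for arm in range(n_arms):
        for _ in range(pre_pulls):
            pull(arm)

    # Modification 2: sample each arm's index with an inflated variance.
    for _ in range(horizon - n_arms * pre_pulls):
        samples = rng.normal(sums / counts, np.sqrt(var_scale / counts))
        pull(int(np.argmax(samples)))

    return np.array(pulls), counts
```

Setting `pre_pulls=1` and `var_scale=1.0` gives a plain Gaussian Thompson sampler in this sketch; larger values make the arm choice in each round less sensitive to any single past reward, at the cost of extra exploration.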

Spectral learning of multivariate extremes

Nov 15, 2021

Abstract:We propose a spectral clustering algorithm for analyzing the dependence structure of multivariate extremes. More specifically, we focus on the asymptotic dependence of multivariate extremes characterized by the angular or spectral measure in extreme value theory. Our work studies the theoretical performance of spectral clustering based on a random $k$-nearest neighbor graph constructed from an extremal sample, i.e., the angular part of random vectors for which the radius exceeds a large threshold. In particular, we derive the asymptotic distribution of extremes arising from a linear factor model and prove that, under certain conditions, spectral clustering can consistently identify the clusters of extremes arising in this model. Leveraging this result we propose a simple consistent estimation strategy for learning the angular measure. Our theoretical findings are complemented with numerical experiments illustrating the finite sample performance of our methods.

On the Robustness to Misspecification of $α$-Posteriors and Their Variational Approximations

Apr 16, 2021

Abstract:$\alpha$-posteriors and their variational approximations distort standard posterior inference by downweighting the likelihood and introducing variational approximation errors. We show that such distortions, if tuned appropriately, reduce the Kullback-Leibler (KL) divergence from the true, but perhaps infeasible, posterior distribution when there is potential parametric model misspecification. To make this point, we derive a Bernstein-von Mises theorem showing convergence in total variation distance of $\alpha$-posteriors and their variational approximations to limiting Gaussian distributions. We use these distributions to evaluate the KL divergence between true and reported posteriors. We show this divergence is minimized by choosing $\alpha$ strictly smaller than one, assuming there is a vanishingly small probability of model misspecification. The optimized value becomes smaller as the misspecification becomes more severe. The optimized KL divergence increases logarithmically in the degree of misspecification and not linearly as with the usual posterior.
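The "downweighting the likelihood" step corresponds to the standard definition of an $\alpha$-posterior, written here with assumed notation ($p$ for the likelihood, $\pi$ for the prior, $X^n$ for the sample):

$$
\pi_{n,\alpha}(\theta \mid X^n) \;\propto\; p(X^n \mid \theta)^{\alpha}\, \pi(\theta), \qquad \alpha > 0.
$$

Setting $\alpha = 1$ gives the usual posterior, while $\alpha < 1$ tempers the likelihood and gives the prior relatively more weight.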
