Manfred Vogel

Swiss Parliaments Corpus Re-Imagined (SPC_R): Enhanced Transcription with RAG-based Correction and Predicted BLEU

Jun 09, 2025Abstract:This paper presents a new long-form release of the Swiss Parliaments Corpus, converting entire multi-hour Swiss German debate sessions (each aligned with the official session protocols) into high-quality speech-text pairs. Our pipeline starts by transcribing all session audio into Standard German using Whisper Large-v3 under high-compute settings. We then apply a two-step GPT-4o correction process: first, GPT-4o ingests the raw Whisper output alongside the official protocols to refine misrecognitions, mainly named entities. Second, a separate GPT-4o pass evaluates each refined segment for semantic completeness. We filter out any segments whose Predicted BLEU score (derived from Whisper's average token log-probability) and GPT-4o evaluation score fall below a certain threshold. The final corpus contains 801 hours of audio, of which 751 hours pass our quality control. Compared to the original sentence-level SPC release, our long-form dataset achieves a 6-point BLEU improvement, demonstrating the power of combining robust ASR, LLM-based correction, and data-driven filtering for low-resource, domain-specific speech corpora.

Fine-tuning Whisper on Low-Resource Languages for Real-World Applications

Dec 20, 2024

Abstract:This paper presents a new approach to fine-tuning OpenAI's Whisper model for low-resource languages by introducing a novel data generation method that converts sentence-level data into a long-form corpus, using Swiss German as a case study. Non-sentence-level data, which could improve the performance of long-form audio, is difficult to obtain and often restricted by copyright laws. Our method bridges this gap by transforming more accessible sentence-level data into a format that preserves the model's ability to handle long-form audio and perform segmentation without requiring non-sentence-level data. Our data generation process improves performance in several real-world applications and leads to the development of a new state-of-the-art speech-to-text (STT) model for Swiss German. We compare our model with a non-fine-tuned Whisper and our previous state-of-the-art Swiss German STT models, where our new model achieves higher BLEU scores. Our results also indicate that the proposed method is adaptable to other low-resource languages, supported by written guidance and code that allows the creation of fine-tuned Whisper models, which keep segmentation capabilities and allow the transcription of longer audio files using only sentence-level data with high quality.

Dialect Transfer for Swiss German Speech Translation

Oct 13, 2023Abstract:This paper investigates the challenges in building Swiss German speech translation systems, specifically focusing on the impact of dialect diversity and differences between Swiss German and Standard German. Swiss German is a spoken language with no formal writing system, it comprises many diverse dialects and is a low-resource language with only around 5 million speakers. The study is guided by two key research questions: how does the inclusion and exclusion of dialects during the training of speech translation models for Swiss German impact the performance on specific dialects, and how do the differences between Swiss German and Standard German impact the performance of the systems? We show that dialect diversity and linguistic differences pose significant challenges to Swiss German speech translation, which is in line with linguistic hypotheses derived from empirical investigations.

Text-to-Speech Pipeline for Swiss German -- A comparison

May 31, 2023Abstract:In this work, we studied the synthesis of Swiss German speech using different Text-to-Speech (TTS) models. We evaluated the TTS models on three corpora, and we found, that VITS models performed best, hence, using them for further testing. We also introduce a new method to evaluate TTS models by letting the discriminator of a trained vocoder GAN model predict whether a given waveform is human or synthesized. In summary, our best model delivers speech synthesis for different Swiss German dialects with previously unachieved quality.

STT4SG-350: A Speech Corpus for All Swiss German Dialect Regions

May 30, 2023

Abstract:We present STT4SG-350 (Speech-to-Text for Swiss German), a corpus of Swiss German speech, annotated with Standard German text at the sentence level. The data is collected using a web app in which the speakers are shown Standard German sentences, which they translate to Swiss German and record. We make the corpus publicly available. It contains 343 hours of speech from all dialect regions and is the largest public speech corpus for Swiss German to date. Application areas include automatic speech recognition (ASR), text-to-speech, dialect identification, and speaker recognition. Dialect information, age group, and gender of the 316 speakers are provided. Genders are equally represented and the corpus includes speakers of all ages. Roughly the same amount of speech is provided per dialect region, which makes the corpus ideally suited for experiments with speech technology for different dialects. We provide training, validation, and test splits of the data. The test set consists of the same spoken sentences for each dialect region and allows a fair evaluation of the quality of speech technologies in different dialects. We train an ASR model on the training set and achieve an average BLEU score of 74.7 on the test set. The model beats the best published BLEU scores on 2 other Swiss German ASR test sets, demonstrating the quality of the corpus.

Improving Metrics for Speech Translation

May 22, 2023Abstract:We introduce Parallel Paraphrasing ($\text{Para}_\text{both}$), an augmentation method for translation metrics making use of automatic paraphrasing of both the reference and hypothesis. This method counteracts the typically misleading results of speech translation metrics such as WER, CER, and BLEU if only a single reference is available. We introduce two new datasets explicitly created to measure the quality of metrics intended to be applied to Swiss German speech-to-text systems. Based on these datasets, we show that we are able to significantly improve the correlation with human quality perception if our method is applied to commonly used metrics.

2nd Swiss German Speech to Standard German Text Shared Task at SwissText 2022

Jan 17, 2023

Abstract:We present the results and findings of the 2nd Swiss German speech to Standard German text shared task at SwissText 2022. Participants were asked to build a sentence-level Swiss German speech to Standard German text system specialized on the Grisons dialect. The objective was to maximize the BLEU score on a test set of Grisons speech. 3 teams participated, with the best-performing system achieving a BLEU score of 70.1.

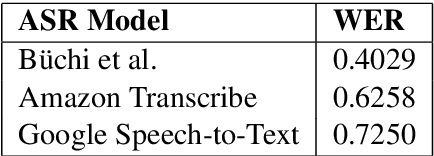

Swiss German Speech to Text system evaluation

Jul 01, 2022Abstract:We present an in-depth evaluation of four commercially available Speech-to-Text (STT) systems for Swiss German. The systems are anonymized and referred to as system a-d in this report. We compare the four systems to our STT model, referred to as FHNW from hereon after, and provide details on how we trained our model. To evaluate the models, we use two STT datasets from different domains. The Swiss Parliament Corpus (SPC) test set and a private dataset in the news domain with an even distribution across seven dialect regions. We provide a detailed error analysis to detect the three systems' strengths and weaknesses. This analysis is limited by the characteristics of the two test sets. Our model scored the highest bilingual evaluation understudy (BLEU) on both datasets. On the SPC test set, we obtain a BLEU score of 0.607, whereas the best commercial system reaches a BLEU score of 0.509. On our private test set, we obtain a BLEU score of 0.722 and the best commercial system a BLEU score of 0.568.

SDS-200: A Swiss German Speech to Standard German Text Corpus

May 19, 2022

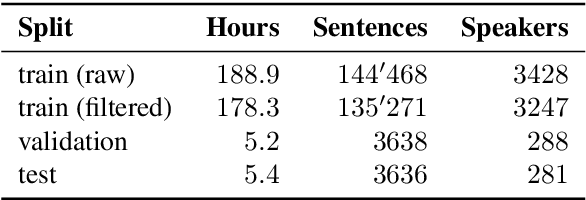

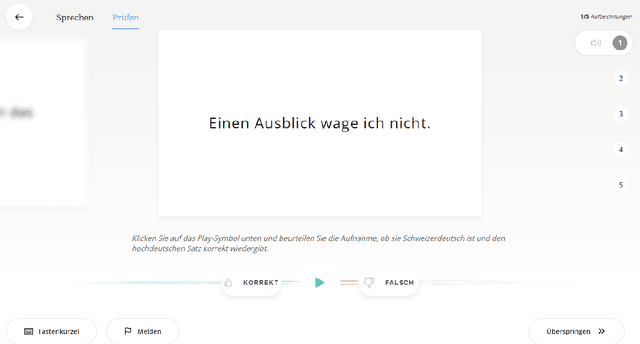

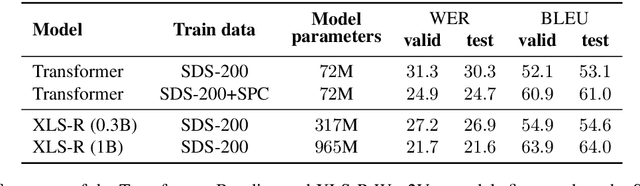

Abstract:We present SDS-200, a corpus of Swiss German dialectal speech with Standard German text translations, annotated with dialect, age, and gender information of the speakers. The dataset allows for training speech translation, dialect recognition, and speech synthesis systems, among others. The data was collected using a web recording tool that is open to the public. Each participant was given a text in Standard German and asked to translate it to their Swiss German dialect before recording it. To increase the corpus quality, recordings were validated by other participants. The data consists of 200 hours of speech by around 4000 different speakers and covers a large part of the Swiss-German dialect landscape. We release SDS-200 alongside a baseline speech translation model, which achieves a word error rate (WER) of 30.3 and a BLEU score of 53.1 on the SDS-200 test set. Furthermore, we use SDS-200 to fine-tune a pre-trained XLS-R model, achieving 21.6 WER and 64.0 BLEU.

Swiss Parliaments Corpus, an Automatically Aligned Swiss German Speech to Standard German Text Corpus

Oct 06, 2020

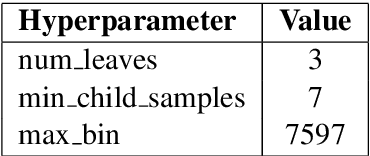

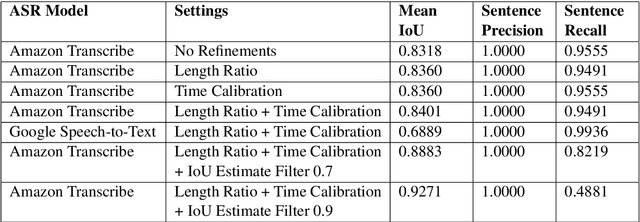

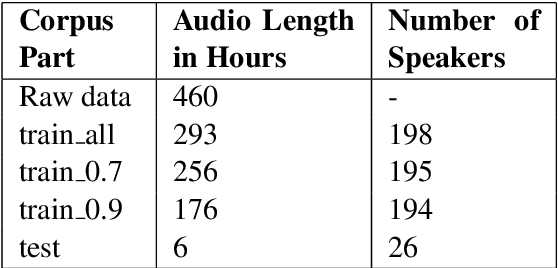

Abstract:We present a forced sentence alignment procedure for Swiss German speech and Standard German text. It is able to create a speech-to-text corpus in a fully automatic fashion, given an audio recording and the corresponding unaligned transcript. Compared to a manual alignment, it achieves a mean IoU of 0.8401 with a sentence recall of 0.9491. When applying our IoU estimate filter, the mean IoU can be further improved to 0.9271 at the cost of a lower sentence recall of 0.4881. Using this procedure, we created the Swiss Parliaments Corpus, an automatically aligned Swiss German speech to Standard German text corpus. 65 % of the raw data could be transformed to sentence-level audio-text-pairs, resulting in 293 hours of training data. We have made the corpus freely available for download.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge