Mahdi Taheri

SAFFIRA: a Framework for Assessing the Reliability of Systolic-Array-Based DNN Accelerators

Mar 05, 2024

Abstract: The systolic array has emerged as a prominent architecture for Deep Neural Network (DNN) hardware accelerators, providing the high-throughput and low-latency performance essential for deploying DNNs across diverse applications. However, when used in safety-critical applications, reliability assessment is mandatory to guarantee the correct behavior of DNN accelerators. While fault injection stands out as a well-established, practical, and robust method for reliability assessment, it is still a very time-consuming process. This paper addresses the time efficiency issue by introducing a novel hierarchical, software-based, hardware-aware fault injection strategy tailored for systolic-array-based DNN accelerators.

AdAM: Adaptive Fault-Tolerant Approximate Multiplier for Edge DNN Accelerators

Mar 05, 2024

Abstract: In this paper, we propose an architecture of a novel adaptive fault-tolerant approximate multiplier tailored for ASIC-based DNN accelerators.

Exploration of Activation Fault Reliability in Quantized Systolic Array-Based DNN Accelerators

Jan 17, 2024

Abstract: Stringent reliability requirements for Deep Neural Network (DNN) accelerators stand alongside the need to reduce the computational burden on hardware platforms, i.e., to reduce energy consumption and execution time and to increase the efficiency of DNN accelerators. Moreover, the growing demand for specialized DNN accelerators with tailored requirements, particularly for safety-critical applications, necessitates a comprehensive design space exploration to enable the development of efficient and robust accelerators that meet those requirements. Therefore, the trade-off between hardware performance, i.e., area and delay, and the reliability of the DNN accelerator implementation becomes critical and requires tools for analysis. This paper presents a comprehensive methodology for exploring and enabling a holistic assessment of the trilateral impact of quantization on model accuracy, activation fault reliability, and hardware efficiency. A fully automated framework is introduced that is capable of applying various quantization-aware techniques, fault injection, and hardware implementation, thus enabling the measurement of hardware parameters. Moreover, this paper proposes a novel lightweight protection technique integrated within the framework to ensure the dependable deployment of the final systolic-array-based FPGA implementation. The experiments on established benchmarks demonstrate the analysis flow and the profound implications of quantization on reliability, hardware performance, and network accuracy, particularly concerning transient faults in the network's activations.
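As an illustration of what a transient fault in a quantized activation can look like, the following is a minimal sketch that flips a single bit in a signed 8-bit activation value. The 8-bit two's-complement format, the single-bit-flip fault model, and the helper names `flip_bit_int8` and `inject_activation_fault` are assumptions made for this example; the abstract does not specify the framework's fault model or API.

```python
import random


def flip_bit_int8(value: int, bit: int) -> int:
    """Flip one bit of a signed 8-bit value (two's complement).

    Hypothetical helper: assumes activations are stored as int8.
    """
    if not -128 <= value <= 127:
        raise ValueError("value must fit in a signed 8-bit integer")
    if not 0 <= bit <= 7:
        raise ValueError("bit index must be in 0..7")
    raw = (value & 0xFF) ^ (1 << bit)        # work on the raw 8-bit pattern
    return raw - 256 if raw >= 128 else raw  # reinterpret as signed


def inject_activation_fault(activations, rng):
    """Return a copy of the activations with one random bit flipped.

    Also returns the index and bit position that were hit, so a campaign
    can log which fault produced which outcome.
    """
    faulty = list(activations)
    idx = rng.randrange(len(faulty))
    bit = rng.randrange(8)
    faulty[idx] = flip_bit_int8(faulty[idx], bit)
    return faulty, idx, bit


if __name__ == "__main__":
    rng = random.Random(0)
    golden = [12, -7, 100, 0, 55]
    faulty, idx, bit = inject_activation_fault(golden, rng)
    print(f"flipped bit {bit} of activation {idx}: {golden[idx]} -> {faulty[idx]}")
```

A flip in a high-order bit changes the value far more than a flip in a low-order bit, which is one reason the bit width chosen during quantization can affect how severe an activation fault is.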

Enhancing Fault Resilience of QNNs by Selective Neuron Splitting

Jun 16, 2023

Abstract: The superior performance of Deep Neural Networks (DNNs) has led to their application in various aspects of human life. Safety-critical applications are no exception and impose rigorous reliability requirements on DNNs. Quantized Neural Networks (QNNs) have emerged to tackle the complexity of DNN accelerators; however, they are more prone to reliability issues. In this paper, a recent analytical resilience assessment method is adapted for QNNs to identify critical neurons based on a Neuron Vulnerability Factor (NVF). Thereafter, a novel method for splitting the critical neurons is proposed that enables the design of a Lightweight Correction Unit (LCU) in the accelerator without redesigning its computational part. The method is validated by experiments on different QNNs and datasets. The results demonstrate that the proposed fault-correction method incurs half the overhead of selective Triple Modular Redundancy (TMR) while achieving a similar level of fault resiliency.
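For readers unfamiliar with the TMR baseline mentioned above, the following is a minimal sketch of a bitwise majority voter, the standard building block of Triple Modular Redundancy. It illustrates the baseline only, not the neuron-splitting method or the LCU from the paper; the function name `tmr_vote` and the example values are hypothetical.

```python
def tmr_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority vote over three redundant copies of a value.

    Each output bit takes the value held by at least two of the three
    inputs, so a fault confined to one copy is masked.
    """
    return (a & b) | (a & c) | (b & c)


if __name__ == "__main__":
    golden = 0b01011010
    faulty = golden ^ (1 << 6)  # single bit flip in one replica
    voted = tmr_vote(golden, faulty, golden)
    print(voted == golden)
```

In a selective scheme, only the neurons judged critical would be triplicated and voted on in this way, which is what keeps the overhead below that of full TMR.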

A Novel Fault-Tolerant Logic Style with Self-Checking Capability

May 31, 2023

Abstract: We introduce a novel logic style with self-checking capability to enhance hardware reliability at the logic level. The proposed logic cells have two-rail inputs/outputs, and the functionality for each rail of outputs enables the construction of fault-tolerant configurable circuits. The AND and OR gates consist of 8 transistors based on CNFET technology, while the proposed XOR gate benefits from both CNFET and low-power MGDI technologies in its transistor arrangement. To demonstrate the feasibility of our new logic gates, we used an AES S-box implementation as the use case. The extensive simulation results using HSPICE indicate that the case-study circuit built on the proposed gates has superior speed and power consumption compared to other implementations with error-detection capability.

APPRAISER: DNN Fault Resilience Analysis Employing Approximation Errors

May 31, 2023

Abstract: Nowadays, the extensive exploitation of Deep Neural Networks (DNNs) in safety-critical applications raises new reliability concerns. In practice, methods for fault injection by emulation in hardware are efficient and widely used to study the resilience of DNN architectures for mitigating reliability issues already at the early design stages. However, the state-of-the-art methods for fault injection by emulation incur a spectrum of time-, design-, and control-complexity problems. To overcome these issues, a novel resiliency assessment method called APPRAISER is proposed that applies functional approximation for a non-conventional purpose and exploits approximate computing errors to its advantage. By adopting this concept in the resiliency assessment domain, APPRAISER provides a speed-up of thousands of times in the assessment process while keeping the analysis highly accurate. In this paper, APPRAISER is validated by comparing it with state-of-the-art approaches for fault injection by emulation in FPGA. By this, the feasibility of the idea is demonstrated, and a new perspective in resiliency evaluation for DNNs is opened.

Special Session: Approximation and Fault Resiliency of DNN Accelerators

May 31, 2023

Abstract: Deep Learning, and in particular Deep Neural Networks (DNNs), is nowadays widely used in many scenarios, including safety-critical applications such as autonomous driving. In this context, besides energy efficiency and performance, reliability plays a crucial role since a system failure can jeopardize human life. As with any other device, the reliability of hardware architectures running DNNs has to be evaluated, usually through costly fault injection campaigns. This paper explores the approximation and fault resiliency of DNN accelerators. We propose to use approximate (AxC) arithmetic circuits to agilely emulate errors in hardware without performing fault injection on the DNN. To allow fast evaluation of AxC DNNs, we developed an efficient GPU-based simulation framework. Further, we propose a fine-grain analysis of fault resiliency by examining fault propagation and masking in networks.

A Systematic Literature Review on Hardware Reliability Assessment Methods for Deep Neural Networks

May 09, 2023

Abstract: Artificial Intelligence (AI) and, in particular, Machine Learning (ML) have emerged to be utilized in various applications due to their capability to learn how to solve complex problems. Over the last decade, rapid advances in ML have presented Deep Neural Networks (DNNs) consisting of a large number of neurons and layers. DNN Hardware Accelerators (DHAs) are leveraged to deploy DNNs in the target applications. Safety-critical applications, where hardware faults/errors would result in catastrophic consequences, also benefit from DHAs. Therefore, the reliability of DNNs is an essential subject of research. In recent years, several studies have been published to assess the reliability of DNNs. In this regard, various reliability assessment methods have been proposed on a variety of platforms and applications. Hence, there is a need to summarize the state of the art to identify the gaps in the study of the reliability of DNNs. In this work, we conduct a Systematic Literature Review (SLR) on the reliability assessment methods of DNNs to collect as many relevant research works as possible, present a categorization of them, and address the open challenges. Through this SLR, three kinds of methods for reliability assessment of DNNs are identified: Fault Injection (FI), Analytical, and Hybrid methods. Since the majority of works assess DNN reliability by FI, we characterize different approaches and platforms of the FI method comprehensively. Moreover, Analytical and Hybrid methods are presented. Thus, the different reliability assessment methods for DNNs are elaborated with respect to the DNN platforms on which they were conducted and their reliability evaluation metrics. Finally, we highlight the advantages and disadvantages of the identified methods and address the open challenges in the research area.

Noise-Tolerance GPU-based Age Estimation Using ResNet-50

Apr 26, 2023

Abstract: The human face contains important and understandable information such as personal identity, gender, age, and ethnicity. In recent years, a person's age has been studied as one of the important features of the face. The age estimation system consists of a combination of two modules: the presentation of the face image and the extraction of age characteristics, followed by the detection of the exact age or age group based on these characteristics. So far, various algorithms have been presented for age estimation, each of which has advantages and disadvantages. In this work, we implemented a deep residual neural network on the UTKFace data set. We validated our implementation by comparing it with the state-of-the-art implementations of different age estimation algorithms. The results show a 28.3% improvement in MAE, one of the critical error validation metrics, compared to recent works, and a 71.39% MAE improvement compared to the implemented AlexNet. In the end, we show that the performance degradation of our implemented network is lower than 1.5% when injecting 15 dB noise into the input data (5 times more than the normal environmental noise), which justifies the noise tolerance of our proposed method.
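The kind of noise-tolerance check described above can be sketched as follows. The abstract does not state the noise model, so this example assumes additive white Gaussian noise scaled so that the signal-to-noise ratio equals the given value in dB; that interpretation of "15 dB noise", and the helper names `add_noise_at_snr` and `mean_absolute_error`, are assumptions of this sketch rather than details from the paper.

```python
import math
import random


def add_noise_at_snr(signal, snr_db, rng):
    """Add zero-mean Gaussian noise so that signal power / noise power
    matches the requested SNR in dB (assumed noise model)."""
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]


def mean_absolute_error(predicted, actual):
    """MAE: average absolute difference between predictions and targets."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-in for flattened input pixel values; not real face data.
    clean = [math.sin(0.01 * i) for i in range(20000)]
    noisy = add_noise_at_snr(clean, snr_db=15.0, rng=rng)

    noise = [n - c for n, c in zip(noisy, clean)]
    measured = 10 * math.log10(
        sum(c * c for c in clean) / sum(e * e for e in noise)
    )
    print(f"measured SNR: {measured:.2f} dB")
```

To reproduce a degradation figure, one would evaluate the trained network on both the clean and the noisy inputs and compare the two MAE values obtained with `mean_absolute_error`.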

LRDB: LSTM Raw data DNA Base-caller based on long-short term models in an active learning environment

Mar 15, 2023

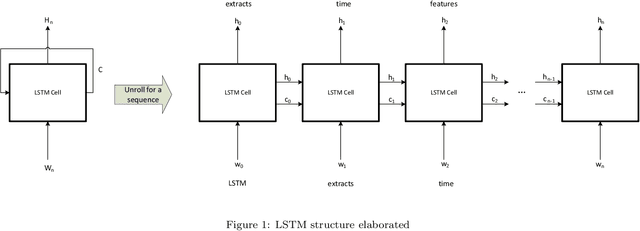

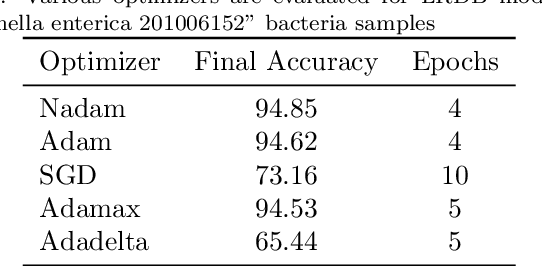

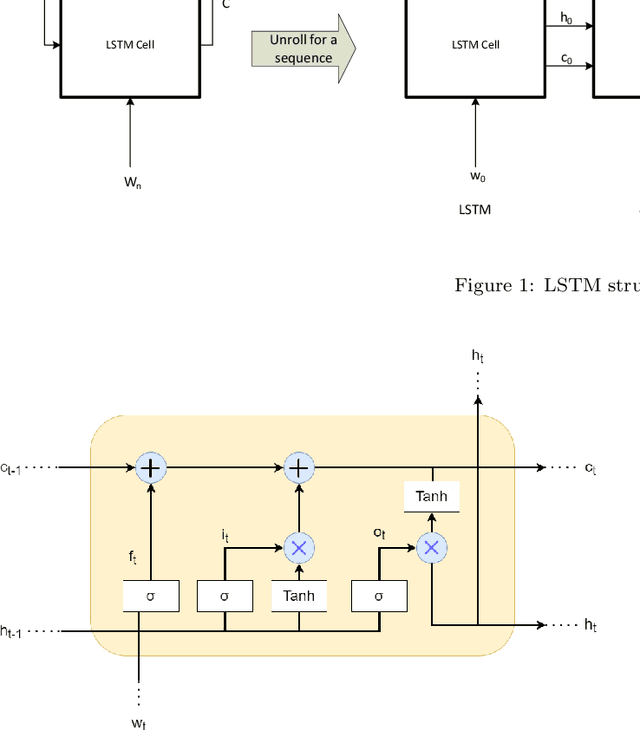

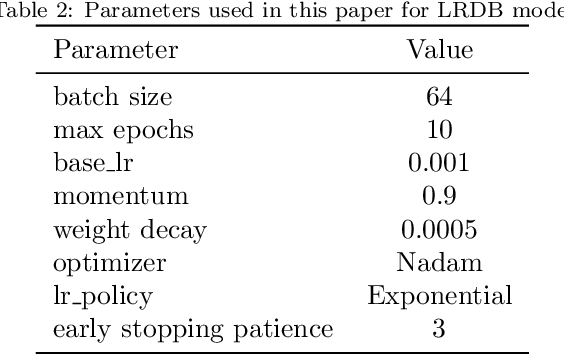

Abstract: The first important step in extracting DNA characters is using the output data of MinION devices in the form of electrical current signals. Various cutting-edge base callers use this data to detect the DNA characters based on the input. In this paper, we discuss several shortcomings of prior base callers in the case of time-critical applications, privacy-aware design, and the problem of catastrophic forgetting. Next, we propose the LRDB model, a lightweight open-source model for private developments with a better read-identity (0.35% increase) for the target bacterial samples in the paper. We have limited the extent of training data and benefited from the transfer learning algorithm to make the active usage of LRDB viable in critical applications. Hence, less training time is needed for adapting to new DNA samples (in our case, bacterial samples). Furthermore, LRDB can be modified according to user constraints, as the results show a negligible accuracy loss when fewer parameters are used. We have also assessed the noise-tolerance property, which shows a decline in accuracy of about 1.439% for a 15 dB noise injection, and the performance metrics show that the model executes in a medium speed range compared with current cutting-edge models.
