Maad Ebrahim

Predicting Bitcoin Market Trends with Enhanced Technical Indicator Integration and Classification Models

Oct 09, 2024

Abstract: Thanks to the high potential for profit, trading has become increasingly attractive to investors as the cryptocurrency and stock markets rapidly expand. However, because financial markets are intricate and dynamic, accurately predicting prices remains a significant challenge. The volatile nature of the cryptocurrency market makes it even harder for traders and investors to make decisions. This study presents a classification-based machine learning model that forecasts the direction of the cryptocurrency market, i.e., whether prices will increase or decrease. The model is trained on historical data and key technical indicators: the Moving Average Convergence Divergence, the Relative Strength Index, and Bollinger Bands. We illustrate our approach with an empirical study of the closing price of Bitcoin. Several evaluations, including a confusion matrix and a Receiver Operating Characteristic curve, are used to assess the model's performance, and the results show a buy/sell signal accuracy of over 92%. These findings demonstrate how machine learning models can help cryptocurrency investors and traders make informed decisions in a very volatile market.
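To make the feature side of this abstract concrete, here is a minimal sketch of two of the named indicators (Bollinger Bands and the Relative Strength Index) together with an up/down direction label. The window sizes, the simple-average RSI variant, and every function name are assumptions chosen for illustration; the abstract does not state the paper's actual parameters or labeling rule.

```python
from statistics import mean, pstdev


def bollinger_bands(closes, window=20, k=2.0):
    """Return (lower, middle, upper) for every full window of closes.

    window and k are common defaults, assumed here for illustration.
    """
    bands = []
    for i in range(window - 1, len(closes)):
        chunk = closes[i - window + 1 : i + 1]
        mid = mean(chunk)
        sd = pstdev(chunk)  # population standard deviation of the window
        bands.append((mid - k * sd, mid, mid + k * sd))
    return bands


def rsi(closes, period=14):
    """Simple-average RSI over the last `period` price changes.

    This is the plain-average variant, not Wilder's smoothed version.
    """
    if len(closes) < period + 1:
        raise ValueError("need at least period + 1 closing prices")
    last = closes[-(period + 1):]
    changes = [b - a for a, b in zip(last, last[1:])]
    avg_gain = sum(c for c in changes if c > 0) / period
    avg_loss = sum(-c for c in changes if c < 0) / period
    if avg_loss == 0:
        return 100.0  # no losses in the window
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def direction_labels(closes):
    """Label each step 1 if the next close is higher, else 0 (assumed rule)."""
    return [1 if nxt > cur else 0 for cur, nxt in zip(closes, closes[1:])]
```

Rows built from such indicator values, paired with the direction labels, could then be passed to any binary classifier; which classifier the study uses is not specified in the abstract.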

Cryptocurrency Price Forecasting Using XGBoost Regressor and Technical Indicators

Jul 16, 2024

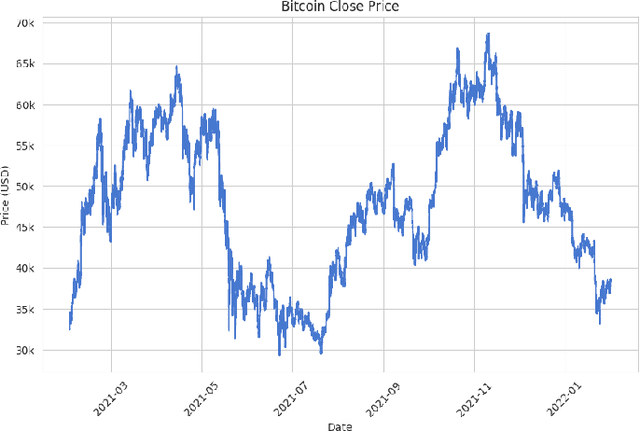

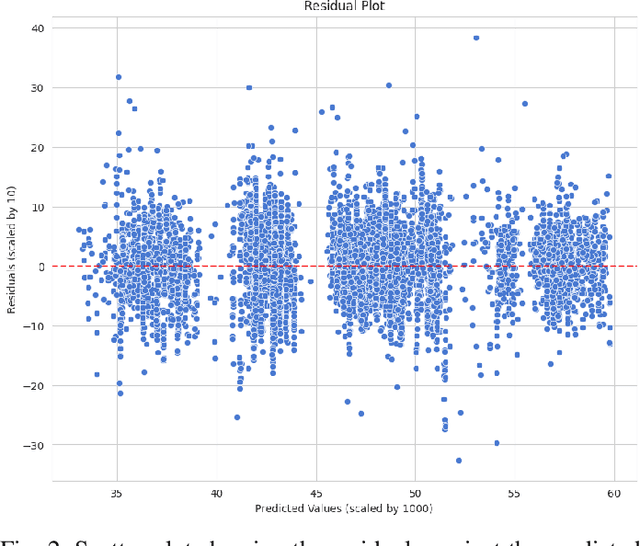

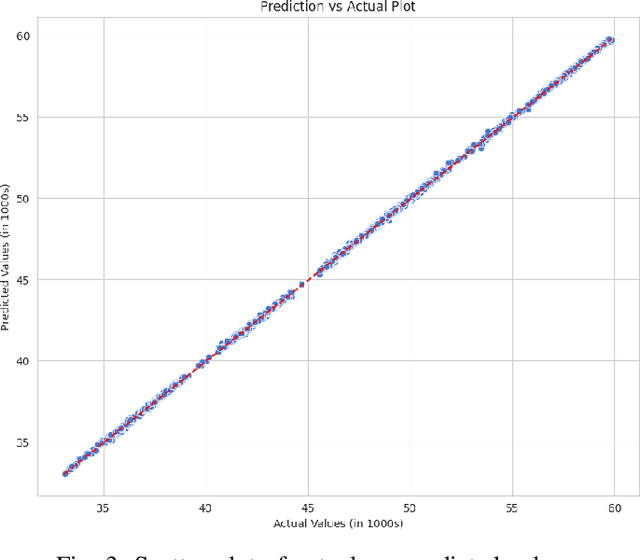

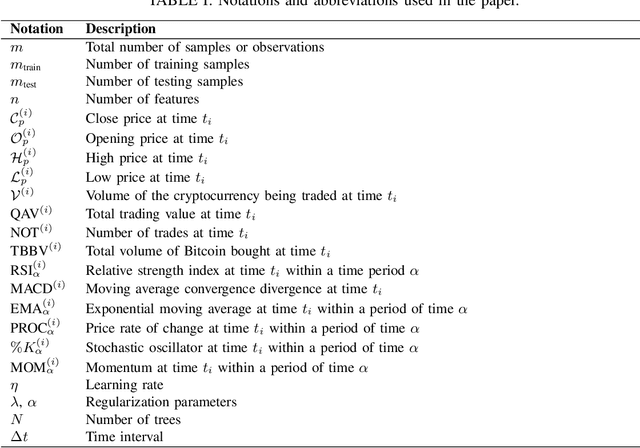

Abstract: The rapid growth of the stock market has attracted many investors due to its potential for significant profits. However, predicting stock prices accurately is difficult because financial markets are complex and constantly changing. This is especially true for the cryptocurrency market, whose extreme volatility makes it challenging for traders and investors to make wise and profitable decisions. This study introduces a machine learning approach to predict cryptocurrency prices. Specifically, we use important technical indicators such as the Exponential Moving Average (EMA) and the Moving Average Convergence Divergence (MACD) as input features to train an XGBoost regressor model. We demonstrate our approach through an analysis of the closing prices of Bitcoin. We evaluate the model's performance through various simulations, showing promising results that suggest its usefulness in guiding cryptocurrency traders and investors in dynamic market conditions.
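The two indicators named in this abstract can be sketched in a few lines. The spans (12, 26, 9) are the conventional MACD defaults and are an assumption here, as are the function names and the choice to seed each EMA with the first value; the abstract does not give the paper's settings.

```python
def ema(values, span):
    """Exponential moving average, seeded with the first value."""
    alpha = 2.0 / (span + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def macd(closes, fast=12, slow=26, signal=9):
    """Return (macd_line, signal_line, histogram) for a closing-price series."""
    fast_ema = ema(closes, fast)
    slow_ema = ema(closes, slow)
    macd_line = [f - s for f, s in zip(fast_ema, slow_ema)]
    signal_line = ema(macd_line, signal)
    histogram = [m - s for m, s in zip(macd_line, signal_line)]
    return macd_line, signal_line, histogram
```

Each time step then yields a feature row such as `[ema_value, macd_value, signal_value]` that a regressor (for example `xgboost.XGBRegressor`, not shown here) could be fitted on to predict the next closing price.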

Fully Distributed Fog Load Balancing with Multi-Agent Reinforcement Learning

May 15, 2024

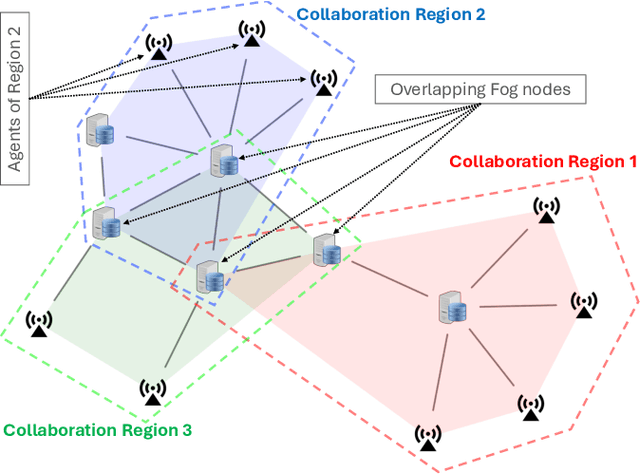

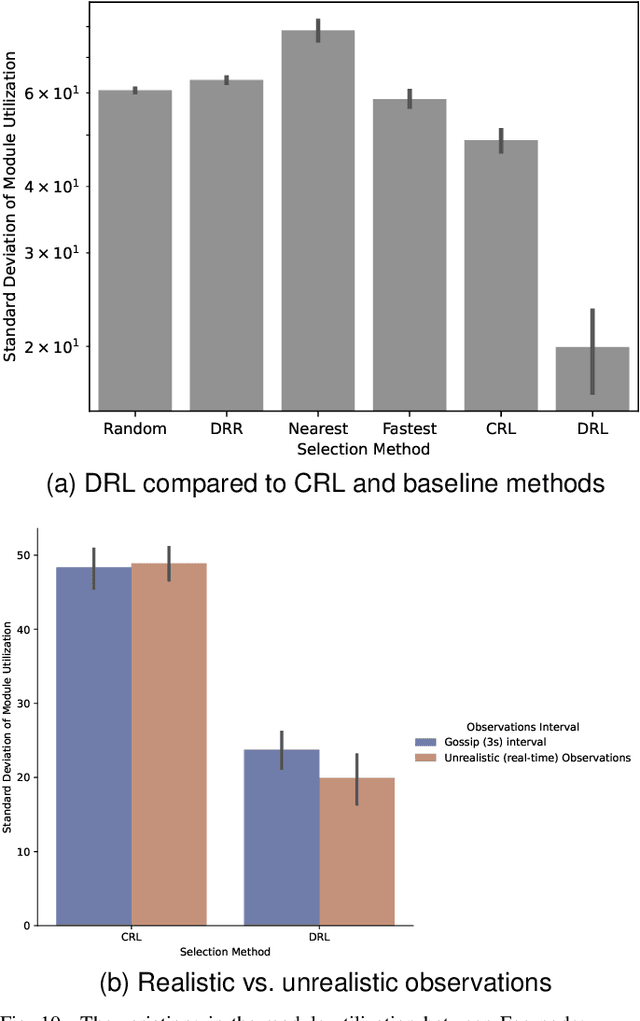

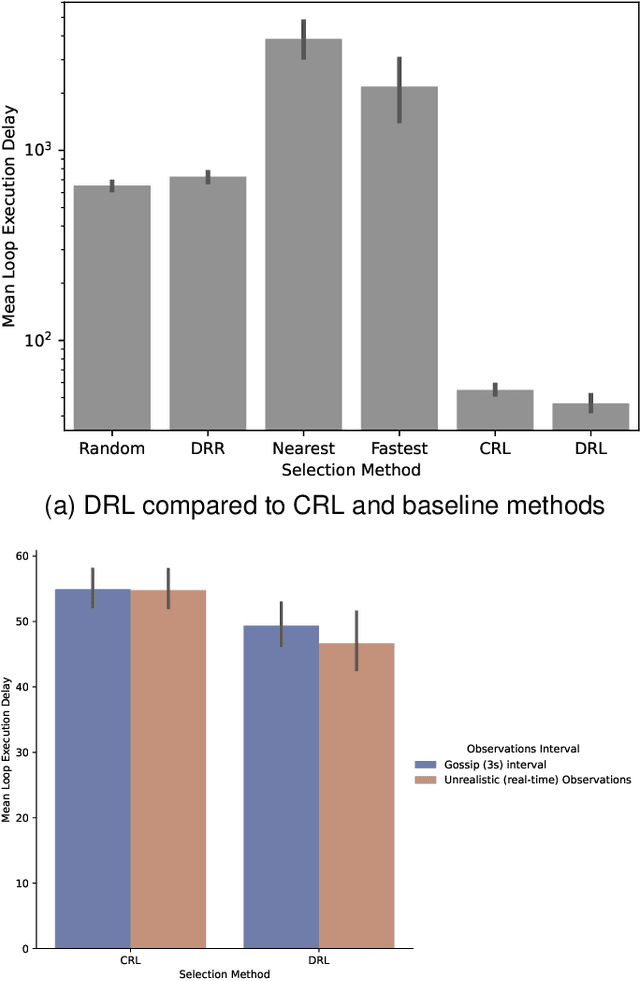

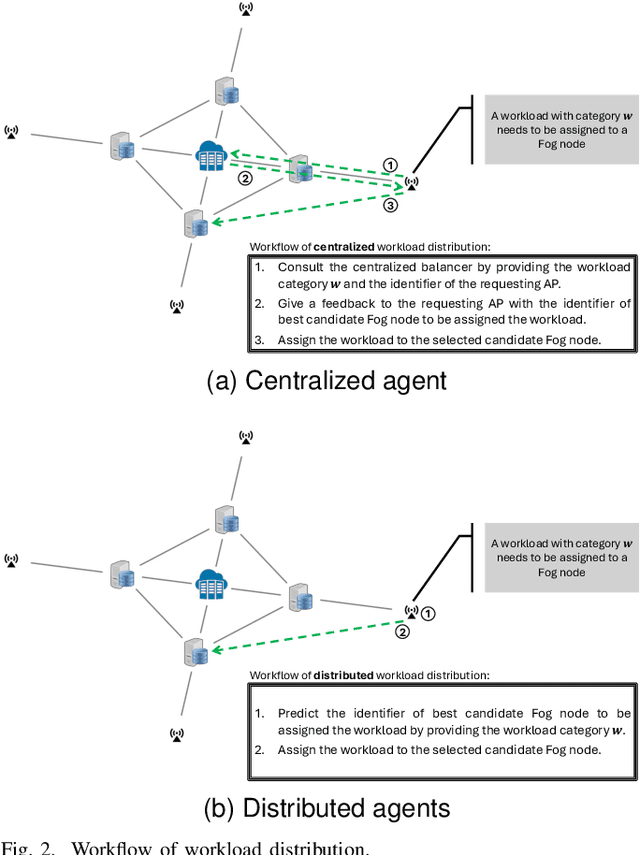

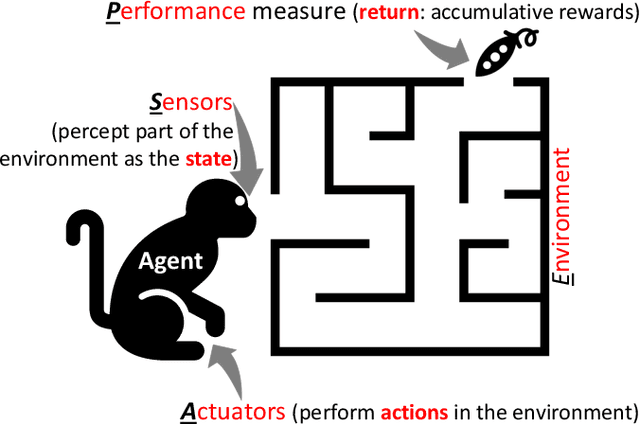

Abstract: Real-time Internet of Things (IoT) applications require real-time support to handle the ever-growing demand for computing resources to process IoT workloads. Fog Computing provides high availability of such resources in a distributed manner. However, these resources must be efficiently managed to distribute unpredictable traffic demands among heterogeneous Fog resources. This paper proposes a fully distributed load-balancing solution with Multi-Agent Reinforcement Learning (MARL) that intelligently distributes IoT workloads to optimize the waiting time while providing fair resource utilization in the Fog network. These agents use transfer learning for life-long self-adaptation to dynamic changes in the environment. By leveraging distributed decision-making, MARL agents effectively minimize the waiting time compared to a single centralized agent solution and other baselines, improving the end-to-end execution delay. Besides the performance gain, a fully distributed solution allows for a global-scale implementation where agents can work independently in small collaboration regions, leveraging nearby local resources. Furthermore, we analyze the impact of observing the state of the environment at a realistic frequency, in contrast to the common but unrealistic assumption in the literature that observations are readily available in real time for every required action. The findings highlight the trade-off between realism and performance when using an interval-based Gossip multicasting protocol instead of assuming real-time observation availability for every generated workload.
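The observation-frequency point can be illustrated with a small sketch in which an agent refreshes its view of the environment only every few decisions and acts on a cached, possibly stale, view in between. This models only the staleness effect, not the Gossip protocol itself; the class name, the `read_state` callback, and the step-based interval are all hypothetical.

```python
class IntervalObserver:
    """Refresh the observed state only every `interval` decisions."""

    def __init__(self, read_state, interval):
        if interval < 1:
            raise ValueError("interval must be at least 1")
        self._read_state = read_state  # hypothetical callback returning the current state
        self._interval = interval
        self._cached = None
        self._steps = 0

    def observe(self):
        # Refresh on the first call and then once every `interval` calls;
        # all other calls return the cached (possibly stale) state.
        if self._steps % self._interval == 0:
            self._cached = self._read_state()
        self._steps += 1
        return self._cached
```

With `interval=1` this reduces to the real-time observation assumption; larger intervals trade observation freshness for lower observation overhead.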

Lifelong Learning for Fog Load Balancing: A Transfer Learning Approach

Oct 08, 2023

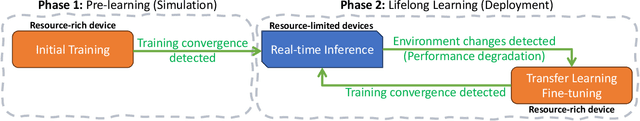

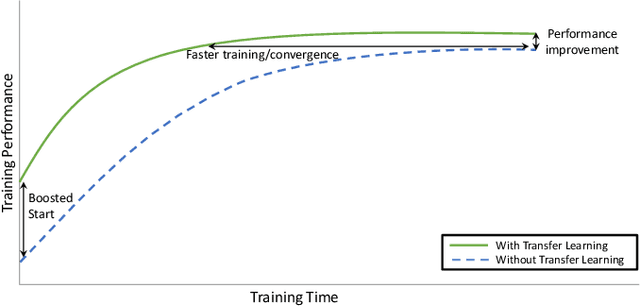

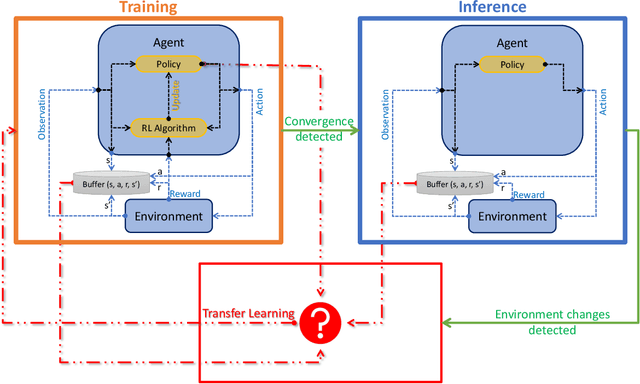

Abstract: Fog computing emerged as a promising paradigm to address the challenges of processing and managing data generated by the Internet of Things (IoT). Load balancing (LB) plays a crucial role in Fog computing environments to optimize the overall system performance. It requires efficient resource allocation to improve resource utilization, minimize latency, and enhance the quality of service for end-users. In this work, we improve the performance of privacy-aware Reinforcement Learning (RL) agents that optimize the execution delay of IoT applications by minimizing the waiting delay. To maintain privacy, these agents optimize the waiting delay by minimizing the change in the number of queued requests in the whole system, i.e., without explicitly observing the actual number of requests queued in each Fog node or the compute resource capabilities of those nodes. Besides improving the performance of these agents, we propose a lifelong learning framework in which lightweight inference models are used during deployment to minimize action delay and are retrained only in case of significant environmental changes. To improve the performance, minimize the training cost, and adapt the agents to those changes, we explore the application of Transfer Learning (TL). TL transfers the knowledge acquired in a source domain to a target domain, enabling the reuse of learned policies and experiences. TL can also be used to pre-train the agent in simulation before fine-tuning it in the real environment; this significantly reduces failure probability compared to learning from scratch in the real environment. To our knowledge, there are no existing efforts in the literature that use TL to address lifelong learning for RL-based Fog LB, a gap that is one of the main obstacles to deploying RL LB solutions in Fog systems.
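The privacy-aware signal and the transfer step described above can be sketched as follows. The reward uses only the change in the system-wide queue total, never per-node queue lengths, and the transfer step simply copies learned values as a warm start. The tabular value store is a toy stand-in (the abstract does not say what model the agents use), and both function names are hypothetical.

```python
import copy


def queue_change_reward(total_before, total_after):
    """Reward is the negative change in the total number of queued requests.

    Only the system-wide total is used, so per-node queue lengths and
    node capabilities are never observed by the agent.
    """
    return -(total_after - total_before)


def warm_start(source_values):
    """Initialize a target agent from a source agent's learned values.

    A deep copy keeps the source agent unchanged while the target is
    fine-tuned in the new environment.
    """
    return copy.deepcopy(source_values)
```

For example, `queue_change_reward(10, 7)` gives a positive reward because the total queue shrank, while a growing queue yields a negative one.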
