Lifelong Learning for Fog Load Balancing: A Transfer Learning Approach


Oct 08, 2023

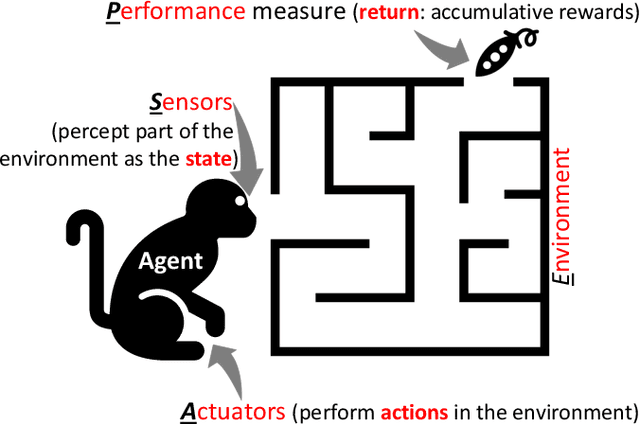

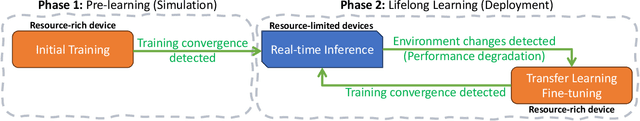

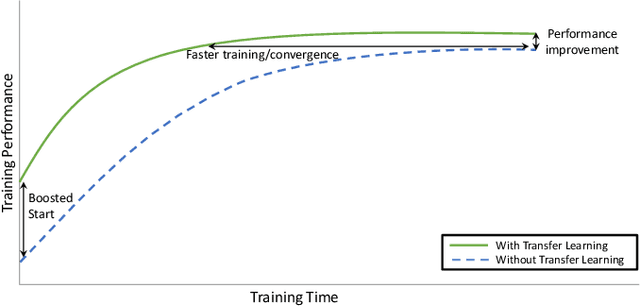

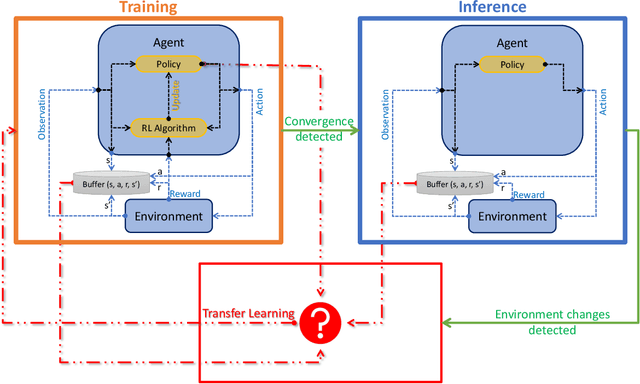

Fog computing has emerged as a promising paradigm to address the challenges of processing and managing data generated by the Internet of Things (IoT). Load balancing (LB) plays a crucial role in Fog computing environments to optimize the overall system performance. It requires efficient resource allocation to improve resource utilization, minimize latency, and enhance the quality of service for end-users. In this work, we improve the performance of privacy-aware Reinforcement Learning (RL) agents that optimize the execution delay of IoT applications by minimizing the waiting delay. To maintain privacy, these agents optimize the waiting delay by minimizing the change in the number of queued requests in the whole system, i.e., without explicitly observing either the actual number of requests queued in each Fog node or the compute resource capabilities of those nodes. Besides improving the performance of these agents, we propose a lifelong learning framework in which lightweight inference models are used during deployment to minimize action delay and are retrained only in case of significant environmental changes. To improve performance, minimize the training cost, and adapt the agents to those changes, we explore the application of Transfer Learning (TL). TL transfers the knowledge acquired from a source domain and applies it to a target domain, enabling the reuse of learned policies and experiences. TL can also be used to pre-train the agent in simulation before fine-tuning it in the real environment; this significantly reduces failure probability compared to learning from scratch in the real environment. To our knowledge, there are no existing efforts in the literature that use TL to address lifelong learning for RL-based Fog LB; this gap is one of the main obstacles to deploying RL LB solutions in Fog systems.
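The ideas in the abstract can be illustrated with a minimal Python sketch. It is not the paper's implementation: the tabular Q-value representation, the function names (`queue_change_reward`, `transfer_q_table`, `needs_retraining`), and the 20% tolerance threshold are all assumptions made here for illustration. The sketch shows a reward that depends only on the change in the system-wide queue total, a warm start that copies a source agent's learned values into a target agent, and a simple check for deciding when a deployed agent might be retrained.

```python
def queue_change_reward(prev_total_queued, curr_total_queued):
    """Hypothetical privacy-aware reward: uses only the change in the
    system-wide number of queued requests, never per-node queue lengths
    or node compute capabilities."""
    return -(curr_total_queued - prev_total_queued)


def transfer_q_table(source_q):
    """Hypothetical transfer step: warm-start a target agent by copying
    the source agent's Q-values (state -> list of action values).
    The copy is independent, so fine-tuning leaves the source intact."""
    return {state: list(values) for state, values in source_q.items()}


def needs_retraining(recent_rewards, baseline_reward, tolerance=0.2):
    """Hypothetical drift check: flag a significant environmental change
    when the mean recent reward falls more than `tolerance` (a fraction
    of the baseline magnitude) below the baseline."""
    if not recent_rewards:
        return False
    mean_recent = sum(recent_rewards) / len(recent_rewards)
    return mean_recent < baseline_reward - tolerance * abs(baseline_reward)


# Usage: an agent trained in simulation seeds the deployed agent.
source_q = {"s0": [0.5, -1.0], "s1": [0.0, 2.0]}
target_q = transfer_q_table(source_q)
target_q["s0"][0] = 0.9  # fine-tuning the target does not touch the source

reward = queue_change_reward(prev_total_queued=10, curr_total_queued=13)
retrain = needs_retraining([-5.0, -6.0], baseline_reward=-2.0)
```

In this sketch the deployed agent keeps acting with its current values and a retraining pass is triggered only when `needs_retraining` returns `True`, which mirrors the abstract's idea of retraining only after significant environmental changes.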
