Luoxiao Yang

ViTime: A Visual Intelligence-Based Foundation Model for Time Series Forecasting

Jul 10, 2024

Abstract: The success of large pretrained models in natural language processing (NLP) and computer vision (CV) has opened new avenues for constructing foundation models for time series forecasting (TSF). Traditional TSF foundation models rely heavily on numerical data fitting. In contrast, the human brain is inherently skilled at processing visual information and prefers to predict future trends by observing visualized sequences. From a biomimetic perspective, utilizing models to directly process numerical sequences might not be the most effective route to achieving Artificial General Intelligence (AGI). This paper proposes ViTime, a novel Visual Intelligence-based foundation model for TSF. ViTime overcomes the limitations of numerical time series data fitting by utilizing visual data processing paradigms and employs an innovative data synthesis method during training, called Real Time Series (RealTS). Experiments on a diverse set of previously unseen forecasting datasets demonstrate that ViTime achieves state-of-the-art zero-shot performance, even surpassing the best individually trained supervised models in some situations. These findings suggest that visual intelligence can significantly enhance time series analysis and forecasting, paving the way for more advanced and versatile models in the field. The code for our framework is accessible at https://github.com/IkeYang/ViTime.

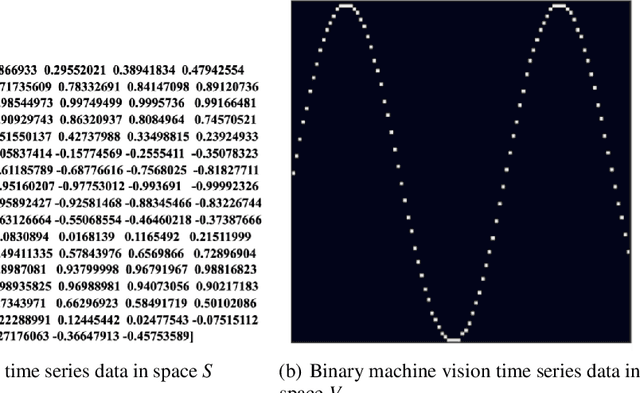

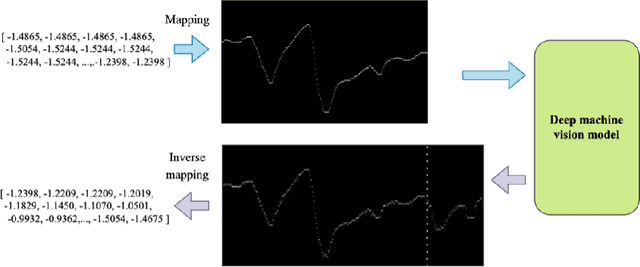

Your time series is worth a binary image: machine vision assisted deep framework for time series forecasting

Feb 28, 2023

Abstract: Time series forecasting (TSF) has been a challenging research area, and various models have been developed to address this task. However, almost all of these models are trained with numerical time series data, which is not as effectively processed by the neural system as visual information. To address this challenge, this paper proposes a novel machine vision assisted deep time series analysis (MV-DTSA) framework. The MV-DTSA framework operates by analyzing time series data in a novel binary machine vision time series metric space, which includes a mapping and an inverse mapping function from the numerical time series space to the binary machine vision space, and a deep machine vision model designed to address the TSF task in the binary space. A comprehensive computational analysis demonstrates that the proposed MV-DTSA framework outperforms state-of-the-art deep TSF models, without requiring sophisticated data decomposition or model customization. The code for our framework is accessible at https://github.com/IkeYang/machine-vision-assisted-deep-time-series-analysis-MV-DTSA-.
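
To make the idea of a mapping and an inverse mapping between the numerical space and a binary image space more concrete, here is a minimal sketch. It is not the MV-DTSA implementation: the function names, the one-pixel-per-column encoding, and the fixed value range `[lo, hi]` are all assumptions chosen for illustration.

```python
def series_to_binary_image(series, height, lo, hi):
    """Map each value to a single 'on' pixel in its column (row 0 = lowest bin).

    Assumed encoding: the value range [lo, hi] is split into `height` equal bins.
    """
    image = [[0] * len(series) for _ in range(height)]
    for t, value in enumerate(series):
        clipped = min(max(value, lo), hi)  # keep the value inside the image
        row = min(int((clipped - lo) / (hi - lo) * height), height - 1)
        image[row][t] = 1
    return image


def binary_image_to_series(image, lo, hi):
    """Inverse mapping: read back the centre of the bin that is 'on' in each column."""
    height = len(image)
    width = len(image[0])
    bin_width = (hi - lo) / height
    series = []
    for t in range(width):
        row = next(r for r in range(height) if image[r][t] == 1)
        series.append(lo + (row + 0.5) * bin_width)
    return series


if __name__ == "__main__":
    original = [0.0, 0.25, 0.5, 0.99]
    img = series_to_binary_image(original, height=4, lo=0.0, hi=1.0)
    print(binary_image_to_series(img, lo=0.0, hi=1.0))
```

Under this assumed encoding the round trip is lossy: each recovered value differs from the original by at most half a bin width, so a taller image gives a finer reconstruction.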

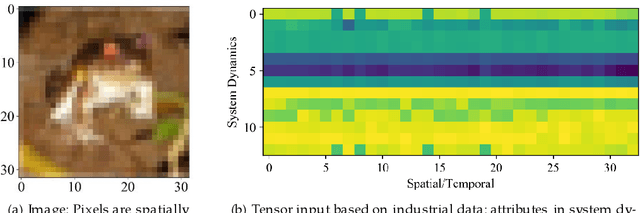

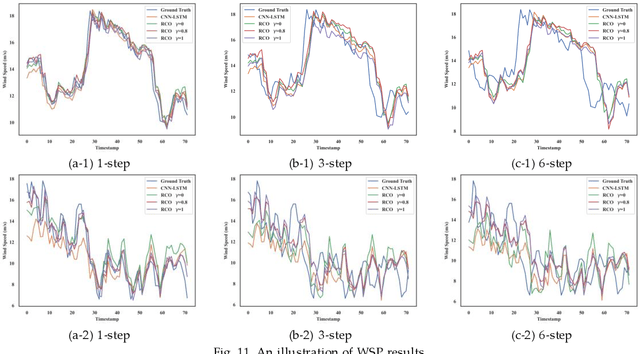

Rubik's Cube Operator: A Plug And Play Permutation Module for Better Arranging High Dimensional Industrial Data in Deep Convolutional Processes

Mar 24, 2022

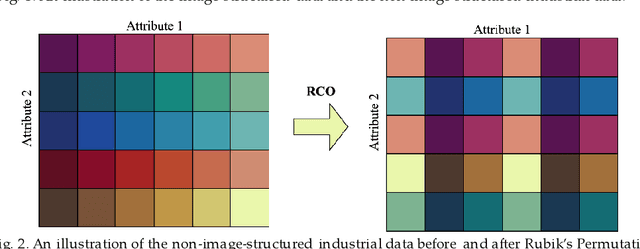

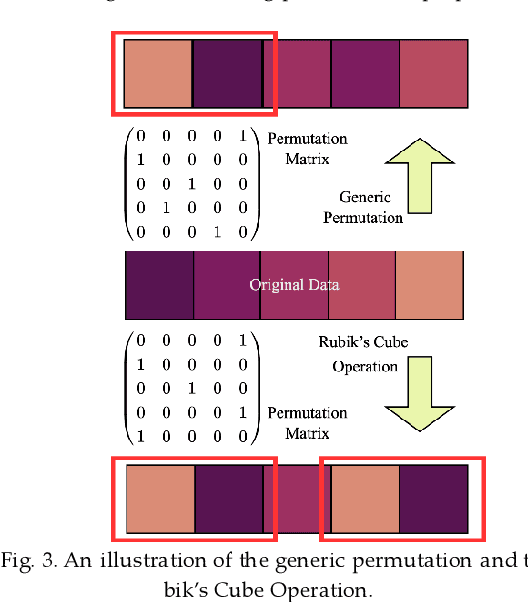

Abstract: The convolutional neural network (CNN) has been widely applied to process the industrial data based tensor input, which integrates data records of distributed industrial systems from the spatial, temporal, and system dynamics aspects. However, unlike images, information in the industrial data based tensor is not necessarily spatially ordered. Thus, directly applying CNNs is ineffective. To tackle this issue, we propose a plug and play module, the Rubik's Cube Operator (RCO), which adaptively permutes the data organization of the industrial data based tensor into an optimal or suboptimal order of attributes before it is processed by CNNs, and which can be updated together with the subsequent CNNs via a gradient-based optimizer. The proposed RCO maintains K binary and right stochastic permutation matrices to permute attributes along K axes of the input industrial data based tensor. A novel learning process is proposed to enable learning permutation matrices from data, where the Gumbel-Softmax is employed to reparameterize elements of the permutation matrices, and a soft regularization loss is proposed and added to the task-specific loss to ensure the feature diversity of the permuted data. We verify the effectiveness of the proposed RCO by considering two representative learning tasks that process industrial data via CNNs, wind power prediction (WPP) and wind speed prediction (WSP), from the renewable energy domain. Computational experiments are conducted on four datasets collected from different wind farms, and the results demonstrate that the proposed RCO can improve the performance of CNN based networks significantly.
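
As a rough illustration of how the Gumbel-Softmax can relax a permutation matrix into something differentiable, the sketch below samples a right stochastic matrix (each row sums to 1) from a matrix of logits and applies it to a vector of attributes. It is not the RCO code: the function names, the clamping constant, and the example logits are assumptions, and a row-wise relaxation alone does not guarantee that two rows select different attributes, which is presumably the role of the regularization loss described in the abstract.

```python
import math
import random


def gumbel_softmax_rows(logits, temperature, rng):
    """Sample a relaxed (right stochastic) selection matrix, one softmax per row."""
    rows = []
    for row in logits:
        noisy = []
        for logit in row:
            # Clamp the uniform sample so both logarithms stay finite.
            u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
            gumbel = -math.log(-math.log(u))
            noisy.append((logit + gumbel) / temperature)
        peak = max(noisy)  # subtract the max for numerical stability
        exps = [math.exp(v - peak) for v in noisy]
        total = sum(exps)
        rows.append([e / total for e in exps])
    return rows


def permute_attributes(matrix, attributes):
    """Apply the (relaxed) permutation: out[i] = sum_j matrix[i][j] * attributes[j]."""
    return [sum(w * a for w, a in zip(row, attributes)) for row in matrix]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical logits that strongly prefer the order (2, 0, 1).
    logits = [[0.0, 0.0, 50.0], [50.0, 0.0, 0.0], [0.0, 50.0, 0.0]]
    soft_perm = gumbel_softmax_rows(logits, temperature=0.1, rng=rng)
    print(permute_attributes(soft_perm, [10.0, 20.0, 30.0]))
```

Lower temperatures push each row toward a one-hot vector, so the relaxed matrix approaches a binary one while gradients can still flow through the logits in an autodiff framework.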

Generative Wind Power Curve Modeling Via Machine Vision: A Self-learning Deep Convolutional Network Based Method

Aug 19, 2021

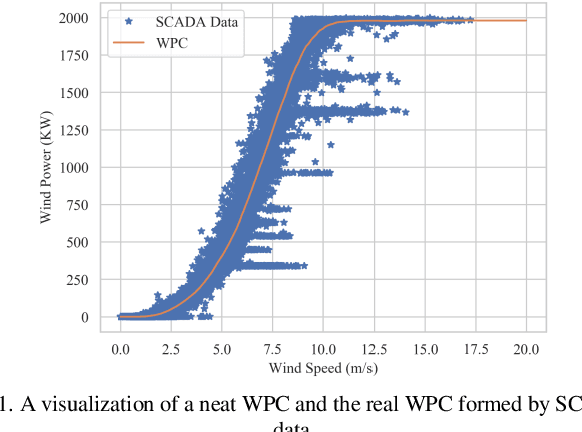

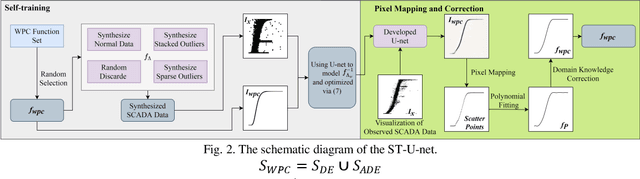

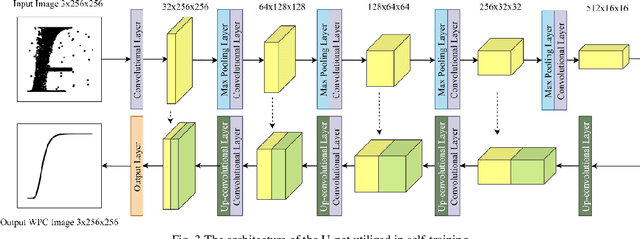

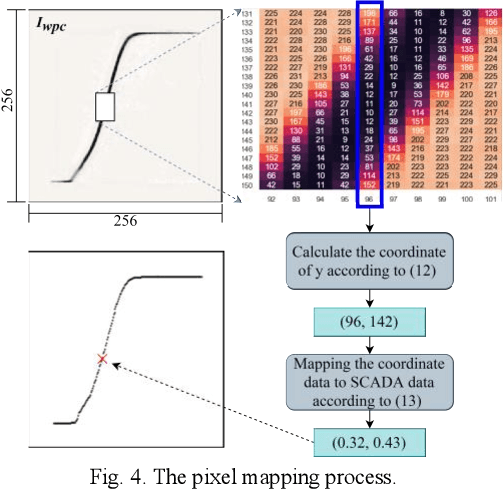

Abstract: This paper develops a novel self-training U-net (STU-net) based method for automated wind power curve (WPC) model generation without requiring data pre-processing. The self-training (ST) process of STU-net has two steps. First, different from traditional studies regarding the WPC modeling as a curve fitting problem, in this paper, we renovate the WPC modeling formulation from a machine vision aspect. To develop sufficiently diversified training samples, we synthesize supervisory control and data acquisition (SCADA) data based on a set of S-shape functions depicting WPCs. These synthesized SCADA data and WPC functions are visualized as images and paired as training samples (I_x, I_wpc). A U-net is then developed to approximate the model recovering I_wpc from I_x. The developed U-net is applied to observed SCADA data and can successfully generate the I_wpc. Moreover, we develop a pixel mapping and correction process to derive a mathematical form f_wpc representing the I_wpc generated previously. The proposed STU-net needs to be trained only once and does not require any data preprocessing in applications. Numerical experiments based on 76 wind turbines (WTs) are conducted to validate the superiority of the proposed method by benchmarking against classical WPC modeling methods. To demonstrate the repeatability of the presented research, we release our code at https://github.com/IkeYang/STU-net.
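
The synthesis step described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the STU-net code: a logistic function stands in for the S-shape WPC, wind speeds are drawn uniformly, Gaussian noise models scatter, and the function names, parameter values, and rasterization scheme are all hypothetical.

```python
import math
import random


def logistic_wpc(wind_speed, rated_power, midpoint, steepness):
    """An assumed S-shape power curve: a logistic function of wind speed."""
    return rated_power / (1.0 + math.exp(-steepness * (wind_speed - midpoint)))


def synthesize_scada(n_points, rated_power, midpoint, steepness, noise_std, rng,
                     max_speed=25.0):
    """Draw noisy (wind speed, power) pairs scattered around the S-shape curve."""
    samples = []
    for _ in range(n_points):
        speed = rng.uniform(0.0, max_speed)
        power = logistic_wpc(speed, rated_power, midpoint, steepness)
        power += rng.gauss(0.0, noise_std)
        samples.append((speed, min(max(power, 0.0), rated_power)))
    return samples


def rasterize(points, size, max_speed, max_power):
    """Render (speed, power) points as a size x size binary image (row 0 = zero power)."""
    image = [[0] * size for _ in range(size)]
    for speed, power in points:
        col = min(int(speed / max_speed * size), size - 1)
        row = min(int(power / max_power * size), size - 1)
        image[row][col] = 1
    return image


if __name__ == "__main__":
    rng = random.Random(0)
    scada = synthesize_scada(200, 2000.0, 9.0, 0.8, 50.0, rng)
    # I_x: the noisy scatter; I_wpc: the clean curve sampled on a fine grid.
    i_x = rasterize(scada, 32, 25.0, 2000.0)
    curve = [(v / 10.0, logistic_wpc(v / 10.0, 2000.0, 9.0, 0.8)) for v in range(250)]
    i_wpc = rasterize(curve, 32, 25.0, 2000.0)
    print(sum(map(sum, i_x)), sum(map(sum, i_wpc)))
```

Varying `midpoint`, `steepness`, and `noise_std` across samples would be one way to obtain diversified training pairs in the spirit of the abstract.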
