Luke Thorburn

The Moral Case for Using Language Model Agents for Recommendation

Oct 15, 2024

Abstract: Our information and communication environment has fallen short of the ideals that networked global communication might have served. Identifying all the causes of its pathologies is difficult, but existing recommender systems very likely play a contributing role. In this paper, which draws on the normative tools of philosophy of computing, informed by empirical and technical insights from natural language processing and recommender systems, we make the moral case for an alternative approach. We argue that existing recommenders incentivise mass surveillance, concentrate power, fall prey to narrow behaviourism, and compromise user agency. Rather than just trying to avoid algorithms entirely, or to make incremental improvements to the current paradigm, researchers and engineers should explore an alternative paradigm: the use of language model (LM) agents to source and curate content that matches users' preferences and values, expressed in natural language. The use of LM agents for recommendation poses its own challenges, including those related to candidate generation, computational efficiency, preference modelling, and prompt injection. Nonetheless, if implemented successfully LM agents could: guide us through the digital public sphere without relying on mass surveillance; shift power away from platforms towards users; optimise for what matters instead of just for behavioural proxies; and scaffold our agency instead of undermining it.

Information Loss in Euclidean Preference Models

Aug 17, 2022

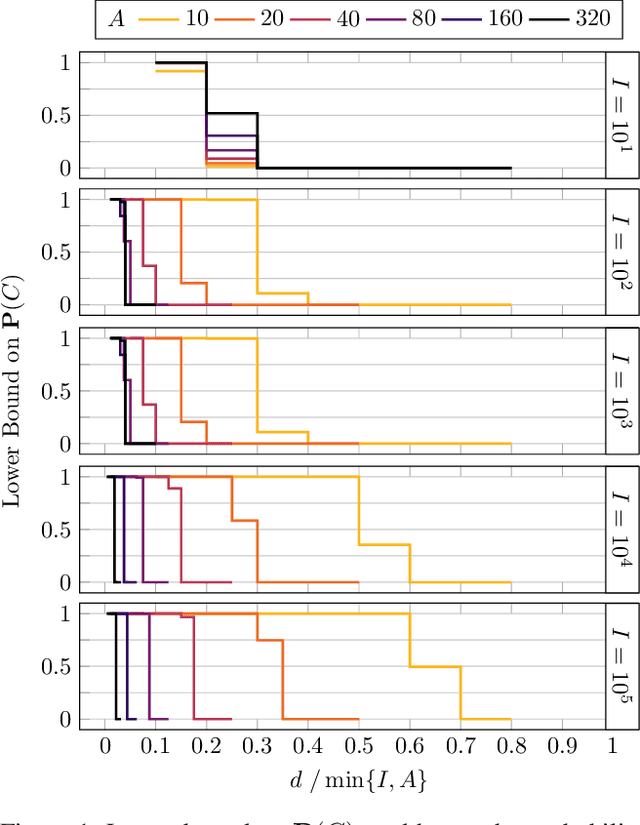

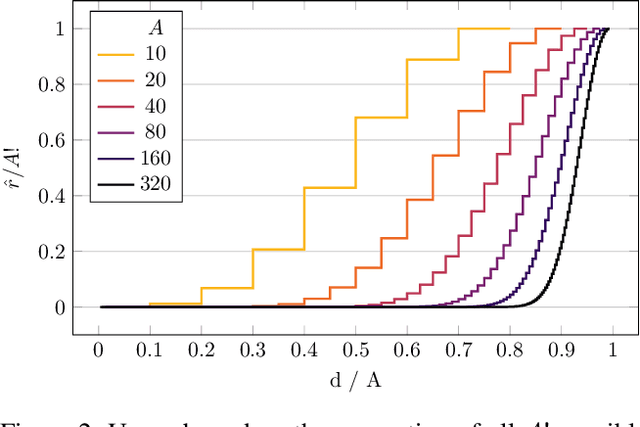

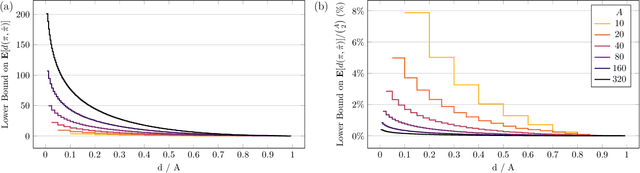

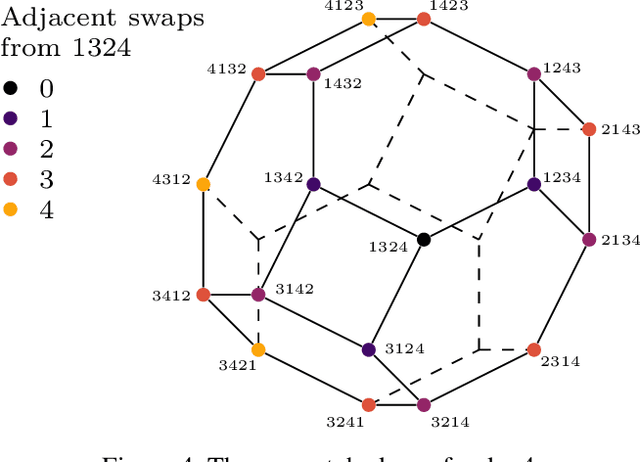

Abstract: Spatial models of preference, in the form of vector embeddings, are learned by many deep learning systems including recommender systems. Often these models are assumed to approximate a Euclidean structure, where an individual prefers alternatives positioned closer to their "ideal point", as measured by the Euclidean metric. However, Bogomolnaia and Laslier (2007) showed that there exist ordinal preference profiles that cannot be represented with this structure if the Euclidean space has two fewer dimensions than there are individuals or alternatives. We extend this result, showing that there are realistic situations in which almost all preference profiles cannot be represented with the Euclidean model, and derive a theoretical lower bound on the information lost when approximating non-representable preferences with the Euclidean model. Our results have implications for the interpretation and use of vector embeddings, because in some cases close approximation of arbitrary, true preferences is possible only if the dimensionality of the embeddings is a substantial fraction of the number of individuals or alternatives.
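As a toy illustration of the Euclidean preference model described in this abstract (not the paper's own construction), the sketch below ranks alternatives by squared Euclidean distance to an individual's ideal point, then sweeps ideal points along a one-dimensional space to see which orderings of three alternatives can arise. The alternative names, their positions, and the helper `euclidean_ranking` are all hypothetical choices made for this example.

```python
from itertools import permutations


def euclidean_ranking(ideal, alternatives):
    """Return alternative names ordered from nearest to farthest from `ideal`."""
    def sq_dist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))
    return tuple(sorted(alternatives, key=lambda name: sq_dist(ideal, alternatives[name])))


# Hypothetical one-dimensional embedding of three alternatives.
alternatives = {"a": (0.0,), "b": (1.0,), "c": (3.0,)}

# Sweep candidate ideal points across the line from -1.0 to just under 5.0.
ideal_points = [(-1.0 + 0.01 * i,) for i in range(600)]

# Orderings that some ideal point in the sweep can produce.
representable = {euclidean_ranking(p, alternatives) for p in ideal_points}

# Orderings that no ideal point in the sweep produces.
missing = set(permutations(alternatives)) - representable

print(sorted(representable))
print(sorted(missing))
```

With these positions, only four of the six possible orderings appear. The two that rank the middle alternative `b` last never occur, so any individual holding one of those preferences cannot be placed on this line without loss.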

Online Handbook of Argumentation for AI: Volume 2

Jun 23, 2021

Abstract: This volume contains revised versions of the papers selected for the second volume of the Online Handbook of Argumentation for AI (OHAAI). Previously, formal theories of argument and argument interaction have been proposed and studied, and this has led to the more recent study of computational models of argument. Argumentation, as a field within artificial intelligence (AI), is highly relevant for researchers interested in symbolic representations of knowledge and defeasible reasoning. The purpose of this handbook is to provide an open access and curated anthology for the argumentation research community. OHAAI is designed to serve as a research hub to keep track of the latest and upcoming PhD-driven research on the theory and application of argumentation in all areas related to AI.
