Lukas Mandrake

Jet Propulsion Laboratory, California Institute of Technology

Science Autonomy using Machine Learning for Astrobiology

Apr 01, 2025

Abstract: In recent decades, artificial intelligence (AI), including machine learning (ML), has become vital for space missions, enabling rapid data processing, advanced pattern recognition, and enhanced insight extraction. These tools are especially valuable in astrobiology applications, where models must distinguish biotic patterns from complex abiotic backgrounds. Advancing the integration of autonomy through AI and ML into space missions is a complex challenge, and we believe that by focusing on key areas, we can make significant progress and offer practical recommendations for tackling these obstacles.

Onboard Science Instrument Autonomy for the Detection of Microscopy Biosignatures on the Ocean Worlds Life Surveyor

Apr 25, 2023

Abstract: The quest to find extraterrestrial life is a critical scientific endeavor with civilization-level implications. Icy moons in our solar system are promising targets for exploration because their liquid oceans make them potential habitats for microscopic life. However, the lack of a precise definition of life poses a fundamental challenge to formulating detection strategies. To increase the chances of unambiguous detection, a suite of complementary instruments must sample multiple independent biosignatures (e.g., composition, motility/behavior, and visible structure). Such an instrument suite could generate 10,000x more raw data than is possible to transmit from distant ocean worlds like Enceladus or Europa. To address this bandwidth limitation, Onboard Science Instrument Autonomy (OSIA) is an emerging discipline of flight systems capable of evaluating, summarizing, and prioritizing observational instrument data to maximize science return. We describe two OSIA implementations developed as part of the Ocean Worlds Life Surveyor (OWLS) prototype instrument suite at the Jet Propulsion Laboratory. The first identifies life-like motion in digital holographic microscopy videos, and the second identifies cellular structure and composition via innate and dye-induced fluorescence. Flight-like requirements and computational constraints were used to lower barriers to infusion, similar to those available on the Mars helicopter, "Ingenuity." We evaluated the OSIA's performance using simulated and laboratory data and conducted a live field test at the hypersaline Mono Lake planetary analog site. Our study demonstrates the potential of OSIA for enabling biosignature detection and provides insights and lessons learned for future mission concepts aimed at exploring the outer solar system.

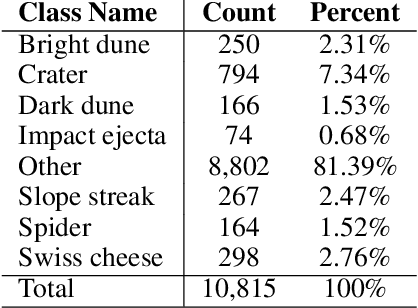

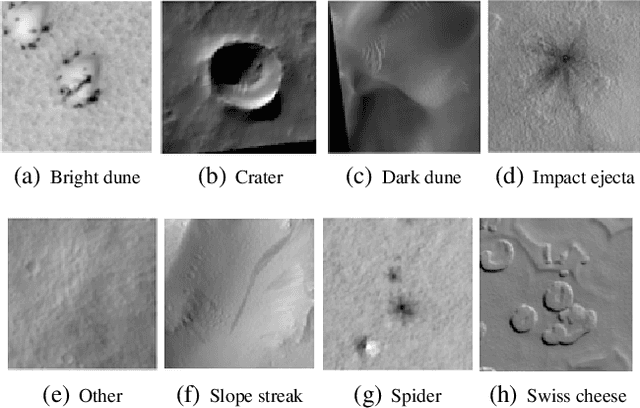

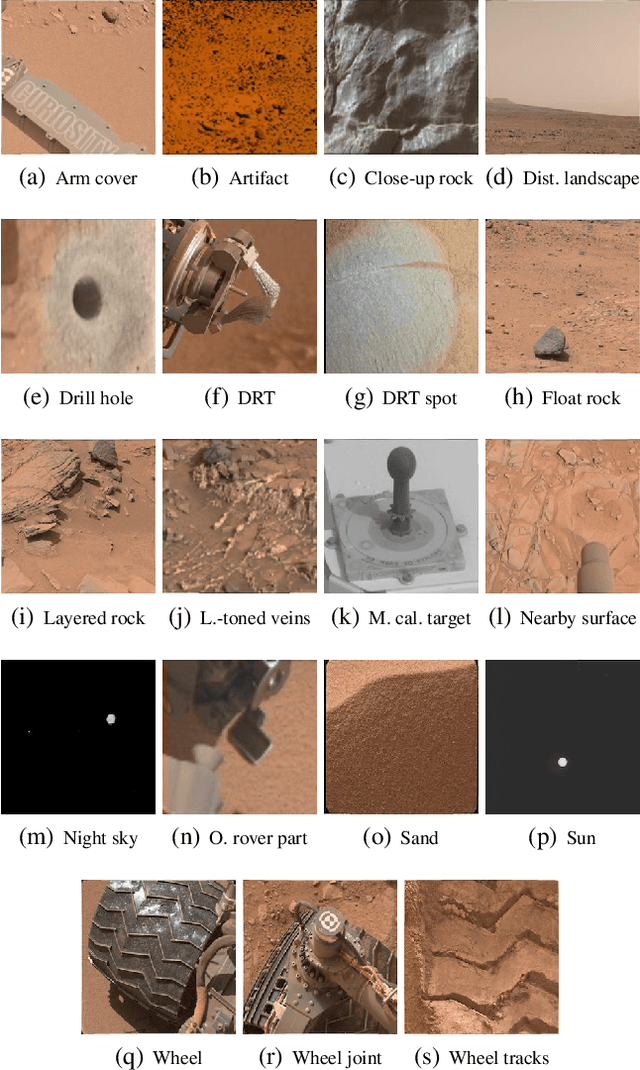

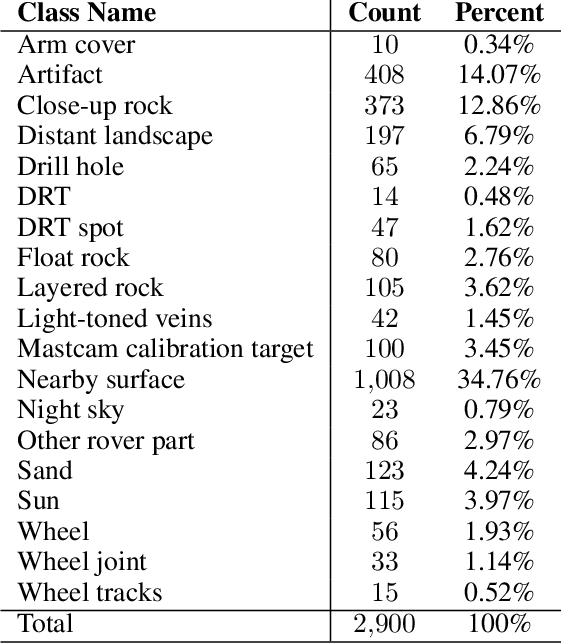

Mars Image Content Classification: Three Years of NASA Deployment and Recent Advances

Feb 09, 2021

Abstract: The NASA Planetary Data System hosts millions of images acquired from the planet Mars. To help users quickly find images of interest, we have developed and deployed content-based classification and search capabilities for Mars orbital and surface images. The deployed systems are publicly accessible using the PDS Image Atlas. We describe the process of training, evaluating, calibrating, and deploying updates to two CNN classifiers for images collected by Mars missions. We also report on three years of deployment including usage statistics, lessons learned, and plans for the future.

Discrete linear-complexity reinforcement learning in continuous action spaces for Q-learning algorithms

Jul 23, 2018

Abstract: In this article, we sketch an algorithm that extends Q-learning algorithms to the continuous action space domain. Our method is based on the discretization of the action space. Unlike commonly used discretization methods, our method does not increase the dimensionality of the discretized problem exponentially. We will show that our proposed method is linear in complexity when the discretization is employed. The variant of the Q-learning algorithm presented in this work, labeled Finite Step Q-Learning (FSQ), can be deployed to both shallow and deep neural network architectures.
