Steffen Mauceri

Conditional Diffusion-Based Retrieval of Atmospheric CO2 from Earth Observing Spectroscopy

Apr 23, 2025

Abstract: Satellite-based estimates of greenhouse gas (GHG) properties from observations of reflected solar spectra are integral for understanding and monitoring complex terrestrial systems and their impact on the carbon cycle due to their near-global coverage. Known as retrieval, making GHG concentration estimations from these observations is a non-linear Bayesian inverse problem, which is operationally solved using a computationally expensive algorithm called Optimal Estimation (OE), providing a Gaussian approximation to a non-Gaussian posterior. This leads to issues in solver algorithm convergence, and to unrealistically confident uncertainty estimates for the retrieved quantities. Upcoming satellite missions will provide orders of magnitude more data than the current constellation of GHG observers. Development of fast and accurate retrieval algorithms with robust uncertainty quantification is therefore critical. Doing so stands to provide substantial climate impact by moving towards the goal of near-continuous, real-time global monitoring of carbon sources and sinks, which is essential for policy making. To achieve this goal, we propose a diffusion-based approach to flexibly retrieve a Gaussian or non-Gaussian posterior for NASA's Orbiting Carbon Observatory-2 spectrometer, while providing a substantial computational speed-up over the current operational state-of-the-art.

* Published as a workshop paper at the ICLR 2025 Workshop on "Tackling Climate Change with Machine Learning". https://www.climatechange.ai/papers/iclr2025/12

Onboard Science Instrument Autonomy for the Detection of Microscopy Biosignatures on the Ocean Worlds Life Surveyor

Apr 25, 2023

Abstract: The quest to find extraterrestrial life is a critical scientific endeavor with civilization-level implications. Icy moons in our solar system are promising targets for exploration because their liquid oceans make them potential habitats for microscopic life. However, the lack of a precise definition of life poses a fundamental challenge to formulating detection strategies. To increase the chances of unambiguous detection, a suite of complementary instruments must sample multiple independent biosignatures (e.g., composition, motility/behavior, and visible structure). Such an instrument suite could generate 10,000x more raw data than is possible to transmit from distant ocean worlds like Enceladus or Europa. To address this bandwidth limitation, Onboard Science Instrument Autonomy (OSIA) is an emerging discipline of flight systems capable of evaluating, summarizing, and prioritizing observational instrument data to maximize science return. We describe two OSIA implementations developed as part of the Ocean Worlds Life Surveyor (OWLS) prototype instrument suite at the Jet Propulsion Laboratory. The first identifies life-like motion in digital holographic microscopy videos, and the second identifies cellular structure and composition via innate and dye-induced fluorescence. Flight-like requirements and computational constraints were used to lower barriers to infusion, similar to those available on the Mars helicopter, "Ingenuity." We evaluated the OSIA's performance using simulated and laboratory data and conducted a live field test at the hypersaline Mono Lake planetary analog site. Our study demonstrates the potential of OSIA for enabling biosignature detection and provides insights and lessons learned for future mission concepts aimed at exploring the outer solar system.

K-textures, a self supervised hard clustering deep learning algorithm for satellite images segmentation

May 18, 2022

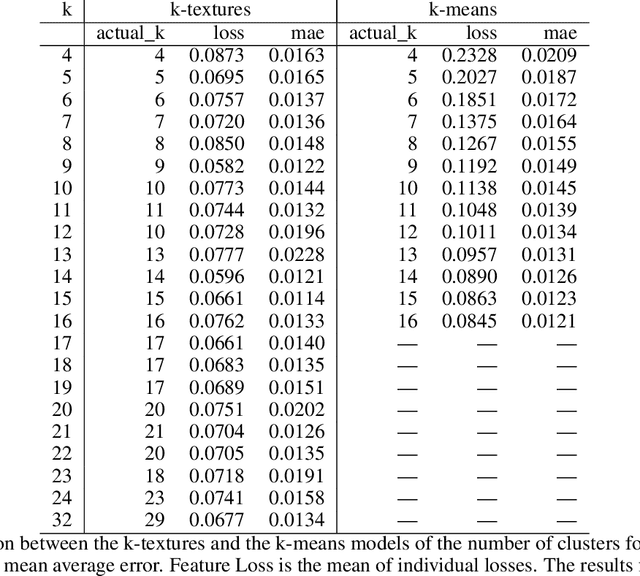

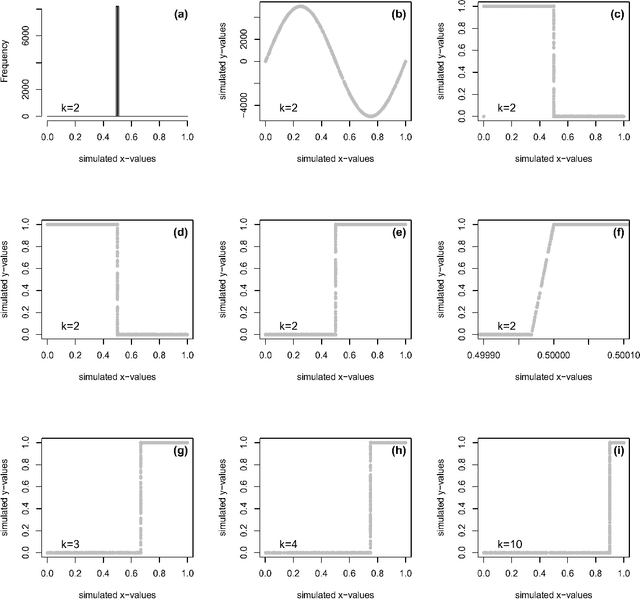

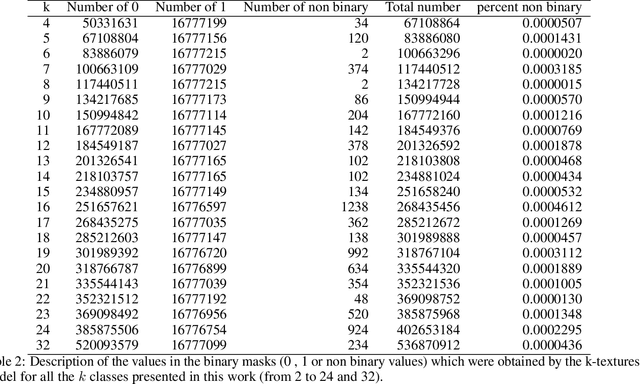

Abstract: Self-supervised deep learning algorithms that, like k-means, segment an image into a fixed number of hard labels while relying only on deep learning techniques are still lacking. Here, we introduce the k-textures algorithm, which provides self-supervised segmentation of a 4-band image (RGB-NIR) into $k$ classes. An example of its application on high-resolution Planet satellite imagery is given. Our algorithm shows that discrete search is feasible using convolutional neural networks (CNN) and gradient descent. The model detects $k$ hard clustering classes, represented in the model as $k$ discrete binary masks and their associated $k$ independently generated textures, which combined are a simulation of the original image. The similarity loss is the mean squared error between the features of the original and the simulated image, both extracted from the penultimate convolutional block of the Keras 'imagenet' pretrained VGG-16 model and a custom feature extractor made with Planet data. The main advances of the k-textures model are as follows. First, the $k$ discrete binary masks are obtained inside the model using gradient descent; the model generates them through a novel method based on a hard sigmoid activation function. Second, it provides hard clustering classes: each pixel has only one class. Finally, in comparison to k-means, where each pixel is considered independently, contextual information is also considered here, and each class is associated not only with similar values in the color channels but with a texture. Our approach is designed to ease the production of training samples for satellite image segmentation. The model codes and weights are available at https://doi.org/10.5281/zenodo.6359859
