Lukas Jendele

Learning Functionally Decomposed Hierarchies for Continuous Control Tasks

Feb 14, 2020

Abstract: Solving long-horizon sequential decision-making tasks in environments with sparse rewards is a longstanding problem in reinforcement learning (RL) research. Hierarchical Reinforcement Learning (HRL) promises to enhance the capabilities of RL agents by operating on different levels of temporal abstraction. Despite the success of recent works in dealing with inherent nonstationarity and sample complexity, it remains difficult to generalize to unseen environments and to transfer different layers of the policy to other agents. In this paper, we propose a novel HRL architecture, Hierarchical Decompositional Reinforcement Learning (HiDe), which decomposes the hierarchical layers into independent subtasks yet allows all layers to be trained jointly in an end-to-end manner. The main insight is to combine a control policy on a lower level with an image-based planning policy on a higher level. We evaluate our method on various complex continuous control tasks, demonstrating that generalization across environments and transfer of higher-level policies, such as from a simple ball to a complex humanoid, can be achieved. Videos are available at https://sites.google.com/view/hide-rl.

Adversarial Augmentation for Enhancing Classification of Mammography Images

Feb 20, 2019

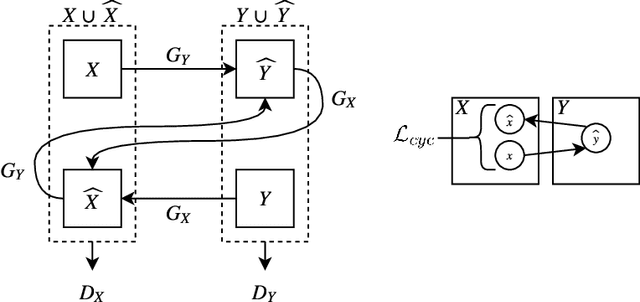

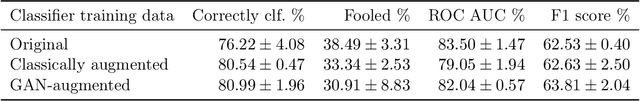

Abstract: Supervised deep learning relies on the assumption that enough training data is available, which presents a problem for its application to several fields, such as medical imaging. Using a binary image classification task (breast cancer recognition) as an example, we show that pretraining a generative model for meaningful image augmentation helps enhance the performance of the resulting classifier. By augmenting the data, performance on downstream classification tasks could be improved even with a relatively small training set. We show that this "adversarial augmentation" yields promising results compared to classical image augmentation for breast cancer classification.

Injecting and removing malignant features in mammography with CycleGAN: Investigation of an automated adversarial attack using neural networks

Nov 19, 2018

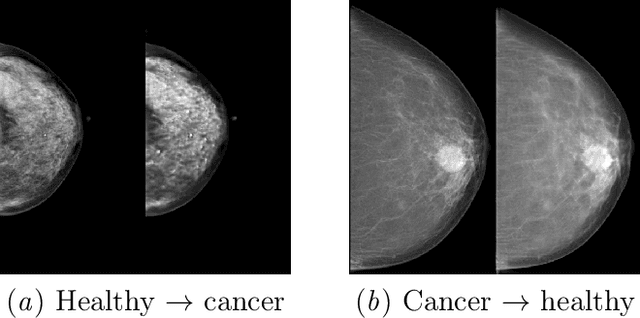

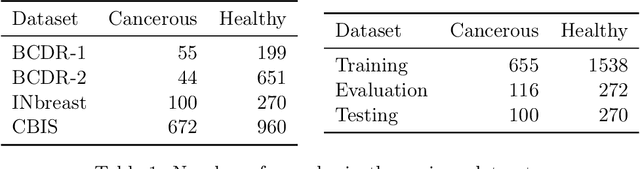

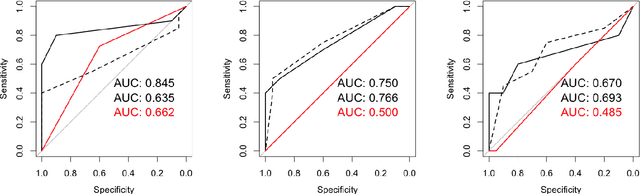

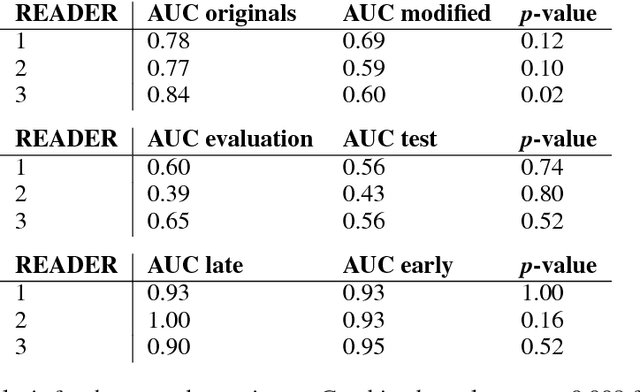

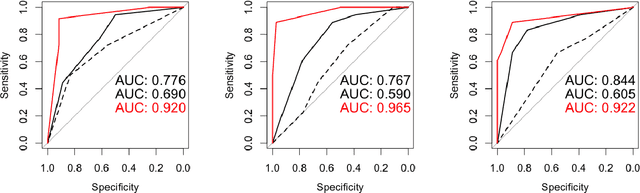

Abstract: $\textbf{Purpose}$ To train a cycle-consistent generative adversarial network (CycleGAN) on mammographic data to inject or remove features of malignancy, and to determine whether these AI-mediated attacks can be detected by radiologists. $\textbf{Material and Methods}$ From the two publicly available datasets, BCDR and INbreast, we selected images from cancer patients and healthy controls. An internal dataset served as test data, withheld during training. We ran two experiments training CycleGAN on low and higher resolution images ($256 \times 256$ px and $512 \times 408$ px). Three radiologists read the images and rated the likelihood of malignancy on a scale from 1 to 5, as well as the likelihood of the image being manipulated. The readout was evaluated by ROC analysis (area under the ROC curve, AUC). $\textbf{Results}$ At the lower resolution, only one radiologist exhibited markedly lower detection of cancer (AUC=0.85 vs. 0.63, p=0.06), while the other two were unaffected (0.67 vs. 0.69 and 0.75 vs. 0.77, p=0.55). Only one radiologist could discriminate between original and modified images slightly better than chance (0.66, p=0.008). At the higher resolution, all radiologists showed a significantly lower detection rate of cancer in the modified images (0.77-0.84 vs. 0.59-0.69, p=0.008); however, they were now able to reliably detect modified images due to better visibility of artifacts (0.92, 0.92 and 0.97). $\textbf{Conclusion}$ A CycleGAN can implicitly learn malignant features and inject or remove them so that a substantial proportion of small mammographic images would consequently be misdiagnosed. At higher resolutions, however, the method is currently limited and has a clear trade-off between manipulation of images and introduction of artifacts.
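
The readout described above summarizes each radiologist's 1 to 5 ratings as an AUC. As a minimal sketch of how such a number can be obtained from ordinal ratings (not the authors' analysis code), the AUC equals the probability that a randomly chosen cancer case receives a higher rating than a randomly chosen control, with ties counted as one half. The function name `auc_from_ratings` and the example ratings are hypothetical and chosen only for illustration.

```python
def auc_from_ratings(ratings, labels):
    """Area under the ROC curve for ordinal ratings.

    ratings: reader scores (e.g. 1-5 likelihood of malignancy).
    labels:  ground truth, 1 for cancer and 0 for control.
    Uses the Mann-Whitney formulation: the fraction of
    (cancer, control) pairs ranked correctly, ties counting 0.5.
    """
    pos = [r for r, y in zip(ratings, labels) if y == 1]
    neg = [r for r, y in zip(ratings, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical ratings from one reader on eight images (illustrative only).
example_ratings = [5, 4, 4, 2, 3, 1, 2, 1]
example_labels = [1, 1, 1, 1, 0, 0, 0, 0]
example_auc = auc_from_ratings(example_ratings, example_labels)
```

An AUC of 0.5 corresponds to guessing, which is why a value such as 0.66 for detecting manipulation is described as only slightly better than chance.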
