Lukas Hirsch

Predicting breast cancer with AI for individual risk-adjusted MRI screening and early detection

Nov 29, 2023

Abstract: Women with an increased lifetime risk of breast cancer undergo supplemental annual screening MRI. We propose to predict the risk of developing breast cancer within one year based on the current MRI, with the objective of reducing screening burden and facilitating early detection. An AI algorithm was developed on 53,858 breasts from 12,694 patients who underwent screening or diagnostic MRI and accrued over 12 years, with 2,331 confirmed cancers. A first U-Net was trained to segment lesions and identify regions of concern. A second convolutional network was trained to detect malignant cancer using features extracted by the U-Net. This network was then fine-tuned to estimate the risk of developing cancer within a year in cases that radiologists considered normal or likely benign. Risk predictions from this AI were evaluated with a retrospective analysis of 9,183 breasts from a high-risk screening cohort, which were not used for training. Statistical analysis focused on the tradeoff between the number of omitted exams and negative predictive value, and between the number of potential early detections and positive predictive value. The AI algorithm identified regions of concern that coincided with future tumors in 52% of screen-detected cancers. Upon directed review, a radiologist found that 71.3% of cancers had a visible correlate on the MRI prior to diagnosis; 65% of these correlates were identified by the AI model. Reevaluating these regions in 10% of all cases with higher AI-predicted risk could have resulted in up to 33% early detections by a radiologist. Additionally, screening burden could have been reduced in 16% of lower-risk cases by recommending a later follow-up without compromising the current interval cancer rate. With increasing datasets and improving image quality, we expect this new AI-aided, adaptive screening to meaningfully reduce screening burden and improve early detection.
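The tradeoff described in this abstract (omitted exams versus negative predictive value, and flagged exams versus positive predictive value) can be illustrated with a minimal sketch. The function name `screening_tradeoff`, the two thresholds, and the toy scores below are all hypothetical; they are not taken from the paper, which does not state its thresholds or its exact computation.

```python
def screening_tradeoff(risks, outcomes, low_thr, high_thr):
    """Summarize a two-threshold screening policy on retrospective data.

    risks    -- predicted one-year risk per breast (hypothetical scores in [0, 1])
    outcomes -- 1 if cancer was diagnosed within a year, else 0
    low_thr  -- exams with risk below this would get a later follow-up
    high_thr -- exams with risk at or above this would be re-reviewed
    """
    if len(risks) != len(outcomes) or not risks:
        raise ValueError("risks and outcomes must be non-empty and equal length")

    deferred = [y for r, y in zip(risks, outcomes) if r < low_thr]
    flagged = [y for r, y in zip(risks, outcomes) if r >= high_thr]
    n = len(risks)
    total_cancers = sum(outcomes)

    return {
        # Share of exams that would be omitted or postponed.
        "deferred_fraction": len(deferred) / n,
        # NPV: share of deferred exams with no cancer within a year.
        "npv": (len(deferred) - sum(deferred)) / len(deferred) if deferred else None,
        # Share of exams sent for directed re-review.
        "flagged_fraction": len(flagged) / n,
        # PPV: share of flagged exams that did develop cancer.
        "ppv": sum(flagged) / len(flagged) if flagged else None,
        # Share of all cancers that fall in the flagged group.
        "cancers_flagged_fraction": sum(flagged) / total_cancers if total_cancers else None,
    }


# Toy data for illustration only (not from the study).
toy_risks = [0.01, 0.02, 0.03, 0.05, 0.10, 0.20, 0.40, 0.60, 0.80, 0.90]
toy_outcomes = [0, 0, 0, 0, 0, 1, 0, 0, 1, 1]
summary = screening_tradeoff(toy_risks, toy_outcomes, low_thr=0.04, high_thr=0.5)
print(summary)
```

Sweeping `low_thr` and `high_thr` over a range of values traces out the two tradeoff curves: a higher `low_thr` defers more exams at the cost of a lower NPV, and a lower `high_thr` flags more cancers at the cost of a lower PPV.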

Deep learning achieves radiologist-level performance of tumor segmentation in breast MRI

Sep 21, 2020

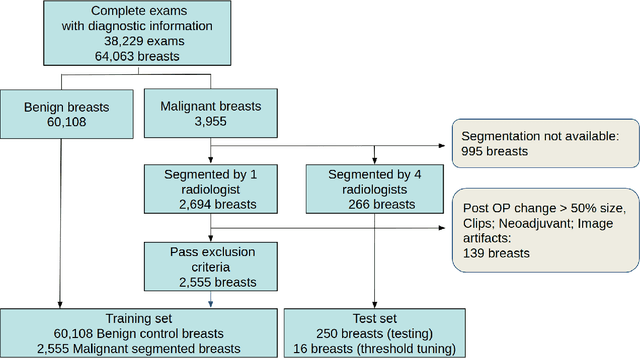

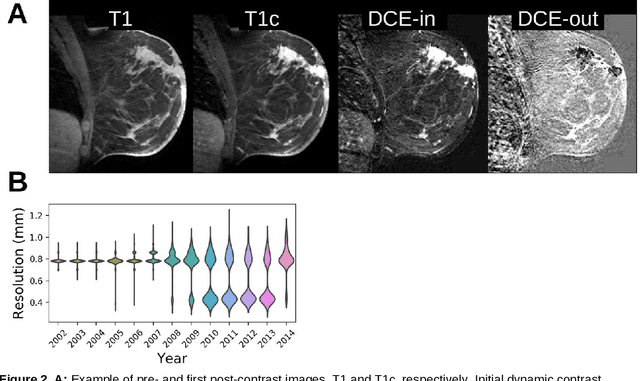

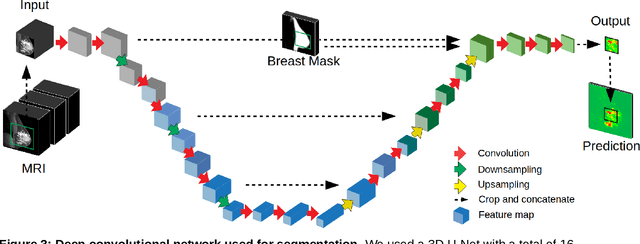

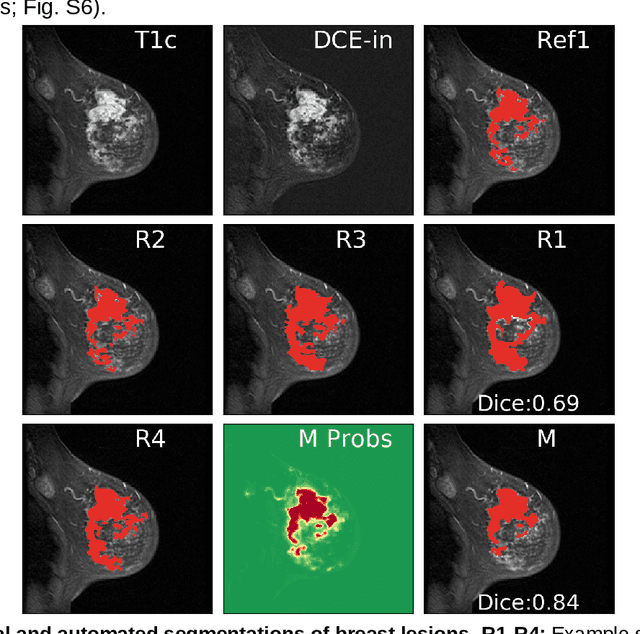

Abstract: Purpose: The goal of this research was to develop a deep network architecture that achieves fully automated, radiologist-level segmentation of breast tumors in MRI. Materials and Methods: We leveraged 38,229 clinical MRI breast exams collected retrospectively from women aged 12-94 (mean age 54) who presented between 2002 and 2014 at a single clinical site. The training set for the network consisted of 2,555 malignant breasts that were segmented in 2D by experienced radiologists, as well as 60,108 benign breasts that served as negative controls. The test set consisted of 250 exams with tumors segmented independently by four radiologists. We selected among several 3D deep convolutional neural network architectures, input modalities and harmonization methods. The outcome measure was the Dice score for 2D segmentation, which was compared between the network and radiologists using the Wilcoxon signed-rank test and the TOST procedure. Results: The best-performing network on the training set was a volumetric U-Net with dynamic contrast enhancement as input and with intensity normalized for each exam. In the test set, the median Dice score of this network was 0.77. The performance of the network was equivalent to that of the radiologists (TOST procedure with radiologist performance of 0.69-0.84 as equivalence bounds: p = 5e-10 and p = 2e-5, respectively; N = 250) and compares favorably with the published state of the art (0.6-0.77). Conclusion: When trained on a dataset of over 60 thousand breasts, a volumetric U-Net performs as well as expert radiologists at segmenting malignant breast lesions in MRI.
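For readers unfamiliar with the outcome measure, the Dice score between two binary masks A and B is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch for 2D masks given as nested lists; the function name `dice_score`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details from the paper.

```python
from statistics import median


def dice_score(mask_a, mask_b):
    """Dice overlap between two binary 2D masks given as nested lists."""
    a = [bool(v) for row in mask_a for v in row]
    b = [bool(v) for row in mask_b for v in row]
    if len(a) != len(b):
        raise ValueError("masks must have the same number of pixels")

    intersection = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / total


# Toy masks for illustration only (1 = tumor pixel).
network_mask = [[0, 1, 1],
                [0, 1, 0]]
radiologist_mask = [[0, 1, 0],
                    [0, 1, 1]]

score = dice_score(network_mask, radiologist_mask)
print(round(score, 3))

# A per-exam list of scores can be summarized by its median,
# as the abstract does for the test set.
print(median([score, 1.0, 0.5]))
```

A score of 1 means the two segmentations coincide exactly and 0 means they do not overlap at all, which is why a median over exams is a natural summary when comparing the network against each radiologist.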

Tissue segmentation with deep 3D networks and spatial priors

May 24, 2019

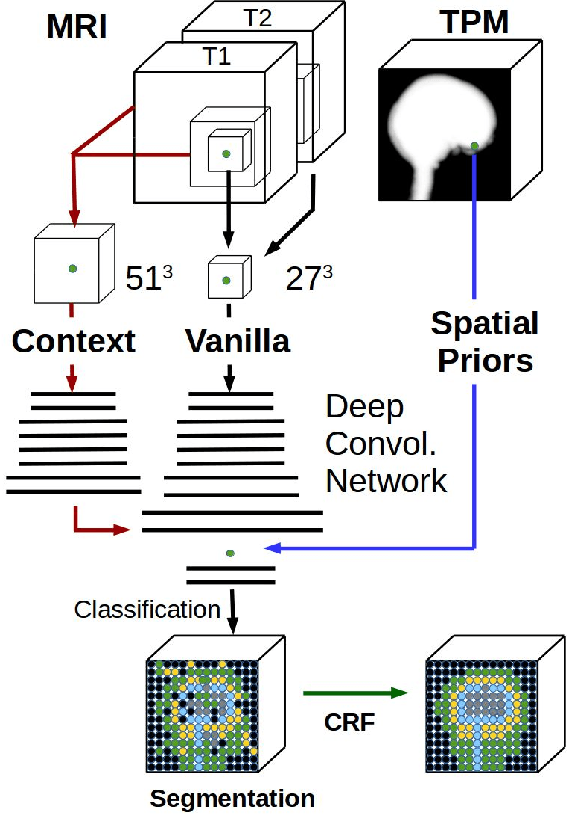

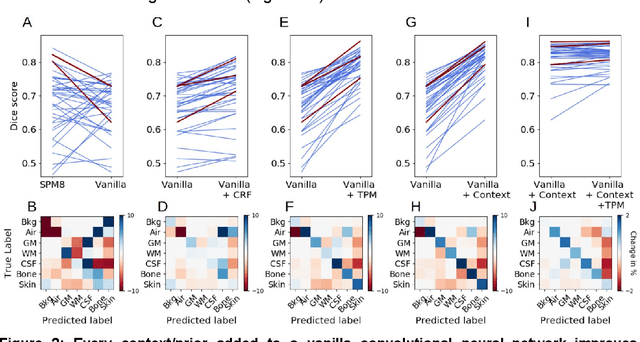

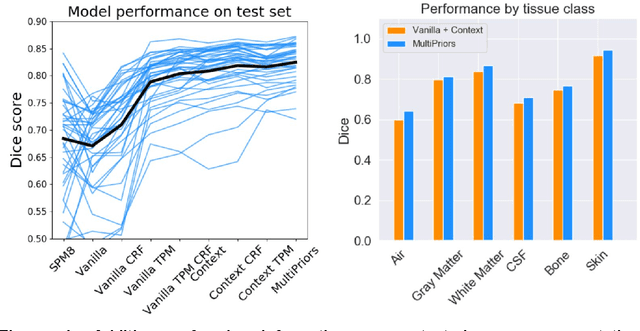

Abstract: Conventional automated segmentation of the human head distinguishes different tissues based on image intensities in an MRI volume and prior tissue probability maps (TPM). This works well for normal head anatomies, but fails in the presence of unexpected lesions. Deep convolutional neural networks instead leverage volumetric spatial patterns and can be trained to segment lesions, but have thus far not integrated prior probabilities. Here we extend a three-dimensional convolutional network with spatial priors from a TPM, morphological priors from conditional random fields, and context from a wider field-of-view at lower resolution. The new architecture, which we call MultiPrior, was designed to be a fully trainable, three-dimensional convolutional network. Thus, the resulting architecture represents a neural network with learnable spatial memories. When trained on a set of stroke patients and healthy subjects, MultiPrior outperforms state-of-the-art segmentation tools such as DeepMedic and SPM segmentation. The approach is further demonstrated on patients with disorders of consciousness, where we find that cognitive state correlates positively with gray-matter volumes and negatively with the extent of ventricles. We make the code and trained networks freely available to support future clinical research projects.
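The conventional approach mentioned at the start of this abstract combines an intensity-based tissue likelihood with a spatial prior at each voxel. A minimal sketch of that per-voxel combination using Bayes' rule is shown below. This illustrates the general idea of a spatial prior only; it is not the MultiPrior architecture, and the function name `voxel_posterior`, the tissue labels, and the numbers are hypothetical.

```python
def voxel_posterior(likelihood, prior):
    """Combine per-tissue likelihoods with a spatial prior for one voxel.

    likelihood -- dict of tissue -> p(intensity | tissue)
    prior      -- dict of tissue -> prior probability at this voxel (from a TPM)
    Returns a dict of tissue -> posterior probability (sums to 1).
    """
    if likelihood.keys() != prior.keys():
        raise ValueError("likelihood and prior must cover the same tissues")

    unnormalized = {t: likelihood[t] * prior[t] for t in likelihood}
    evidence = sum(unnormalized.values())
    if evidence == 0:
        raise ValueError("likelihood and prior have no overlapping support")
    return {t: p / evidence for t, p in unnormalized.items()}


# Toy values for a single voxel near the ventricles (illustration only).
toy_likelihood = {"gray": 0.5, "white": 0.3, "csf": 0.2}
toy_prior = {"gray": 0.2, "white": 0.2, "csf": 0.6}

posterior = voxel_posterior(toy_likelihood, toy_prior)
best_tissue = max(posterior, key=posterior.get)
print(best_tissue, posterior)
```

In this toy voxel the intensity alone favors gray matter, but the spatial prior shifts the decision to CSF. The same mechanism explains the failure mode the abstract describes: a fixed prior built from normal anatomies assigns little probability to tissue classes that appear in unexpected locations, such as lesions.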
