Lukas Ewecker

An Analysis of Driver-Initiated Takeovers during Assisted Driving and their Effect on Driver Satisfaction

Apr 19, 2024

Abstract: During the use of Advanced Driver Assistance Systems (ADAS), drivers can intervene in the active function and take back control for various reasons. However, the specific reasons for driver-initiated takeovers in naturalistic driving are still not well understood. To learn more about the reasons behind these takeovers, a test group study was conducted in which 17 participants used a predictive longitudinal driving function for their daily commutes and annotated the reasons for their takeovers during active function use. In this paper, the recorded takeovers are analyzed and the different reasons for them are highlighted. The results show that the reasons can be divided into three main categories. The most common category consists of takeovers that aim to adjust the behavior of the ADAS within its Operational Design Domain (ODD) to better match the drivers' personal preferences. Other reasons include takeovers due to leaving the ADAS's ODD and corrections of incorrect sensing state information. Using the questionnaire results of the test group study, it was found that the number and frequency of takeovers, especially within the ADAS's ODD, have a significant negative impact on driver satisfaction. Therefore, driver satisfaction with the ADAS could be increased by adapting its behavior to the drivers' wishes and thereby lowering the number of takeovers within the ODD. The information contained in the takeover behavior of the drivers could be used as feedback for the ADAS. Finally, it is shown that there are considerable differences in the takeover behavior of different drivers, which indicates a need for ADAS individualization.

Combining Visual Saliency Methods and Sparse Keypoint Annotations to Providently Detect Vehicles at Night

Apr 25, 2022

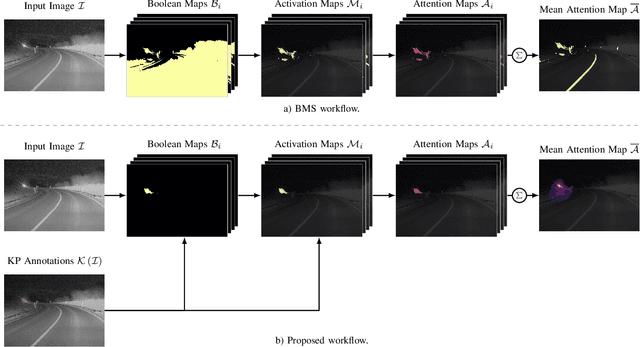

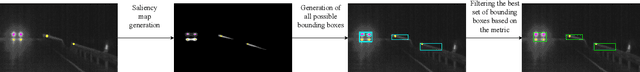

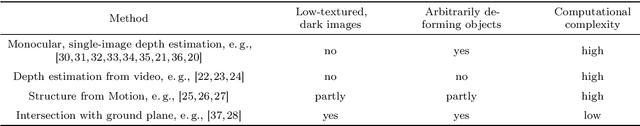

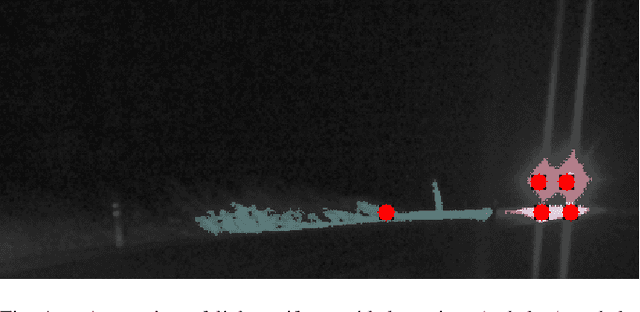

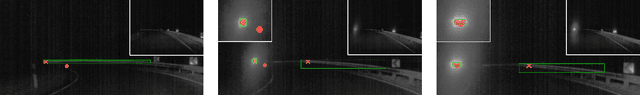

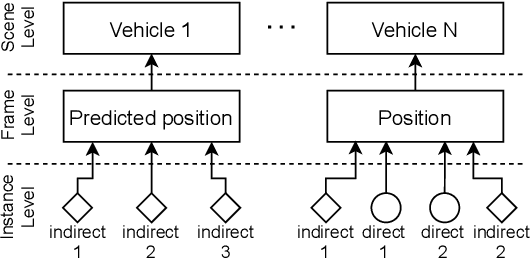

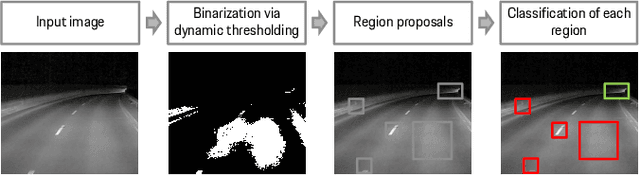

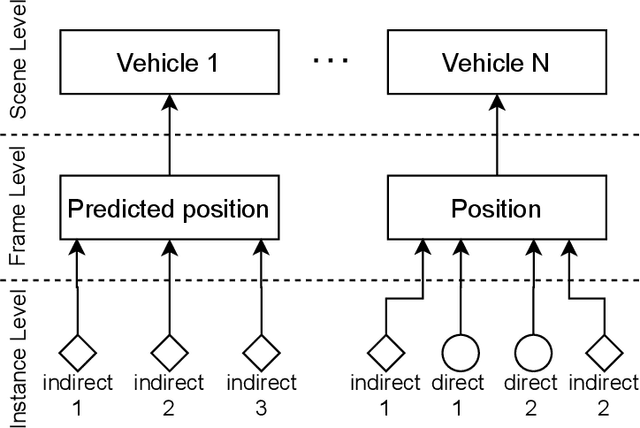

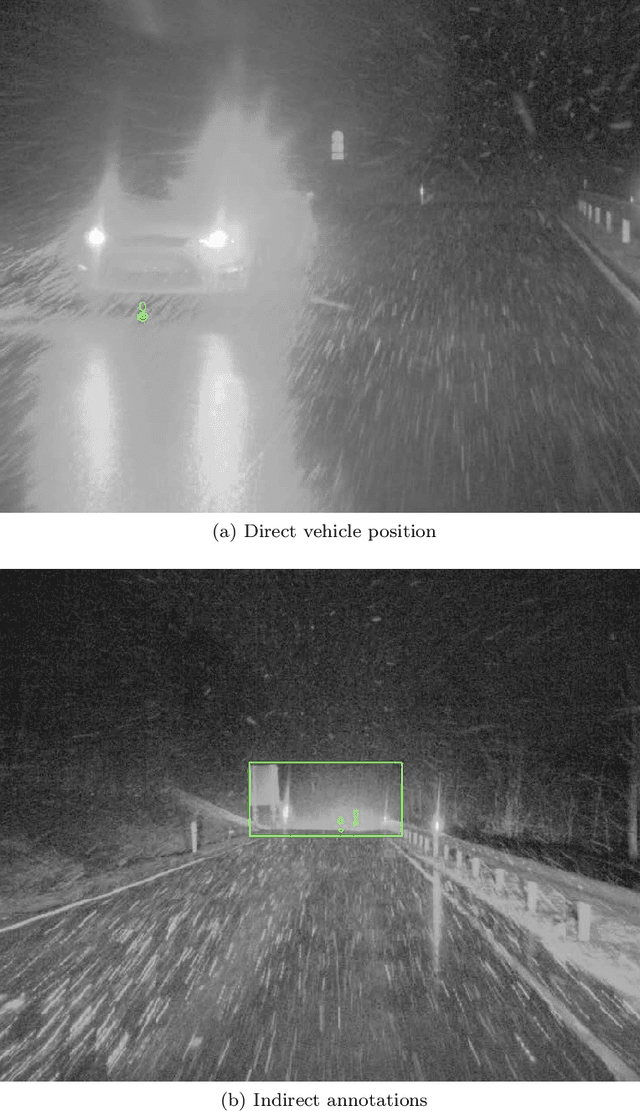

Abstract: Provident detection of other road users at night has the potential to increase road safety. For this purpose, humans intuitively use visual cues, such as light cones and light reflections emitted by other road users, to react to oncoming traffic at an early stage. This behavior can be imitated by computer vision methods that predict the appearance of vehicles based on light reflections caused by the vehicle's headlights. Since current object detection algorithms are mainly based on detecting directly visible objects annotated via bounding boxes, the detection and annotation of light reflections without sharp boundaries is challenging. For this reason, the extensive open-source dataset PVDN (Provident Vehicle Detection at Night) was published, which includes traffic scenarios at night with light reflections annotated via keypoints. In this paper, we explore the potential of saliency-based approaches to create different object representations based on the visual saliency and sparse keypoint annotations of the PVDN dataset. For that, we extend the general idea of Boolean map saliency towards a context-aware approach by taking into consideration sparse keypoint annotations by humans. We show that this approach allows for an automated derivation of different object representations, such as binary maps or bounding boxes, so that detection models can be trained on different annotation variants and the problem of providently detecting vehicles at night can be tackled from different perspectives. With that, we provide further powerful tools and methods to study the problem of detecting vehicles at night before they are actually visible.

Provident Vehicle Detection at Night for Advanced Driver Assistance Systems

Aug 11, 2021

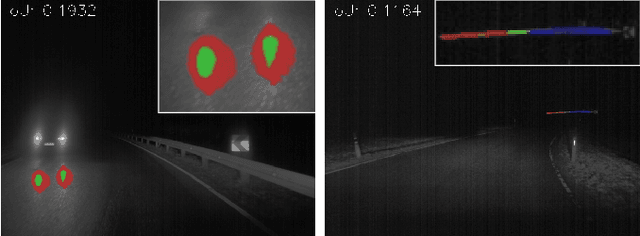

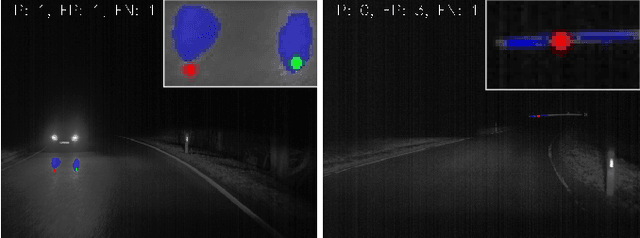

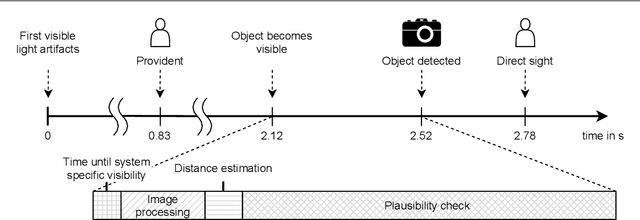

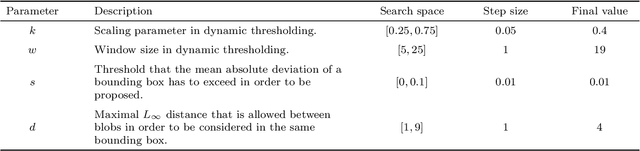

Abstract: In recent years, computer vision algorithms have become more and more powerful, which has enabled technologies such as autonomous driving to evolve at a rapid pace. However, current algorithms mainly share one limitation: they rely on directly visible objects. This is a major drawback compared to human behavior, where indirect visual cues caused by the actual object (e.g., shadows) are already used intuitively to retrieve information or anticipate occurring objects. While driving at night, this performance deficit becomes even more obvious: humans already process the light artifacts caused by oncoming vehicles to anticipate their future appearance, whereas current object detection systems rely on the oncoming vehicle's direct visibility. Based on previous work on this subject, we present in this paper a complete system capable of providently detecting oncoming vehicles at nighttime based on the light artifacts they cause. For that, we outline the full algorithm architecture, ranging from the detection of light artifacts in the image space, through localizing the objects in three-dimensional space, to verifying the objects over time. To demonstrate the applicability, we deploy the system in a test vehicle and use the information of providently detected vehicles to control the glare-free high beam system proactively. Using this experimental setting, we quantify the time benefit that the provident vehicle detection system provides compared to an in-production computer vision system. Additionally, the glare-free high beam use case provides a real-time and real-world visualization interface of the detection results. With this contribution, we want to raise awareness of the unconventional sensing task of provident object detection and further close the performance gap between human behavior and computer vision algorithms in order to bring autonomous and automated driving a step forward.

A Dataset for Provident Vehicle Detection at Night

May 27, 2021

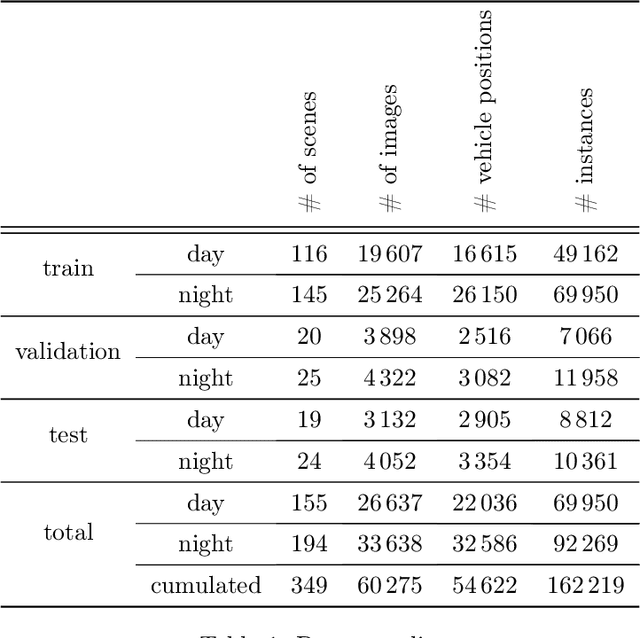

Abstract: In current object detection, algorithms require the object to be directly visible in order to be detected. As humans, however, we intuitively use visual cues caused by the respective object to already make assumptions about its appearance. In the context of driving, such cues can be shadows during the day and often light reflections at night. In this paper, we study the problem of how to map this intuitive human behavior to computer vision algorithms to detect oncoming vehicles at night just from the light reflections caused by their headlights. For that, we present an extensive open-source dataset containing 59746 annotated grayscale images from 346 different scenes in a rural environment at night. In these images, all oncoming vehicles, their corresponding light objects (e.g., headlamps), and their respective light reflections (e.g., light reflections on guardrails) are labeled. In this context, we discuss the characteristics of the dataset and the challenges in objectively describing visual cues such as light reflections. We provide different metrics for different ways to approach the task and report the results we achieved using state-of-the-art and custom object detection models as a first benchmark. With that, we want to bring attention to a new and so far neglected field in computer vision research, encourage more researchers to tackle the problem, and thereby further close the gap between human performance and computer vision systems.

Provident Vehicle Detection at Night: The PVDN Dataset

Jan 23, 2021

Abstract: For advanced driver assistance systems, it is crucial to have information about oncoming vehicles as early as possible. At night, this task is especially difficult due to poor lighting conditions. For that reason, every vehicle uses headlamps at night to improve sight and thereby ensure safe driving. As humans, we intuitively anticipate oncoming vehicles before they are actually physically visible by detecting light reflections caused by their headlamps. In this paper, we present a novel dataset containing 59746 annotated grayscale images from 346 different scenes in a rural environment at night. In these images, all oncoming vehicles, their corresponding light objects (e.g., headlamps), and their respective light reflections (e.g., light reflections on guardrails) are labeled. This is accompanied by an in-depth analysis of the dataset characteristics. With that, we are providing the first open-source dataset with comprehensive ground truth data to enable research into new methods of detecting oncoming vehicles based on the light reflections they cause, long before they are directly visible. We consider this an essential step to further close the performance gap between current advanced driver assistance systems and human behavior.
