Luisa F. Polania

Learning Furniture Compatibility with Graph Neural Networks

Apr 15, 2020

Abstract: We propose a graph neural network (GNN) approach to the problem of predicting the stylistic compatibility of a set of furniture items from images. While most existing results are based on siamese networks that evaluate pairwise compatibility between items, the proposed GNN architecture exploits relational information among groups of items. We present two GNN models, both of which comprise a deep CNN that extracts a feature representation for each image, a gated recurrent unit (GRU) network that models interactions between the furniture items in a set, and an aggregation function that calculates the compatibility score. In the first model, a generalized contrastive loss function is introduced that promotes the generation of clustered embeddings for items belonging to the same furniture set. Also, in the first model, the edge function between nodes in the GRU and the aggregation function are fixed in order to limit model complexity and allow training on smaller datasets; in the second model, the edge function and aggregation function are learned directly from the data. We demonstrate state-of-the-art accuracy for compatibility prediction and "fill in the blank" tasks on the Bonn and Singapore furniture datasets. We further introduce a new dataset, called the Target Furniture Collections dataset, which contains over 6000 furniture items that have been hand-curated by stylists to make up 1632 compatible sets. We also demonstrate superior prediction accuracy on this dataset.

Deep Adaptive Wavelet Network

Dec 10, 2019

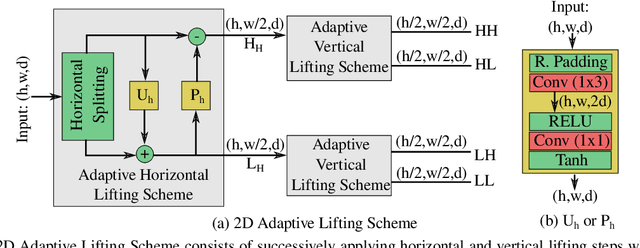

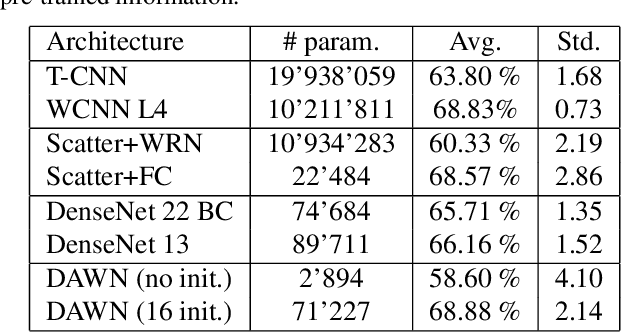

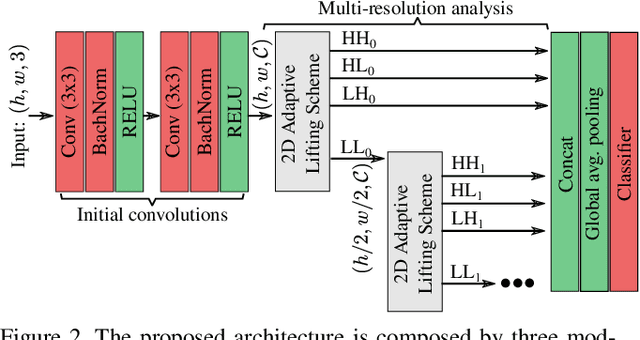

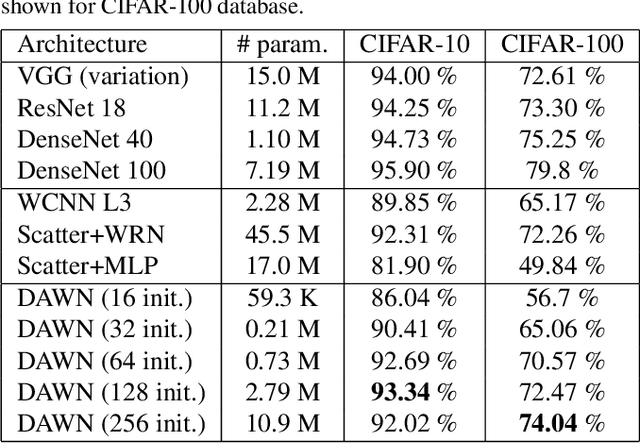

Abstract: Even though convolutional neural networks have become the method of choice in many fields of computer vision, they still lack interpretability and are usually designed manually in a cumbersome trial-and-error process. This paper aims to overcome those limitations by proposing a deep neural network that is designed in a systematic fashion and is interpretable, with multiresolution analysis integrated at the core of the network design. By using the lifting scheme, it is possible to generate a wavelet representation and design a network capable of learning wavelet coefficients end to end. Compared to state-of-the-art architectures, the proposed model requires less hyper-parameter tuning and achieves competitive accuracy in image classification tasks.
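
The lifting scheme mentioned in the abstract can be illustrated with a minimal sketch. The code below is not the paper's network: it uses fixed Haar-style predict and update operators, whereas the abstract describes learning the wavelet coefficients end to end. The function names `lifting_forward`, `lifting_inverse`, `haar_predict`, and `haar_update` are hypothetical and chosen only for this illustration.

```python
def haar_predict(even):
    # Assumption: predict each odd sample from its even neighbour (Haar-style).
    return list(even)


def haar_update(detail):
    # Assumption: add half of the detail back so approx is the pairwise mean.
    return [d / 2 for d in detail]


def lifting_forward(signal, predict=haar_predict, update=haar_update):
    """One lifting step: split, predict, update."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    even = signal[0::2]
    odd = signal[1::2]
    # Detail coefficients: what the predictor fails to explain.
    detail = [o - p for o, p in zip(odd, predict(even))]
    # Approximation coefficients: even samples corrected by the update.
    approx = [e + u for e, u in zip(even, update(detail))]
    return approx, detail


def lifting_inverse(approx, detail, predict=haar_predict, update=haar_update):
    """Undo the lifting step by reversing the operations in opposite order."""
    even = [a - u for a, u in zip(approx, update(detail))]
    odd = [d + p for d, p in zip(detail, predict(even))]
    signal = [0.0] * (2 * len(even))
    signal[0::2] = even
    signal[1::2] = odd
    return signal


approx, detail = lifting_forward([2.0, 4.0, 6.0, 8.0])
restored = lifting_inverse(approx, detail)
```

For the input `[2.0, 4.0, 6.0, 8.0]`, the forward step gives the approximation `[3.0, 7.0]` and the detail `[2.0, 2.0]`, and the inverse step recovers the input. The step is invertible for any choice of predict and update operators, which is what makes it plausible to replace the fixed operators with learned ones.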

Graph Neural Networks for Image Understanding Based on Multiple Cues: Group Emotion Recognition and Event Recognition as Use Cases

Sep 19, 2019

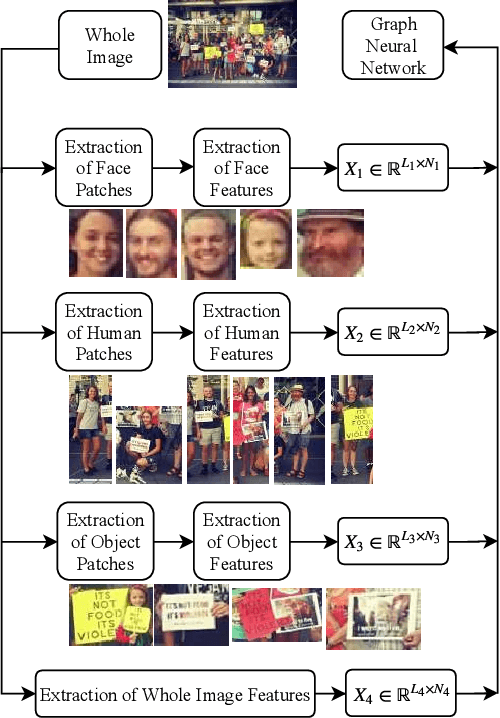

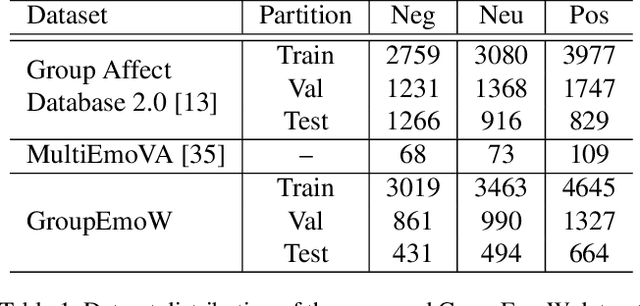

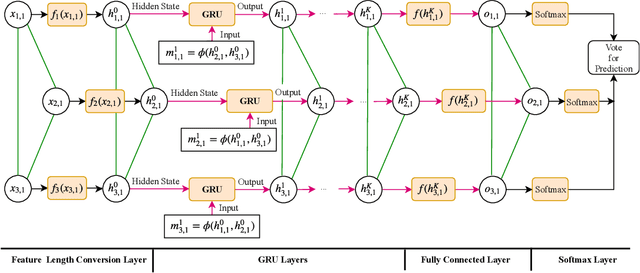

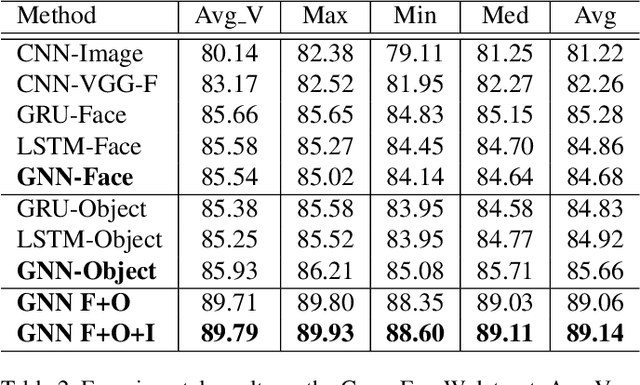

Abstract: A graph neural network (GNN) for image understanding based on multiple cues is proposed in this paper. Unlike traditional feature and decision fusion approaches, which neglect the fact that features can interact and exchange information, the proposed GNN is able to pass information among features extracted from different models. Two image understanding tasks, namely group-level emotion recognition (GER) and event recognition, which are highly semantic and require the interaction of several deep models to synthesize multiple cues, were selected to validate the performance of the proposed method. Experiments show that the proposed method achieves state-of-the-art performance on the selected image understanding tasks. In addition, a new group-level emotion recognition database is introduced and shared in this paper.
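
The idea of features exchanging information can be sketched as one round of message passing on a fully connected graph. This is only an illustration under simplifying assumptions: each node holds a feature vector (for example, one cue extracted by one model), messages are the plain mean of the other nodes, and the update is a fixed blend. The paper's actual update rule is not specified in the abstract, and the name `message_passing_step` and the `mix` parameter are hypothetical.

```python
def message_passing_step(features, mix=0.5):
    """Blend each node's features with the mean of all other nodes.

    features: list of equal-length feature vectors, one per node.
    mix: hypothetical blending weight between own features and the message.
    """
    n = len(features)
    if n < 2:
        # A single node has no neighbours to receive messages from.
        return [list(f) for f in features]
    dim = len(features[0])
    updated = []
    for i, node in enumerate(features):
        # Message: element-wise mean over every node except node i.
        message = [
            sum(features[j][k] for j in range(n) if j != i) / (n - 1)
            for k in range(dim)
        ]
        updated.append([(1 - mix) * x + mix * m for x, m in zip(node, message)])
    return updated


cues = [[0.0, 0.0], [2.0, 4.0]]
mixed = message_passing_step(cues)
```

With two nodes and `mix=0.5`, both nodes end up at `[1.0, 2.0]`, the midpoint of the two inputs. A learned model would replace the fixed mean and blend with trainable functions.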

Learning fashion compatibility across apparel categories for outfit recommendation

May 01, 2019

Abstract: This paper addresses the problem of generating recommendations for completing an outfit, given that a user is interested in a particular apparel item. The proposed method is based on a siamese network used for feature extraction, followed by a fully-connected network used for learning a fashion compatibility metric. The embeddings generated by the siamese network are augmented with color histogram features, motivated by the important role that color plays in determining fashion compatibility. The training of the network is formulated as a maximum a posteriori (MAP) problem, where Laplacian distributions are assumed for the filters of the siamese network to promote sparsity, and matrix-variate normal distributions are assumed for the weights of the metric network to efficiently exploit correlations between the input units of each fully-connected layer.
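
Augmenting an embedding with color histogram features can be sketched as follows. The abstract does not state the histogram layout, bin count, or color space, so everything here is an assumption: pixels are 8-bit RGB tuples, each channel gets its own normalized histogram, and the result is concatenated to the embedding. The names `color_histogram` and `augment_embedding` are hypothetical.

```python
def color_histogram(pixels, bins=4):
    """Per-channel normalized histogram of 8-bit RGB pixels.

    Returns 3 * bins values: red bins, then green bins, then blue bins.
    """
    hist = [0.0] * (3 * bins)
    for pixel in pixels:
        for channel, value in enumerate(pixel):
            # Map a value in 0..255 to a bin index in 0..bins-1.
            index = min(value * bins // 256, bins - 1)
            hist[channel * bins + index] += 1.0
    count = len(pixels)
    return [h / count for h in hist] if count else hist


def augment_embedding(embedding, pixels, bins=4):
    """Concatenate a learned embedding with color histogram features."""
    return list(embedding) + color_histogram(pixels, bins)


red_patch = [(255, 0, 0), (255, 0, 0)]
features = augment_embedding([0.5, -0.5], red_patch, bins=2)
```

Here a two-dimensional embedding grows to eight dimensions: the original two values followed by two bins for each of the three channels.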

Exploiting Restricted Boltzmann Machines and Deep Belief Networks in Compressed Sensing

May 30, 2017

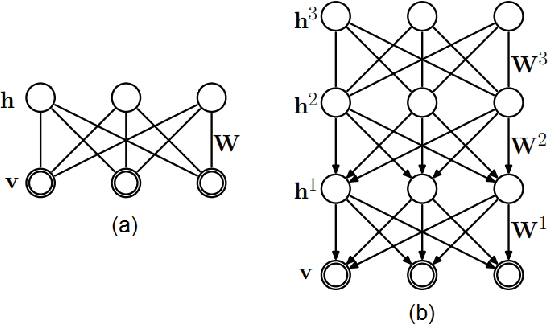

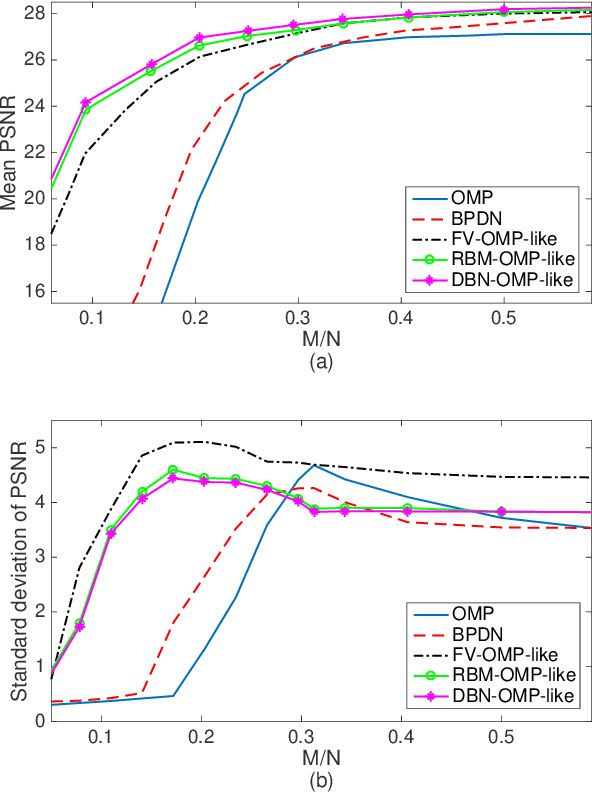

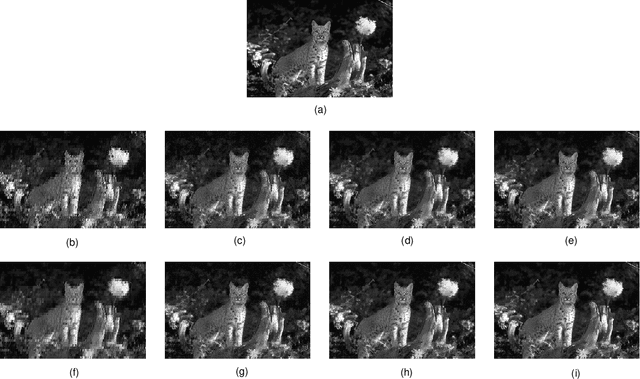

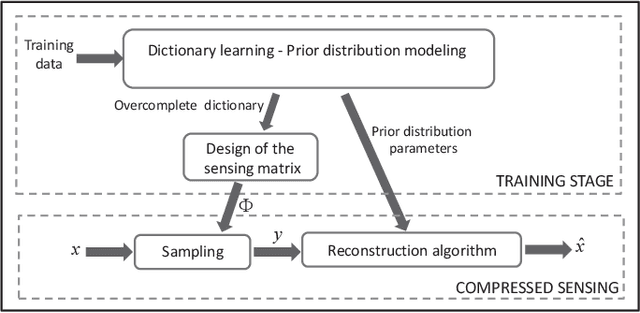

Abstract: This paper proposes a compressed sensing (CS) scheme that exploits the representational power of restricted Boltzmann machines and deep learning architectures to model the prior distribution of the sparsity pattern of signals belonging to the same class. The resulting probability distribution is then used in a maximum a posteriori (MAP) approach for the reconstruction. The parameters of the prior distribution are learned from training data. The motivation behind this approach is to model the higher-order statistical dependencies between the coefficients of the sparse representation, with the final goal of improving the reconstruction. The performance of the proposed method is validated on the Berkeley Segmentation Dataset and the MNIST Database of handwritten digits.
