Luis Contreras

Multimodal feedback for active robot-object interaction

Sep 10, 2018

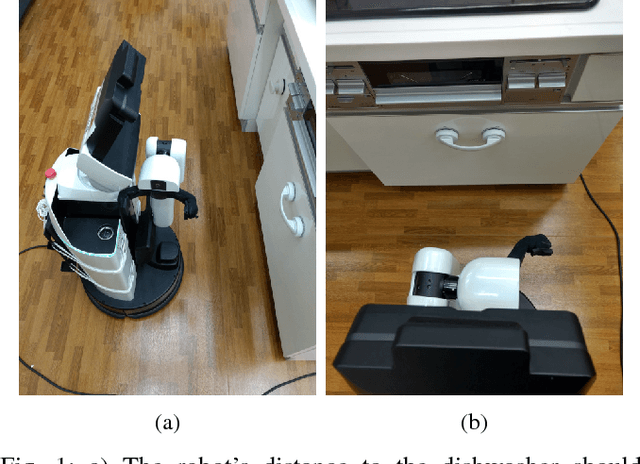

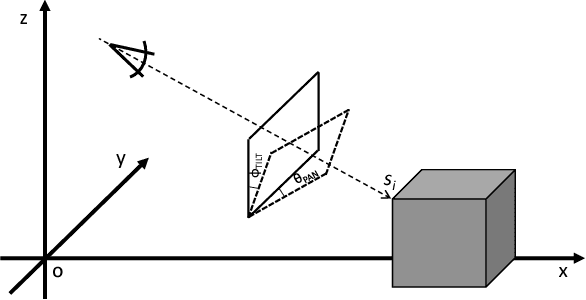

Abstract: In this work, we present a multimodal system for active robot-object interaction using laser-based SLAM, RGBD images, and contact sensors. In the object manipulation task, the robot uses RGBD data to adjust its initial pose with respect to obstacles and target objects, so that it can grasp objects in different configuration spaces while avoiding collisions. It then uses the contact sensors in its hand to update the information related to the last steps of the manipulation process. We perform a series of experiments to evaluate the performance of the proposed system following the RoboCup 2018 international competition regulations. We compare our approach with a number of baselines, namely a no-feedback method, a visual-only feedback method, and a tactile-only feedback method; our proposed visual-and-tactile feedback method performs best.
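
The two feedback stages described above (visual pose adjustment before grasping, tactile confirmation at the end) can be illustrated with a minimal sketch. Everything here is an assumption for illustration, not the authors' implementation: the 2D base pose, the straight-line approach rule, the names `Pose2D`, `adjust_base_pose`, `is_collision_free` and `tactile_confirms_grasp`, and all threshold values are hypothetical.

```python
import math
from dataclasses import dataclass

# Hypothetical values for illustration only; the paper's parameters are not given.
REACH_M = 0.6            # desired base-to-target distance in metres
MIN_CLEARANCE_M = 0.3    # minimum allowed base-to-obstacle distance in metres
CONTACT_THRESHOLD = 0.5  # normalised contact reading counted as "touching"


@dataclass
class Pose2D:
    x: float
    y: float
    theta: float  # heading in radians


def adjust_base_pose(robot, target_xy, reach=REACH_M):
    """Visual step (simplified): slide the base along the robot-to-target line
    so the target, e.g. localised from RGBD data, ends up `reach` metres away
    and the robot faces it."""
    dx, dy = target_xy[0] - robot.x, target_xy[1] - robot.y
    heading = math.atan2(dy, dx)
    step = math.hypot(dx, dy) - reach  # > 0 moves closer, < 0 backs off
    return Pose2D(robot.x + step * math.cos(heading),
                  robot.y + step * math.sin(heading),
                  heading)


def is_collision_free(pose, obstacles_xy, clearance=MIN_CLEARANCE_M):
    """Reject a candidate base pose that comes too close to any obstacle."""
    return all(math.hypot(ox - pose.x, oy - pose.y) >= clearance
               for ox, oy in obstacles_xy)


def tactile_confirms_grasp(finger_readings, threshold=CONTACT_THRESHOLD):
    """Tactile step (simplified): accept the grasp only if every finger
    sensor reports contact."""
    return bool(finger_readings) and all(r >= threshold for r in finger_readings)
```

For example, a robot at the origin with a target at (1.0, 0.0) is moved to x = 0.4, leaving 0.6 m to the target. Dropping either the pose adjustment or the tactile check from such a loop gives rough analogues of the tactile-only and visual-only baselines mentioned in the abstract.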

Intelligent flat-and-textureless object manipulation in Service Robots

Sep 10, 2018

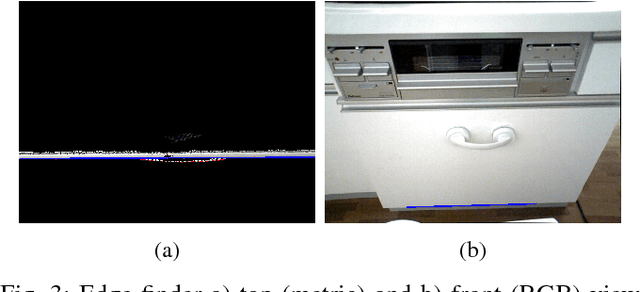

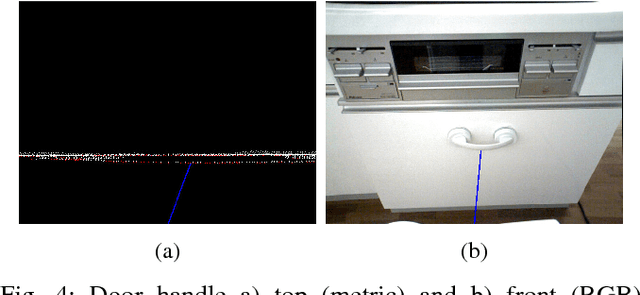

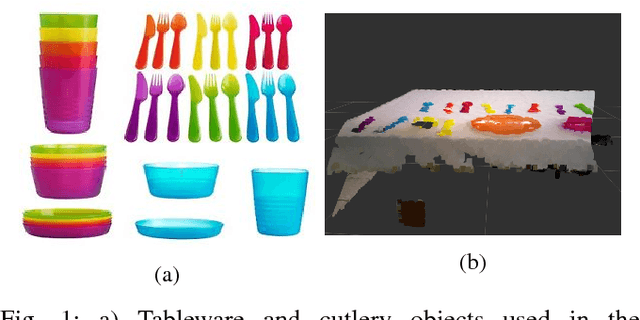

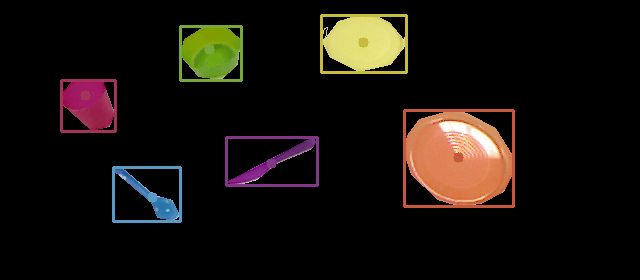

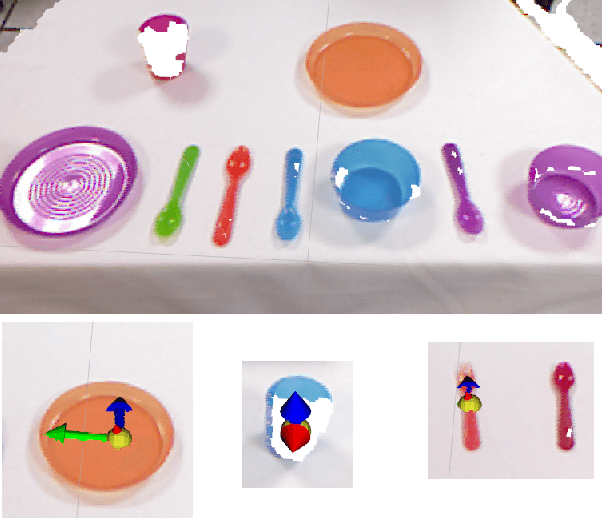

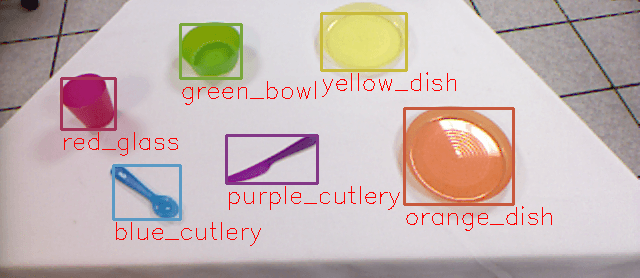

Abstract: This work introduces our approach to the problem of grasping flat and textureless objects. In particular, we address tableware and cutlery manipulation, where a service robot has to clean up a table. Our solution integrates colour, 2D geometry, and 3D geometry information to describe objects, and this information is passed to the robot's action planner to find the best grasping trajectory for each object class. Furthermore, we use visual feedback as a verification step to determine whether the grasp has succeeded. We evaluate our approach on both an open and a standard service robot platform following the RoboCup@Home international tournament regulations.
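
The class-dependent grasp selection and the visual verification step can be sketched as follows. This is a simplified stand-in under stated assumptions: the class-to-strategy table, the strategy labels, the mask-overlap test and the `max_remaining` threshold are all hypothetical, and a real planner would compute a full grasping trajectory rather than return a label.

```python
# Hypothetical class-to-strategy table; the abstract does not describe the
# planner's actual strategies.
GRASP_STRATEGY = {
    "cutlery": "pinch_from_top",
    "plate": "grip_rim_from_side",
    "bowl": "grip_rim_from_side",
    "cup": "wrap_from_side",
}


def select_grasp(object_class):
    """Return the (hypothetical) grasp strategy label for a recognised class."""
    try:
        return GRASP_STRATEGY[object_class]
    except KeyError:
        raise ValueError(f"no grasp strategy for class {object_class!r}") from None


def grasp_verified(before_mask, after_mask, max_remaining=0.2):
    """Visual verification (simplified): compare the object's segmented pixels
    on the table before and after the grasp. Masks are sets of (row, col)
    pixel coordinates. The grasp counts as successful if at most
    `max_remaining` of the original object pixels are still occupied."""
    if not before_mask:
        raise ValueError("object was not segmented before the grasp")
    remaining = len(before_mask & after_mask) / len(before_mask)
    return remaining <= max_remaining
```

Checking what is left on the table, rather than what is in the hand, is one simple option for flat objects such as cutlery, which the gripper may largely occlude.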

Towards CNN map representation and compression for camera relocalisation

May 16, 2018

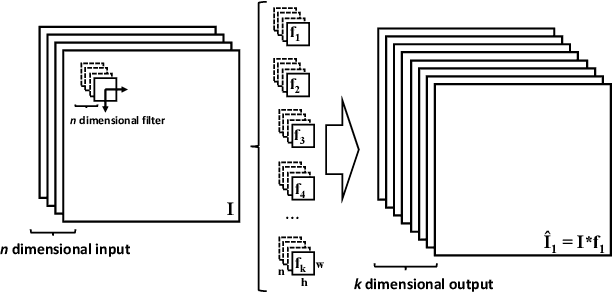

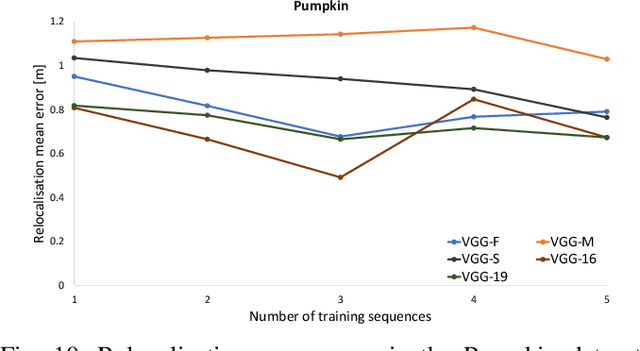

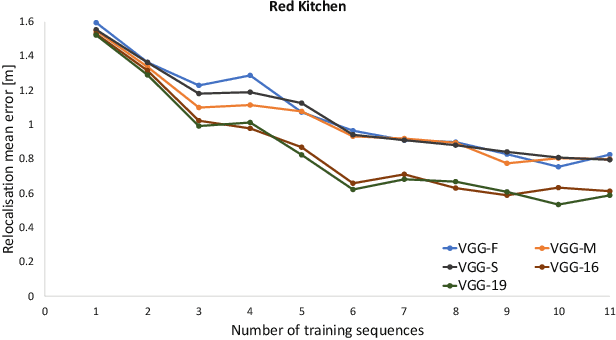

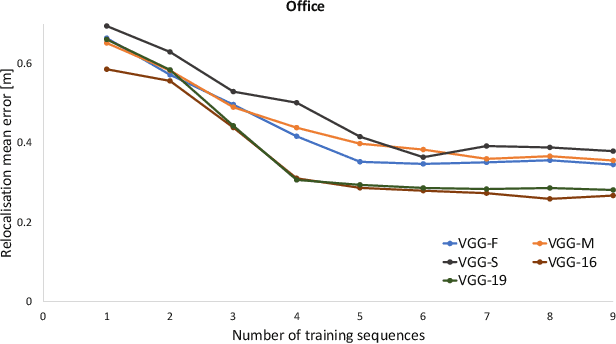

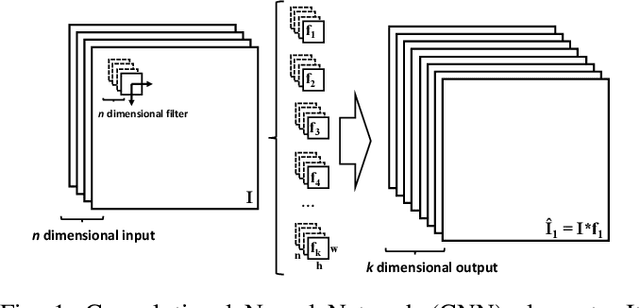

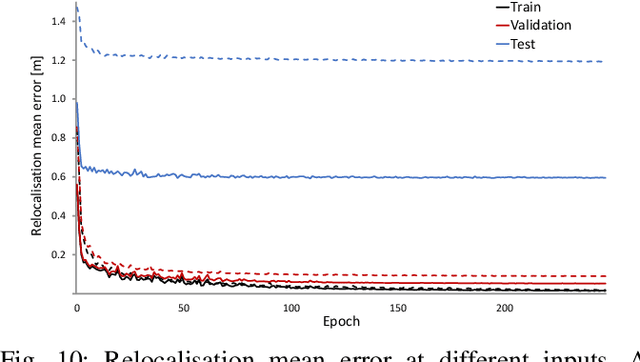

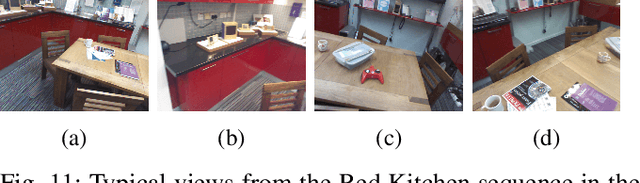

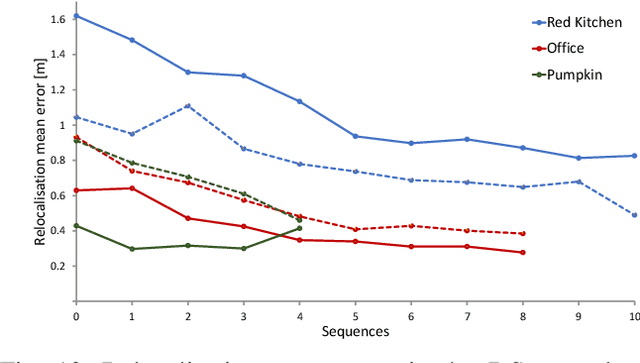

Abstract: This paper presents a study on the use of Convolutional Neural Networks (CNNs) for camera relocalisation and its application to map compression. We follow state-of-the-art visual relocalisation results and evaluate the response to different data inputs. We use a CNN map representation and introduce the notion of map compression under this paradigm by using smaller CNN architectures without sacrificing relocalisation performance. We evaluate this approach on a series of publicly available datasets over a number of CNN architectures of different sizes, both in complexity and in number of layers. This formulation allows us to improve relocalisation accuracy by increasing the number of training trajectories while maintaining a constant-size CNN.
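
Under a CNN map representation, the "map" is the set of network weights. Its size therefore depends only on the architecture, not on how many training trajectories were used. The sketch below counts parameters for two layer stacks to show how a smaller architecture compresses the map. Both architectures are hypothetical examples, not the ones evaluated in the paper. The 7-dimensional output assumes a position-plus-quaternion pose, and the fully connected layer assumes global pooling before it.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Weights (and biases) of a k x k convolution from c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def fc_params(n_in, n_out, bias=True):
    """Weights (and biases) of a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


def model_params(layers):
    """Total parameter count for a list of ("conv", k, c_in, c_out) or
    ("fc", n_in, n_out) layer descriptions."""
    total = 0
    for layer in layers:
        if layer[0] == "conv":
            _, k, c_in, c_out = layer
            total += conv_params(k, c_in, c_out)
        elif layer[0] == "fc":
            _, n_in, n_out = layer
            total += fc_params(n_in, n_out)
        else:
            raise ValueError(f"unknown layer type {layer[0]!r}")
    return total


# Hypothetical architectures; the final layer regresses a 7-D pose
# (3-D position + 4-D quaternion) after global pooling.
LARGE = [("conv", 3, 3, 64), ("conv", 3, 64, 128), ("conv", 3, 128, 256), ("fc", 256, 7)]
SMALL = [("conv", 3, 3, 16), ("conv", 3, 16, 32), ("fc", 32, 7)]
```

Here `model_params(LARGE)` is 372,615 and `model_params(SMALL)` is 5,319. At 4 bytes per float32 weight, the map sizes are roughly 1.5 MB and 21 KB, and neither number changes when more training trajectories are added.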

Towards CNN Map Compression for camera relocalisation

Mar 02, 2017

Abstract: This paper presents a study on the use of Convolutional Neural Networks (CNNs) for camera relocalisation and its application to map compression. We follow state-of-the-art visual relocalisation results and evaluate the response to different data inputs: depth, grayscale, RGB, spatial position, and combinations of these. We use a CNN map representation and introduce the notion of CNN map compression by using a smaller CNN architecture. We evaluate our proposal on a series of publicly available datasets. This formulation allows us to improve relocalisation accuracy by increasing the number of training trajectories while maintaining a constant-size CNN.
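
Feeding the network different input combinations amounts to stacking per-pixel channels before the first convolution. The sketch below builds such inputs from RGB and depth using plain nested lists. Several details are assumptions rather than the paper's method: the channel names, the function `build_input`, and the use of the ITU-R BT.601 luma weights for grayscale. Spatial position is omitted because the abstract does not say how it is encoded.

```python
def to_gray(rgb_pixel):
    """Grayscale via ITU-R BT.601 luma weights (an assumed conversion)."""
    r, g, b = rgb_pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def build_input(rgb, depth, channels):
    """Stack the requested modalities into one H x W x C input.

    rgb:      H x W nested list of (r, g, b) tuples
    depth:    H x W nested list of depth values
    channels: ordered list drawn from {"rgb", "gray", "depth"}
    """
    if len(rgb) != len(depth) or any(len(a) != len(b) for a, b in zip(rgb, depth)):
        raise ValueError("rgb and depth must have the same H x W shape")
    stacked = []
    for rgb_row, depth_row in zip(rgb, depth):
        row = []
        for rgb_px, d in zip(rgb_row, depth_row):
            px = []
            for ch in channels:
                if ch == "rgb":
                    px.extend(rgb_px)           # 3 channels
                elif ch == "gray":
                    px.append(to_gray(rgb_px))  # 1 channel
                elif ch == "depth":
                    px.append(d)                # 1 channel
                else:
                    raise ValueError(f"unknown channel {ch!r}")
            row.append(px)
        stacked.append(row)
    return stacked
```

For instance, `["rgb", "depth"]` yields a 4-channel RGB-D input and `["gray", "depth"]` a 2-channel one. Only the first convolution's input width changes between combinations.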
