Hiroyuki Okada

Evaluation of Impression Difference of a Domestic Mobile Manipulator with Autonomous and/or Remote Control in Fetch-and-Carry Tasks

Dec 30, 2025

Abstract: A single service robot can present two distinct agencies, its onboard autonomy and an operator-mediated agency, yet users experience both through one physical body. We formalize this dual-agency structure as a User-Robot-Operator triad in an autonomous remote-control setting that combines autonomous execution with remote human support. In 2020, before the recent surge of language-based and multimodal interfaces, we developed and evaluated an early-stage prototype that combined natural-language text chat with freehand sketch annotations over the robot's live camera view to support remote intervention. We evaluated three modes (autonomous, remote, and hybrid) in controlled fetch-and-carry tasks using a domestic mobile manipulator (HSR) on a test field compliant with the World Robot Summit 2020 rules. The results show systematic mode-dependent differences in user-rated affinity and provide additional insights into perceived security, indicating that switching or blending agency within one robot measurably shapes human impressions. These findings offer empirical guidance for designing human-in-the-loop mobile manipulation for domestic physical tasks.

* Published in Advanced Robotics (2020). This paper defines an autonomous remote-control setting that makes the User-Robot-Operator triadic interaction explicit in physical tasks, and reports empirical differences in affinity and perceived security across autonomous, remote, and hybrid modes. Please cite: Advanced Robotics 34(20):1291-1308, 2020. DOI:10.1080/01691864.2020.1780152
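
The three modes differ in which agency handles each step of a task, while the user always sees the same robot body. The sketch below is a hypothetical illustration, not the system from the paper. The `ControlMode` names, the `run_task` function, and the hybrid rule (try onboard autonomy first and hand a step to the remote operator only when autonomy fails) are all assumptions.

```python
from enum import Enum
from typing import Callable, List, Tuple

class ControlMode(Enum):
    AUTONOMOUS = "autonomous"
    REMOTE = "remote"
    HYBRID = "hybrid"

# A step executor takes a step name and returns True on success.
# Both executors are hypothetical stand-ins for the robot's onboard
# autonomy and for the operator's remote intervention.
Executor = Callable[[str], bool]

def run_task(steps: List[str], mode: ControlMode, autonomous: Executor,
             remote: Executor) -> List[Tuple[str, str, bool]]:
    """Run each step and log (step, acting agency, success)."""
    log: List[Tuple[str, str, bool]] = []
    for step in steps:
        if mode is ControlMode.AUTONOMOUS:
            log.append((step, "robot", autonomous(step)))
        elif mode is ControlMode.REMOTE:
            log.append((step, "operator", remote(step)))
        elif autonomous(step):
            # HYBRID: onboard autonomy succeeded, so no operator is needed.
            log.append((step, "robot", True))
        else:
            # HYBRID: assumed fallback rule, the failed step goes to the operator.
            log.append((step, "operator", remote(step)))
    return log

if __name__ == "__main__":
    steps = ["navigate to shelf", "grasp cup", "deliver to user"]
    autonomy = lambda step: step != "grasp cup"  # pretend grasping fails
    operator = lambda step: True
    for entry in run_task(steps, ControlMode.HYBRID, autonomy, operator):
        print(entry)
```

In this hybrid run, the grasping step is logged under the operator while the other steps remain with the robot. The agency column is the part of the interaction that changes across modes.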

Decoding BACnet Packets: A Large Language Model Approach for Packet Interpretation

Jul 22, 2024

Abstract: The Industrial Control System (ICS) environment encompasses a wide range of intricate communication protocols, posing substantial challenges for Security Operations Center (SOC) analysts who must monitor, interpret, and respond to network activities and security incidents. Conventional monitoring tools and techniques often struggle to convey the nature and intent of ICS-specific communications. To improve comprehension, we propose a software solution powered by a Large Language Model (LLM). The solution, currently focused on the BACnet protocol, processes packet file data and extracts context using a mapping database and contemporary context retrieval methods for Retrieval-Augmented Generation (RAG). The processed packet information, combined with the extracted context, serves as input to the LLM, which generates a concise summary of the packet file for the user. The software delivers a clear, coherent, and easily understandable summary of network activities, enabling SOC analysts to better assess the current state of the control system.
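
The abstract describes a pipeline with three stages: decode a packet, map its codes to readable context, and retrieve supporting text for the LLM prompt. A minimal sketch of that flow is shown below, under the following assumptions. The header layouts and code values follow the BACnet standard (ASHRAE 135). The small lookup tables stand in for the paper's mapping database, the `KNOWLEDGE` snippets and word-overlap `retrieve` stand in for a real RAG store and embedding search, and all function names are hypothetical. The actual LLM call is omitted.

```python
import re
import struct
from typing import Dict, List

# Hypothetical stand-in for the "mapping database": BACnet codes -> names.
BVLC_FUNCTIONS = {0x04: "Forwarded-NPDU", 0x0A: "Original-Unicast-NPDU",
                  0x0B: "Original-Broadcast-NPDU"}
PDU_TYPES = {0: "Confirmed-Request", 1: "Unconfirmed-Request", 2: "SimpleACK",
             3: "ComplexACK", 4: "SegmentACK", 5: "Error", 6: "Reject", 7: "Abort"}
UNCONFIRMED_SERVICES = {0: "I-Am", 7: "Who-Has", 8: "Who-Is"}
CONFIRMED_SERVICES = {12: "ReadProperty", 14: "ReadPropertyMultiple",
                      15: "WriteProperty"}

def decode_bacnet_ip(payload: bytes) -> Dict[str, str]:
    """Decode BVLC, NPDU, and APDU header fields of one BACnet/IP UDP payload."""
    bvlc_type, function, _length = struct.unpack("!BBH", payload[:4])
    if bvlc_type != 0x81:
        raise ValueError("not a BACnet/IP payload (BVLC type must be 0x81)")
    # Forwarded-NPDU carries the 6-byte B/IP address of the original sender.
    npdu = payload[10:] if function == 0x04 else payload[4:]
    control = npdu[1]  # npdu[0] is the protocol version
    info = {"bvlc": BVLC_FUNCTIONS.get(function, hex(function))}
    if control & 0x80:  # network-layer message, no APDU follows
        info["pdu"] = "Network-Layer-Message"
        return info
    offset = 2
    if control & 0x20:  # DNET (2 bytes), DLEN (1 byte), DADR (DLEN bytes)
        offset += 3 + npdu[offset + 2]
    if control & 0x08:  # SNET (2 bytes), SLEN (1 byte), SADR (SLEN bytes)
        offset += 3 + npdu[offset + 2]
    if control & 0x20:  # hop count follows when DNET is present
        offset += 1
    apdu = npdu[offset:]
    pdu_type = apdu[0] >> 4
    info["pdu"] = PDU_TYPES.get(pdu_type, f"reserved({pdu_type})")
    if pdu_type == 1:  # Unconfirmed-Request: service choice is byte 1
        info["service"] = UNCONFIRMED_SERVICES.get(apdu[1], f"service {apdu[1]}")
    elif pdu_type == 0:  # Confirmed-Request: skip max-APDU and invoke ID bytes
        idx = 5 if apdu[0] & 0x08 else 3  # segmented requests add 2 bytes
        info["service"] = CONFIRMED_SERVICES.get(apdu[idx], f"service {apdu[idx]}")
    return info

# Hypothetical knowledge snippets standing in for the RAG document store.
KNOWLEDGE = [
    "Who-Is: a device broadcasts Who-Is to discover devices; matching devices answer with I-Am.",
    "ReadProperty: a client asks a server device for the value of one property of one object.",
    "WriteProperty: a client asks a server device to change one property value.",
]

def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Rank snippets by word overlap with the query (stand-in for embedding search)."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]

def build_prompt(info: Dict[str, str], context: List[str]) -> str:
    """Combine decoded fields and retrieved context into one LLM prompt."""
    fields = "\n".join(f"{k}: {v}" for k, v in info.items())
    notes = "\n".join(f"- {c}" for c in context)
    return ("Summarize this BACnet packet for a SOC analyst.\n"
            f"Decoded fields:\n{fields}\nBackground:\n{notes}\n")

if __name__ == "__main__":
    who_is = bytes.fromhex("810b000c0120ffff00ff1008")  # broadcast Who-Is
    info = decode_bacnet_ip(who_is)
    query = info.get("service", info["pdu"])
    print(build_prompt(info, retrieve(query, KNOWLEDGE)))  # would go to the LLM
```

The decoder keeps the header-level fields explicit so that the prompt is built from facts parsed out of the packet, with retrieved text serving only as background.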

Multimodal feedback for active robot-object interaction

Sep 10, 2018

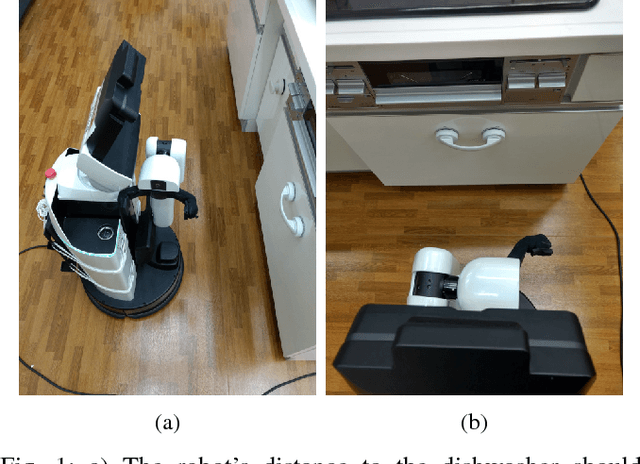

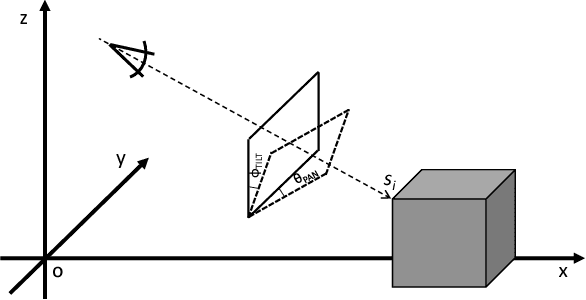

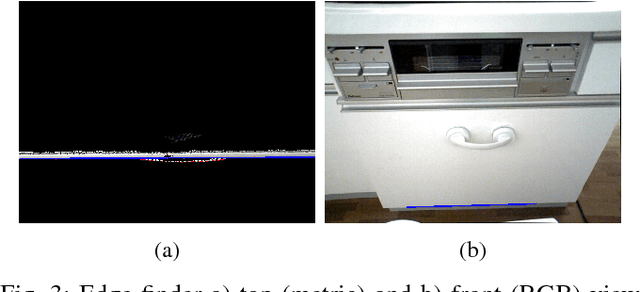

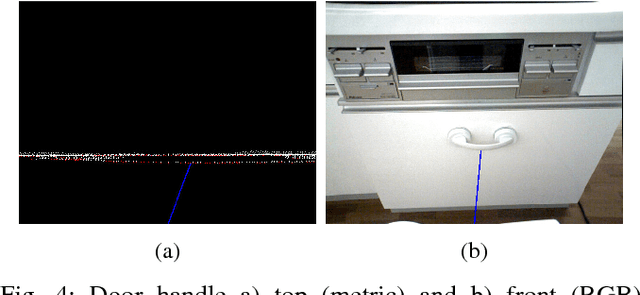

Abstract: In this work, we present a multimodal system for active robot-object interaction using laser-based SLAM, RGBD images, and contact sensors. In the object manipulation task, the robot uses RGBD data to adjust its initial pose with respect to obstacles and target objects, so that it can grasp objects in different configuration spaces while avoiding collisions. It also uses the contact sensors in its hand to update information about the final steps of the manipulation process. We perform a series of experiments to evaluate the proposed system following the RoboCup 2018 international competition regulations. We compare our approach with several baselines, namely a no-feedback method, a visual-only feedback method, and a tactile-only feedback method; our proposed visual-and-tactile feedback method performs best.
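
The four feedback conditions can be illustrated with a toy one-dimensional model of a symmetric parallel gripper closing on a freely sliding object. This sketch is hypothetical and is not the authors' controller. It assumes that visual (RGBD) correction leaves only a fixed residual offset, that the object width is known, and that a one-sided contact lets the hand re-center before the second finger closes. The function and field names are illustrative, and the numbers are not results from the paper.

```python
from dataclasses import dataclass
from itertools import product

@dataclass
class GraspOutcome:
    first_contact_gap_mm: float  # gripper gap when the first finger touches
    object_pushed_mm: float      # how far the object is shoved while closing
    contact_sensed: bool         # whether the robot itself detected contact

def toy_grasp(offset_mm: float, width_mm: float, use_visual: bool,
              use_tactile: bool, visual_residual_mm: float = 0.0) -> GraspOutcome:
    """Toy 1-D grasp; offset_mm is object center minus hand center."""
    # Visual (RGBD) feedback: re-center the pre-grasp pose, leaving only
    # the assumed visual residual.
    e = visual_residual_mm if use_visual else offset_mm
    # Closing symmetrically, the nearer finger touches when gap = width + 2|e|.
    first_gap = width_mm + 2 * abs(e)
    if use_tactile and e != 0:
        # One-sided contact: the gap at first touch reveals |e| = (gap - width) / 2,
        # so the hand shifts by that amount before the other finger closes.
        estimate = (first_gap - width_mm) / 2
        e -= estimate if e > 0 else -estimate
    # Any remaining offset is closed by the first finger pushing the object;
    # only the contact sensors tell the robot that it is touching anything.
    return GraspOutcome(first_gap, abs(e), use_tactile)

if __name__ == "__main__":
    for visual, tactile in product([False, True], repeat=2):
        print(visual, tactile, toy_grasp(15, 60, visual, tactile, visual_residual_mm=3))
```

In this toy model, vision reduces the offset before closing, and touch removes whatever offset remains during the final closing motion. Each channel therefore acts on a different phase of the grasp.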