Luciano Silva

Towards real-time object recognition and pose estimation in point clouds

Nov 27, 2020

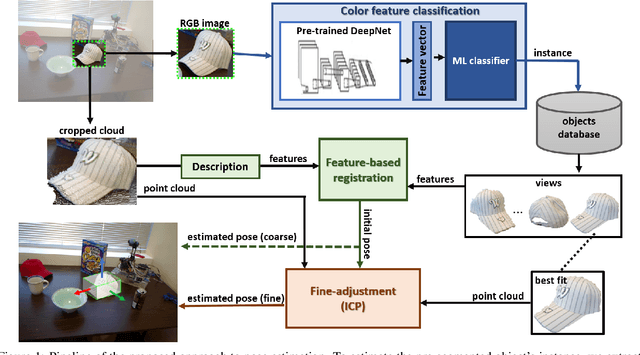

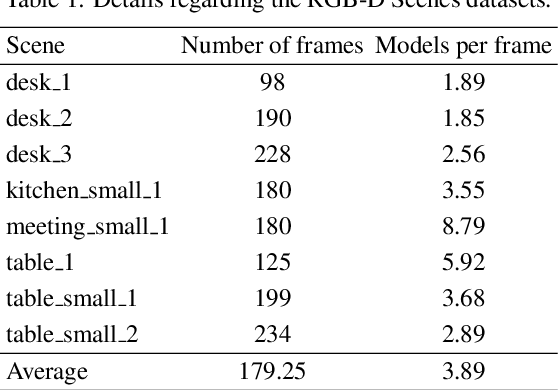

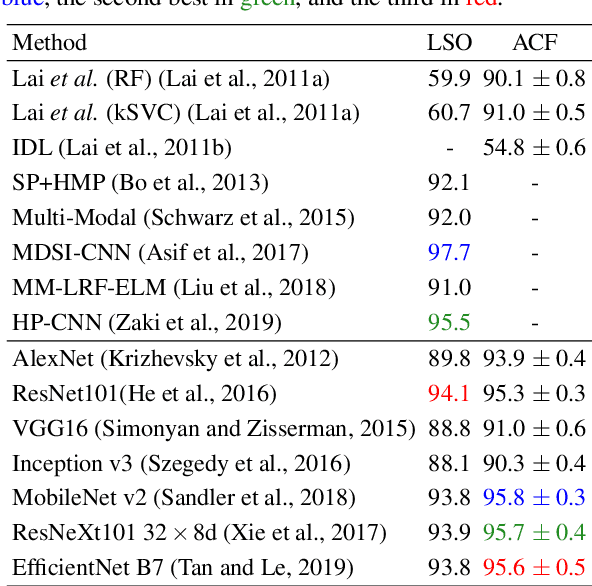

Abstract: Object recognition and 6DoF pose estimation are challenging tasks in computer vision applications. Despite their effectiveness at such tasks, standard methods fall far short of real-time processing rates. This paper presents a novel pipeline to estimate a fine 6DoF pose of objects, applied to realistic scenarios in real time. We split our proposal into three main parts. First, a Color feature classification module leverages pre-trained CNN color features trained on ImageNet for object detection. Next, a Feature-based registration module conducts a coarse pose estimation. Finally, a Fine-adjustment step performs an ICP-based dense registration. Our proposal achieves, in the best case, an accuracy of almost 83% on the RGB-D Scenes dataset. Regarding processing time, the object detection task runs at a frame rate of up to 90 FPS, and the pose estimation at almost 14 FPS in a full execution strategy. We argue that, owing to the proposal's modularity, the full execution could occur only when necessary, with a scheduled execution that unlocks real-time processing even in multitask situations.
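The fine-adjustment step described above is ICP-based. As a rough illustration only, and not the authors' implementation, the following sketch shows a minimal point-to-point ICP loop in Python with NumPy. The function names `best_rigid_transform` and `icp`, the brute-force nearest-neighbour search, and the fixed iteration count are assumptions chosen for brevity; a practical system would likely use a spatial index and a convergence test.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst.

    Assumes src[i] corresponds to dst[i] (Kabsch / SVD solution).
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, iters=30):
    """Hypothetical minimal ICP: returns (R, t) such that src @ R.T + t ~ dst."""
    R_total, t_total = np.eye(3), np.zeros(3)
    cur = src.copy()
    for _ in range(iters):
        # Brute-force nearest neighbour in dst for every current point.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(cur, matched)
        cur = cur @ R.T + t
        # Accumulate the incremental transform.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

A coarse pose from a feature-based stage would typically be applied to `src` before calling `icp`, since this kind of loop only converges reliably from a nearby starting pose.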

Learning to Orient Surfaces by Self-supervised Spherical CNNs

Nov 13, 2020

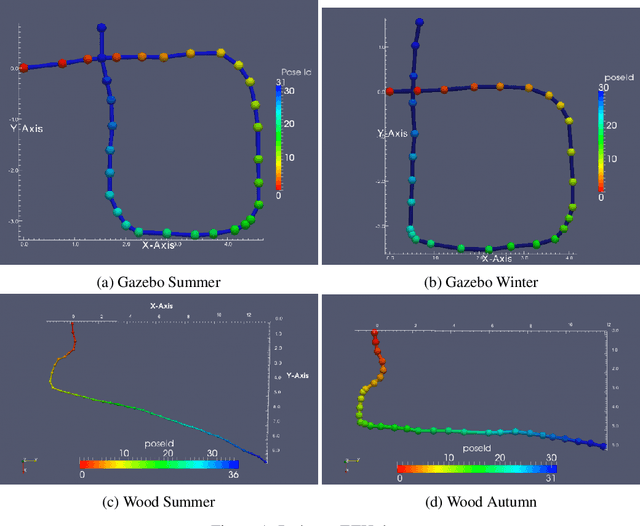

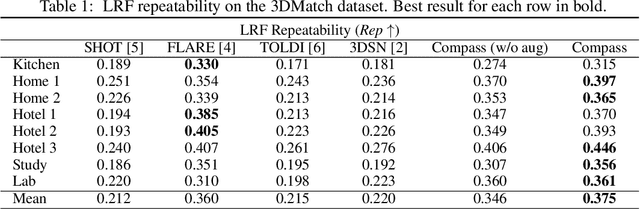

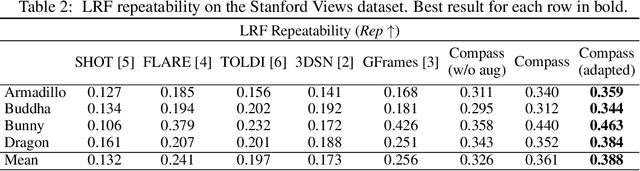

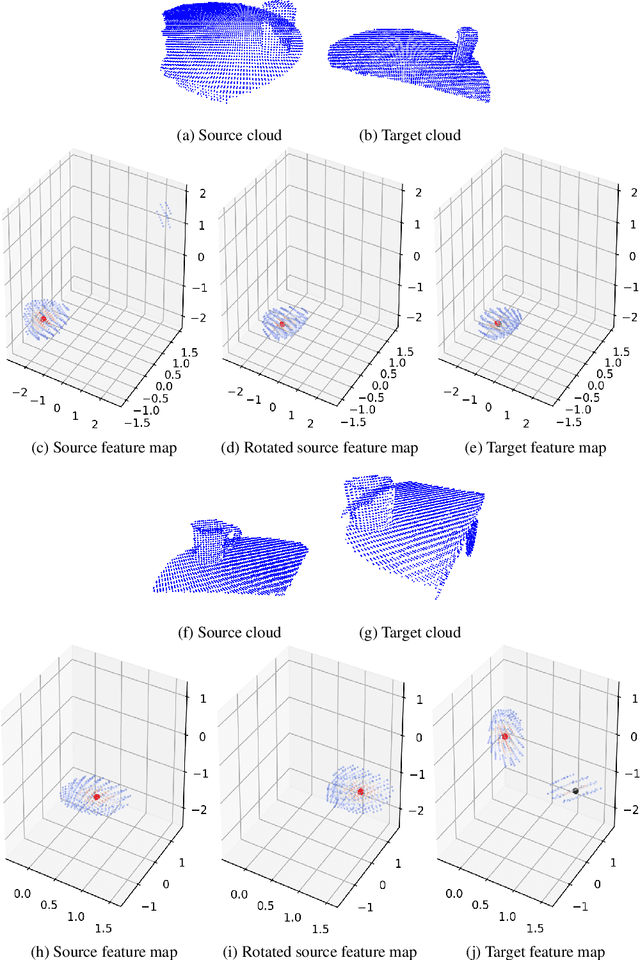

Abstract: Defining and reliably finding a canonical orientation for 3D surfaces is key to many Computer Vision and Robotics applications. This task is commonly addressed by handcrafted algorithms exploiting geometric cues deemed distinctive and robust by the designer. Yet, one might conjecture that humans learn the notion of the inherent orientation of 3D objects from experience and that machines may do likewise. In this work, we show the feasibility of learning a robust canonical orientation for surfaces represented as point clouds. Based on the observation that the quintessential property of a canonical orientation is equivariance to 3D rotations, we propose to employ Spherical CNNs, a recently introduced machinery that can learn equivariant representations defined on the Special Orthogonal group SO(3). Specifically, spherical correlations compute feature maps whose elements define 3D rotations. Our method learns such feature maps from raw data by a self-supervised training procedure and robustly selects a rotation to transform the input point cloud into a learned canonical orientation. Thereby, we realize the first end-to-end learning approach to define and extract the canonical orientation of 3D shapes, which we aptly dub Compass. Experiments on several public datasets demonstrate its effectiveness at orienting local surface patches as well as whole objects.
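The equivariance property mentioned above can be made concrete with a small example. The sketch below is not Compass and involves no learning; it uses a simple handcrafted PCA frame, of the kind the abstract contrasts with, purely to illustrate what it means for an orientation estimate to rotate together with the input. The names `canonical_frame` and `canonicalize` and the third-moment sign rule are assumptions made for this illustration.

```python
import numpy as np

def canonical_frame(points):
    """Right-handed 3x3 frame (columns are axes) from PCA of the cloud.

    Handcrafted stand-in: assumes distinct eigenvalues and a skewed
    point distribution so that axis signs can be disambiguated.
    """
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)
    _, vecs = np.linalg.eigh(cov)                 # eigenvalues in ascending order
    x, y = vecs[:, 2].copy(), vecs[:, 1].copy()   # two largest-variance directions
    for axis in (x, y):
        # Flip the axis so the third moment of the projections is positive.
        if ((centered @ axis) ** 3).sum() < 0:
            axis *= -1.0
    z = np.cross(x, y)                            # completes a right-handed frame
    return np.stack([x, y, z], axis=1)

def canonicalize(points):
    """Express the centered cloud in its own canonical frame."""
    centered = points - points.mean(axis=0)
    return centered @ canonical_frame(points)
```

If the input cloud is rotated by a rotation `Q`, the estimated frame becomes `Q @ canonical_frame(points)`, so `canonicalize` returns the same coordinates for both clouds. That is the behaviour a learned canonical orientation is also expected to satisfy.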

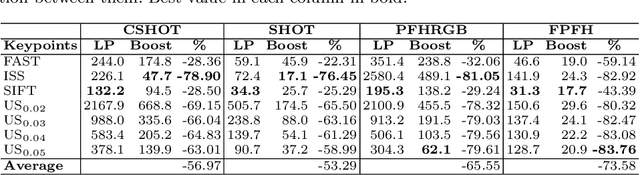

Boosting Object Recognition in Point Clouds by Saliency Detection

Nov 06, 2019

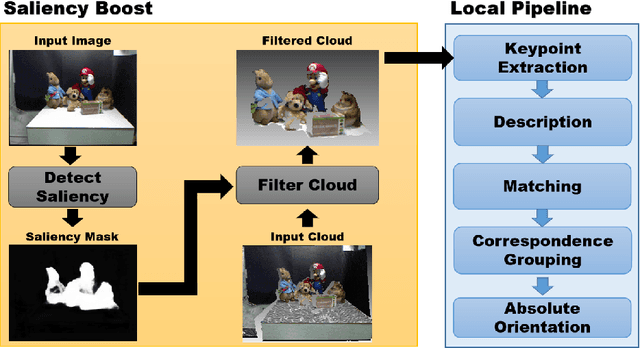

Abstract: Object recognition in 3D point clouds is a challenging task, especially when time is an important factor, as in industrial applications. Local descriptors are a suitable choice whenever the 6 DoF pose of recognized objects should also be estimated. However, the pipeline for this kind of descriptor is highly time-consuming. In this work, we propose an update to the traditional pipeline that adds a preliminary filtering stage referred to as saliency boost. We perform tests on a standard object recognition benchmark, considering four keypoint detectors and four local descriptors, in order to compare time and recognition performance between the traditional pipeline and the boosted one. Timing results show that the boosted pipeline can be up to 5 times faster, with the recognition rate improving in most cases and decreasing only slightly in the others. These results suggest that the boosted pipeline can speed up processing substantially, with limited impact on, or even benefits to, recognition accuracy.
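The abstract does not say how saliency is computed, so the following is only a sketch of the general idea of a preliminary filtering stage: score every point, keep the highest-scoring fraction, and pass the reduced cloud on to keypoint detection and description. The function name `saliency_boost`, the `keep_ratio` parameter, and the assumption that a per-point saliency score is already available are all hypothetical.

```python
import numpy as np

def saliency_boost(points, saliency, keep_ratio=0.25):
    """Keep the most salient fraction of points before keypoint detection.

    points:     (N, 3) array of 3D points.
    saliency:   (N,) array of per-point scores (how these are obtained is
                outside this sketch; any saliency detector could supply them).
    keep_ratio: fraction of points to retain (illustrative default).
    """
    k = max(1, int(round(keep_ratio * len(points))))
    keep = np.argsort(saliency)[-k:]      # indices of the k highest scores
    return points[np.sort(keep)]          # preserve the original point order
```

Because keypoint detection and local description then run on fewer points, the later stages of the pipeline have less work to do, which is the mechanism behind the kind of speed-up the abstract reports.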
