Luca Barbieri

Target Classification for Integrated Sensing and Communication in Industrial Deployments

Dec 23, 2025

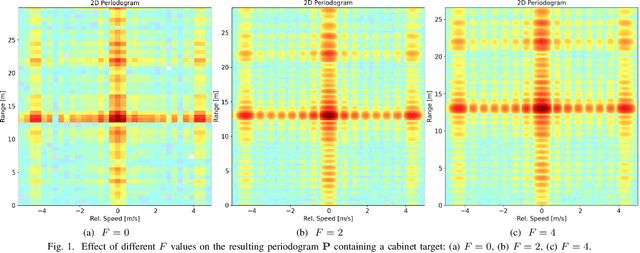

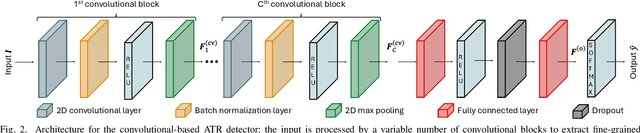

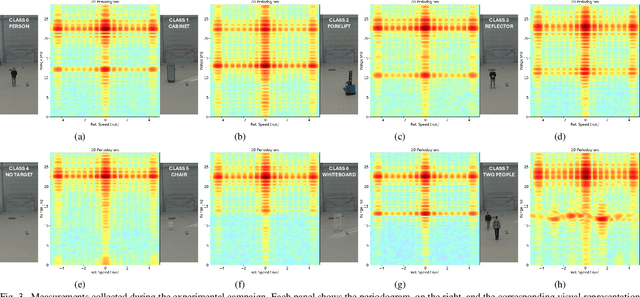

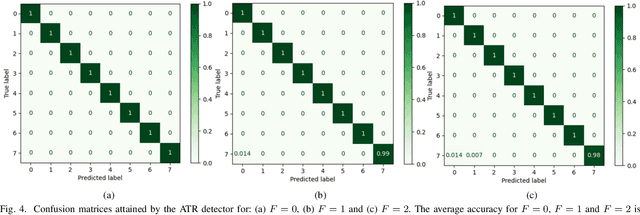

Abstract: Integrated Sensing and Communication (ISAC) systems enable cellular networks to jointly communicate and sense the environment. While opportunities and potential performance have been largely investigated in simulations, few experimental works have showcased Automatic Target Recognition (ATR) effectiveness in a real-world deployment based on cellular radio units. To bridge this gap, this paper presents an initial study investigating the feasibility of ATR for ISAC. Our ATR solution uses a Deep Learning (DL)-based detector to infer the target class directly from the radar images generated by the ISAC system. The DL detector is evaluated with experimental data from an ISAC testbed based on commercially available mmWave radio units in the ARENA 2036 industrial research campus located in Stuttgart, Germany. Experimental results show accurate classification performance, demonstrating the feasibility of ATR for ISAC with cellular hardware in our setup. We finally provide insights into the open generalization challenges that will fuel future work on the topic.

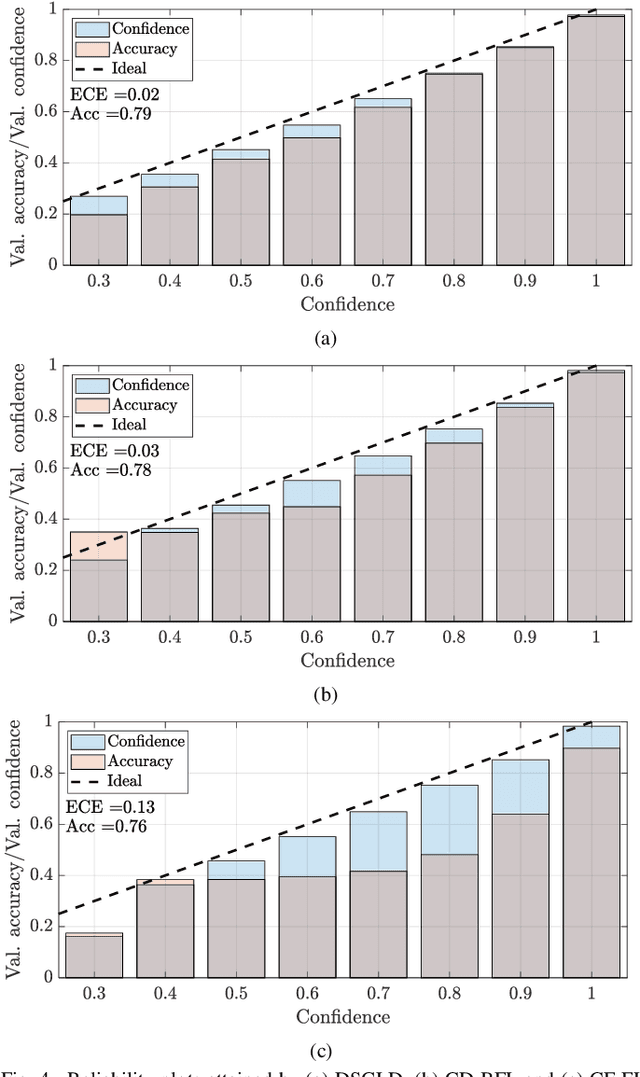

Bayesian Federated Learning for Continual Training

Apr 21, 2025

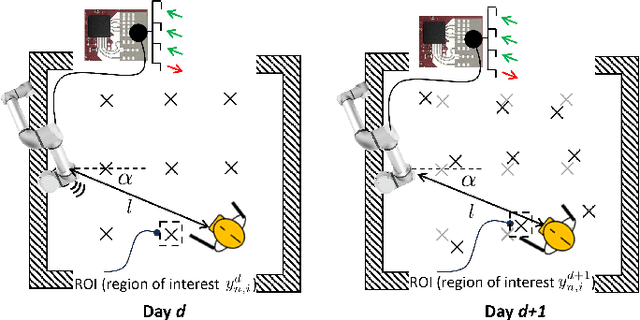

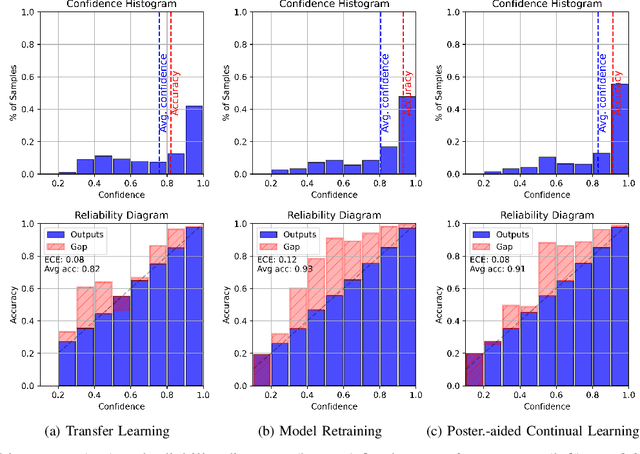

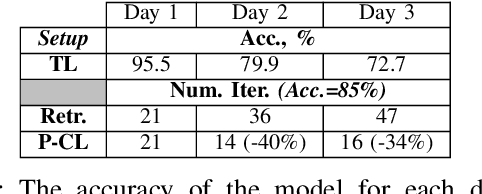

Abstract: Bayesian Federated Learning (BFL) enables uncertainty quantification and robust adaptation in distributed learning. In contrast to the frequentist approach, it estimates the posterior distribution of a global model, offering insights into model reliability. However, current BFL methods neglect continual learning challenges in dynamic environments where data distributions shift over time. We propose a continual BFL framework applied to human sensing with radar data collected over several days. Using Stochastic Gradient Langevin Dynamics (SGLD), our approach sequentially updates the model, leveraging past posteriors to construct the prior for the new tasks. We assess the accuracy, the expected calibration error (ECE) and the convergence speed of our approach against several baselines. Results highlight the effectiveness of continual Bayesian updates in preserving knowledge and adapting to evolving data.
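The expected calibration error mentioned in the abstract above can be illustrated with a minimal sketch. It uses the common equal-width binning definition (the weighted average, over confidence bins, of the gap between accuracy and mean confidence). The function name, the choice of 10 bins, and the input layout are assumptions made for illustration; the paper's exact evaluation setup is not stated in the abstract.

```python
import math


def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE: sum over bins of (bin weight) * |accuracy - mean confidence|.

    confidences: predicted probability of the chosen class, each in [0, 1].
    correct:     1 (or True) if the prediction was right, else 0 (or False).
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    # Per-bin accumulators: [sample count, sum of confidences, sum of hits].
    bins = [[0, 0.0, 0.0] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        # Bin b covers roughly (b / n_bins, (b + 1) / n_bins], up to
        # floating-point rounding; a confidence of 0 is placed in bin 0.
        b = min(max(math.ceil(conf * n_bins) - 1, 0), n_bins - 1)
        bins[b][0] += 1
        bins[b][1] += conf
        bins[b][2] += float(hit)

    ece = 0.0
    for count, conf_sum, hit_sum in bins:
        if count:
            accuracy = hit_sum / count
            mean_conf = conf_sum / count
            ece += (count / n) * abs(accuracy - mean_conf)
    return ece
```

A model that reports 0.9 confidence but is right only three times out of four gets an ECE of about 0.15 under this definition; a lower value indicates better calibration.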

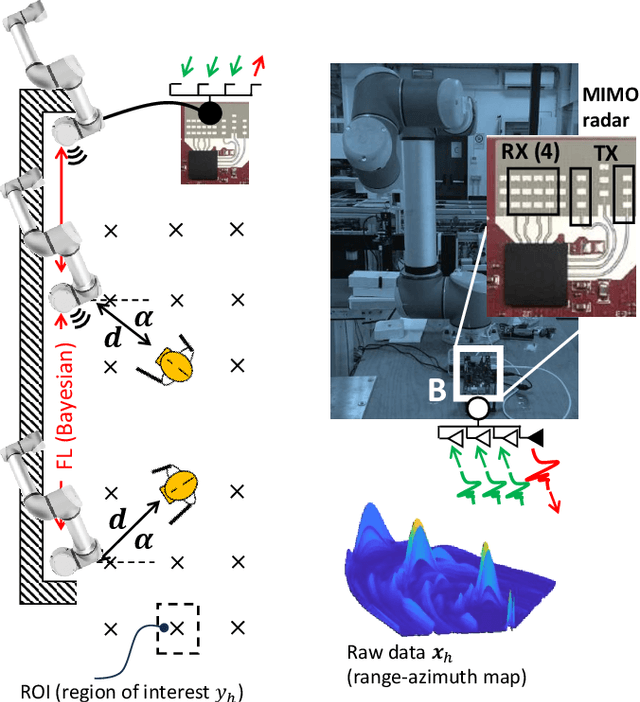

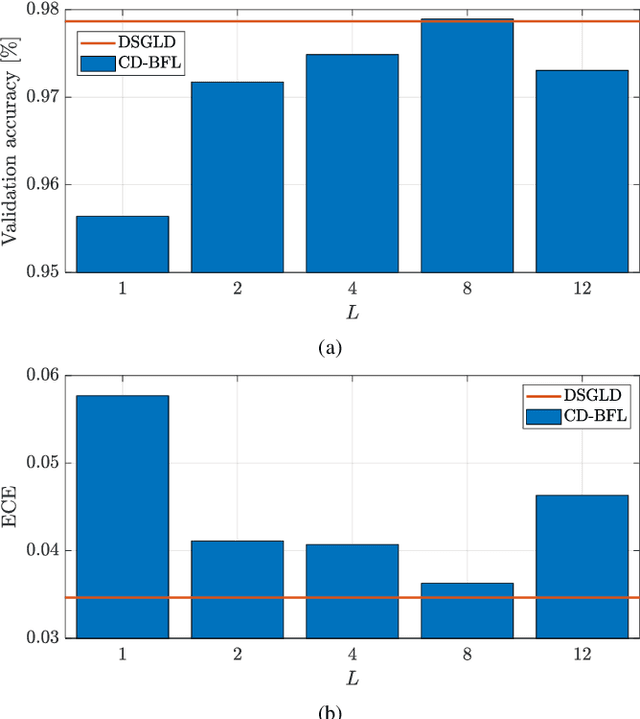

Compressed Bayesian Federated Learning for Reliable Passive Radio Sensing in Industrial IoT

May 09, 2024

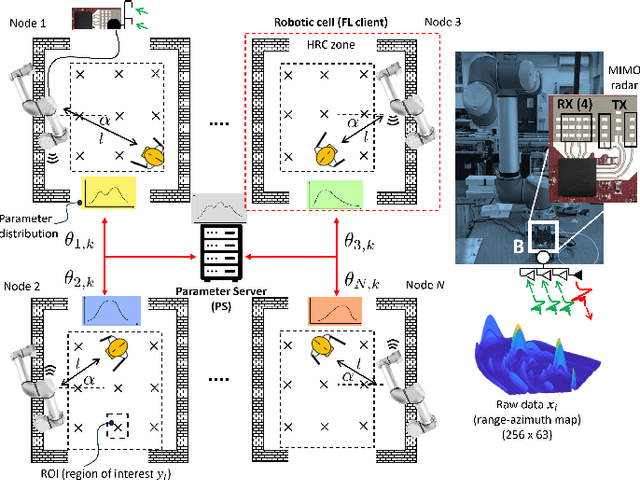

Abstract: Bayesian Federated Learning (FL) has been recently introduced to provide well-calibrated Machine Learning (ML) models quantifying the uncertainty of their predictions. Despite their advantages compared to frequentist FL setups, Bayesian FL tools implemented over decentralized networks are subject to high communication costs due to the iterated exchange of local posterior distributions among cooperating devices. Therefore, this paper proposes a communication-efficient decentralized Bayesian FL policy to reduce the communication overhead without sacrificing final learning accuracy and calibration. The proposed method integrates compression policies and allows devices to perform multiple optimization steps before sending the local posterior distributions. We integrate the developed tool in an Industrial Internet of Things (IIoT) use case where collaborating nodes equipped with autonomous radar sensors are tasked to reliably localize human operators in a workplace shared with robots. Numerical results show that the developed approach obtains highly accurate yet well-calibrated ML models comparable to those provided by conventional (uncompressed) Bayesian FL tools while substantially decreasing the communication overhead (i.e., by up to 99%). Furthermore, the proposed approach is advantageous when compared with state-of-the-art compressed frequentist FL setups in terms of calibration, especially when the statistical distribution of the testing dataset changes.

A Secure and Trustworthy Network Architecture for Federated Learning Healthcare Applications

Apr 17, 2024

Abstract: Federated Learning (FL) has emerged as a promising approach for privacy-preserving machine learning, particularly in sensitive domains such as healthcare. In this context, the TRUSTroke project aims to leverage FL to assist clinicians in ischemic stroke prediction. This paper provides an overview of the TRUSTroke FL network infrastructure. The proposed architecture adopts a client-server model with a central Parameter Server (PS). We introduce a Docker-based design for the client nodes, offering a flexible solution for implementing FL processes in clinical settings. The impact of different communication protocols (HTTP or MQTT) on FL network operation is analyzed, with MQTT selected for its suitability in FL scenarios. A control plane to support the main operations required by FL processes is also proposed. The paper concludes with an analysis of security aspects of the FL architecture, addressing potential threats and proposing mitigation strategies to increase the trustworthiness level.

Deep Learning-based Cooperative LiDAR Sensing for Improved Vehicle Positioning

Feb 26, 2024

Abstract: Accurate positioning is known to be a fundamental requirement for the deployment of Connected Automated Vehicles (CAVs). To meet this need, an emerging trend is represented by cooperative methods where vehicles fuse information coming from navigation and imaging sensors via Vehicle-to-Everything (V2X) communications for joint positioning and environmental perception. In line with this trend, this paper proposes a novel data-driven cooperative sensing framework, termed Cooperative LiDAR Sensing with Message Passing Neural Network (CLS-MPNN), where spatially distributed vehicles collaborate in perceiving the environment via LiDAR sensors. Vehicles process their LiDAR point clouds using a Deep Neural Network (DNN), namely a 3D object detector, to identify and localize possible static objects present in the driving environment. Data are then aggregated by a centralized infrastructure that performs Data Association (DA) using a Message Passing Neural Network (MPNN) and runs the Implicit Cooperative Positioning (ICP) algorithm. The proposed approach is evaluated using two realistic driving scenarios generated by a high-fidelity automated driving simulator. The results show that CLS-MPNN outperforms a conventional non-cooperative localization algorithm based on Global Navigation Satellite System (GNSS) and a state-of-the-art cooperative Simultaneous Localization and Mapping (SLAM) method while approaching the performance of an oracle system with ideal sensing and perfect association.

A Carbon Tracking Model for Federated Learning: Impact of Quantization and Sparsification

Oct 12, 2023

Abstract: Federated Learning (FL) methods adopt efficient communication technologies to distribute machine learning tasks across edge devices, reducing the overhead in terms of data storage and computational complexity compared to centralized solutions. Rather than moving large data volumes from producers (sensors, machines) to energy-hungry data centers, which raises environmental concerns due to resource demands, FL provides an alternative solution to mitigate the energy demands of several learning tasks while enabling new Artificial Intelligence of Things (AIoT) applications. This paper proposes a framework for real-time monitoring of the energy and carbon footprint impacts of FL systems. The carbon tracking tool is evaluated for consensus (fully decentralized) and classical FL policies. For the first time, we present a quantitative evaluation of different computation- and communication-efficient FL methods from the perspectives of energy consumption and carbon equivalent emissions, also suggesting general guidelines for energy-efficient design. Results indicate that consensus-driven FL implementations should be preferred for limiting carbon emissions when the energy efficiency of the communication is low (i.e., < 25 Kbit/Joule). Besides, quantization and sparsification operations are shown to strike a balance between learning performance and energy consumption, leading to sustainable FL designs.

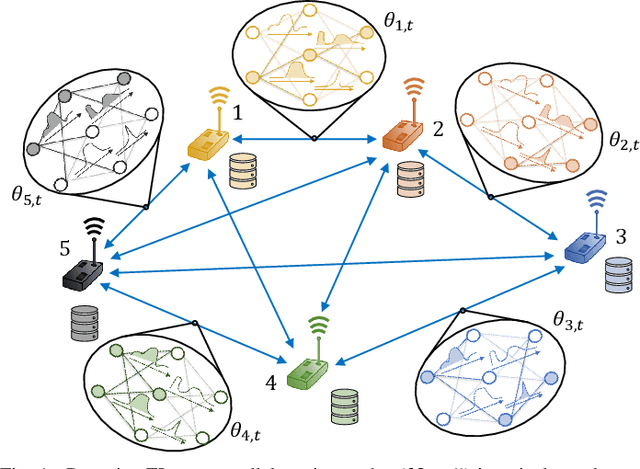

Channel-driven Decentralized Bayesian Federated Learning for Trustworthy Decision Making in D2D Networks

Oct 19, 2022

Abstract: Bayesian Federated Learning (FL) offers a principled framework to account for the uncertainty caused by limitations in the data available at the nodes implementing collaborative training. In Bayesian FL, nodes exchange information about local posterior distributions over the model parameter space. This paper focuses on Bayesian FL implemented in a device-to-device (D2D) network via Decentralized Stochastic Gradient Langevin Dynamics (DSGLD), a recently introduced gradient-based Markov Chain Monte Carlo (MCMC) method. Based on the observation that DSGLD applies random Gaussian perturbations of model parameters, we propose to leverage channel noise on the D2D links as a mechanism for MCMC sampling. The proposed approach is compared against a conventional implementation of frequentist FL based on compression and digital transmission, highlighting advantages and limitations.
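The Gaussian perturbation that the abstract above refers to can be seen in a minimal single-node Langevin sketch: each step moves the parameter along the gradient of the log-posterior and then adds zero-mean Gaussian noise whose variance is twice the step size. This is not the decentralized DSGLD scheme of the paper and involves no channel model. The function name and the scalar Gaussian target are assumptions for illustration, and the gradient here is exact rather than a minibatch estimate.

```python
import math
import random


def sgld_sample(grad_neg_log_post, theta0, step, n_steps, rng):
    """Langevin sampling: theta <- theta - step * grad U(theta) + N(0, 2 * step).

    grad_neg_log_post: gradient of U(theta) = -log posterior (up to a constant).
    rng:               a random.Random instance, passed in for reproducibility.
    """
    theta = theta0
    samples = []
    noise_std = math.sqrt(2.0 * step)
    for _ in range(n_steps):
        # Gradient step toward high posterior density plus Gaussian perturbation.
        theta = theta - step * grad_neg_log_post(theta) + rng.gauss(0.0, noise_std)
        samples.append(theta)
    return samples


# Illustrative target: a Gaussian posterior with mean 2 and unit variance,
# for which U(theta) = (theta - 2)**2 / 2 and grad U(theta) = theta - 2.
draws = sgld_sample(lambda t: t - 2.0, theta0=0.0, step=0.05,
                    n_steps=20000, rng=random.Random(0))
kept = draws[1000:]  # Discard early iterations as burn-in.
posterior_mean = sum(kept) / len(kept)
```

After burn-in, the retained draws approximate samples from the target, so their average should land close to 2. The injected noise is what turns plain gradient descent into a sampler, which is why an external noise source with suitable statistics could in principle play the same role.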

Opportunities of Federated Learning in Connected, Cooperative and Automated Industrial Systems

Jan 12, 2021

Abstract: Next-generation autonomous and networked industrial systems (i.e., robots, vehicles, drones) have driven advances in ultra-reliable, low-latency communications (URLLC) and computing. These networked multi-agent systems require fast, communication-efficient and distributed machine learning (ML) to provide mission-critical control functionalities. Distributed ML techniques, including federated learning (FL), represent a mushrooming multidisciplinary research area weaving in sensing, communication and learning. FL enables continual model training in distributed wireless systems: rather than fusing raw data samples at a centralized server, FL leverages a cooperative fusion approach where networked agents, connected via URLLC, act as distributed learners that periodically exchange their locally trained model parameters. This article explores emerging opportunities of FL for next-generation networked industrial systems. Open problems are discussed, focusing on cooperative driving in connected automated vehicles and collaborative robotics in smart manufacturing.
