Lu Meng

DeepQuark: deep-neural-network approach to multiquark bound states

Jun 25, 2025

Abstract: For the first time, we implement the deep-neural-network-based variational Monte Carlo approach for multiquark bound states, whose complexity surpasses that of electron or nucleon systems due to strong SU(3) color interactions. We design a novel, high-efficiency architecture, DeepQuark, to address the unique challenges in multiquark systems, such as stronger correlations, extra discrete quantum numbers, and intractable confinement interactions. Our method demonstrates competitive performance with state-of-the-art approaches, including diffusion Monte Carlo and the Gaussian expansion method, in the nucleon, doubly heavy tetraquark, and fully heavy tetraquark systems. Notably, it outperforms existing calculations for pentaquarks, exemplified by the triply heavy pentaquark. For the nucleon, we successfully incorporate three-body flux-tube confinement interactions without additional computational cost. In tetraquark systems, we consistently describe the hadronic molecule $T_{cc}$ and the compact tetraquark $T_{bb}$ with an unbiased form of wave function ansatz. In the pentaquark sector, we obtain a weakly bound $\bar D^*\Xi_{cc}^*$ molecule $P_{cc\bar c}(5715)$ with $S=\frac{5}{2}$ and its bottom partner $P_{bb\bar b}(15569)$. They can be viewed as the analogs of the molecular $T_{cc}$. We recommend an experimental search for $P_{cc\bar c}(5715)$ in the D-wave $J/\psi \Lambda_c$ channel. DeepQuark holds great promise for extension to larger multiquark systems, overcoming the computational barriers of conventional methods. It also serves as a powerful framework for exploring confining mechanisms beyond two-body interactions in multiquark states, which may offer valuable insights into nonperturbative QCD and general many-body physics.

Dynamic Perturbation-Adaptive Adversarial Training on Medical Image Classification

Mar 11, 2024

Abstract: Medical Image Classification (MIC) has seen remarkable successes recently, mainly due to the wide application of convolutional neural networks (CNNs). However, adversarial examples (AEs), which are imperceptibly similar to raw data, raise serious concerns about network robustness. Although adversarial training (AT), in response to malevolent AEs, is recognized as an effective approach to improving robustness, it is challenging to overcome the decline in network generalization caused by AT. In this paper, in order to preserve high generalization while improving robustness, we propose a dynamic perturbation-adaptive adversarial training (DPAAT) method. It places AT in a dynamic learning environment to generate adaptive data-level perturbations and provides a dynamically updated criterion, based on collected loss information, to address the disadvantage of fixed perturbation sizes in conventional AT methods and their dependence on external transference. Comprehensive testing on the dermatology HAM10000 dataset showed that DPAAT not only achieved better robustness improvement and generalization preservation but also significantly enhanced mean average precision and interpretability on various CNNs, indicating its great potential as a generic adversarial training method for MIC.

Multi-View Spatial-Temporal Network for Continuous Sign Language Recognition

Apr 19, 2022

Abstract: Sign language is a beautiful visual language and is also the primary language used by speech- and hearing-impaired people. However, sign language has many complex expressions, which are difficult for the public to understand and master. Sign language recognition algorithms will significantly facilitate communication between hearing-impaired people and hearing people. Traditional continuous sign language recognition often uses a sequence learning method based on a Convolutional Neural Network (CNN) and a Long Short-Term Memory Network (LSTM). These methods can only learn spatial and temporal features separately, so they cannot learn the complex spatial-temporal features of sign language. LSTMs also struggle to learn long-term dependencies. To alleviate these problems, this paper proposes a multi-view spatial-temporal continuous sign language recognition network. The network consists of three parts. The first part is a Multi-View Spatial-Temporal Feature Extractor Network (MSTN), which can directly extract the spatial-temporal features of RGB and skeleton data; the second is a sign language encoder network based on the Transformer, which can learn long-term dependencies; the third is a Connectionist Temporal Classification (CTC) decoder network, which is used to predict the whole meaning of the continuous sign language. Our algorithm is tested on two public sign language datasets, SLR-100 and PHOENIX-Weather 2014T (RWTH), and achieves excellent performance on both. The word error rate on the SLR-100 dataset is 1.9%, and the word error rate on the RWTH-PHOENIX-Weather dataset is 22.8%.
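As a rough illustration of two ideas named in this abstract, the sketch below shows greedy CTC collapsing (merge repeated frame labels, then drop blanks) and a word error rate computed as word-level edit distance divided by reference length. It is not the authors' implementation: the function names `ctc_greedy_collapse` and `word_error_rate`, the blank index `0`, and the toy inputs are all assumptions made for the example.

```python
def ctc_greedy_collapse(frame_labels, blank=0):
    """Collapse per-frame label ids: merge consecutive repeats, then drop blanks.

    `blank=0` is an assumed convention for this sketch.
    """
    output = []
    previous = None
    for label in frame_labels:
        # Emit a label only when it differs from the previous frame and is not blank.
        if label != previous and label != blank:
            output.append(label)
        previous = label
    return output


def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference prefix
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)
```

A blank between two identical labels keeps them as separate outputs, which is why `[0, 1, 1, 0, 1, 2, 2, 0]` collapses to `[1, 1, 2]` rather than `[1, 2]`. The word error rate can exceed 1.0 when the hypothesis contains many inserted words.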

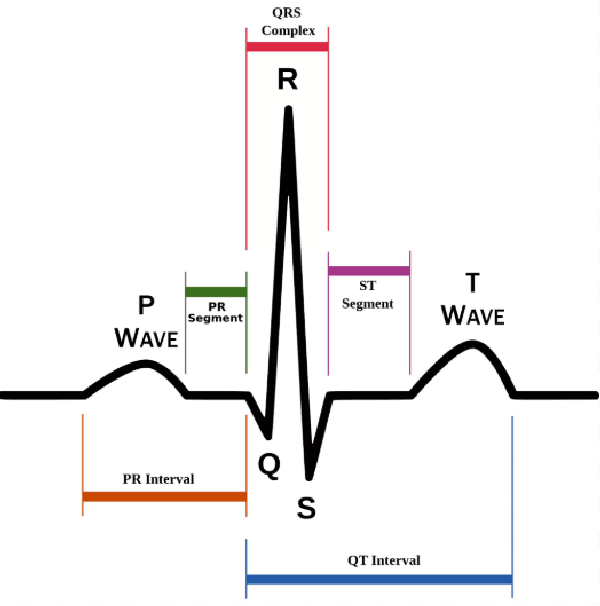

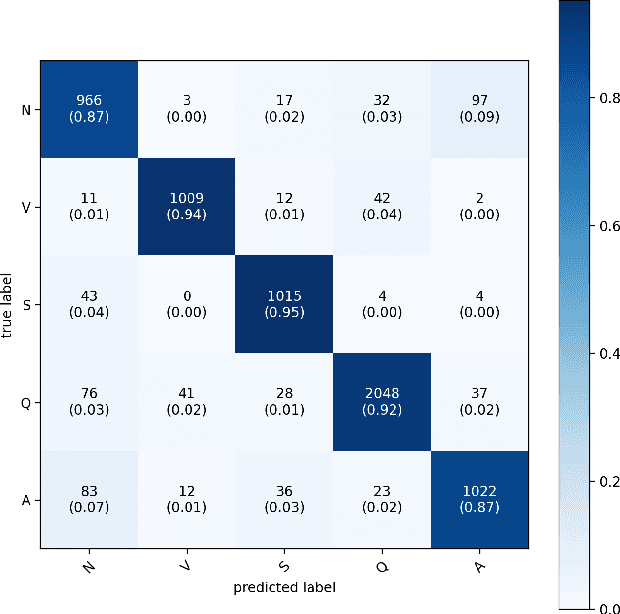

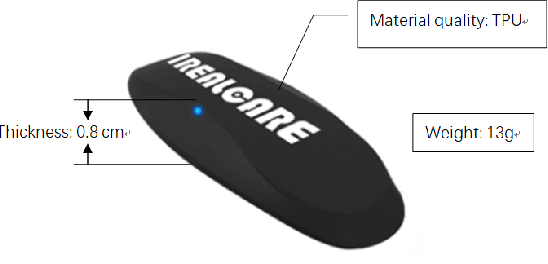

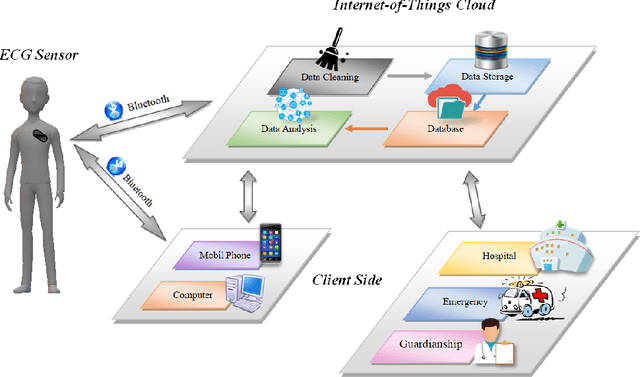

A Wearable ECG Monitor for Deep Learning Based Real-Time Cardiovascular Disease Detection

Jan 25, 2022

Abstract: Cardiovascular disease has become one of the most significant threats endangering human life and health. Recently, Electrocardiogram (ECG) monitoring has been transformed into remote cardiac monitoring by Holter surveillance. However, the widely used Holter monitors can bring a great deal of discomfort and inconvenience to the individuals who carry them. In this work, we developed a new wireless ECG patch and applied a deep learning framework based on Convolutional Neural Network (CNN) and Long Short-Term Memory (LSTM) models. However, we find that models using existing techniques are not able to differentiate two main heartbeat types (supraventricular premature beat and atrial fibrillation) in our newly obtained dataset, resulting in a low accuracy of 58.0%. We propose a semi-supervised method that handles the badly labelled data samples using confidence-level-based training. The experimental results show that the proposed method achieves an average accuracy of 90.2%, i.e., 5.4% higher than the accuracy of conventional ECG classification methods.
