Lorenzo Nardi

Learning an Overlap-based Observation Model for 3D LiDAR Localization

May 25, 2021

Abstract: Localization is a crucial capability for mobile robots and autonomous cars. In this paper, we address the problem of learning an observation model for Monte-Carlo localization using 3D LiDAR data. We propose a novel, neural network-based observation model that computes the expected overlap of two 3D LiDAR scans. The model predicts the overlap and the yaw angle offset between the current sensor reading and virtual frames generated from a pre-built map. We integrate this observation model into a Monte-Carlo localization framework and test it on urban datasets collected with a car in different seasons. The experiments presented in this paper illustrate that our method can reliably localize a vehicle in typical urban environments. We furthermore provide comparisons to a beam-endpoint method and a histogram-based method, indicating superior global localization performance of our method with fewer particles.
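To illustrate how an overlap prediction can act as an observation model inside Monte-Carlo localization, the following is a minimal, generic particle-filter sketch. It is not the authors' implementation. It assumes that some predictor (here not shown; the network described above would fill that role) has already produced one overlap value in [0, 1] per particle. The Gaussian-shaped likelihood `overlap_likelihood`, its `sigma` parameter, and the use of systematic resampling are illustrative assumptions.

```python
import math
import random


def overlap_likelihood(overlap, sigma=0.2):
    """Assumed likelihood: largest when the predicted overlap is close to 1."""
    return math.exp(-((1.0 - overlap) ** 2) / (2.0 * sigma ** 2))


def update_weights(weights, overlaps, sigma=0.2):
    """Multiply each particle weight by its overlap likelihood, then normalize."""
    new = [w * overlap_likelihood(o, sigma) for w, o in zip(weights, overlaps)]
    total = sum(new)
    if total == 0.0:
        # Degenerate case: every likelihood underflowed, fall back to uniform.
        return [1.0 / len(new)] * len(new)
    return [w / total for w in new]


def systematic_resample(particles, weights, rng=None):
    """Low-variance (systematic) resampling with a single random offset."""
    if rng is None:
        rng = random.Random()
    n = len(particles)
    step = 1.0 / n
    start = rng.random() * step  # one offset in [0, 1/n)
    result = []
    cumulative = weights[0]
    i = 0
    for k in range(n):
        u = start + k * step
        # Advance to the particle whose cumulative weight covers u;
        # the i < n - 1 guard protects against floating-point round-off.
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        result.append(particles[i])
    return result


# Usage with made-up poses (x, y, yaw) and made-up overlap predictions.
particles = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.1), (5.0, 2.0, 1.2)]
weights = update_weights([1 / 3] * 3, [0.4, 0.9, 0.1])
resampled = systematic_resample(particles, weights, random.Random(0))
```

Particles whose predicted overlap with the current scan is high gain weight and are duplicated during resampling, while poorly matching hypotheses die out.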

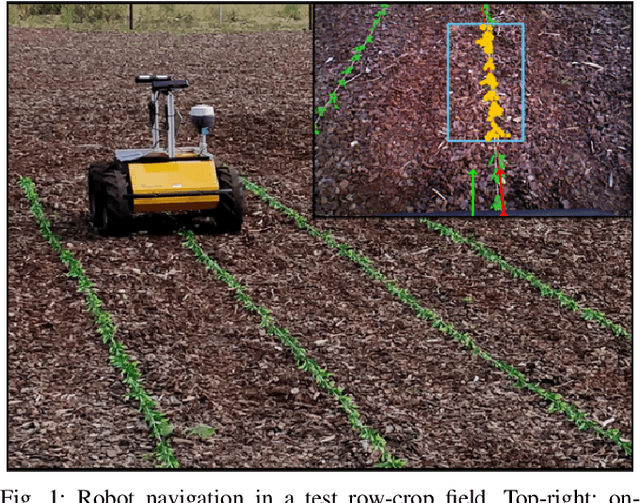

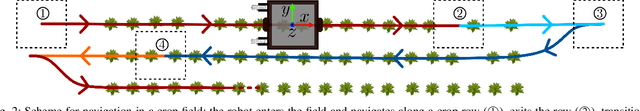

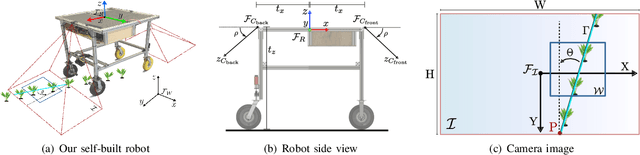

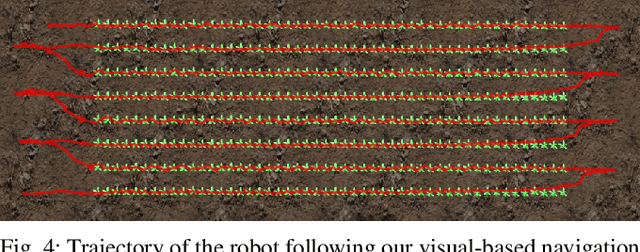

Visual Servoing-based Navigation for Monitoring Row-Crop Fields

Sep 27, 2019

Abstract: Autonomous navigation is a prerequisite for field robots to carry out precision agriculture tasks. Typically, a robot has to navigate through a whole crop field several times during a season to monitor the plants, apply agrochemicals, or perform targeted intervention actions. In this paper, we propose a framework tailored for navigation in row-crop fields that exploits the regular crop-row structure present in the fields. Our approach uses only images from on-board cameras, without performing explicit localization or maintaining a map of the field, and thus can operate without the expensive RTK-GPS solutions often used in agricultural automation systems. Our navigation approach allows the robot to follow the crop rows accurately and handles the switch to the next row seamlessly within the same framework. We implemented our approach using C++ and ROS and thoroughly tested it in several simulated environments with fields of different shapes and sizes. We also demonstrated the system running at frame rate on an actual robot operating on a test row-crop field. The code and data have been published.

Long-Term Robot Navigation in Indoor Environments Estimating Patterns in Traversability Changes

Sep 27, 2019

Abstract: Nowadays, mobile robots are deployed in many indoor environments, such as offices or hospitals. These environments are subject to changes in traversability that often follow repeating patterns. In this paper, we investigate the problem of navigating in such environments over extended periods of time by capturing these patterns and exploiting this knowledge to make informed decisions. Our approach incrementally estimates a model of the traversability changes from the robot's observations and uses a probabilistic graphical model to make predictions at currently unobserved locations. In the belief space defined by these predictions, we plan paths that trade off the risk of encountering obstacles against the information gain of visiting unknown locations. We implemented our approach and tested it in different indoor environments. The experiments suggest that, in the long run, our approach leads to navigation along shorter paths than following a greedy shortest-path policy.
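The trade-off between obstacle risk and information gain can be made concrete with a small scoring function. The sketch below is a generic illustration, not the planner from the paper: it assumes each candidate path has a known length plus a list of predicted "blocked" probabilities for the unobserved cells it crosses. It also treats those cells as independent, which is a simplification the graphical model described above does not need to make. The weights `risk_weight` and `info_weight` and the entropy-based information gain are hypothetical choices.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a cell that is blocked with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def blocked_probability(path_probs):
    """P(at least one cell on the path is blocked), assuming independent cells."""
    free = 1.0
    for p in path_probs:
        free *= 1.0 - p
    return 1.0 - free


def path_cost(length, path_probs, risk_weight=10.0, info_weight=1.0):
    """Assumed scalar cost: length + weighted risk - weighted information gain."""
    risk = blocked_probability(path_probs)
    info_gain = sum(binary_entropy(p) for p in path_probs)
    return length + risk_weight * risk - info_weight * info_gain


def choose_path(candidates, **kwargs):
    """Return the name of the candidate (length, probs) with the lowest cost."""
    return min(candidates, key=lambda name: path_cost(*candidates[name], **kwargs))


# Usage with made-up numbers: a short path through two uncertain doors
# versus a longer path through one door that is almost surely open.
candidates = {
    "short_risky": (10.0, [0.5, 0.5]),
    "long_safe": (14.0, [0.01]),
}
best = choose_path(candidates)
```

With these made-up numbers the longer path wins (cost of about 14.02 versus 15.5). Setting `risk_weight=0` turns the planner into an optimistic one that prefers the short path, while raising `info_weight` rewards paths that observe uncertain cells.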