Long Ho

Efficiently Assemble Normalization Layers and Regularization for Federated Domain Generalization

Mar 22, 2024

Abstract:Domain shift is a formidable issue in Machine Learning that causes a model to suffer from performance degradation when tested on unseen domains. Federated Domain Generalization (FedDG) attempts to train a global model using collaborative clients in a privacy-preserving manner that can generalize well to unseen clients possibly with domain shift. However, most existing FedDG methods either cause additional privacy risks of data leakage or induce significant costs in client communication and computation, which are major concerns in the Federated Learning paradigm. To circumvent these challenges, here we introduce a novel architectural method for FedDG, namely gPerXAN, which relies on a normalization scheme working with a guiding regularizer. In particular, we carefully design Personalized eXplicitly Assembled Normalization to make client models selectively filter out domain-specific features that are biased towards local data while retaining the discriminative power of those features. Then, we incorporate a simple yet effective regularizer to guide these models in directly capturing domain-invariant representations that the global model's classifier can leverage. Extensive experimental results on two benchmark datasets, i.e., PACS and Office-Home, and a real-world medical dataset, Camelyon17, indicate that our proposed method outperforms other existing methods in addressing this particular problem.

The Impact of Extraneous Variables on the Performance of Recurrent Neural Network Models in Clinical Tasks

Apr 01, 2019

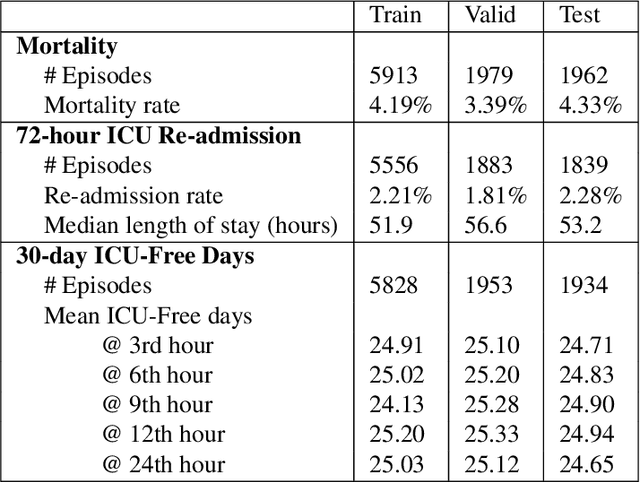

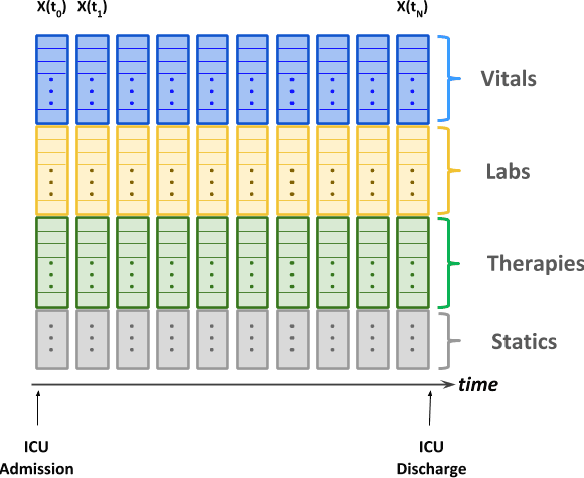

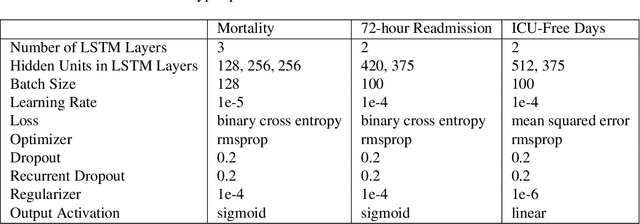

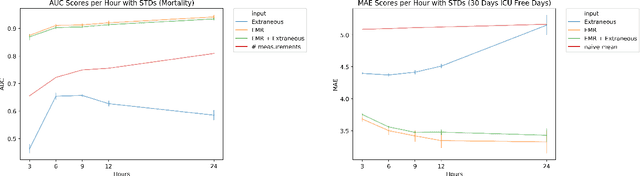

Abstract:Electronic Medical Records (EMR) are a rich source of patient information, including measurements reflecting physiologic signs and administered therapies. Identifying which variables are useful in predicting clinical outcomes can be challenging. Advanced algorithms such as deep neural networks were designed to process high-dimensional inputs containing variables in their measured form, thus bypassing separate feature selection or engineering steps. We investigated the effect of extraneous input variables on the predictive performance of Recurrent Neural Networks (RNN) by including in the input vector extraneous variables randomly drawn from theoretical and empirical distributions. RNN models using different input vectors (EMR variables; EMR and extraneous variables; extraneous variables only) were trained to predict three clinical outcomes: in-ICU mortality, 72-hour ICU re-admission, and 30-day ICU-free days. The measured degradations of the RNN's predictive performance with the addition of extraneous variables to EMR variables were negligible.
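The input construction described in this abstract can be illustrated with a minimal sketch. It is not the authors' code: the function name `add_extraneous`, the choice of a standard normal as the theoretical distribution, and the resampling of observed values as the empirical distribution are all assumptions made for illustration.

```python
import numpy as np

def add_extraneous(x, n_theoretical=2, n_empirical=2, seed=0):
    """Append extraneous columns to a (time_steps, n_features) array.

    Hypothetical helper: the abstract does not specify which distributions
    or how many extraneous variables were used.
    """
    rng = np.random.default_rng(seed)
    t, d = x.shape

    # Theoretical distribution (assumed): independent standard-normal draws.
    theoretical = rng.standard_normal((t, n_theoretical))

    # Empirical distribution (assumed): resample observed values of randomly
    # chosen real columns with replacement. This keeps each column's marginal
    # distribution but breaks any link to the actual time course.
    cols = rng.integers(0, d, size=n_empirical)
    rows = rng.integers(0, t, size=(t, n_empirical))
    empirical = x[rows, cols]

    return np.concatenate([x, theoretical, empirical], axis=1)

# Example: 4 time steps, 3 real variables, 4 extraneous variables appended.
emr = np.arange(12, dtype=float).reshape(4, 3)
augmented = add_extraneous(emr)
```

Dropping the first three columns of `augmented` would give the "extraneous variables only" input, and `emr` itself corresponds to the "EMR variables" input.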

The Dependence of Machine Learning on Electronic Medical Record Quality

Mar 23, 2017

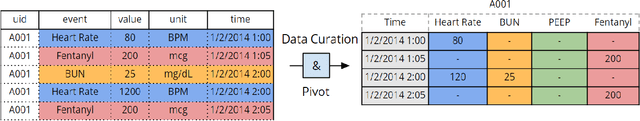

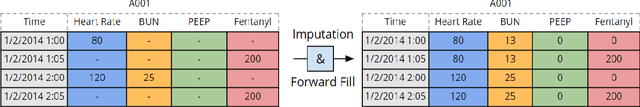

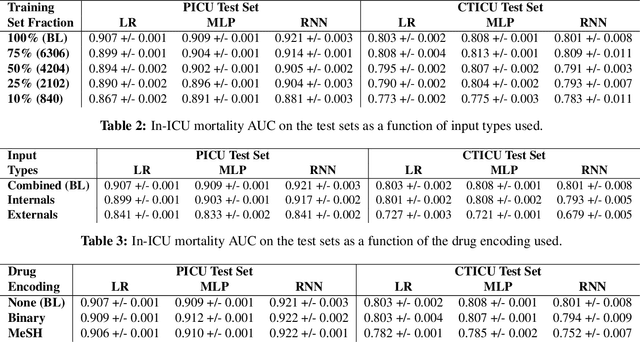

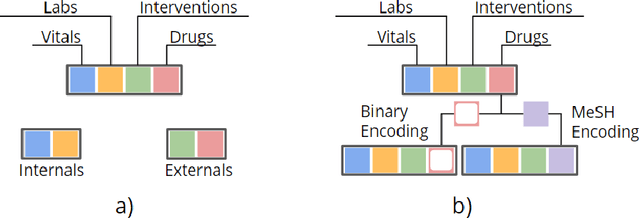

Abstract:There is growing interest in applying machine learning methods to Electronic Medical Records (EMR). Across different institutions, however, EMR quality can vary widely. This work investigated the impact of this disparity on the performance of three machine learning algorithms: logistic regression, multilayer perceptron, and recurrent neural network. The EMR disparity was emulated using different permutations of the EMR collected at the Children's Hospital Los Angeles (CHLA) Pediatric Intensive Care Unit (PICU) and Cardiothoracic Intensive Care Unit (CTICU). The algorithms were trained using patients from the PICU to predict in-ICU mortality for patients in a held-out set of PICU and CTICU patients. The disparate patient populations of the PICU and CTICU provide an estimate of generalization errors across different ICUs. We quantified and evaluated the generalization of these algorithms across varying EMR size, input types, and data fidelity.

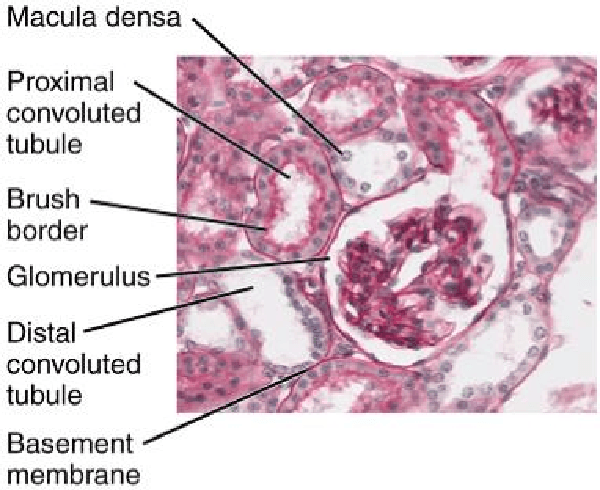

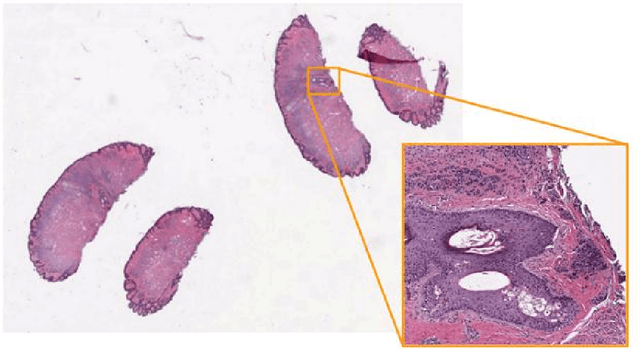

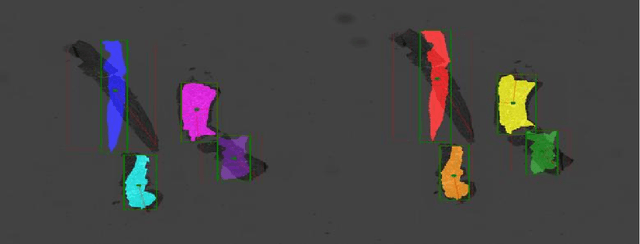

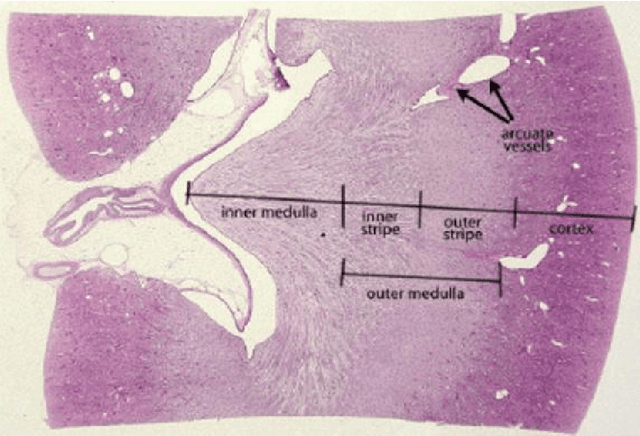

Prediction of Kidney Function from Biopsy Images Using Convolutional Neural Networks

Feb 06, 2017

Abstract:A Convolutional Neural Network was used to predict kidney function in patients with chronic kidney disease from high-resolution digital pathology scans of their kidney biopsies. Kidney biopsies were taken from participants of the NEPTUNE study, a longitudinal cohort study whose goal is to set up infrastructure for observing the evolution of 3 forms of idiopathic nephrotic syndrome, including developing predictors for progression of kidney disease. The knowledge of future kidney function is desirable as it can identify high-risk patients and influence treatment decisions, reducing the likelihood of irreversible kidney decline.
