Lisheng Xu

Heterogeneous Complementary Distillation

Nov 14, 2025

Abstract: Knowledge distillation (KD) transfers the dark knowledge from a complex teacher to a compact student. However, heterogeneous architecture distillation, such as Vision Transformer (ViT) to ResNet18, faces challenges due to differences in spatial feature representations. Traditional KD methods are mostly designed for homogeneous architectures and hence struggle to address this disparity. Although heterogeneous KD approaches have recently been developed to solve these issues, they often incur high computational costs and complex designs, or rely too heavily on logit alignment, which limits their ability to leverage complementary features. To overcome these limitations, we propose Heterogeneous Complementary Distillation (HCD), a simple yet effective framework that integrates complementary teacher and student features to align representations in shared logits. These logits are decomposed and constrained to facilitate diverse knowledge transfer to the student. Specifically, HCD processes the student's intermediate features through a convolutional projector and adaptive pooling, concatenates them with the teacher's features from the penultimate layer, and then maps them via the Complementary Feature Mapper (CFM) module, comprising a fully connected layer, to produce shared logits. We further introduce Sub-logit Decoupled Distillation (SDD), which partitions the shared logits into n sub-logits that are fused with the teacher's logits to rectify classification. To ensure sub-logit diversity and reduce redundant knowledge transfer, we propose an Orthogonality Loss (OL). By preserving student-specific strengths and leveraging teacher knowledge, HCD enhances robustness and generalization in students. Extensive experiments on the CIFAR-100, fine-grained (e.g., CUB200), and ImageNet-1K datasets demonstrate that HCD outperforms state-of-the-art KD methods, establishing it as an effective solution for heterogeneous KD.
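The sub-logit partitioning and orthogonality constraint described in this abstract can be illustrated with a small sketch. The abstract does not give the exact formulas, so everything here is an assumption: the shared logits are treated as a flat vector of length n × C, fusion with the teacher is taken to be element-wise addition, and the orthogonality penalty is the sum of squared cosine similarities between distinct sub-logits. The function names are hypothetical and are not taken from the paper.

```python
import math


def split_sub_logits(shared, n):
    """Split a flat list of shared logits into n equal-length sub-logit vectors.

    Assumption: the shared logits have length n * num_classes.
    """
    if len(shared) % n != 0:
        raise ValueError("length of shared logits must be divisible by n")
    size = len(shared) // n
    return [shared[i * size:(i + 1) * size] for i in range(n)]


def fuse_with_teacher(sub_logits, teacher_logits):
    """Assumed fusion rule: add the teacher logits to every sub-logit vector."""
    return [[s + t for s, t in zip(sub, teacher_logits)] for sub in sub_logits]


def orthogonality_penalty(sub_logits, eps=1e-12):
    """Sum of squared cosine similarities over all pairs of distinct sub-logits.

    The penalty is 0 when the sub-logits are mutually orthogonal and grows
    as they become more aligned (one plausible form of an orthogonality loss).
    """
    normed = []
    for v in sub_logits:
        norm = math.sqrt(sum(x * x for x in v)) + eps
        normed.append([x / norm for x in v])
    penalty = 0.0
    for i in range(len(normed)):
        for j in range(i + 1, len(normed)):
            cos = sum(a * b for a, b in zip(normed[i], normed[j]))
            penalty += cos * cos
    return penalty
```

Under these assumptions, orthogonal sub-logits incur no penalty while parallel ones incur the maximum per-pair penalty of 1, which is the sense in which such a term would discourage redundant sub-logits.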

Micro-Expression Recognition by Motion Feature Extraction based on Pre-training

Jul 10, 2024

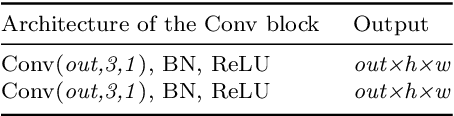

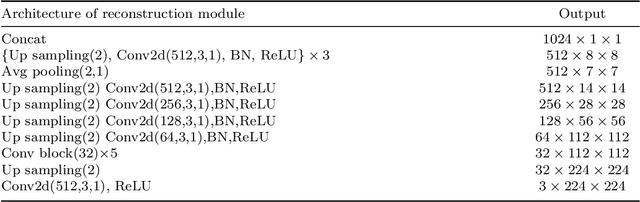

Abstract: Micro-expressions (MEs) are spontaneous, unconscious facial expressions that have promising applications in fields such as psychotherapy and national security. Thus, micro-expression recognition (MER) has attracted increasing attention from researchers. Although various MER methods have emerged, especially with the development of deep learning techniques, the task still faces several challenges, e.g., subtle motion and limited training data. To address these problems, we propose a novel motion extraction strategy (MoExt) for the MER task and use additional macro-expression data in the pre-training process. We primarily pretrain the feature separator and motion extractor using the contrastive loss, thus enabling them to extract representative motion features. In MoExt, shape features and texture features are first extracted separately from the onset and apex frames, and then motion features related to MEs are extracted based on the shape features of both frames. To enable the model to separate features more effectively, we use the extracted motion features and the texture features from the onset frame to reconstruct the apex frame. Through pre-training, the module learns to extract inter-frame motion features of facial expressions while excluding irrelevant information. The feature separator and motion extractor are ultimately integrated into the MER network, which is then fine-tuned using the target ME data. The effectiveness of the proposed method is validated on three commonly used datasets, i.e., CASME II, SMIC, and SAMM, and on the CAS(ME)3 dataset. The results show that our method performs favorably against state-of-the-art methods.

LCAUnet: A skin lesion segmentation network with enhanced edge and body fusion

May 01, 2023

Abstract: Accurate segmentation of skin lesions in dermatoscopic images is crucial for the early diagnosis of skin cancer and for improving the survival rate of patients. However, it remains a challenging task due to the irregularity of lesion areas, the fuzziness of boundaries, and other complex interference factors. In this paper, a novel LCAUnet is proposed to improve complementary representation by fusing edge and body features, which often receive little attention in traditional methods. First, two separate branches are set up for edge and body segmentation, with CNN-based and Transformer-based architectures respectively. Then, the LCAF module is used in the encoder stage to fuse edge and body feature maps of the same level through a local cross-attention operation. Furthermore, the PGMF module is embedded for feature integration with prior-guided multi-scale adaptation. Comprehensive experiments on the publicly available datasets ISIC 2017, ISIC 2018, and PH2 demonstrate that LCAUnet outperforms most state-of-the-art methods. The ablation studies also verify the effectiveness of the proposed fusion techniques.
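To make the idea of a local cross-attention operation concrete, the following is a minimal one-dimensional sketch, not the LCAF module itself. It assumes that features from one branch act as queries and features from the other branch act as both keys and values, and that each position attends only to a small neighbourhood. The abstract does not specify which branch plays which role, the window shape, or any learned projections, so the function name, the `radius` parameter, and the absence of projection weights are all illustrative assumptions.

```python
import math


def local_cross_attention(queries, keys_values, radius=1):
    """Scaled dot-product cross-attention restricted to a local window.

    queries, keys_values: lists of equal length holding feature vectors of
    the same dimension (e.g. one per spatial position in each branch).
    For position i, the query attends over
    keys_values[i - radius : i + radius + 1], clipped at the borders.
    """
    dim = len(queries[0])
    scale = 1.0 / math.sqrt(dim)
    fused = []
    for i, q in enumerate(queries):
        lo = max(0, i - radius)
        hi = min(len(keys_values), i + radius + 1)
        window = keys_values[lo:hi]
        # Attention scores between the query and each key in the window.
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in window]
        # Numerically stable softmax over the window.
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        # Weighted average of the window's value vectors.
        fused.append([
            sum(w * k[d] for w, k in zip(weights, window)) / total
            for d in range(dim)
        ])
    return fused
```

Restricting attention to a window keeps the cost linear in the number of positions for a fixed radius, which is one common motivation for local rather than global attention.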
