Lingyun Jiang

AdvJND: Generating Adversarial Examples with Just Noticeable Difference

Feb 01, 2020

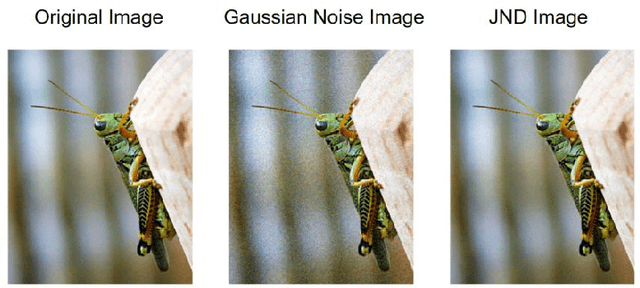

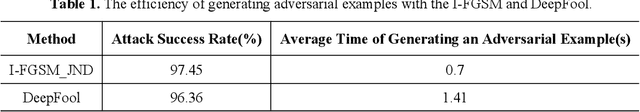

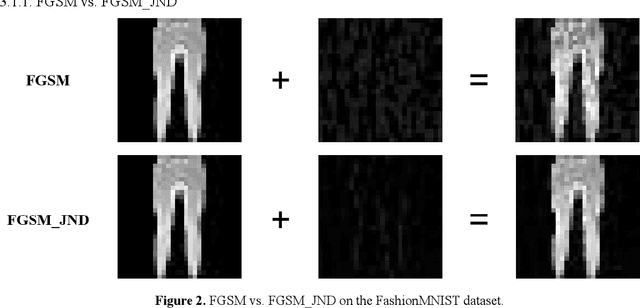

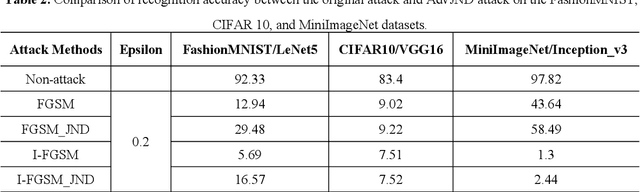

Abstract: Compared with traditional machine learning models, deep neural networks perform better, especially in image classification tasks. However, they are vulnerable to adversarial examples: adding small perturbations to an input causes a well-performing model to misclassify it, even though human eyes perceive no change in category. Generating adversarial examples involves two requirements: attack success rate and image fidelity. Generally, perturbations are increased to ensure a high attack success rate; however, the resulting adversarial examples are poorly concealed. To alleviate the tradeoff between attack success rate and image fidelity, we propose a method named AdvJND, which adds visual model coefficients, namely just noticeable difference (JND) coefficients, to the constraint of a distortion function when generating adversarial examples. In effect, the subjective visual perception of the human eye is added as prior information that determines the distribution of perturbations, improving the image quality of adversarial examples. We tested our method on the FashionMNIST, CIFAR10, and MiniImageNet datasets. Adversarial examples generated by our AdvJND algorithm yield gradient distributions that are similar to those of the original inputs. Hence, the crafted noise can be hidden in the original inputs, significantly improving attack concealment.
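The idea of letting a perceptual map decide where perturbations go can be illustrated with a minimal sketch. It is not the AdvJND algorithm: the abstract does not specify the JND model or the distortion function, so the sketch assumes a hypothetical stand-in (`local_contrast`, the normalised mean absolute difference to the 4-neighbours) and uses it to scale a sign-gradient step. All function names, the `eps` parameter, and the assumption that pixel values lie in [0, 1] are illustrative.

```python
def local_contrast(img):
    """Hypothetical stand-in for a JND coefficient map (not the paper's model).

    Returns, per pixel, the mean absolute difference to its 4-neighbours,
    normalised so the largest value is 1. Flat regions get 0.
    """
    h, w = len(img), len(img[0])
    raw = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nbrs = [
                img[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < h and 0 <= nx < w
            ]
            if nbrs:  # a 1x1 image has no neighbours
                raw[y][x] = sum(abs(img[y][x] - v) for v in nbrs) / len(nbrs)
    peak = max(max(row) for row in raw)
    if peak == 0:
        return raw  # completely flat image: every coefficient stays 0
    return [[v / peak for v in row] for row in raw]


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def weighted_sign_perturbation(img, loss_grad, eps):
    """Sign-gradient step whose per-pixel size is scaled by the contrast map.

    img and loss_grad are equally sized 2D lists; img values are assumed
    to lie in [0, 1], and the result is clipped back into that range.
    """
    coeff = local_contrast(img)
    return [
        [
            min(1.0, max(0.0, px + eps * c * sign(g)))
            for px, c, g in zip(img_row, coeff_row, grad_row)
        ]
        for img_row, coeff_row, grad_row in zip(img, coeff, loss_grad)
    ]
```

Under these assumptions, pixels in flat regions are left untouched, while pixels next to edges or texture receive up to the full `eps` step, so the noise concentrates where the image already varies.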

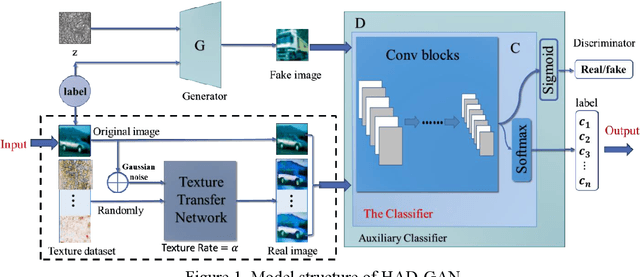

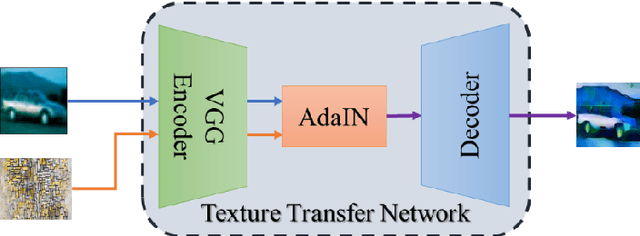

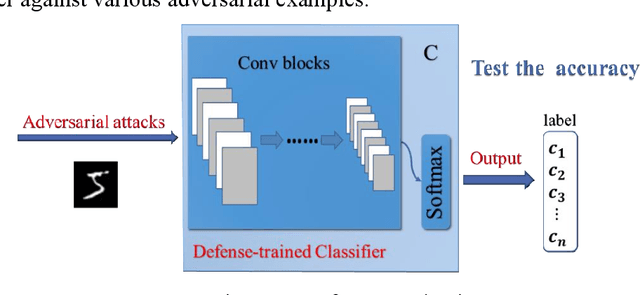

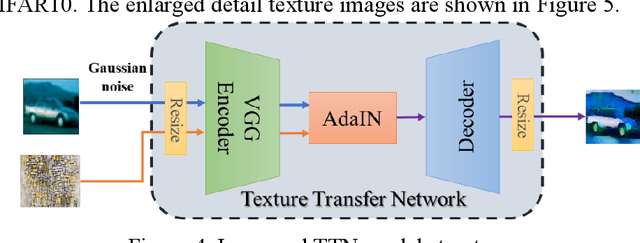

HAD-GAN: A Human-perception Auxiliary Defense GAN to Defend Adversarial Examples

Sep 25, 2019

Abstract: Adversarial examples reveal the vulnerability and unexplained nature of neural networks. Studying defenses against adversarial examples is of considerable practical importance. Most adversarial examples that cause networks to misclassify are undetectable by humans. In this paper, we propose a defense model that trains the classifier into a human-perception classification model with shape preference. The proposed model, comprising a texture transfer network (TTN) and an auxiliary defense generative adversarial network (GAN), is called Human-perception Auxiliary Defense GAN (HAD-GAN). The TTN is used to extend the texture samples of a clean image and helps classifiers focus on its shape. The GAN is utilized to form a training framework for the model and generate the necessary images. A series of experiments conducted on MNIST, Fashion-MNIST and CIFAR10 show that the proposed model outperforms the state-of-the-art defense methods in network robustness. The model also demonstrates a significant improvement in defense capability against adversarial examples.

Cycle-Consistent Adversarial GAN: the integration of adversarial attack and defense

Apr 12, 2019

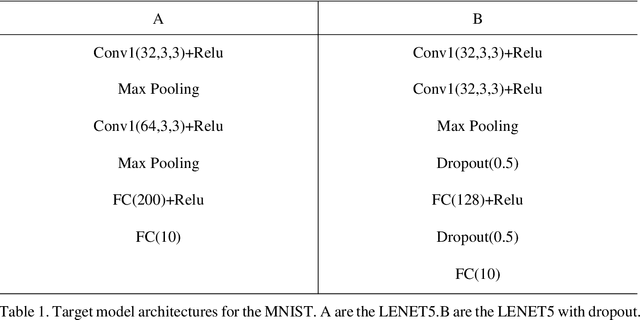

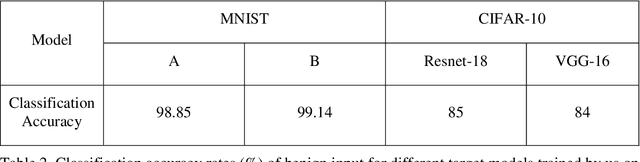

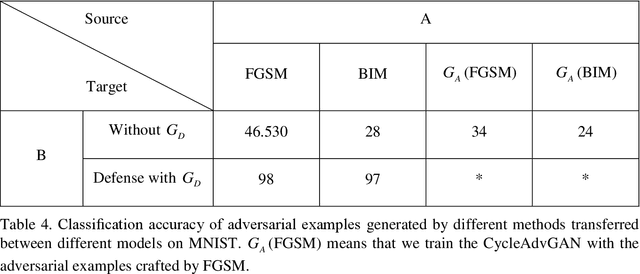

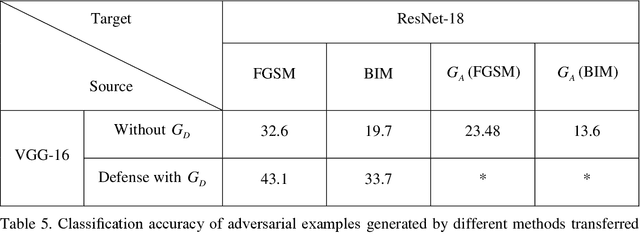

Abstract: In deep-learning image classification, adversarial examples, inputs to which small-magnitude perturbations have been deliberately added, may mislead deep neural networks (DNNs) into incorrect results, which means DNNs are vulnerable to them. Different attack and defense strategies have been proposed to better study the mechanism of deep learning. However, existing work addresses only one aspect, either attack or defense, without considering that attacks and defenses should be interdependent and mutually reinforcing, like the relationship between spears and shields. In this paper, we propose Cycle-Consistent Adversarial GAN (CycleAdvGAN) to generate adversarial examples; it can learn and approximate the distributions of original instances and adversarial examples. Once the CycleAdvGAN generators are trained, they can efficiently generate adversarial perturbations for any instance, causing DNNs to predict incorrectly, and recover adversarial examples to clean instances, causing DNNs to predict correctly. We apply CycleAdvGAN under semi-white-box and black-box settings on two public datasets, MNIST and CIFAR10. Through extensive experiments, we show that our method achieves state-of-the-art adversarial attack performance and also efficiently improves defense ability, realizing the integration of adversarial attack and defense. In addition, it improves the attack effect even when trained only on an adversarial dataset generated by any kind of adversarial attack.
