Ling-Wei Huang

Nonlinear Kernel Support Vector Machine with 0-1 Soft Margin Loss

Mar 01, 2022

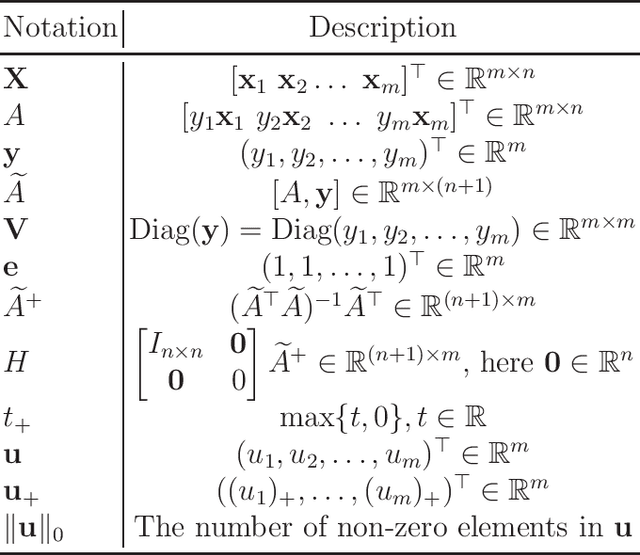

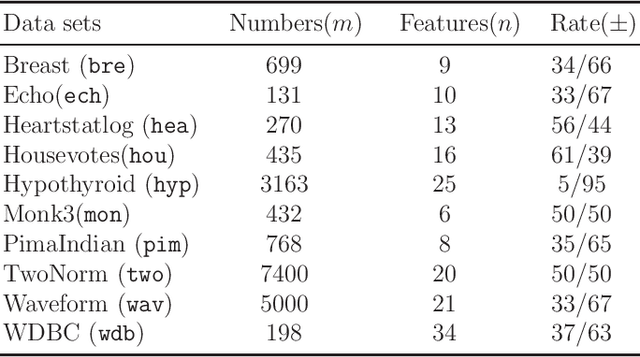

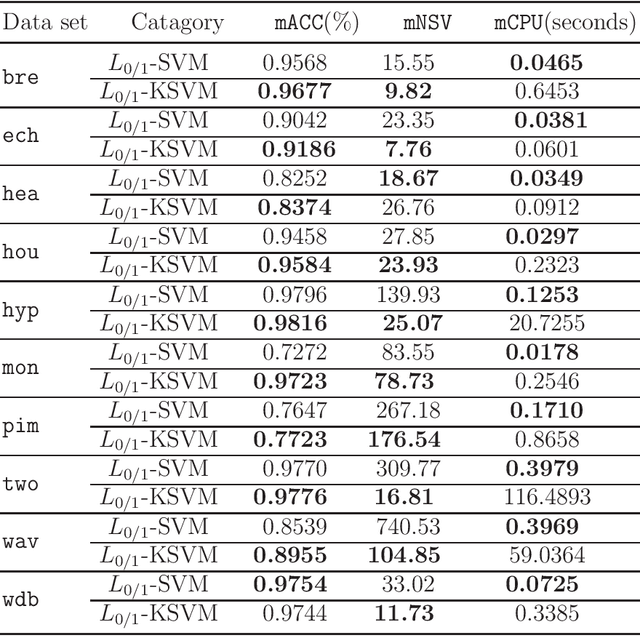

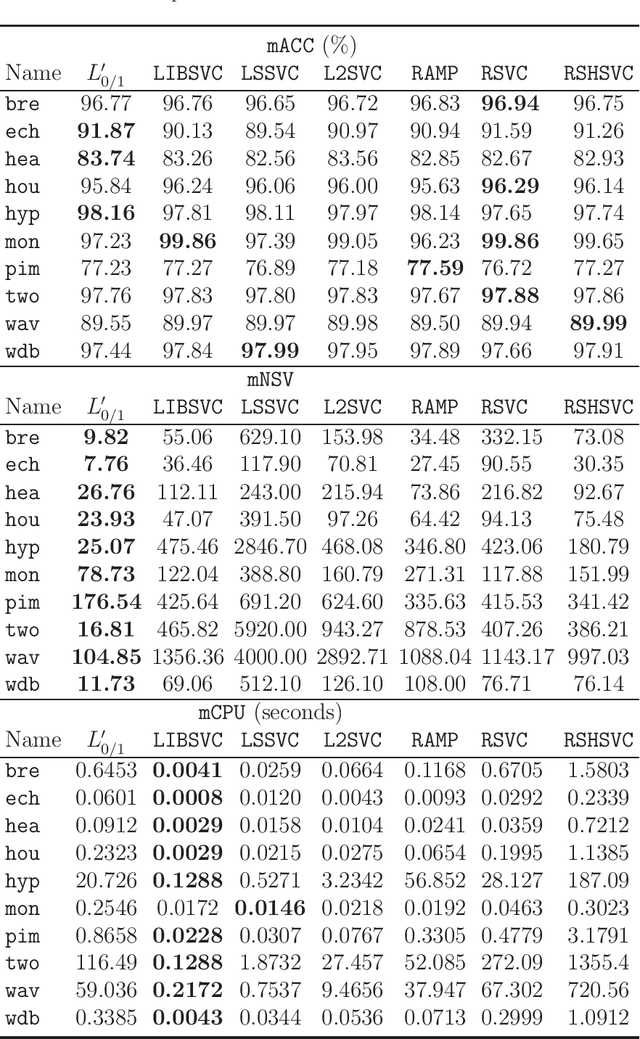

Abstract: Recent advances in the linear support vector machine with the 0-1 soft margin loss ($L_{0/1}$-SVM) show that the 0-1 loss problem can be solved directly. However, its theoretical and algorithmic requirements prevent the linear solving framework from being extended directly to a nonlinear kernel form; one major obstacle is the absence of an explicit expression for the Lagrangian dual function of $L_{0/1}$-SVM. In this paper, by applying the nonparametric representation theorem, we propose a nonlinear model for the support vector machine with 0-1 soft margin loss, called $L_{0/1}$-KSVM, which incorporates the kernel technique and, more importantly, inherits the systematic approach used to solve the linear problem. Its optimality condition is explored theoretically, and an alternating direction method of multipliers (ADMM) algorithm with working set selection is introduced to obtain its numerical solution. Moreover, we present, for the first time, a closed-form definition of the support vector (SV) of $L_{0/1}$-KSVM. Theoretically, we prove that all SVs of $L_{0/1}$-KSVM lie only on the parallel decision surfaces. The experiments also show that $L_{0/1}$-KSVM has far fewer SVs, while maintaining decent prediction accuracy, compared with its linear counterpart $L_{0/1}$-SVM and six other nonlinear benchmark SVM classifiers.
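To make the 0-1 soft margin loss in the abstract above concrete, here is a minimal sketch that evaluates it for a kernel decision function. It is an illustration and not the paper's algorithm. It assumes the representer-style form $f(x) = \sum_i \alpha_i K(x_i, x) + b$, an RBF kernel, and the usual definition of the loss as the number of samples with $1 - y_i f(x_i) > 0$. The names `rbf_kernel`, `zero_one_soft_margin_loss`, `alpha`, `b` and `gamma` are hypothetical, and the ADMM solver with working set selection is not shown.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    sq_dist = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))

def zero_one_soft_margin_loss(alpha, b, X, y, gamma=1.0):
    """Count the training samples that violate the margin, i.e. 1 - y_i * f(x_i) > 0.

    Assumes f(x) = sum_i alpha_i * K(x_i, x) + b (an assumption of this sketch).
    """
    K = rbf_kernel(X, X, gamma)
    f = K @ alpha + b
    return int(np.count_nonzero(1.0 - y * f > 0))

# Toy data: two points per class on a line.
X = np.array([[0.0], [1.0], [3.0], [4.0]])
y = np.array([-1.0, -1.0, 1.0, 1.0])

# With alpha = 0 the decision function is the constant b.
loss_at_zero = zero_one_soft_margin_loss(np.zeros(4), 0.0, X, y)
loss_with_bias = zero_one_soft_margin_loss(np.zeros(4), 2.0, X, y)
print(loss_at_zero, loss_with_bias)
```

Because the loss only counts margin violations, it is piecewise constant in `alpha` and `b`, which is why gradient-based solvers do not apply directly and a dedicated method is needed.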

Single Versus Union: Non-parallel Support Vector Machine Frameworks

Oct 22, 2019

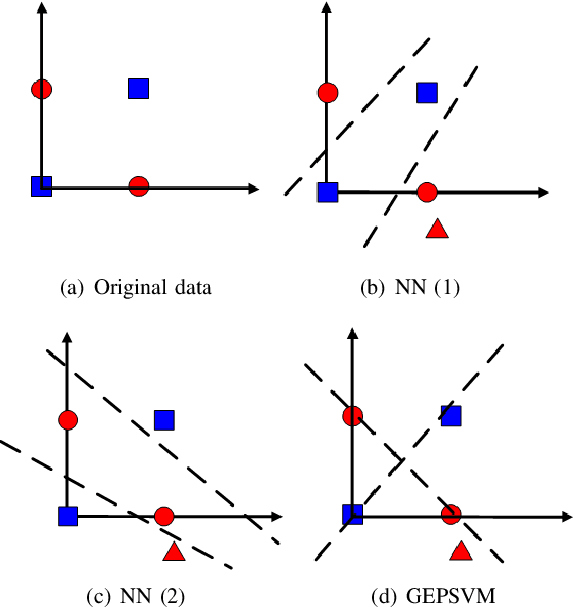

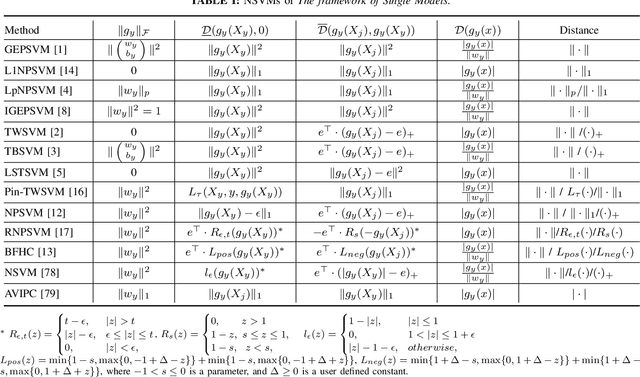

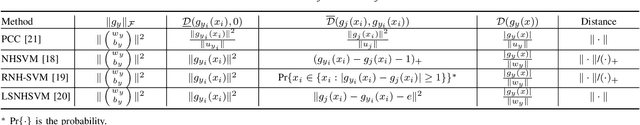

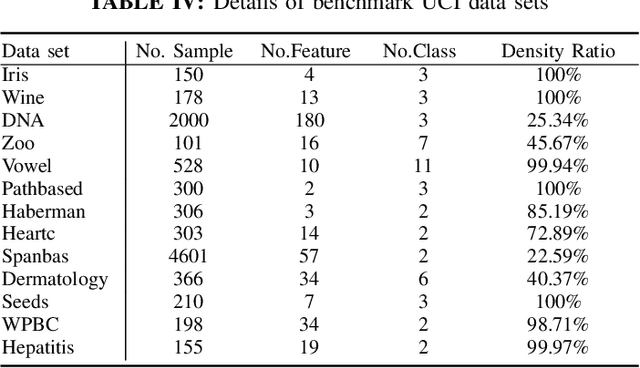

Abstract: Considering the classification problem, we group nonparallel support vector machines, which use nonparallel hyperplanes, into two types of frameworks. The first type constructs the hyperplanes separately. It solves a series of small optimization problems to obtain a series of hyperplanes, but makes it hard to measure the loss of each sample. The other type constructs all the hyperplanes simultaneously, solving one large optimization problem in which the loss of each sample is determined. We give the characteristics of each framework and compare them carefully. In addition, based on the second framework, we construct a max-min distance-based nonparallel support vector machine for multiclass classification problems, called NSVM. It constructs hyperplanes with a large distance margin by solving an optimization problem. Experimental results on benchmark data sets and human face databases show the advantages of our NSVM.
