Lihua Hu

From WSI-level to Patch-level: Structure Prior Guided Binuclear Cell Fine-grained Detection

Aug 26, 2022

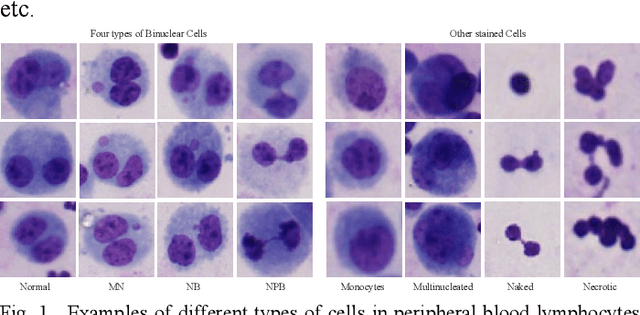

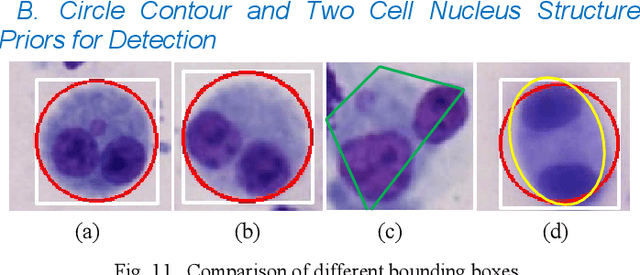

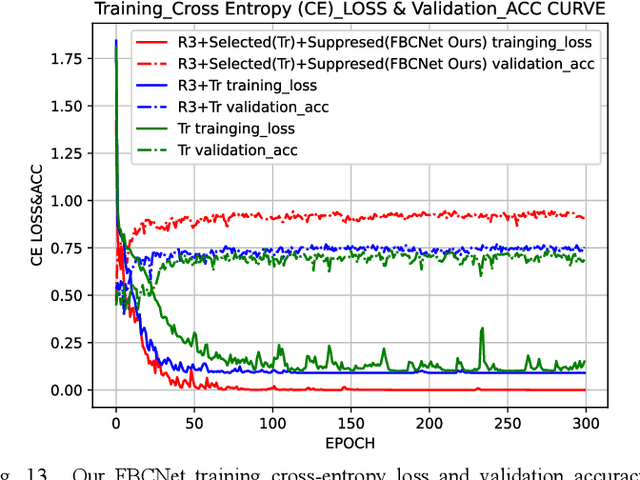

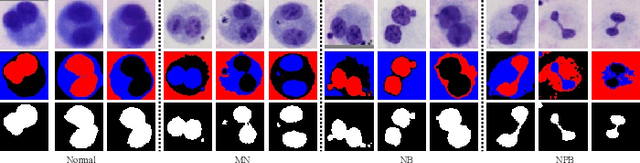

Abstract: Accurate and rapid binuclear cell (BC) detection plays a significant role in predicting the risk of leukemia and other malignant tumors. However, manual microscopy counting is time-consuming and lacks objectivity. Moreover, owing to limited staining quality and the diversity of morphological features in BC microscopy whole slide images (WSIs), traditional image processing approaches are ineffective. To overcome this challenge, we propose a two-stage deep learning detection method inspired by the structure prior of BCs, which cascades coarse BC detection at the WSI level with fine-grained classification at the patch level. The coarse detection network is a multi-task detection framework that uses circular bounding boxes for cell detection and central key points for nucleus detection. Compared with the usual rectangular boxes, the circle representation reduces the degrees of freedom, mitigates the effect of surrounding impurities, and is rotation invariant in WSIs. Detecting key points in the nucleus can assist network perception and can be used for unsupervised color layer segmentation in the later fine-grained classification. The fine classification network consists of a background region suppression module based on color layer mask supervision and a key region selection module based on a transformer, chosen for its global modeling capability. Additionally, an unsupervised and unpaired cytoplasm generator network is proposed for the first time to expand the long-tailed dataset. Finally, experiments are performed on BC multicenter datasets. The proposed BC fine detection method outperforms other benchmarks on almost all evaluation criteria, providing clarification and support for tasks such as cancer screening.
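
To illustrate why a circular box has fewer degrees of freedom than a rectangular one, the sketch below computes the intersection over union (IoU) of two circles, each described by only three numbers (center x, center y, radius). This is not taken from the paper: the function name `circle_iou` and the `(cx, cy, r)` tuple layout are assumptions made for illustration, and the paper's actual matching criterion may differ.

```python
import math

def circle_iou(c1, c2):
    """IoU of two circles, each given as (cx, cy, r). Hypothetical helper."""
    x1, y1, r1 = c1
    x2, y2, r2 = c2
    d = math.hypot(x2 - x1, y2 - y1)      # distance between centers
    a1 = math.pi * r1 ** 2
    a2 = math.pi * r2 ** 2
    if d >= r1 + r2:
        inter = 0.0                        # disjoint (or merely touching)
    elif d <= abs(r1 - r2):
        inter = min(a1, a2)                # one circle lies inside the other
    else:
        # Partial overlap: sum of two circular segments (lens area).
        alpha = math.acos((d * d + r1 * r1 - r2 * r2) / (2 * d * r1))
        beta = math.acos((d * d + r2 * r2 - r1 * r1) / (2 * d * r2))
        inter = (r1 * r1 * (alpha - math.sin(2 * alpha) / 2)
                 + r2 * r2 * (beta - math.sin(2 * beta) / 2))
    union = a1 + a2 - inter
    return inter / union if union > 0 else 0.0

# Two unit circles whose centers are one radius apart.
print(circle_iou((0.0, 0.0, 1.0), (1.0, 0.0, 1.0)))
```

Because the result depends only on the two radii and the distance between centers, rotating the slide about any point leaves the IoU unchanged, which is one way to read the rotation invariance mentioned in the abstract.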

An Iterative Co-Training Transductive Framework for Zero Shot Learning

Mar 30, 2022

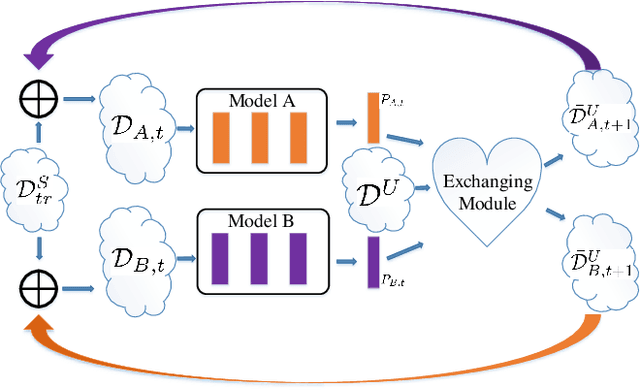

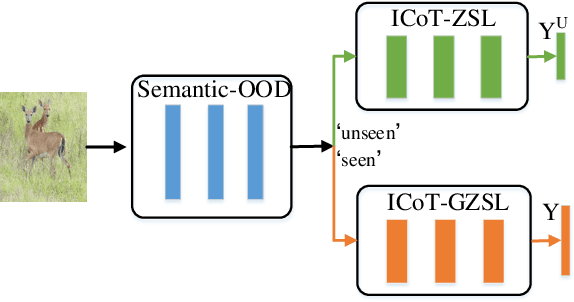

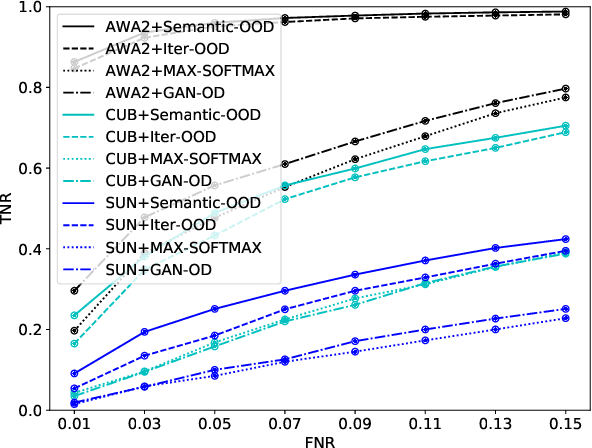

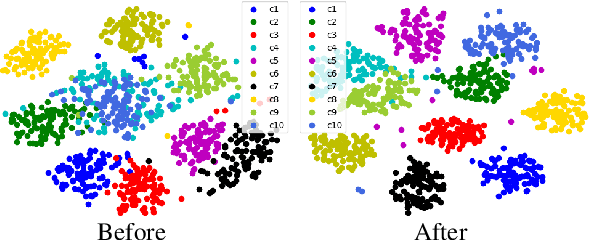

Abstract: In the zero-shot learning (ZSL) community, it is generally recognized that transductive learning performs better than inductive learning, as the unseen-class samples are also used in the training stage. How to generate pseudo labels for unseen-class samples and how to use such usually noisy pseudo labels are two critical issues in transductive learning. In this work, we introduce an iterative co-training framework that contains two different base ZSL models and an exchanging module. At each iteration, the two ZSL models are co-trained to separately predict pseudo labels for the unseen-class samples, the exchanging module exchanges the predicted pseudo labels, and the exchanged pseudo-labeled samples are then added to the training sets for the next iteration. In this way, our framework can gradually boost ZSL performance by fully exploiting the potential complementarity of the two models' classification capabilities. In addition, our co-training framework is also applied to generalized ZSL (GZSL), in which a semantic-guided out-of-distribution (OOD) detector is proposed to pick out the most likely unseen-class samples before class-level classification, alleviating the bias problem in GZSL. Extensive experiments on three benchmarks show that our proposed methods significantly outperform about 31 state-of-the-art methods.

* 15 pages, 7 figures
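
The exchange step described in the abstract can be sketched on toy data. Everything below is an assumption made for illustration, not the paper's implementation: the two "base models" are plain nearest-prototype classifiers over two synthetic feature views, confidence is the margin between the two closest prototypes, and the names `predict`, `refit`, and `co_train` are hypothetical.

```python
import numpy as np

def predict(protos, X):
    """Nearest-prototype labels plus a margin-based confidence per sample."""
    d = np.linalg.norm(X[:, None, :] - protos[None, :, :], axis=2)
    ordered = np.sort(d, axis=1)
    return d.argmin(axis=1), ordered[:, 1] - ordered[:, 0]

def refit(init_protos, X, idx, labels):
    """Re-estimate prototypes from the initial ones plus pseudo-labeled samples."""
    protos = init_protos.copy()
    for k in range(len(protos)):
        members = X[idx[labels == k]]
        if len(members):
            protos[k] = np.vstack([init_protos[k][None], members]).mean(axis=0)
    return protos

def co_train(init_a, init_b, Xa, Xb, n_iter=3, keep=0.5):
    """Each model is refit on the confident pseudo labels of the *other* model."""
    pa, pb = init_a.copy(), init_b.copy()
    n_keep = max(1, int(keep * len(Xa)))
    for _ in range(n_iter):
        la, ca = predict(pa, Xa)
        lb, cb = predict(pb, Xb)
        top_a = np.argsort(-ca)[:n_keep]   # model A's most confident samples
        top_b = np.argsort(-cb)[:n_keep]   # model B's most confident samples
        pa = refit(init_a, Xa, top_b, lb[top_b])   # A learns from B's labels
        pb = refit(init_b, Xb, top_a, la[top_a])   # B learns from A's labels
    return predict(pa, Xa)[0], predict(pb, Xb)[0]

# Toy data: two unseen classes, observed through two noisy feature views.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 20)
centers = np.array([[0.0, 0.0], [5.0, 5.0]])
Xa = centers[y] + 0.3 * rng.standard_normal((40, 2))
Xb = centers[y] + 0.3 * rng.standard_normal((40, 2))
init = np.array([[1.0, 1.0], [4.0, 4.0]])  # rough initial prototypes (assumed)
la, lb = co_train(init, init, Xa, Xb)
print((la == y).mean(), (lb == y).mean())
```

Keeping only the most confident half of the pseudo labels (`keep=0.5`) is one simple way to limit the noise passed between models; the selection rule actually used in the paper is not stated in the abstract.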

HardBoost: Boosting Zero-Shot Learning with Hard Classes

Jan 14, 2022

Abstract: This work is a systematic analysis of the so-called hard class problem in zero-shot learning (ZSL), that is, some unseen classes affect ZSL performance disproportionately more than others, and of how to remedy the problem by detecting and exploiting hard classes. First, we report our empirical finding that the hard class problem is a ubiquitous phenomenon that persists regardless of the specific ZSL method used. Then, we find that high semantic affinity among unseen classes is a plausible underlying cause of hardness, and we design two metrics to detect hard classes. Finally, two frameworks are proposed to remedy the problem by detecting and exploiting hard classes, one under the inductive setting and the other under the transductive setting. The proposed frameworks can accommodate most existing ZSL methods and further boost their performance significantly with little effort. Extensive experiments on three popular benchmarks demonstrate the benefits of identifying and exploiting the hard classes in ZSL.
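
The abstract does not define its two hardness metrics, so the sketch below is only a stand-in showing what a semantic-affinity score could look like: for each unseen class, the highest cosine similarity between its semantic vector and that of any other unseen class. The names `affinity_scores` and `hard_classes`, the threshold value, and the toy vectors are all assumptions, and the vectors are assumed to be nonzero.

```python
import numpy as np

def affinity_scores(S):
    """Max cosine similarity of each class vector to any *other* class vector."""
    S = np.asarray(S, dtype=float)
    unit = S / np.linalg.norm(S, axis=1, keepdims=True)
    sim = unit @ unit.T
    np.fill_diagonal(sim, -np.inf)   # ignore self-similarity
    return sim.max(axis=1)

def hard_classes(S, threshold):
    """Indices of classes whose affinity score reaches the (assumed) threshold."""
    return np.flatnonzero(affinity_scores(S) >= threshold)

# Toy semantic vectors: classes 0 and 1 are near-duplicates, class 2 is distinct.
S = [[1.0, 0.0, 0.0],
     [0.9, 0.1, 0.0],
     [0.0, 0.0, 1.0]]
scores = affinity_scores(S)
print(scores)
print(hard_classes(S, threshold=0.9))
```

Under this stand-in score, classes 0 and 1 are flagged because each has a close semantic neighbor, while class 2 is not.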
