An Iterative Co-Training Transductive Framework for Zero Shot Learning

Paper and Code

Mar 30, 2022

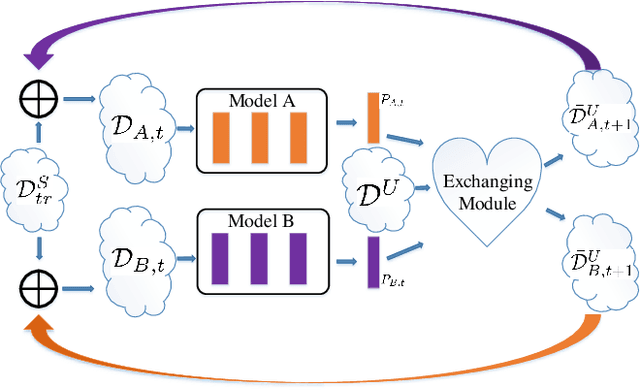

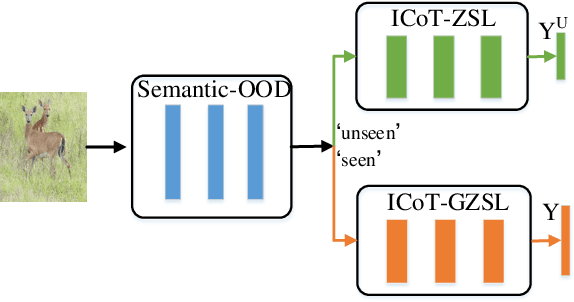

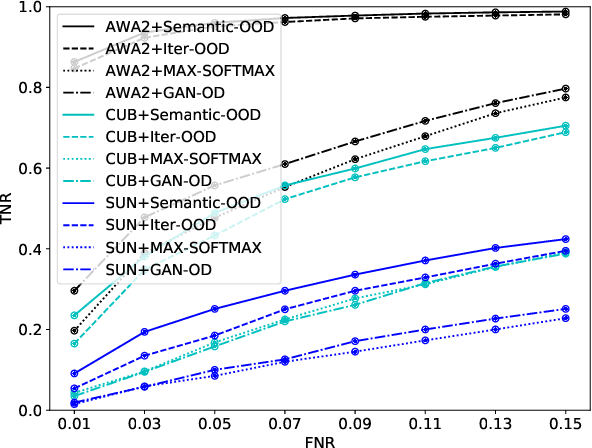

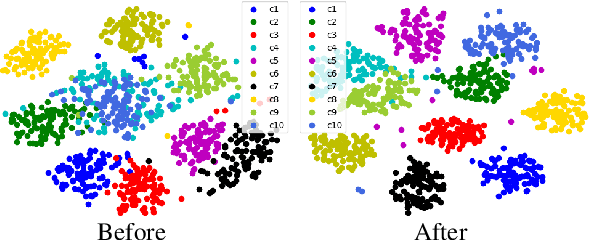

In zero-shot learning (ZSL) community, it is generally recognized that transductive learning performs better than inductive one as the unseen-class samples are also used in its training stage. How to generate pseudo labels for unseen-class samples and how to use such usually noisy pseudo labels are two critical issues in transductive learning. In this work, we introduce an iterative co-training framework which contains two different base ZSL models and an exchanging module. At each iteration, the two different ZSL models are co-trained to separately predict pseudo labels for the unseen-class samples, and the exchanging module exchanges the predicted pseudo labels, then the exchanged pseudo-labeled samples are added into the training sets for the next iteration. By such, our framework can gradually boost the ZSL performance by fully exploiting the potential complementarity of the two models' classification capabilities. In addition, our co-training framework is also applied to the generalized ZSL (GZSL), in which a semantic-guided OOD detector is proposed to pick out the most likely unseen-class samples before class-level classification to alleviate the bias problem in GZSL. Extensive experiments on three benchmarks show that our proposed methods could significantly outperform about $31$ state-of-the-art ones.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge