Lev Mukhanov

Towards LLM-based optimization compilers. Can LLMs learn how to apply a single peephole optimization? Reasoning is all LLMs need!

Dec 11, 2024

Abstract: Large Language Models (LLMs) have demonstrated great potential in various language processing tasks, and recent studies have explored their application in compiler optimizations. However, these studies all focus on conventional open-source LLMs, such as Llama2, which lack enhanced reasoning mechanisms. In this study, we investigate the errors produced by a fine-tuned 7B-parameter Llama2 model as it attempts to learn and apply a simple peephole optimization for AArch64 assembly code. We analyze the errors produced by the LLM and compare it with state-of-the-art OpenAI models that implement advanced reasoning logic, including GPT-4o and GPT-o1 (preview). We demonstrate that OpenAI GPT-o1, despite not being fine-tuned, outperforms both the fine-tuned Llama2 and GPT-4o. Our findings indicate that this advantage is largely due to the chain-of-thought reasoning implemented in GPT-o1. We hope our work will inspire further research on using LLMs with enhanced reasoning mechanisms and chain-of-thought for code generation and optimization.

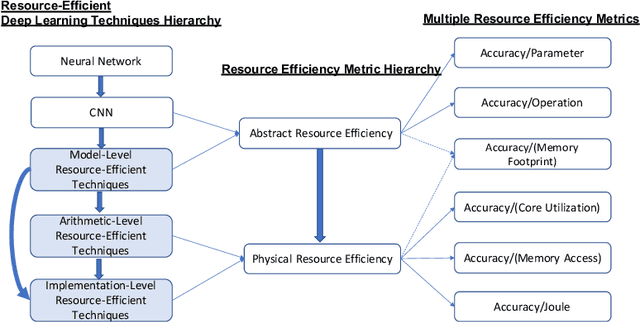

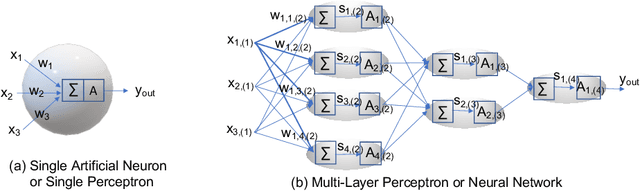

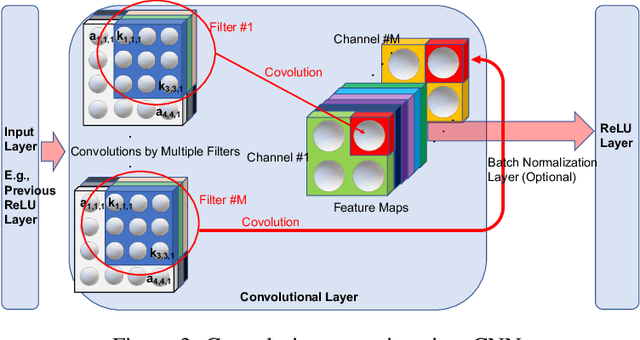

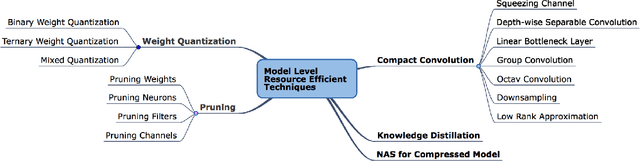

Resource-Efficient Deep Learning: A Survey on Model-, Arithmetic-, and Implementation-Level Techniques

Dec 30, 2021

Abstract: Deep learning is pervasive in our daily life, including self-driving cars, virtual assistants, social network services, healthcare services, face recognition, etc. However, deep neural networks demand substantial compute resources during training and inference. The machine learning community has mainly focused on model-level optimizations such as architectural compression of deep learning models, while the system community has focused on implementation-level optimization. In between, various arithmetic-level optimization techniques have been proposed in the arithmetic community. This article surveys resource-efficient deep learning techniques at the model, arithmetic, and implementation levels and identifies the research gaps across these three levels. Our survey clarifies the influence of higher-level techniques on lower-level ones based on our resource-efficiency metric definition and discusses future trends in resource-efficient deep learning research.

A case study on profiling of an EEG-based brain decoding interface on Cloud and Edge servers

Oct 04, 2021

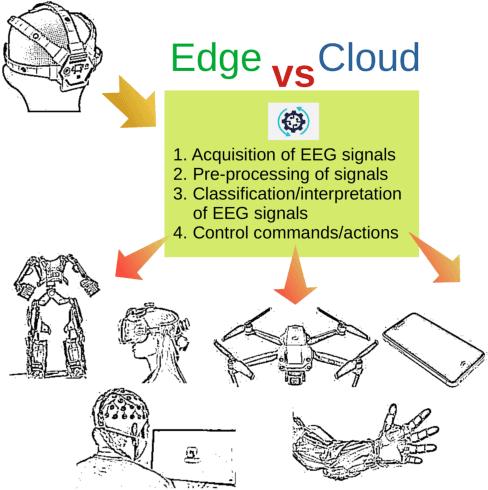

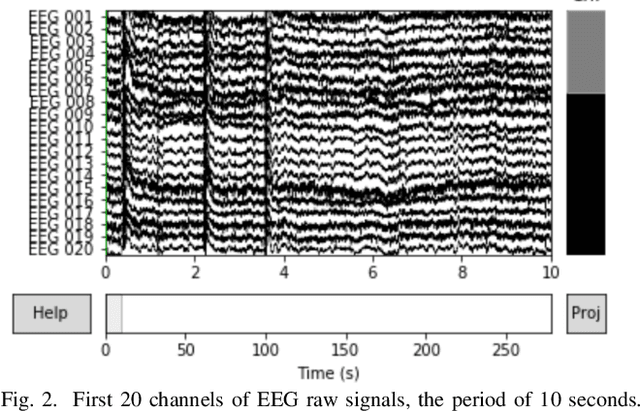

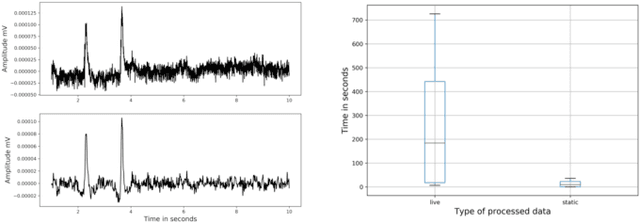

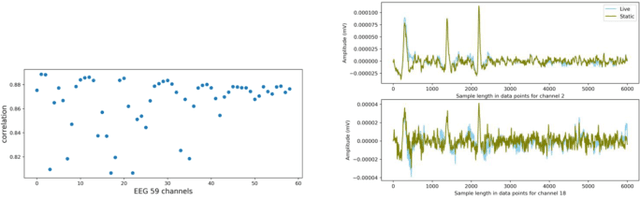

Abstract: Brain-Computer Interfaces (BCIs) convert the electrical brain activity of an interface user into user commands. BCI research studies have demonstrated encouraging results in different areas such as neurorehabilitation, control of artificial limbs, control of computer environments, communication and detection of diseases. Most BCIs use scalp electroencephalography (EEG), which is a non-invasive method to capture brain activity. Although EEG monitoring devices are available on the market, these devices are generally lab-oriented and expensive. Day-to-day use of BCIs is impractical at this time due to the complex techniques required for data preprocessing and signal analysis. This implies that BCI technologies should be improved to facilitate their widespread adoption in Cloud and Edge datacenters. This paper presents a case study on profiling the accuracy and performance of a brain-computer interface which runs on typical Cloud and Edge servers. In particular, we investigate how the accuracy and execution time of the preprocessing phase, i.e. the brain signal filtering phase, of a brain-computer interface vary when processing static data and live streaming data obtained in real time from BCI devices. We identify the optimal packet size for sampling brain signals, which provides the best trade-off between accuracy and performance. Finally, we discuss the pros and cons of using typical Cloud and Edge servers to perform the BCI filtering phase.

Leveraging Transprecision Computing for Machine Vision Applications at the Edge

Aug 29, 2021

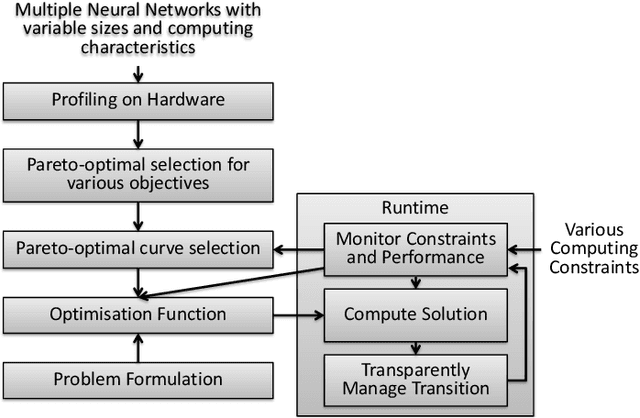

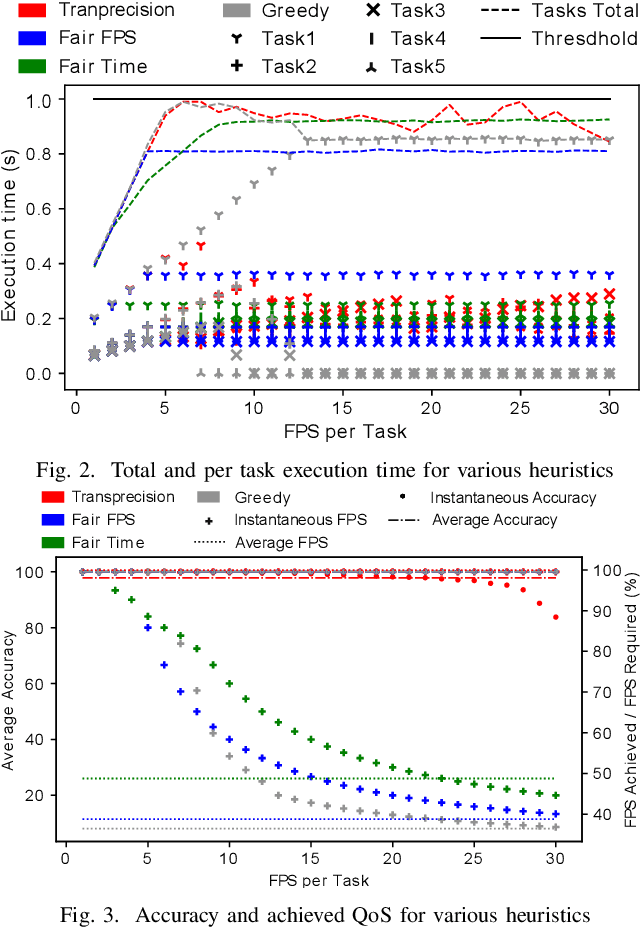

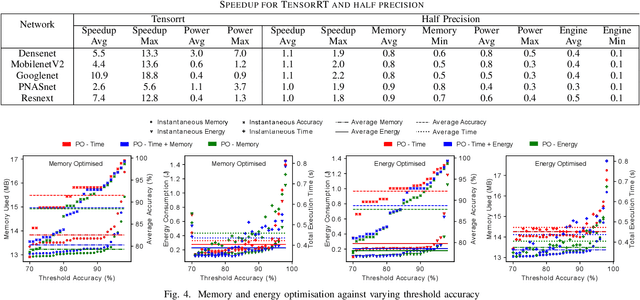

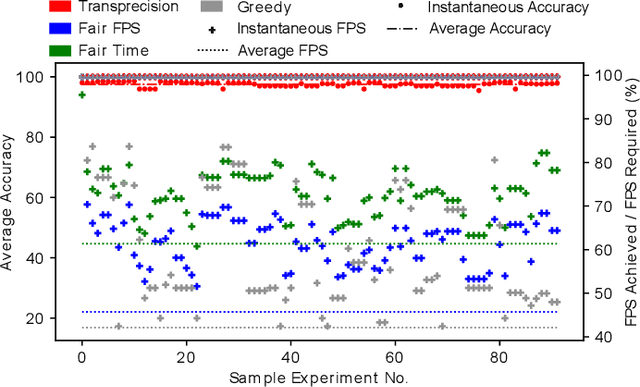

Abstract: Machine vision tasks present challenges for resource-constrained edge devices, particularly as they execute multiple tasks with variable workloads. A robust approach is needed that can adapt dynamically at runtime while maintaining the maximum quality of service (QoS) within resource constraints. The paper presents a lightweight approach that monitors the runtime workload constraint and leverages the accuracy-throughput trade-off. It includes optimisation techniques that find the configuration for each task with optimal accuracy, energy and memory, and that manage transparent switching between configurations. For an accuracy drop of 1%, we show a 1.6x higher achieved frame processing rate, with further improvements possible at lower accuracy.
