A case study on profiling of an EEG-based brain decoding interface on Cloud and Edge servers


Oct 04, 2021

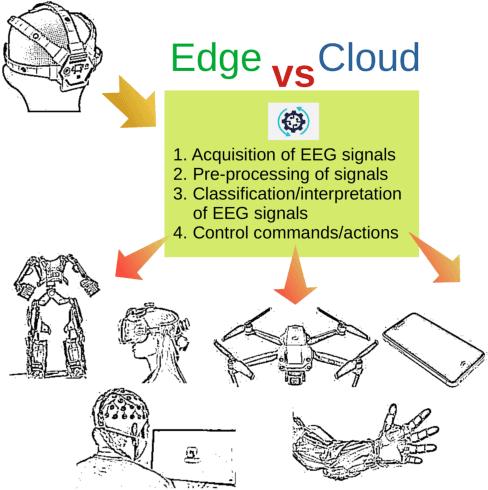

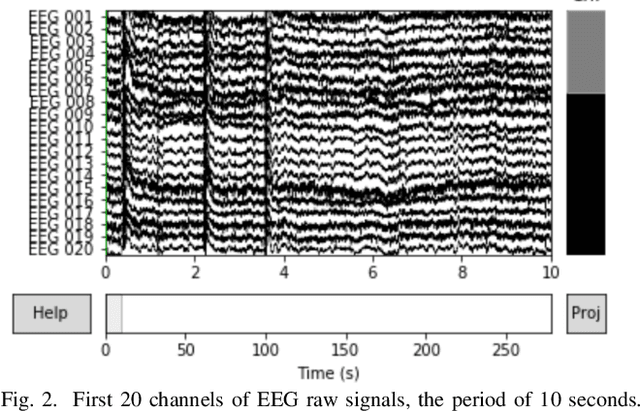

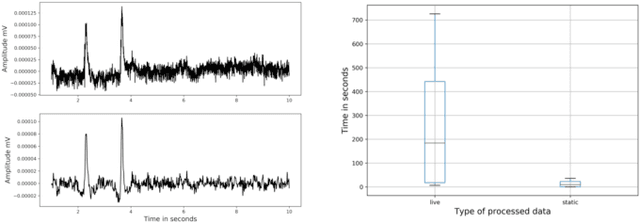

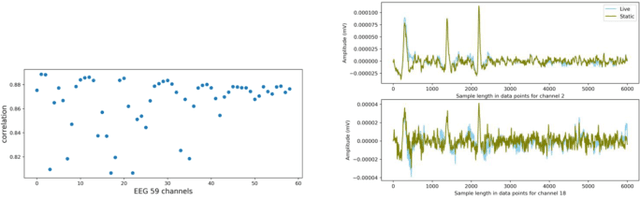

Brain-Computer Interfaces (BCIs) convert the electrical activity of a user's brain into user commands. BCI research has demonstrated encouraging results in areas such as neurorehabilitation, control of artificial limbs, control of computer environments, communication, and detection of diseases. Most BCIs use scalp electroencephalography (EEG), a non-invasive method of capturing brain activity. Although EEG monitoring devices are available on the market, they are generally lab-oriented and expensive. Day-to-day use of BCIs is currently impractical because of the complex techniques required for data preprocessing and signal analysis. This implies that BCI technologies should be improved to facilitate their widespread adoption in Cloud and Edge datacenters. This paper presents a case study on profiling the accuracy and performance of a brain-computer interface that runs on typical Cloud and Edge servers. In particular, we investigate how the accuracy and execution time of the preprocessing phase, i.e. the brain signal filtering phase, of a brain-computer interface vary when processing static data and live streaming data obtained in real time from BCI devices. We identify the packet size for sampling brain signals that provides the best trade-off between accuracy and performance. Finally, we discuss the pros and cons of using typical Cloud and Edge servers to perform the BCI filtering phase.
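The packet-size trade-off described in the abstract can be illustrated with a minimal sketch. It is not the paper's pipeline: the abstract does not name the filter, so a causal moving average stands in for it, and the signal (a 10 Hz sine sampled at 250 Hz), the window length, and the helper names `moving_average`, `filter_in_packets`, and `profile` are all assumptions chosen for illustration. The sketch filters a signal packet by packet, with no state carried across packets, and compares the result and the elapsed time against filtering the whole recording at once.

```python
import math
import time


def moving_average(samples, window=5):
    """Causal moving-average low-pass filter (a stand-in for the real BCI filter)."""
    out = []
    acc = 0.0
    for i, x in enumerate(samples):
        acc += x
        if i >= window:
            acc -= samples[i - window]  # drop the sample that left the window
        out.append(acc / min(i + 1, window))
    return out


def filter_in_packets(signal, packet_size, window=5):
    """Filter each packet independently, with no state carried across packets."""
    out = []
    for start in range(0, len(signal), packet_size):
        out.extend(moving_average(signal[start:start + packet_size], window))
    return out


def profile(signal, packet_size, window=5):
    """Return (mean absolute error vs. whole-signal filtering, elapsed seconds)."""
    reference = moving_average(signal, window)
    t0 = time.perf_counter()
    streamed = filter_in_packets(signal, packet_size, window)
    elapsed = time.perf_counter() - t0
    error = sum(abs(a - b) for a, b in zip(reference, streamed)) / len(signal)
    return error, elapsed


# Synthetic 10 Hz sine sampled at 250 Hz (hypothetical values, not from the paper).
signal = [math.sin(2 * math.pi * 10 * n / 250) for n in range(2000)]
results = {size: profile(signal, size) for size in (10, 50, 250, 2000)}
for size, (err, secs) in results.items():
    print(f"packet={size:5d}  error={err:.5f}  time={secs * 1e3:.3f} ms")
```

In this toy setup, smaller packets introduce more boundary effects (the filter restarts at every packet edge), so the error relative to whole-signal filtering grows as the packet shrinks, while larger packets delay the first output. Timings depend on the machine and are only indicative; a real study would repeat each measurement and use the actual filter and EEG data.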
