Leslie Ching Ow Tiong

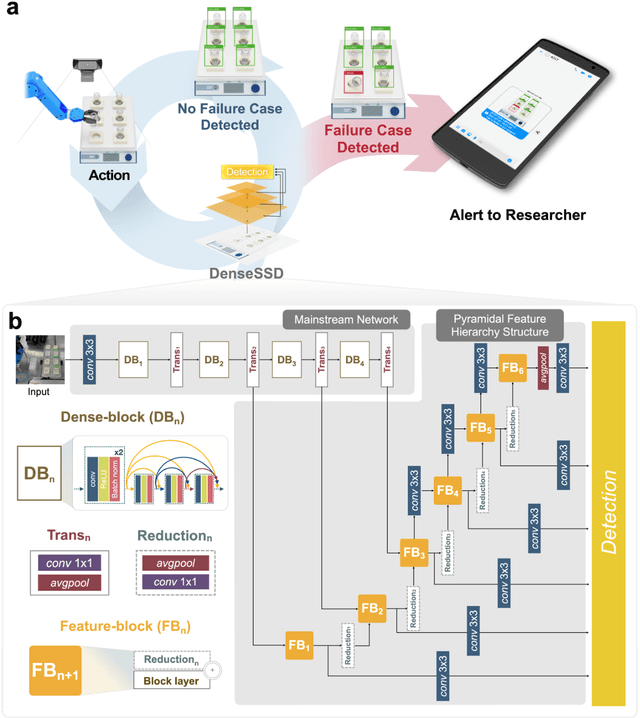

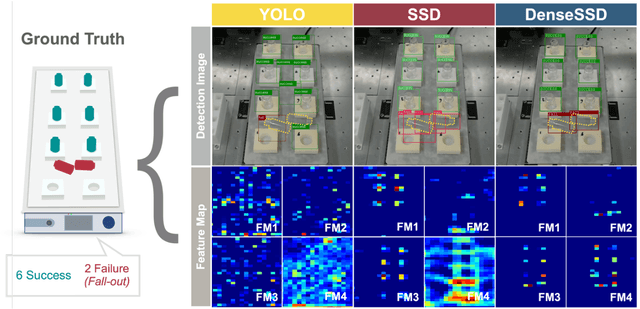

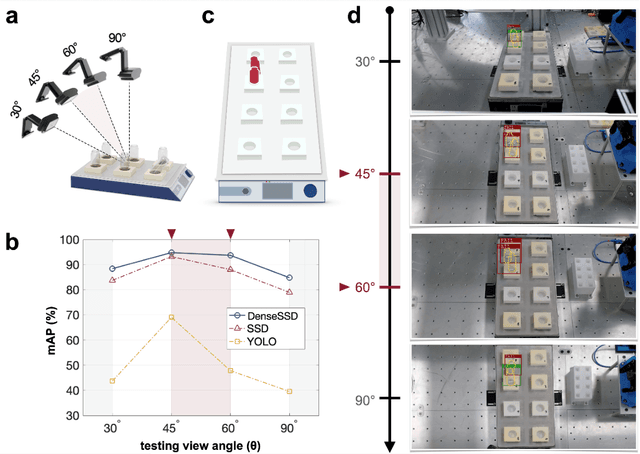

Machine vision for vial positioning detection toward the safe automation of material synthesis

Jun 15, 2022

Abstract: Although robot-based automation in chemistry laboratories can accelerate the material development process, surveillance-free environments may lead to dangerous accidents, primarily due to machine control errors. Object detection techniques can play vital roles in addressing these safety issues; however, state-of-the-art detectors, including single-shot detector (SSD) models, suffer from insufficient accuracy in environments involving complex and noisy scenes. With the aim of improving safety in a surveillance-free laboratory, we report a novel deep learning (DL)-based object detector, namely, DenseSSD. For the foremost and frequent problem of detecting vial positions, DenseSSD achieved a mean average precision (mAP) over 95% on a complex dataset involving both empty and solution-filled vials, greatly exceeding those of conventional detectors; such high precision is critical to minimizing failure-induced accidents. Additionally, DenseSSD was observed to be highly insensitive to environmental changes, maintaining its high precision under variations in solution color or testing view angle. The robustness of DenseSSD would allow more flexible equipment settings. This work demonstrates that DenseSSD is useful for enhancing safety in an automated material synthesis environment, and it can be extended to various applications where high detection accuracy and speed are both needed.
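The mAP figure quoted above is normally built on intersection over union (IoU), which decides whether a predicted box counts as a hit against a ground-truth box. The abstract does not state which IoU threshold or evaluation protocol was used, so the following is only a minimal sketch of the IoU step; the function name and the `(x_min, y_min, x_max, y_max)` box layout are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Boxes are (x_min, y_min, x_max, y_max); this layout is an assumption,
    not something specified in the abstract.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union = sum of both areas minus the overlap counted twice.
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0
```

A detection is then usually treated as correct when its IoU with a ground-truth vial box exceeds a chosen threshold, and average precision is computed per class from the resulting precision-recall curve.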

3D-C2FT: Coarse-to-fine Transformer for Multi-view 3D Reconstruction

May 29, 2022

Abstract: Recently, the transformer model has been successfully employed for the multi-view 3D reconstruction problem. However, challenges remain in designing an attention mechanism to explore the multi-view features and exploit their relations for reinforcing the encoding-decoding modules. This paper proposes a new model, namely 3D coarse-to-fine transformer (3D-C2FT), by introducing a novel coarse-to-fine (C2F) attention mechanism for encoding multi-view features and rectifying defective 3D objects. The C2F attention mechanism enables the model to learn multi-view information flow and synthesize 3D surface correction in a coarse to fine-grained manner. The proposed model is evaluated on the ShapeNet and Multi-view Real-life datasets. Experimental results show that 3D-C2FT achieves notable results and outperforms several competing models on these datasets.

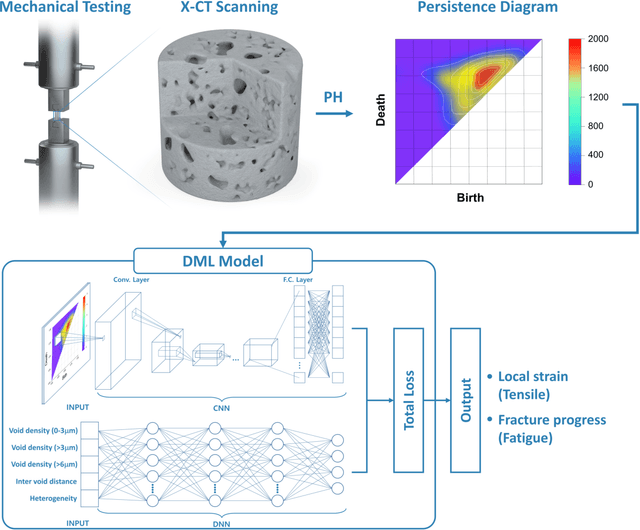

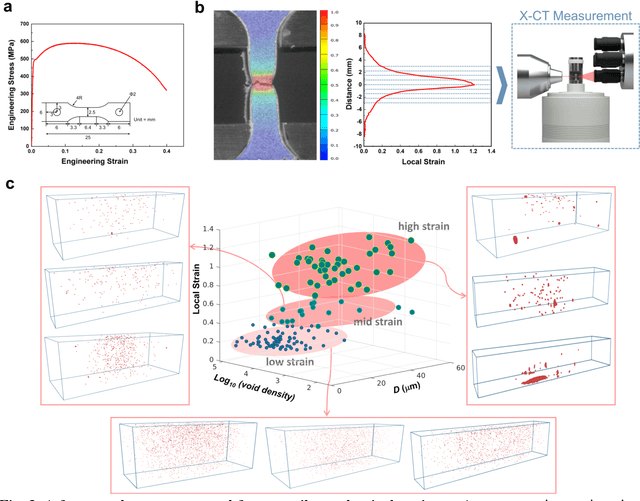

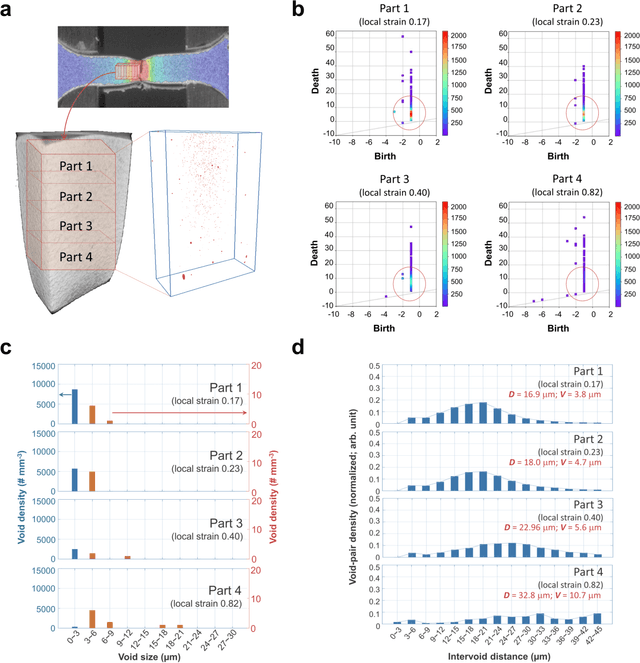

Predicting failure characteristics of structural materials via deep learning based on nondestructive void topology

May 17, 2022

Abstract: Accurate prediction of the failure progression of structural materials is critical for preventing failure-induced accidents. Despite considerable mechanics modeling-based efforts, accurate prediction remains a challenging task in real-world environments due to unexpected damage factors and defect evolutions. Here, we report a novel method for predicting material failure characteristics that uniquely combines nondestructive X-ray computed tomography (X-CT), persistent homology (PH), and deep multimodal learning (DML). The combined method exploits the microstructural defect state at the time of material examination as an input, and outputs the failure-related properties. Our method is demonstrated to be effective using two types of fracture datasets (tensile and fatigue datasets) with ferritic low alloy steel as a representative structural material. The method achieves a mean absolute error (MAE) of 0.09 in predicting the local strain with the tensile dataset and an MAE of 0.14 in predicting the fracture progress with the fatigue dataset. These high accuracies are mainly due to PH processing of the X-CT images, which transforms complex and noisy three-dimensional X-CT images into compact two-dimensional persistence diagrams that preserve key topological features such as the internal void size, density, and distribution. The combined PH and DML processing of 3D X-CT data is our unique approach enabling reliable failure predictions at the time of material examination based on void topology progressions, and the method can be extended to various nondestructive failure tests for practical use.
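For reference, the MAE reported above is the average of the absolute differences between predicted and measured values. The sketch below shows the metric only; the function name and the sample numbers are illustrative assumptions and are not taken from the paper's datasets.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up values purely to show the call; not data from the study.
example_mae = mean_absolute_error([1.0, 2.0, 3.0], [1.5, 2.0, 2.0])
```

Because MAE is expressed in the units of the predicted quantity, the 0.09 and 0.14 figures are only comparable to each other if local strain and fracture progress are scaled the same way, which the abstract does not specify.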

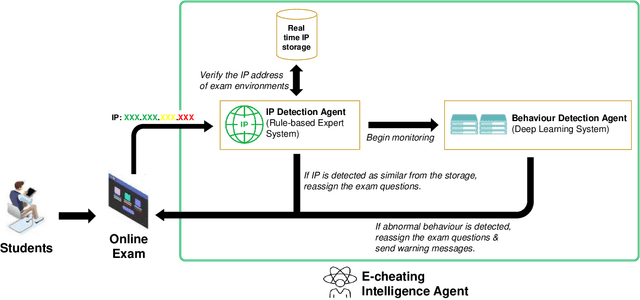

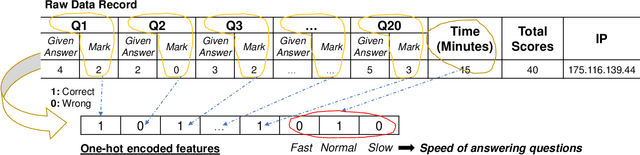

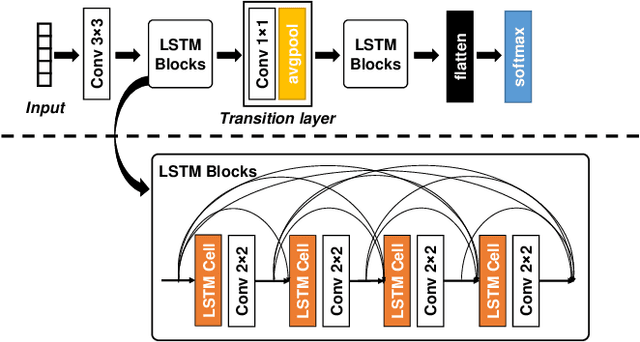

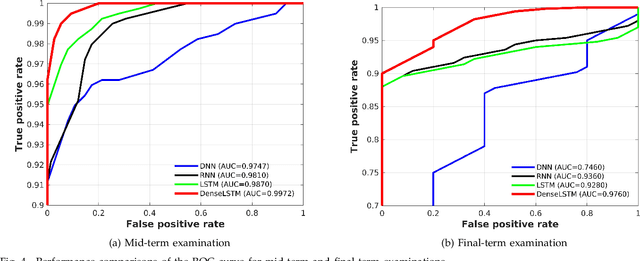

Online Assessment Misconduct Detection using Internet Protocol and Behavioural Classification

Jan 24, 2022

Abstract: With the recent prevalence of remote education, academic assessments are often conducted online, leading to further concerns surrounding assessment misconduct. This paper investigates the potential for online assessment misconduct (e-cheating) and proposes practical countermeasures against it. The mechanism for detecting the practices of online cheating is presented in the form of an e-cheating intelligent agent, comprising an internet protocol (IP) detector and a behavioural monitor. The IP detector is an auxiliary detector which assigns randomised and unique assessment sets as an early procedure to reduce potential misconduct. The behavioural monitor scans for irregularities in assessment responses from the candidates, further reducing any misconduct attempts. This is highlighted through the proposal of the DenseLSTM using a deep learning approach. Additionally, a new PT Behavioural Database is presented and made publicly available. Experiments conducted on this dataset confirm the effectiveness of the DenseLSTM, resulting in classification accuracies of up to 90.7%.

E-cheating Prevention Measures: Detection of Cheating at Online Examinations Using Deep Learning Approach -- A Case Study

Jan 25, 2021

Abstract: This study addresses the current issues in online assessments, which are particularly relevant during the Covid-19 pandemic. Our focus is on academic dishonesty associated with online assessments. We investigated the prevalence of potential e-cheating using a case study and propose preventive measures that could be implemented. We have utilised an e-cheating intelligence agent as a mechanism for detecting the practices of online cheating, which is composed of two major modules: the internet protocol (IP) detector and the behaviour detector. The intelligence agent monitors the behaviour of the students and has the ability to prevent and detect any malicious practices. It can be used to assign randomised multiple-choice questions in a course examination and be integrated with online learning programs to monitor the behaviour of the students. The proposed method was tested on various data sets, confirming its effectiveness. The results revealed accuracies of 68% for the deep neural network (DNN); 92% for the long short-term memory (LSTM); 95% for the DenseLSTM; and 86% for the recurrent neural network (RNN).

Periocular in the Wild Embedding Learning with Cross-Modal Consistent Knowledge Distillation

Dec 12, 2020

Abstract: The periocular biometric, or the peripheral area of the eye, is a collaborative alternative to the face, especially if a face is occluded or masked. In practice, the periocular biometric alone captures the least salient facial features, thereby suffering from intra-class compactness and inter-class dispersion issues, particularly in the wild. To address these problems, we transfer useful information from face to support periocular modality by means of knowledge distillation (KD) for embedding learning. However, applying typical KD techniques to heterogeneous modalities directly is suboptimal. In this paper, we put forward a deep face-to-periocular distillation network, coined cross-modal consistent knowledge distillation (CM-CKD). The three key ingredients of CM-CKD are (1) shared-weight networks, (2) consistent batch normalization, and (3) a bidirectional consistency distillation for face and periocular through an effectual CKD loss. To be more specific, we leverage face modality for periocular embedding learning, but only periocular images are targeted for identification or verification tasks. Extensive experiments on six constrained and unconstrained periocular datasets disclose that the CM-CKD-learned periocular embeddings extend identification and verification performance by 50% in terms of relative performance gain computed based upon face and periocular baselines. The experiments also reveal that the CM-CKD-learned periocular features enjoy better subject-wise cluster separation, thereby refining the overall accuracy performance.
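As background for the "typical KD techniques" mentioned above, the following is a minimal sketch of the generic temperature-scaled distillation loss: the KL divergence between softened teacher and student class distributions. It is not the CKD loss, whose definition the abstract does not give; the function names and the default temperature are assumptions for illustration.

```python
import math


def log_softmax(logits, temperature):
    """Numerically stable log-softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_total for s in scaled]


def distillation_loss(teacher_logits, student_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Generic knowledge-distillation loss; an illustrative stand-in only.
    """
    log_p = log_softmax(teacher_logits, temperature)  # teacher (e.g. face)
    log_q = log_softmax(student_logits, temperature)  # student (e.g. periocular)
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return temperature ** 2 * kl
```

One way to read "bidirectional" in this generic setting would be to sum the loss in both directions, `distillation_loss(a, b) + distillation_loss(b, a)`, though whether CM-CKD does this is not stated in the abstract.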

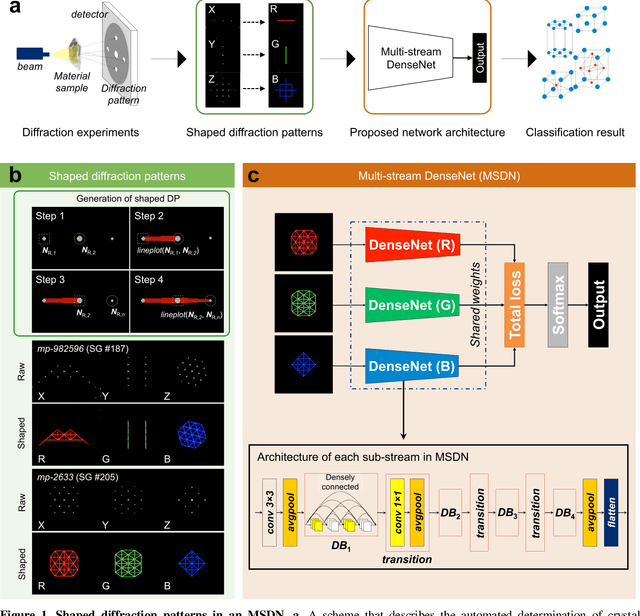

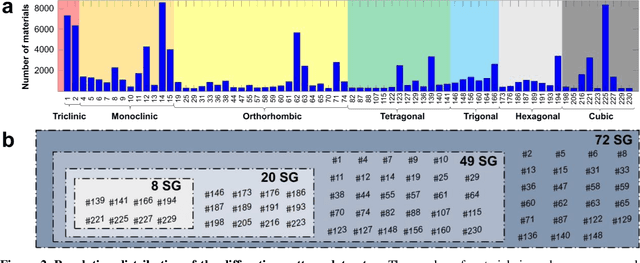

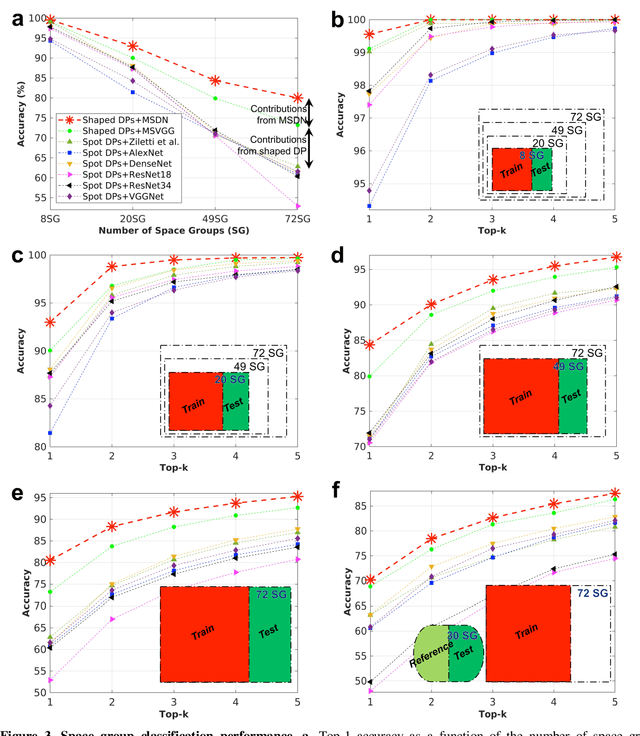

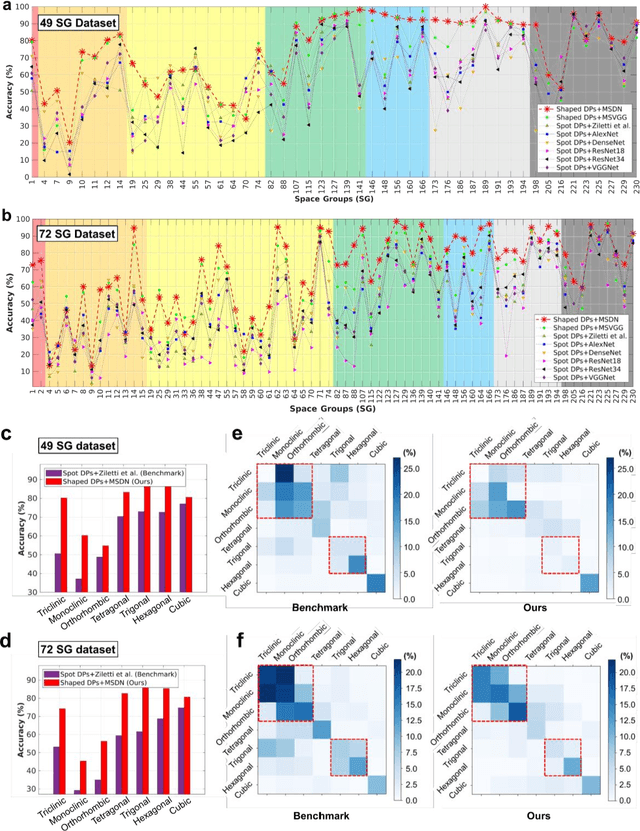

Identification of Crystal Symmetry from Noisy Diffraction Patterns by A Shape Analysis and Deep Learning

May 26, 2020

Abstract: The robust and automated determination of crystal symmetry is of utmost importance in material characterization and analysis. Recent studies have shown that deep learning (DL) methods can effectively reveal the correlations between X-ray or electron-beam diffraction patterns and crystal symmetry. Despite their promise, most of these studies have been limited to identifying relatively few classes into which a target material may be grouped. On the other hand, the DL-based identification of crystal symmetry suffers from a drastic drop in accuracy for problems involving classification into tens or hundreds of symmetry classes (e.g., up to 230 space groups), severely limiting its practical usage. Here, we demonstrate that a combined approach of shaping diffraction patterns and implementing them in a multistream DenseNet (MSDN) substantially improves the accuracy of classification. Even with an imbalanced dataset of 108,658 individual crystals sampled from 72 space groups, our model achieves 80.2% space group classification accuracy, outperforming conventional benchmark models by 17-27 percentage points (%p). The enhancement can be largely attributed to the pattern shaping strategy, through which the subtle changes in patterns between symmetrically close crystal systems (e.g., monoclinic vs. orthorhombic or trigonal vs. hexagonal) are well differentiated. We additionally find that the novel MSDN architecture is advantageous for capturing patterns in a richer but less redundant manner relative to conventional convolutional neural networks. The newly proposed protocols in regard to both input descriptor processing and DL architecture enable accurate space group classification and thus improve the practical usage of the DL approach in crystal symmetry identification.
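The gain above is stated in percentage points, which is an absolute difference in accuracy rather than a relative improvement. The short sketch below works out what the quoted figures imply for the benchmark models; the helper names are hypothetical, and because the abstract gives the gains as rounded values (17 and 27), the results are approximate.

```python
def implied_baseline(model_acc, gain_pp):
    """Baseline accuracy (%) implied by an absolute gain in percentage points."""
    return model_acc - gain_pp


def relative_gain_percent(model_acc, gain_pp):
    """The same gain expressed relative to the implied baseline, in percent."""
    return 100.0 * gain_pp / implied_baseline(model_acc, gain_pp)


# Figures from the abstract: 80.2% accuracy, 17-27 percentage-point gains.
baselines = [implied_baseline(80.2, g) for g in (17, 27)]       # about 63.2 and 53.2
relative = [relative_gain_percent(80.2, g) for g in (17, 27)]   # roughly 27% and 51%
```

So the benchmark models would sit at roughly 53% to 63% accuracy, and the same improvement read as a relative gain is noticeably larger than the percentage-point figure.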

Periocular Recognition in the Wild with Orthogonal Combination of Local Binary Coded Pattern in Dual-stream Convolutional Neural Network

Mar 19, 2019

Abstract: Despite the advancements made in periocular recognition, both the datasets and periocular recognition in the wild remain a challenge. In this paper, we propose a multilayer fusion approach by means of a pair of shared-parameter (dual-stream) convolutional neural networks, where each network accepts RGB data and a novel colour-based texture descriptor, namely Orthogonal Combination-Local Binary Coded Pattern (OC-LBCP), for periocular recognition in the wild. Specifically, two distinct late-fusion layers are introduced in the dual-stream network to aggregate the RGB data and OC-LBCP. Thus, the network benefits from these late-fusion layers through a gain in accuracy. We also introduce and share a new dataset for periocular recognition in the wild, namely the Ethnic-ocular dataset, for benchmarking. The proposed network has also been assessed on one publicly available dataset, namely UBIPr. The proposed network outperforms several competing approaches on these datasets.
