Leonardo Teixeira

Contextual Unsupervised Outlier Detection in Sequences

Nov 06, 2021

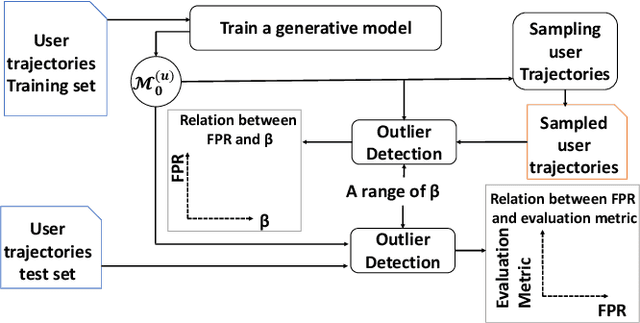

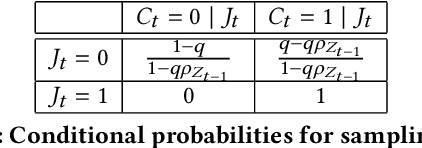

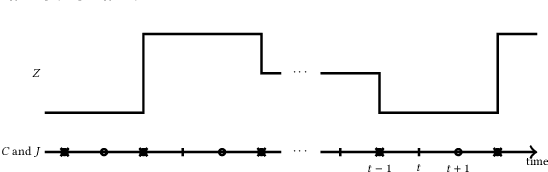

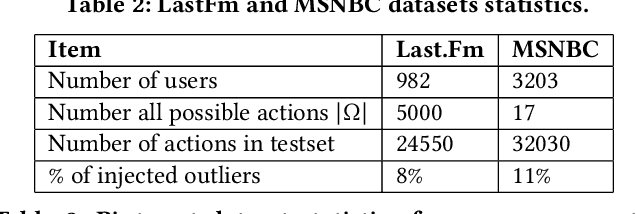

Abstract: This work proposes an unsupervised learning framework for trajectory (sequence) outlier detection that combines ranking tests with user sequence models. The overall framework identifies sequence outliers at a desired false positive rate (FPR), in an otherwise parameter-free manner. We evaluate our methodology on a collection of real and simulated datasets based on user actions at the websites last.fm and msnbc.com, where we know ground truth, and demonstrate improved accuracy over existing approaches. We also apply our approach to a large real-world dataset of Pinterest and Facebook users, where we find that users tend to re-share Pinterest posts of Facebook friends significantly more than other types of users, pointing to a potential influence of Facebook friendship on sharing behavior on Pinterest.
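To make the idea of flagging outliers at a desired FPR concrete, here is a minimal sketch of a generic rank-based test. It is not the paper's method: it assumes each sequence has already been reduced to a single anomaly score (for example, a negative log-likelihood under some sequence model), that higher scores are more anomalous, and that a set of reference scores from typical sequences is available. The function names `rank_p_values` and `flag_outliers` are hypothetical.

```python
import numpy as np

def rank_p_values(reference_scores, test_scores):
    """Empirical p-value of each test score from its rank among reference scores.

    Assumption: higher score means more anomalous.
    """
    ref = np.sort(np.asarray(reference_scores, dtype=float))
    test = np.asarray(test_scores, dtype=float)
    # Count reference scores that are >= each test score.
    n_ge = ref.size - np.searchsorted(ref, test, side="left")
    # The +1 terms keep the p-value strictly positive (standard rank-test correction).
    return (n_ge + 1) / (ref.size + 1)

def flag_outliers(reference_scores, test_scores, fpr=0.05):
    """Flag test scores whose rank-based p-value is at most the target FPR."""
    return rank_p_values(reference_scores, test_scores) <= fpr
```

Under these assumptions, the only quantity the user chooses is the target FPR; the threshold itself follows from the ranks of the reference scores.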

Deep Lifetime Clustering

Oct 02, 2019

Abstract: The goal of lifetime clustering is to develop an inductive model that maps subjects into $K$ clusters according to their underlying (unobserved) lifetime distribution. We introduce a neural-network based lifetime clustering model that can find cluster assignments by directly maximizing the divergence between the empirical lifetime distributions of the clusters. Accordingly, we define a novel clustering loss function over the lifetime distributions (of entire clusters) based on a tight upper bound of the two-sample Kuiper test p-value. The resultant model is robust to the modeling issues associated with the unobservability of termination signals, and does not assume proportional hazards. Our results in real and synthetic datasets show significantly better lifetime clusters (as evaluated by C-index, Brier Score, Logrank score and adjusted Rand index) as compared to competing approaches.
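For readers unfamiliar with the Kuiper test mentioned above, the following is a minimal sketch of the plain two-sample Kuiper statistic, $V = D^{+} + D^{-}$, computed from two empirical CDFs. It is only an illustration under simplifying assumptions: it works on fully observed samples, ignores censoring, and does not implement the p-value upper bound or the clustering loss described in the abstract. The function name `kuiper_statistic` is hypothetical.

```python
import numpy as np

def kuiper_statistic(x, y):
    """Two-sample Kuiper statistic V = D+ + D- between empirical CDFs of x and y."""
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    # Evaluate both empirical CDFs at every observed point.
    pooled = np.concatenate([x, y])
    cdf_x = np.searchsorted(x, pooled, side="right") / x.size
    cdf_y = np.searchsorted(y, pooled, side="right") / y.size
    diff = cdf_x - cdf_y
    d_plus = diff.max()       # largest amount by which F_x exceeds F_y
    d_minus = (-diff).max()   # largest amount by which F_y exceeds F_x
    return d_plus + d_minus
```

A larger value of the statistic indicates that the two empirical distributions differ more, which is the sense in which a clustering objective could push cluster lifetime distributions apart.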

Are Graph Neural Networks Miscalibrated?

Jun 03, 2019

Abstract: Graph Neural Networks (GNNs) have proven to be successful in many classification tasks, outperforming previous state-of-the-art methods in terms of accuracy. However, accuracy alone is not enough for high-stakes decision making. Decision makers want to know the likelihood that a specific GNN prediction is correct. For this purpose, obtaining calibrated models is essential. In this work, we perform an empirical evaluation of the calibration of state-of-the-art GNNs on multiple datasets. Our experiments show that GNNs can be calibrated in some datasets but also badly miscalibrated in others, and that state-of-the-art calibration methods are helpful but do not fix the problem.
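One common way to quantify calibration is the expected calibration error (ECE), which bins predictions by confidence and averages the gap between accuracy and mean confidence in each bin. The sketch below is a generic illustration; the abstract does not state which calibration metrics the paper uses, so the choice of ECE, the equal-width binning, and the function name `expected_calibration_error` are all assumptions.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width confidence bins (lo, hi]; confidence 0 falls in the first bin."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    # Map each confidence in [0, 1] to a bin index in 0..n_bins-1.
    idx = np.clip(np.ceil(confidences * n_bins).astype(int) - 1, 0, n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            # Gap between empirical accuracy and average confidence in this bin.
            gap = abs(correct[mask].mean() - confidences[mask].mean())
            # Weight the gap by the fraction of predictions in the bin.
            ece += mask.mean() * gap
    return float(ece)
```

For example, four predictions made with confidence 0.9, of which three are correct, give an accuracy of 0.75 in that bin and therefore an ECE of 0.15; a perfectly calibrated model would score 0.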
