Mohamed A. Zahran

Contextual Unsupervised Outlier Detection in Sequences

Nov 06, 2021

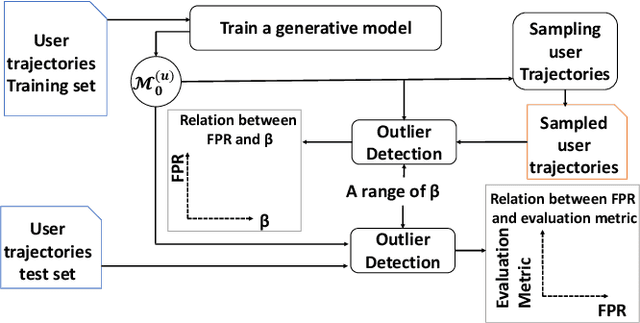

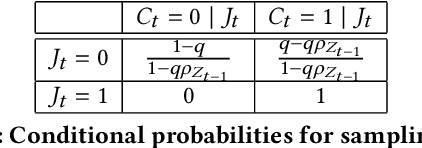

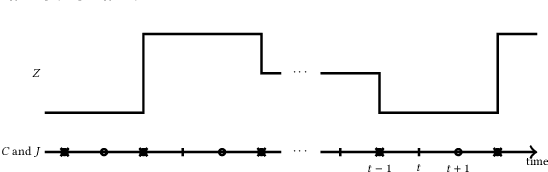

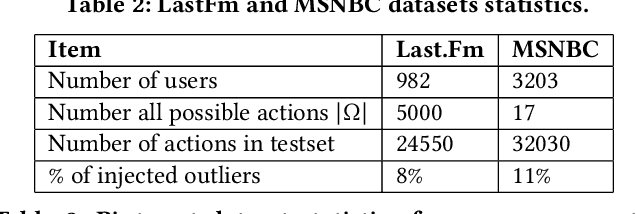

Abstract:This work proposes an unsupervised learning framework for trajectory (sequence) outlier detection that combines ranking tests with user sequence models. The overall framework identifies sequence outliers at a desired false positive rate (FPR), in an otherwise parameter-free manner. We evaluate our methodology on a collection of real and simulated datasets based on user actions at the websites last.fm and msnbc.com, where we know ground truth, and demonstrate improved accuracy over existing approaches. We also apply our approach to a large real-world dataset of Pinterest and Facebook users, where we find that users tend to re-share Pinterest posts of Facebook friends significantly more than other types of users, pointing to a potential influence of Facebook friendship on sharing behavior on Pinterest.

Infinity Learning: Learning Markov Chains from Aggregate Steady-State Observations

Feb 11, 2020

Abstract:We consider the task of learning a parametric Continuous Time Markov Chain (CTMC) sequence model without examples of sequences, where the training data consists entirely of aggregate steady-state statistics. Making the problem harder, we assume that the states we wish to predict are unobserved in the training data. Specifically, given a parametric model over the transition rates of a CTMC and some known transition rates, we wish to extrapolate its steady state distribution to states that are unobserved. A technical roadblock to learn a CTMC from its steady state has been that the chain rule to compute gradients will not work over the arbitrarily long sequences necessary to reach steady state ---from where the aggregate statistics are sampled. To overcome this optimization challenge, we propose $\infty$-SGD, a principled stochastic gradient descent method that uses randomly-stopped estimators to avoid infinite sums required by the steady state computation, while learning even when only a subset of the CTMC states can be observed. We apply $\infty$-SGD to a real-world testbed and synthetic experiments showcasing its accuracy, ability to extrapolate the steady state distribution to unobserved states under unobserved conditions (heavy loads, when training under light loads), and succeeding in difficult scenarios where even a tailor-made extension of existing methods fails.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge