Lazarus Chok

AutoEncoding Tree for City Generation and Applications

Sep 27, 2023

Abstract: City modeling and generation have attracted increasing interest in applications such as gaming, urban planning, and autonomous driving. Unlike previous work, which focused on generating single objects or indoor scenes, city generation must handle huge volumes of spatial data, which poses a challenge for generative models. The scarcity of publicly available 3D real-world city datasets further hinders the development of city generation methods. In this paper, we first collect over 3,000,000 geo-referenced objects for New York, Zurich, Tokyo, Berlin, Boston, and several other large cities. Based on this dataset, we propose AETree, a tree-structured auto-encoder neural network, for city generation. Specifically, we first propose a novel Spatial-Geometric Distance (SGD) metric to measure the similarity between building layouts, and then construct a binary tree over the raw geometric data of the buildings based on the SGD metric. Next, we present a tree-structured network whose encoder learns to extract and merge spatial information iteratively from the bottom up. The resulting global representation is then decoded in the reverse direction for reconstruction or generation. To address the long-range dependency problem that arises as the number of tree levels increases, a Long Short-Term Memory (LSTM) cell is employed as the basic network element of AETree. Moreover, we introduce a novel metric, Overlapping Area Ratio (OAR), to quantitatively evaluate the generation results. Experiments on the collected dataset demonstrate the effectiveness of the proposed model on 2D and 3D city generation. Furthermore, the latent features learned by AETree can serve downstream urban planning applications.
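As a rough illustration of building a binary tree over building geometry from a pairwise distance, the sketch below greedily merges the closest pair of nodes until one root remains. It is not the paper's method: the abstract does not define SGD, so `distance` here is a hypothetical stand-in (centre distance plus a weighted size difference), and the `Node` box representation, `alpha`, and the bounding-box `merge` rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from itertools import combinations
from math import hypot
from typing import List, Optional


@dataclass
class Node:
    # Axis-aligned box: centre (cx, cy), width w, height h (assumed representation).
    cx: float
    cy: float
    w: float
    h: float
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def distance(a: Node, b: Node, alpha: float = 1.0) -> float:
    # Hypothetical stand-in for SGD: a spatial term plus a weighted geometric term.
    spatial = hypot(a.cx - b.cx, a.cy - b.cy)
    geometric = abs(a.w - b.w) + abs(a.h - b.h)
    return spatial + alpha * geometric


def merge(a: Node, b: Node) -> Node:
    # Assumed merge rule: the parent is the bounding box of its two children.
    x0 = min(a.cx - a.w / 2, b.cx - b.w / 2)
    x1 = max(a.cx + a.w / 2, b.cx + b.w / 2)
    y0 = min(a.cy - a.h / 2, b.cy - b.h / 2)
    y1 = max(a.cy + a.h / 2, b.cy + b.h / 2)
    return Node((x0 + x1) / 2, (y0 + y1) / 2, x1 - x0, y1 - y0, a, b)


def build_tree(leaves: List[Node]) -> Node:
    # Greedy bottom-up construction; requires at least one leaf.
    nodes = list(leaves)
    while len(nodes) > 1:
        i, j = min(
            combinations(range(len(nodes)), 2),
            key=lambda p: distance(nodes[p[0]], nodes[p[1]]),
        )
        parent = merge(nodes[i], nodes[j])
        nodes = [n for k, n in enumerate(nodes) if k not in (i, j)] + [parent]
    return nodes[0]


def count_leaves(node: Node) -> int:
    if node.left is None and node.right is None:
        return 1
    return count_leaves(node.left) + count_leaves(node.right)
```

This naive pair search costs O(n^2) per merge step, so it only suits small toy inputs; it is meant to show the bottom-up merging idea, not to scale to a city-sized dataset.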

Segmenting across places: The need for fair transfer learning with satellite imagery

Apr 15, 2022

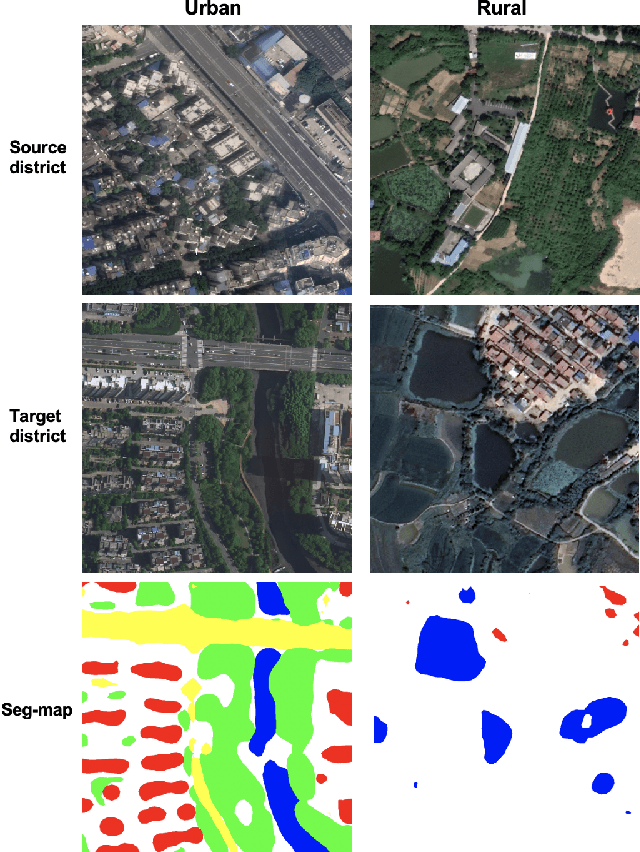

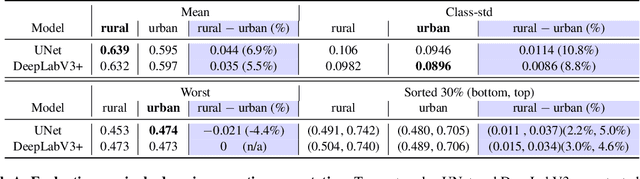

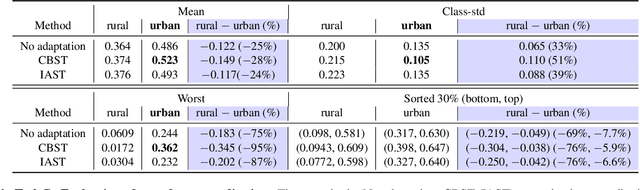

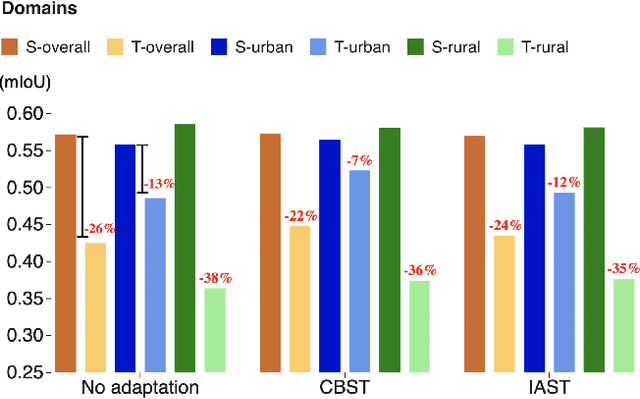

Abstract: The increasing availability of high-resolution satellite imagery has enabled the use of machine learning to support land-cover measurement and inform policy-making. However, labelling satellite images is expensive, and labels are available for only some locations. This prompts the use of transfer learning to adapt models from data-rich locations to others. Given the potential for high-impact applications of satellite imagery across geographies, a systematic assessment of the implications of transfer learning is warranted. In this work, we consider the task of land-cover segmentation and study the fairness implications of transferring models across locations. We leverage a large satellite image segmentation benchmark with 5987 images from 18 districts (9 urban and 9 rural). Using fairness metrics, we quantify disparities in model performance along two axes: across urban and rural locations, and across land-cover classes. Findings show that state-of-the-art models have better overall accuracy in rural areas than in urban areas, though unsupervised domain adaptation methods transfer learning better to urban than to rural areas and enlarge fairness gaps. In analysing the reasons for these findings, we show that raw satellite images are overall more dissimilar between source and target districts for rural than for urban locations. This work highlights the need to conduct fairness analysis for satellite imagery segmentation models and motivates the development of methods for fair transfer learning so as not to introduce disparities between places, particularly between urban and rural locations.
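To make the idea of quantifying a performance disparity between location groups concrete, here is a minimal sketch. The abstract does not say which fairness metrics are used, so the choice of per-class intersection over union (IoU) and of a simple difference between group means is an assumption, as are the function names `class_iou` and `group_gap`.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple


def class_iou(pred: Sequence[int], target: Sequence[int], cls: int) -> Optional[float]:
    # IoU of one class over flattened label maps; None if the class is absent from both.
    inter = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
    union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
    return inter / union if union else None


def group_gap(scores: List[Tuple[str, float]], a: str, b: str) -> float:
    # Assumed disparity measure: mean score of group `a` minus mean score of group `b`.
    by_group: Dict[str, List[float]] = defaultdict(list)
    for group, value in scores:
        by_group[group].append(value)
    mean_a = sum(by_group[a]) / len(by_group[a])
    mean_b = sum(by_group[b]) / len(by_group[b])
    return mean_a - mean_b
```

With per-district scores tagged as `"urban"` or `"rural"`, `group_gap(scores, "rural", "urban")` is positive when the model does better on rural districts. The same function could be applied with land-cover classes as the groups to cover the second axis.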
