Laura L. Pullum

Review of Metrics to Measure the Stability, Robustness and Resilience of Reinforcement Learning

Mar 22, 2022

Abstract: Reinforcement learning has received significant interest in recent years, due primarily to the successes of deep reinforcement learning at solving many challenging tasks such as playing Chess, Go and online computer games. However, with the increasing focus on reinforcement learning, applications outside of gaming and simulated environments require understanding the robustness, stability, and resilience of reinforcement learning methods. To this end, we conducted a comprehensive literature review to characterize the available literature on these three behaviors as they pertain to reinforcement learning. We classify the quantitative and theoretical approaches used to indicate or measure robustness, stability, and resilience behaviors. In addition, we identified the action or event to which the quantitative approaches were attempting to be stable, robust, or resilient. Finally, we provide a decision tree useful for selecting metrics to quantify the behaviors. We believe that this is the first comprehensive review of stability, robustness and resilience specifically geared towards reinforcement learning.

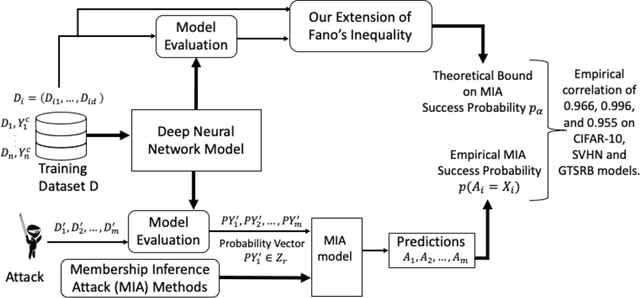

An Extension of Fano's Inequality for Characterizing Model Susceptibility to Membership Inference Attacks

Sep 17, 2020

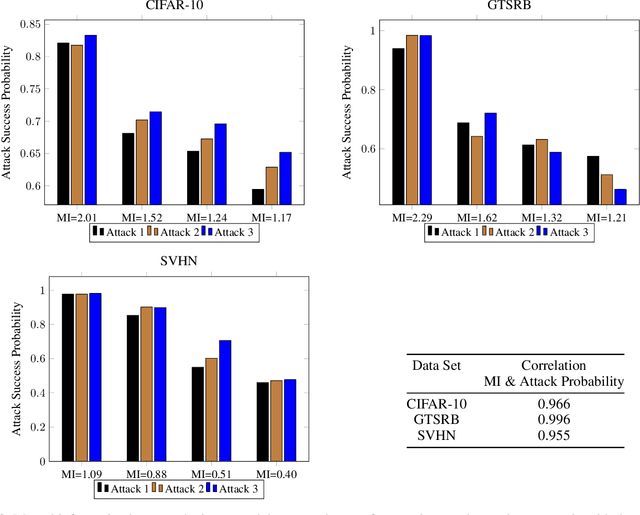

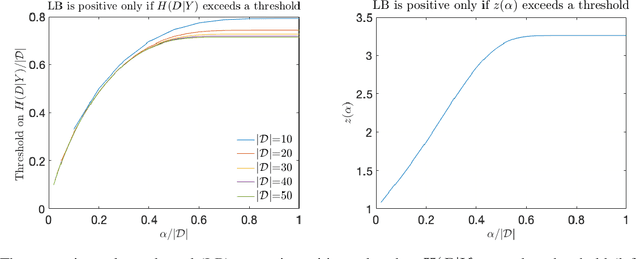

Abstract: Deep neural networks have been shown to be vulnerable to membership inference attacks wherein the attacker aims to detect whether specific input data were used to train the model. These attacks can potentially leak private or proprietary data. We present a new extension of Fano's inequality and employ it to theoretically establish that the probability of success for a membership inference attack on a deep neural network can be bounded using the mutual information between its inputs and its activations. This enables the use of mutual information to measure the susceptibility of a DNN model to membership inference attacks. In our empirical evaluation, we show that the correlation between the mutual information and the susceptibility of the DNN model to membership inference attacks is 0.966, 0.996, and 0.955 for CIFAR-10, SVHN and GTSRB models, respectively.
