Lasse Vuursteen

Optimal Federated Learning for Functional Mean Estimation under Heterogeneous Privacy Constraints

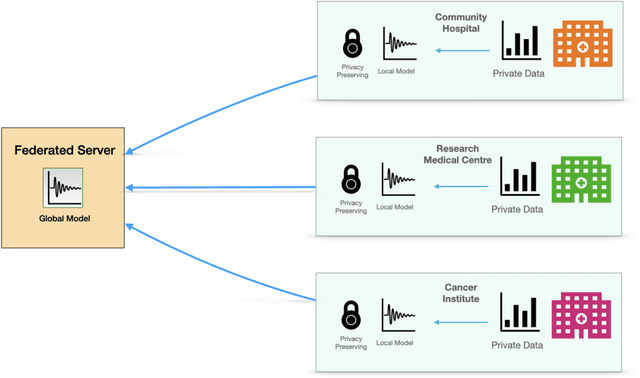

Dec 25, 2024Abstract:Federated learning (FL) is a distributed machine learning technique designed to preserve data privacy and security, and it has gained significant importance due to its broad range of applications. This paper addresses the problem of optimal functional mean estimation from discretely sampled data in a federated setting. We consider a heterogeneous framework where the number of individuals, measurements per individual, and privacy parameters vary across one or more servers, under both common and independent design settings. In the common design setting, the same design points are measured for each individual, whereas in the independent design, each individual has their own random collection of design points. Within this framework, we establish minimax upper and lower bounds for the estimation error of the underlying mean function, highlighting the nuanced differences between common and independent designs under distributed privacy constraints. We propose algorithms that achieve the optimal trade-off between privacy and accuracy and provide optimality results that quantify the fundamental limits of private functional mean estimation across diverse distributed settings. These results characterize the cost of privacy and offer practical insights into the potential for privacy-preserving statistical analysis in federated environments.

Federated Nonparametric Hypothesis Testing with Differential Privacy Constraints: Optimal Rates and Adaptive Tests

Jun 10, 2024Abstract:Federated learning has attracted significant recent attention due to its applicability across a wide range of settings where data is collected and analyzed across disparate locations. In this paper, we study federated nonparametric goodness-of-fit testing in the white-noise-with-drift model under distributed differential privacy (DP) constraints. We first establish matching lower and upper bounds, up to a logarithmic factor, on the minimax separation rate. This optimal rate serves as a benchmark for the difficulty of the testing problem, factoring in model characteristics such as the number of observations, noise level, and regularity of the signal class, along with the strictness of the $(\epsilon,\delta)$-DP requirement. The results demonstrate interesting and novel phase transition phenomena. Furthermore, the results reveal an interesting phenomenon that distributed one-shot protocols with access to shared randomness outperform those without access to shared randomness. We also construct a data-driven testing procedure that possesses the ability to adapt to an unknown regularity parameter over a large collection of function classes with minimal additional cost, all while maintaining adherence to the same set of DP constraints.

Optimal Federated Learning for Nonparametric Regression with Heterogeneous Distributed Differential Privacy Constraints

Jun 10, 2024

Abstract:This paper studies federated learning for nonparametric regression in the context of distributed samples across different servers, each adhering to distinct differential privacy constraints. The setting we consider is heterogeneous, encompassing both varying sample sizes and differential privacy constraints across servers. Within this framework, both global and pointwise estimation are considered, and optimal rates of convergence over the Besov spaces are established. Distributed privacy-preserving estimators are proposed and their risk properties are investigated. Matching minimax lower bounds, up to a logarithmic factor, are established for both global and pointwise estimation. Together, these findings shed light on the tradeoff between statistical accuracy and privacy preservation. In particular, we characterize the compromise not only in terms of the privacy budget but also concerning the loss incurred by distributing data within the privacy framework as a whole. This insight captures the folklore wisdom that it is easier to retain privacy in larger samples, and explores the differences between pointwise and global estimation under distributed privacy constraints.

Optimal high-dimensional and nonparametric distributed testing under communication constraints

Feb 02, 2022Abstract:We derive minimax testing errors in a distributed framework where the data is split over multiple machines and their communication to a central machine is limited to $b$ bits. We investigate both the $d$- and infinite-dimensional signal detection problem under Gaussian white noise. We also derive distributed testing algorithms reaching the theoretical lower bounds. Our results show that distributed testing is subject to fundamentally different phenomena that are not observed in distributed estimation. Among our findings, we show that testing protocols that have access to shared randomness can perform strictly better in some regimes than those that do not. Furthermore, we show that consistent nonparametric distributed testing is always possible, even with as little as $1$-bit of communication and the corresponding test outperforms the best local test using only the information available at a single local machine.

Optimal distributed testing in high-dimensional Gaussian models

Dec 09, 2020

Abstract:In this paper study the problem of signal detection in Gaussian noise in a distributed setting. We derive a lower bound on the size that the signal needs to have in order to be detectable. Moreover, we exhibit optimal distributed testing strategies that attain the lower bound.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge